This is the second post in a series on Java web crawlers. The previous post, "Java web crawler, it's so simple", covered the basics of crawling with Java. This post looks at a common obstacle: what should we do when the site we want to crawl requires a login?

Crawlers often run into login walls. Ticket-grabbing scripts are a typical example, since they need personal account information. There are two main ways to solve the problem. The first is to set cookies manually: log in on the website in a browser, copy the cookies after login, and set them on the HTTP requests in the crawler program. This suits crawls that run infrequently or over a short period, because cookies expire; for long-term collection the cookies would have to be replaced often, which is not practical. The second is to simulate the login in the program and obtain the cookies from the login response. This suits long-term collection, because every run logs in first, so you do not have to worry about cookies expiring.

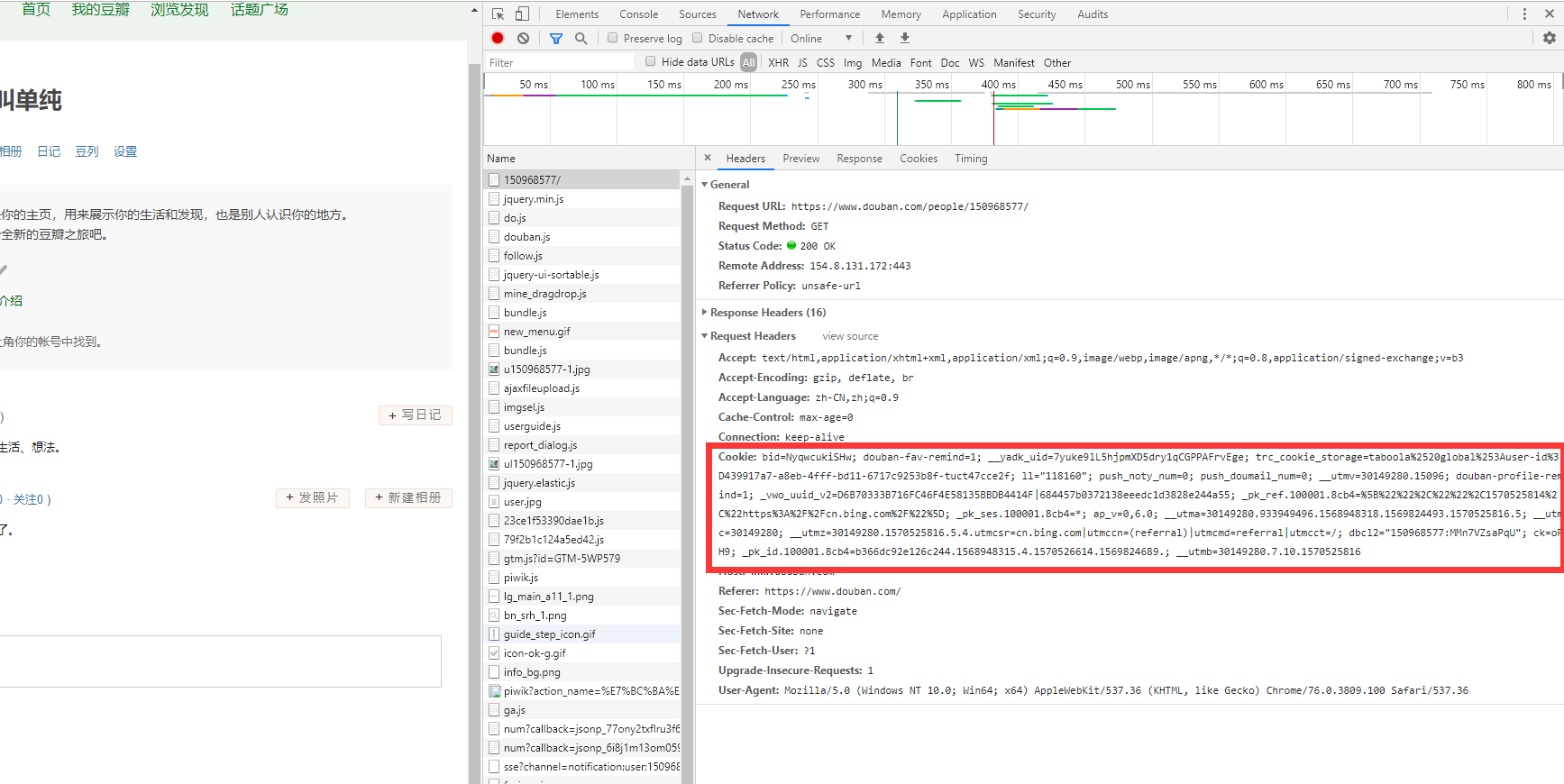

To make the two approaches concrete, I will use the nickname on a Douban personal homepage as the example and use each approach to fetch information that is visible only after login. The target information is shown in the following figure:

The goal is to fetch the nickname shown in the picture. This information can only be seen after logging in, which fits our topic. Next, we use the two approaches above to solve the problem.

Setting cookies manually

Setting cookies manually is the simpler approach. We log in to Douban in the browser, and after a successful login the browser holds cookies that carry the user information. Douban login link: https://accounts.douban.com/passport/login. The cookies appear in the request headers, as shown in the following figure:

The cookie in the figure carries the user information. We only need to send this cookie with any request for a page that is visible only after login. The following Jsoup code sets the cookie manually:

    // Imports used by this method:
    // org.jsoup.Jsoup, org.jsoup.nodes.Document, org.jsoup.nodes.Element, java.io.IOException

    /**
     * Sets cookies manually.
     * Log in on the website first, then copy the Cookie value from the request headers.
     *
     * @param url page to fetch
     * @throws IOException if the request fails
     */
    public void setCookies(String url) throws IOException {
        Document document = Jsoup.connect(url)
                // Set the cookies manually
                .header("Cookie", "your cookies")
                .get();
        if (document != null) {
            // Select the node that holds the Douban nickname
            Element element = document.select(".info h1").first();
            if (element == null) {
                System.out.println("Cannot find the .info h1 element");
                return;
            }
            // Extract the nickname text from the node
            String userName = element.ownText();
            System.out.println("My Douban nickname is: " + userName);
        } else {
            System.out.println("Error!!!!!");
        }
    }

As you can see, the code is no different from crawling a site that needs no login, except for the extra .header("Cookie", "your cookies") call. Copy the cookies from the browser into that call, then write the main method:

public static void main(String[] args) throws Exception {

// Personal center url

String user_info_url = "https://www.douban.com/people/150968577/";

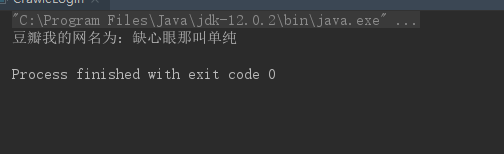

new CrawleLogin().setCookies(user_info_url);Running main yields the following results:

We successfully fetched the nickname, which shows that the cookies we set are valid and that we can retrieve data that requires login. This approach really is simple. Its only drawback is that the cookies expire, so they have to be replaced frequently, which makes it less pleasant to use.

Simulated login
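The cookie value copied from the browser is one long string of name=value pairs separated by semicolons. When it is more convenient to work with individual cookies, for example to pass them to Jsoup's cookies(Map) method instead of a raw header, a small helper can split the string. The following is a minimal sketch using only the JDK; the class name CookieHeaderParser is hypothetical and not part of the original project.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: splits a copied Cookie header value into name/value pairs. */
final class CookieHeaderParser {
    private CookieHeaderParser() {}

    /**
     * Parses a string such as "k1=v1; k2=v2" into an insertion-ordered map.
     * Entries without '=' or with an empty name are skipped.
     */
    static Map<String, String> parse(String header) {
        Map<String, String> cookies = new LinkedHashMap<>();
        if (header == null) {
            return cookies;
        }
        for (String pair : header.split(";")) {
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                continue; // no '=' at all, or nothing before it
            }
            String name = pair.substring(0, eq).trim();
            // Keep everything after the first '=' so values containing '=' survive
            String value = pair.substring(eq + 1).trim();
            if (!name.isEmpty()) {
                cookies.put(name, value);
            }
        }
        return cookies;
    }
}
```

With Jsoup on the classpath, the result could be used as Jsoup.connect(url).cookies(CookieHeaderParser.parse("your cookies")).get().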

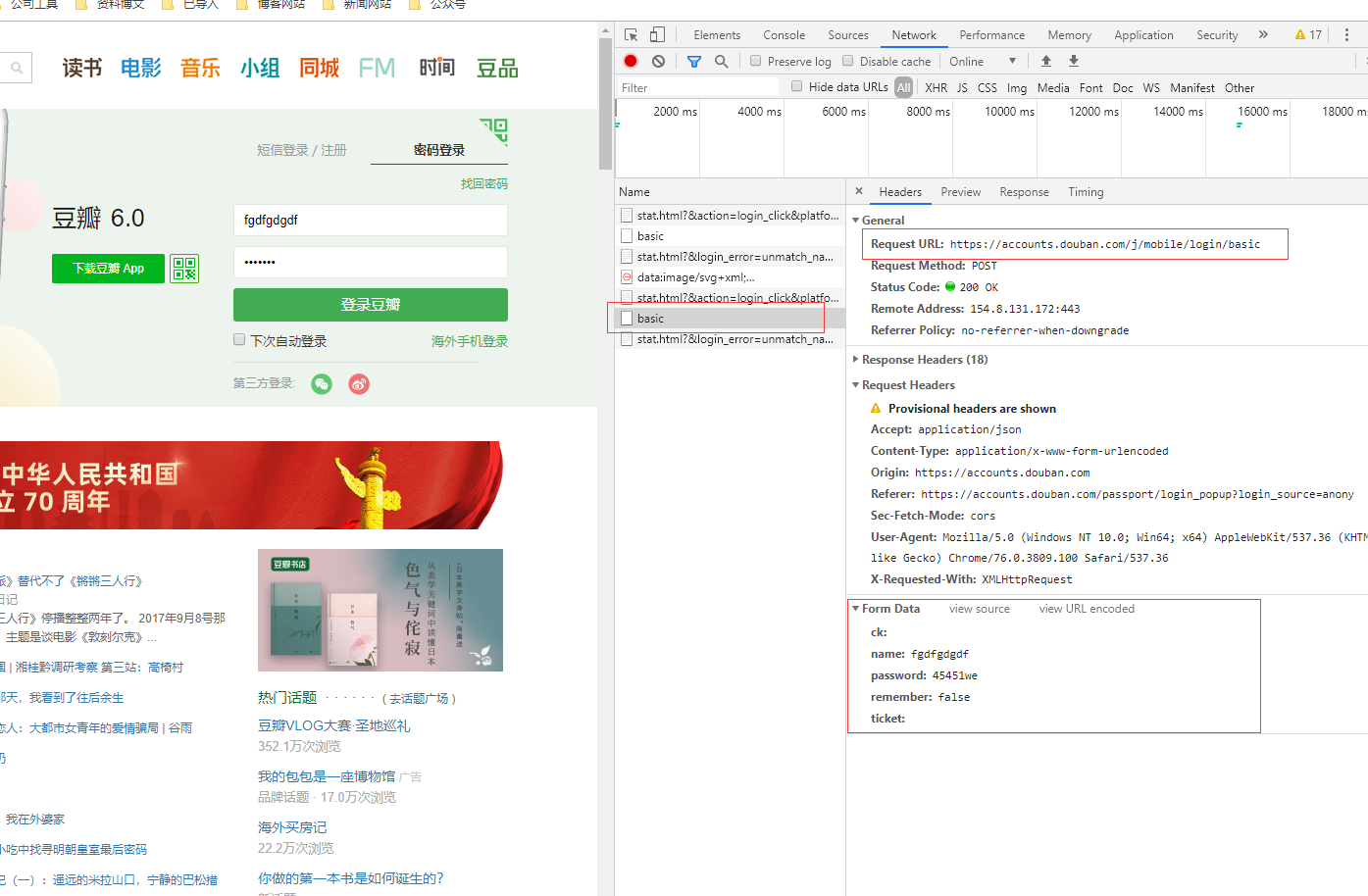

Simulated login removes the main drawback of setting cookies manually, but it brings harder problems of its own. Verification codes (CAPTCHAs) now come in many forms, and many are difficult to automate, for example picking out one kind of picture from a grid of pictures. Which approach to use is a trade-off the developer has to weigh. Douban currently shows no verification code at login, which makes it a relatively simple case.

The key to simulated login is finding the real login request and the parameters it needs. A convenient trick is to enter a wrong account and password on the login page: the page will not redirect, so the login request is easy to find. Here is how that looks on Douban. We enter a wrong username and password on the login page, click login, and look at the requests listed in the browser's network panel, as shown in the following figure:

From the network panel we can see that Douban's login link is https://accounts.douban.com/j/mobile/login/basic and that it takes five parameters, listed under Form Data in the figure. With these we can construct a request that simulates the login. The following Jsoup code logs in and then fetches the nickname from the Douban personal homepage:

    // Imports used by this method:
    // java.io.IOException, java.util.HashMap, java.util.Map,
    // org.jsoup.Connection, org.jsoup.Jsoup, org.jsoup.nodes.Document, org.jsoup.nodes.Element

    /**
     * Simulates the Douban login with Jsoup, then visits the personal center.
     * Enter a wrong account and password on the Douban login page to see which parameters the login needs.
     * The login request is built and sent first; after success its response holds the cookies.
     * Those cookies are then set on the second request.
     *
     * @param loginUrl    login url
     * @param userInfoUrl personal center url
     * @throws IOException if either request fails
     */
    public void jsoupLogin(String loginUrl, String userInfoUrl) throws IOException {
        // Build the login parameters
        Map<String, String> data = new HashMap<>();
        data.put("name", "your_account");
        data.put("password", "your_password");
        data.put("remember", "false");
        data.put("ticket", "");
        data.put("ck", "");
        Connection.Response login = Jsoup.connect(loginUrl)
                .ignoreContentType(true) // Accept the non-HTML (JSON) response
                .followRedirects(false) // Do not follow redirects
                .postDataCharset("utf-8")
                .header("Upgrade-Insecure-Requests", "1")
                .header("Accept", "application/json")
                .header("Content-Type", "application/x-www-form-urlencoded")
                .header("X-Requested-With", "XMLHttpRequest")
                .header("User-Agent", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36")
                .data(data)
                .method(Connection.Method.POST)
                .execute();
        login.charset("UTF-8");
        // The login response now holds the cookies set after a successful login.
        // Build the request for the personal center.
        Document document = Jsoup.connect(userInfoUrl)
                // Reuse the cookies from the login response
                .cookies(login.cookies())
                .get();
        if (document != null) {
            Element element = document.select(".info h1").first();
            if (element == null) {
                System.out.println("Cannot find the .info h1 element");
                return;
            }
            String userName = element.ownText();
            System.out.println("My Douban nickname is: " + userName);
        } else {
            System.out.println("Error!!!!!");
        }
    }

This code has two parts: the first simulates the login, and the second parses the Douban personal homepage. Two requests are made. The first request logs in and obtains the cookies; the second carries those cookies, so it can access pages that require login. Now modify the main method:

public static void main(String[] args) throws Exception {

// Personal center url

String user_info_url = "https://www.douban.com/people/150968577/";

// Landing Interface

String login_url = "https://accounts.douban.com/j/mobile/login/basic";

// new CrawleLogin().setCookies(user_info_url);

new CrawleLogin().jsoupLogin(login_url,user_info_url);

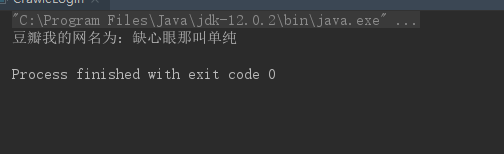

}Running the main method yields the following results:

Simulated login method has also succeeded in obtaining the name of the net, which is called simple, although it is already the simplest simulated login. From the amount of code, it can be seen that it is much more complex than setting cookie s. For other logins with verification codes, I will not introduce here. First, I have little experience in this area. Second, it is more complex to implement. Involving the use of some algorithms and some auxiliary tools, interested friends can refer to Mr. Cui Qingcai's blog research. Simulated landing is complex to write, but as long as you write it, you will be able to do it once and for all. If you need to collect landing information for a long time, it's worth doing.
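For long-running collection, logging in before every single request is usually unnecessary. One possible arrangement, sketched below under assumptions, is to cache the cookies from a login and log in again only once they are older than a chosen age or after a request shows they were rejected. The class name CookieSession, the maxAge value, and the login supplier are all hypothetical; the supplier stands in for whatever code performs the real login request and returns its cookies.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical cache: reuses login cookies and logs in again when they are too old. */
final class CookieSession {
    private final Supplier<Map<String, String>> login; // performs the login, returns its cookies
    private final Duration maxAge;                      // assumed lifetime, chosen by the caller
    private Map<String, String> cookies;
    private Instant obtainedAt;

    CookieSession(Supplier<Map<String, String>> login, Duration maxAge) {
        this.login = login;
        this.maxAge = maxAge;
    }

    /** Returns cached cookies, logging in first if none are cached or they are too old. */
    synchronized Map<String, String> cookies() {
        return cookies(Instant.now());
    }

    /** Same as cookies(), with the current time passed in so the logic can be tested. */
    synchronized Map<String, String> cookies(Instant now) {
        boolean stale = cookies == null || !now.isBefore(obtainedAt.plus(maxAge));
        if (stale) {
            cookies = login.get();
            obtainedAt = now;
        }
        return cookies;
    }

    /** Drops the cached cookies, for example after a request was redirected to the login page. */
    synchronized void invalidate() {
        cookies = null;
    }
}
```

With Jsoup, the supplier could wrap the login POST and return the cookies of its response, and each page request would then call cookies() on the shared CookieSession. The right maxAge depends on how long the site keeps a session alive, which this sketch does not try to detect.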

In addition to using jsource to simulate login, we can also use httpclient to simulate login, httpclient to simulate login is not as complex as Jsource, because httpclient can save session session session like a browser, so that cookies are saved after login, and cookies are brought with requests in the same httpclient. The httpclient simulated login code is as follows:

    // Imports used by this method (Apache HttpClient 4.x):
    // java.net.URI, org.apache.http.client.methods.CloseableHttpResponse,
    // org.apache.http.client.methods.HttpGet, org.apache.http.client.methods.HttpUriRequest,
    // org.apache.http.client.methods.RequestBuilder, org.apache.http.impl.client.CloseableHttpClient,
    // org.apache.http.impl.client.HttpClients, org.apache.http.util.EntityUtils

    /**
     * Simulates the Douban login with HttpClient.
     * HttpClient works much like Jsoup, except that a client instance keeps session state:
     * cookies are stored by default and reused by later requests on the same client.
     *
     * @param loginUrl    login url
     * @param userInfoUrl personal center url
     */
    public void httpClientLogin(String loginUrl, String userInfoUrl) throws Exception {
        // try-with-resources closes the client and both responses
        try (CloseableHttpClient httpclient = HttpClients.createDefault()) {
            HttpUriRequest login = RequestBuilder.post()
                    .setUri(new URI(loginUrl)) // Login url
                    .setHeader("Upgrade-Insecure-Requests", "1")
                    .setHeader("Accept", "application/json")
                    .setHeader("Content-Type", "application/x-www-form-urlencoded")
                    .setHeader("X-Requested-With", "XMLHttpRequest")
                    .setHeader("User-Agent", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36")
                    // Account information
                    .addParameter("name", "your_account")
                    .addParameter("password", "your_password")
                    .addParameter("remember", "false")
                    .addParameter("ticket", "")
                    .addParameter("ck", "")
                    .build();
            // Simulate the login
            try (CloseableHttpResponse response = httpclient.execute(login)) {
                if (response.getStatusLine().getStatusCode() != 200) {
                    System.out.println("HttpClient simulated login to Douban failed!");
                    return;
                }
            }
            // Request the personal center with the same client, which sends the stored cookies
            try (CloseableHttpResponse userResponse = httpclient.execute(new HttpGet(userInfoUrl))) {
                String body = EntityUtils.toString(userResponse.getEntity(), "utf-8");
                // Shortcut: only check whether the page contains the nickname string.
                // Replace the string with the nickname shown on your own profile page.
                System.out.println("Nickname found in page: " + body.contains("Lack of heart is called simplicity."));
            }
        }
    }

Running this code prints true as well.
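The session behaviour described for HttpClient comes down to a cookie jar: cookies from a response are stored, and matching cookies are attached to later requests to the same site. The sketch below illustrates that idea without any network access. Note that it uses the JDK's own java.net.CookieManager rather than HttpClient's cookie store, and that the class name CookieJarDemo and the example.com URLs are placeholders chosen for illustration.

```java
import java.io.IOException;
import java.net.CookieManager;
import java.net.CookiePolicy;
import java.net.URI;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical demo of a cookie jar, using the JDK's CookieManager. */
final class CookieJarDemo {
    private CookieJarDemo() {}

    /**
     * Stores the Set-Cookie value of a simulated login response, then returns
     * the Cookie header value the jar would attach to a request for nextUrl
     * (an empty string if no stored cookie matches).
     */
    static String cookieHeaderFor(String loginUrl, String setCookie, String nextUrl)
            throws IOException {
        CookieManager jar = new CookieManager(null, CookiePolicy.ACCEPT_ALL);

        // Pretend the login response carried this Set-Cookie header
        Map<String, List<String>> responseHeaders =
                Collections.singletonMap("Set-Cookie", Collections.singletonList(setCookie));
        jar.put(URI.create(loginUrl), responseHeaders);

        // Ask the jar which cookies belong on the next request
        Map<String, List<String>> requestHeaders =
                jar.get(URI.create(nextUrl), new HashMap<String, List<String>>());
        List<String> values = requestHeaders.get("Cookie");
        return values == null ? "" : String.join("; ", values);
    }
}
```

Calling cookieHeaderFor("https://example.com/login", "sid=abc123; Path=/", "https://example.com/profile") should yield a header containing sid=abc123, while a request to a different host gets no cookie. This is why the second request in the HttpClient code needs no explicit cookie handling as long as it goes through the same client.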

That covers the login problem for Java crawlers. To sum up, there are two ways to solve it. One is to set cookies manually, which suits temporary or one-off collection and costs little. The other is to simulate the login, which suits long-term collection from a website. Simulated login costs more to build, especially when the site uses a difficult verification code, but once it works it saves you from replacing cookies by hand.

I hope this write-up on login problems in Java crawlers is helpful. The next article covers another problem Java crawlers run into: data that is loaded asynchronously. If you are interested in crawlers, feel free to follow along so we can learn from each other.

Source code: source code

If you spot shortcomings in this article, I would welcome your feedback so we can learn and improve together.
