Note: Data-saving operations are implemented in the project's pipelines.py file.

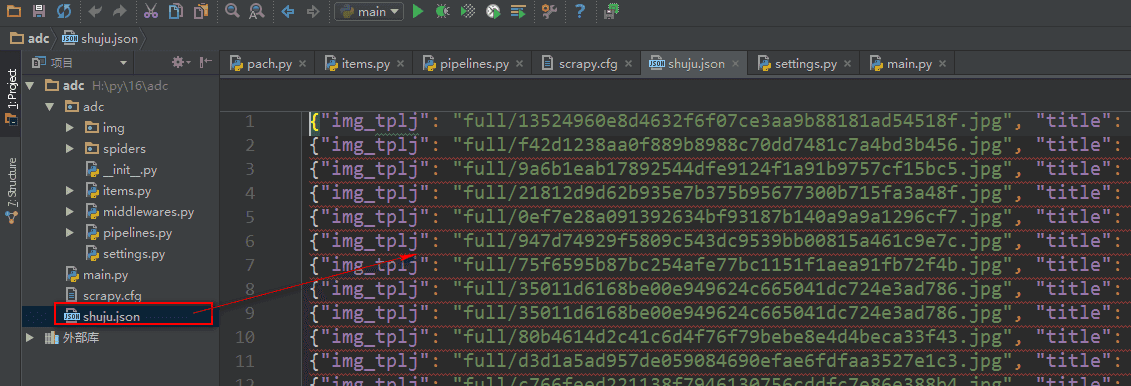

Save the data as a JSON file

The pipeline opens the file when it is created and closes it when Scrapy signals that the spider has finished.

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    from scrapy.pipelines.images import ImagesPipeline  # Scrapy's built-in image downloader pipeline
    import codecs
    import json


    class AdcPipeline(object):  # Data processing class; inherits from object
        def __init__(self):
            # Open the JSON file at initialization
            self.file = codecs.open('shuju.json', 'w', encoding='utf-8')

        def process_item(self, item, spider):
            # Called for every item the spider yields; item is the data object
            # print('The title of the article is:' + item['title'][0])
            # print('The article thumbnail URL is:' + item['img'][0])
            # print('The path to save the article thumbnails is:' + item['img_tplj'])  # Path filled in by the image downloader

            # Save the data as JSON, one object per line
            lines = json.dumps(dict(item), ensure_ascii=False) + '\n'  # Convert the item to JSON text
            self.file.write(lines)  # Write the JSON text to the file
            return item

        def close_spider(self, spider):
            # Scrapy calls this hook when the spider closes
            self.file.close()  # Close the open file


    class imgPipeline(ImagesPipeline):  # Custom image downloader; inherits Scrapy's built-in ImagesPipeline
        def item_completed(self, results, item, info):
            # results holds one (success, info) tuple per requested image
            img_lj = ''
            for ok, value in results:
                if ok:  # value is a dict with a 'path' key only when the download succeeded
                    img_lj = value['path']  # Path where the image was saved
            item['img_tplj'] = img_lj  # Fill the saved path into the field declared in items.py
            return item  # Pass the item on to the next pipeline

    # Note: After setting up the custom image downloader, you need to
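
To see what the file written by `process_item` looks like, here is a standalone sketch that writes two made-up items the same way (one JSON object per line, `ensure_ascii=False`) and reads them back. It does not need Scrapy; the helper names `write_items` and `read_items` and the sample items are hypothetical and exist only for illustration.

```python
import json
import os
import tempfile


def write_items(path, items):
    """Write each item as one JSON object per line, as process_item does."""
    with open(path, 'w', encoding='utf-8') as f:
        for item in items:
            f.write(json.dumps(dict(item), ensure_ascii=False) + '\n')


def read_items(path):
    """Read the file back, parsing every non-empty line as its own JSON object."""
    with open(path, encoding='utf-8') as f:
        return [json.loads(line) for line in f if line.strip()]


# Hypothetical items shaped like the ones the pipeline receives.
sample_items = [
    {'title': ['第一篇文章'], 'img': ['http://example.com/a.jpg'], 'img_tplj': 'full/a.jpg'},
    {'title': ['Second article'], 'img': ['http://example.com/b.jpg'], 'img_tplj': 'full/b.jpg'},
]

path = os.path.join(tempfile.mkdtemp(), 'shuju.json')
write_items(path, sample_items)
loaded = read_items(path)
print(loaded[0]['title'][0])
```

Because each line is a separate object, the file as a whole is not a single JSON document, so it has to be read line by line rather than with one `json.load` call. With `ensure_ascii=False`, non-ASCII titles are stored as readable text instead of `\uXXXX` escapes.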


Save the data to the database

We use SQLAlchemy, an ORM framework, to save the data.

Database operation file (imported by the pipeline as adc.shujuku)

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-

    from sqlalchemy.ext.declarative import declarative_base
    from sqlalchemy import Column
    from sqlalchemy import Integer, String, TIMESTAMP
    from sqlalchemy import ForeignKey, UniqueConstraint, Index
    from sqlalchemy.orm import sessionmaker, relationship
    from sqlalchemy import create_engine

    # Configure the database engine
    ENGINE = create_engine("mysql+pymysql://root:279819@127.0.0.1:3306/cshi?charset=utf8", max_overflow=10, echo=True)

    Base = declarative_base()  # Base class for the ORM models


    class SendMsg(Base):  # Table definition
        __tablename__ = 'sendmsg'

        id = Column(Integer, primary_key=True, autoincrement=True)
        title = Column(String(300))
        img_tplj = Column(String(300))


    def init_db():
        Base.metadata.create_all(ENGINE)  # Create the declared tables in the database


    def drop_db():
        Base.metadata.drop_all(ENGINE)  # Drop the declared tables from the database


    def session():
        cls = sessionmaker(bind=ENGINE)  # Session factory bound to the engine
        return cls()  # New session for working with the tables

    # drop_db()  # Delete table
    # init_db()  # Create table

pipelines.py file


    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    from scrapy.pipelines.images import ImagesPipeline  # Scrapy's built-in image downloader pipeline
    from adc import shujuku as ORM  # The database operation file


    class AdcPipeline(object):  # Data processing class; inherits from object
        def __init__(self):
            ORM.init_db()  # Create the database tables

        def process_item(self, item, spider):
            # Called for every item the spider yields; item is the data object
            print('The title of the article is:' + item['title'][0])
            print('The article thumbnail URL is:' + item['img'][0])
            print('The path to save the article thumbnails is:' + item['img_tplj'])  # Path filled in by the image downloader

            mysq = ORM.session()
            try:
                shuju = ORM.SendMsg(title=item['title'][0], img_tplj=item['img_tplj'])
                mysq.add(shuju)
                mysq.commit()
            finally:
                mysq.close()  # Release the connection even if the commit fails
            return item


    class imgPipeline(ImagesPipeline):  # Custom image downloader; inherits Scrapy's built-in ImagesPipeline
        def item_completed(self, results, item, info):
            # results holds one (success, info) tuple per requested image
            img_lj = ''
            for ok, value in results:
                if ok:  # value is a dict with a 'path' key only when the download succeeded
                    img_lj = value['path']  # Path where the image was saved
            item['img_tplj'] = img_lj  # Fill the saved path into the field declared in items.py
            return item  # Pass the item on to the next pipeline

    # Note: After setting up the custom image downloader, you need to
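
The header comment in pipelines.py is a reminder that pipelines only run once they are listed in the `ITEM_PIPELINES` setting. A minimal settings.py sketch follows, under some assumptions: the project package is named `adc` (as the `from adc import shujuku` import suggests), the image URLs live in the item field `img`, and the `images` directory name is a placeholder. The priority numbers are arbitrary; only their order matters.

```python
# settings.py (sketch; the names and values below are assumptions, adjust to your project)

# Pipelines with lower numbers run first, so imgPipeline fills item['img_tplj']
# before AdcPipeline reads it.
ITEM_PIPELINES = {
    'adc.pipelines.imgPipeline': 1,
    'adc.pipelines.AdcPipeline': 300,
}

# Item field that holds the list of image URLs (assumed to be 'img').
IMAGES_URLS_FIELD = 'img'

# Directory where downloaded images are stored (hypothetical path).
IMAGES_STORE = 'images'
```

Scrapy's ImagesPipeline also needs the Pillow package installed, and it stays disabled unless `IMAGES_STORE` is set.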