[This column is published simultaneously on Toutiao and Zhihu. Follow the account of the same name on either platform for related articles, updated weekly.]

[This article is 6,129 words long and takes about 17 minutes to read. It involves many concepts, so consider bookmarking it before you start.]

In the previous article, "An article takes you to know Kubernetes", we built a basic understanding of Kubernetes. In this article we continue with a deeper, system-level look: we examine the basic components of Kubernetes and how they cooperate to support such a complex cluster system. Let's take a look at the clever design inside Kubernetes.

Basic components of Kubernetes

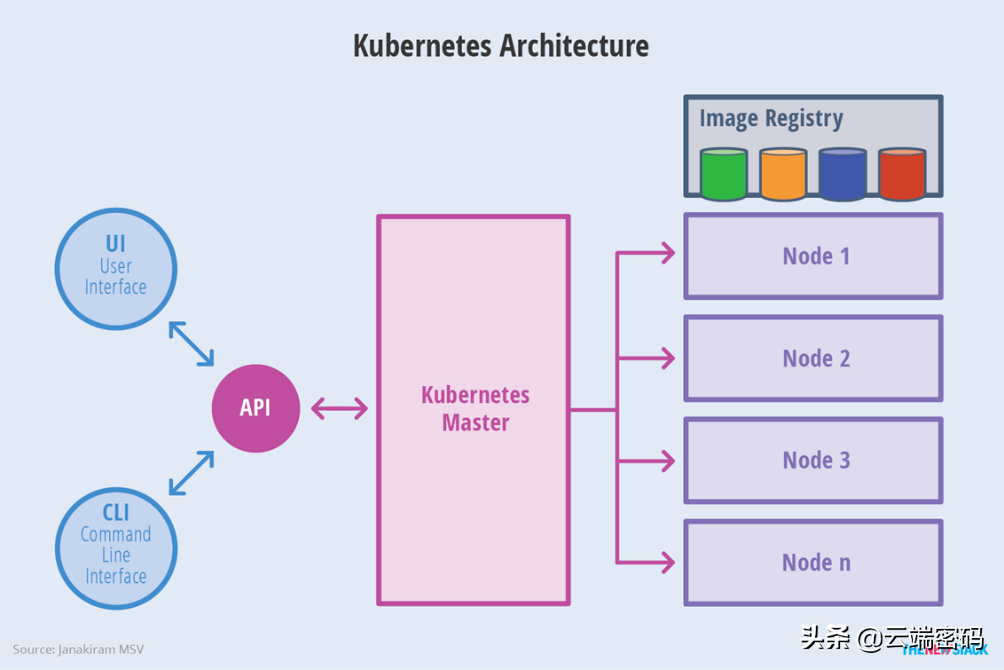

Kubernetes divides the cluster into control nodes (Master) and worker nodes (Node), as shown in the figure.

In Kubernetes, the Master is the cluster's control node. Every Kubernetes cluster needs a Master to manage and control the whole cluster, and all control commands in Kubernetes are handled by it. Because the Master is so critical to the cluster, it is deployed on dedicated machines, typically across multiple nodes.

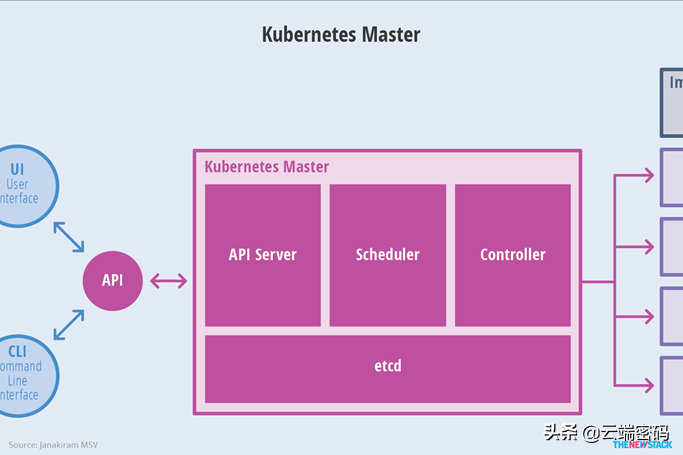

Key processes on the Master node

Apiserver: Provides the only entry point for adding, deleting, modifying, and querying all Kubernetes resources, and is also the entry point for cluster control. It exposes an HTTP REST interface that handles cluster management, resource quotas, access control, authentication and authorization, and all operations on etcd.

Controller-manager: The automation control center for all resource objects in the cluster. It is responsible for managing pods and nodes and includes the node controller, service controller, replication controller, service account and token controllers, and others.

Scheduler: Responsible for resource scheduling. It watches the Apiserver for pods that have not yet been scheduled and assigns each one to a suitable node.

Etcd: In a Kubernetes system, two main kinds of data are stored in etcd:

Network plug-ins: flannel and similar plug-ins store their network configuration in etcd.

Kubernetes itself: the state of all objects and their metadata (configuration).

Apart from the Master, the other machines in the cluster are called Nodes (called Minions in earlier versions). A Node is where the cluster's actual workloads run, and it can be either a physical machine or a virtual machine.

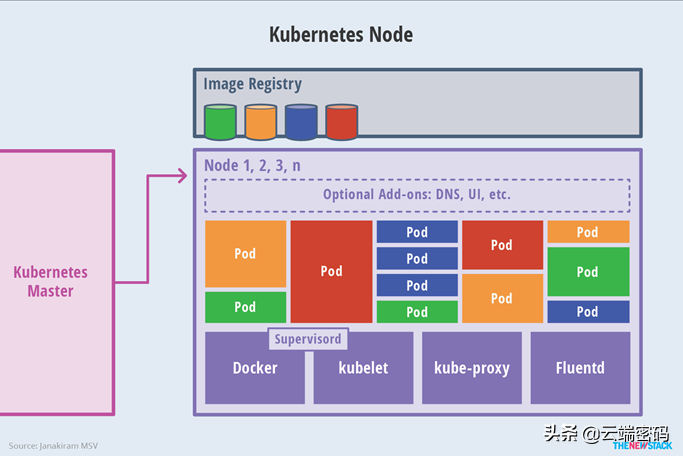

Key processes on a Node (including but not limited to the following)

Kubelet: Handles the tasks that the Master sends to the node and manages pods and their containers. Each kubelet registers its node with the Apiserver, periodically reports the node's resource usage to the Master, and monitors containers and node resources through cAdvisor.

Kube-proxy: Forwards traffic for a Service to the pod instances behind it and maintains the routing information. For each TCP Service, kube-proxy (in its original userspace mode) opens a local socket server that balances connections across the backends using a round-robin (rr) algorithm.
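The round-robin choice described above can be sketched as follows. This is a minimal illustration, not kube-proxy's code: the `RoundRobin` type, `NewRoundRobin`, and the backend addresses are hypothetical, and the sketch only picks an endpoint; it does not open sockets or forward traffic.

```go
package main

import (
	"fmt"
	"sync"
)

// RoundRobin is a hypothetical balancer that hands out the backend
// endpoints of one Service in strict rotation.
type RoundRobin struct {
	mu        sync.Mutex
	endpoints []string // pod IP:port pairs backing the Service
	next      int      // index of the endpoint returned by the next call
}

// NewRoundRobin copies the endpoint list so later changes by the caller don't leak in.
func NewRoundRobin(endpoints []string) *RoundRobin {
	eps := make([]string, len(endpoints))
	copy(eps, endpoints)
	return &RoundRobin{endpoints: eps}
}

// Next returns the next endpoint, or false if the Service has no endpoints.
func (r *RoundRobin) Next() (string, bool) {
	r.mu.Lock()
	defer r.mu.Unlock()
	if len(r.endpoints) == 0 {
		return "", false
	}
	ep := r.endpoints[r.next]
	r.next = (r.next + 1) % len(r.endpoints)
	return ep, true
}

func main() {
	rr := NewRoundRobin([]string{"10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"})
	for i := 0; i < 4; i++ {
		ep, _ := rr.Next()
		fmt.Println(ep) // 10.0.0.1, 10.0.0.2, 10.0.0.3, then 10.0.0.1 again
	}
}
```

The mutex matters because many client connections may ask for a backend concurrently; without it, two callers could read the same `next` index.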

CNI network component: As the network standardization component of the container platform, it provides containers with communication across network segments and is the key to implementing the overlay network of a Kubernetes cluster.

Docker: Kubernetes supports a variety of container runtimes. Docker is currently the mainstream choice, providing container creation and management for the Kubernetes cluster.

Interaction among Cluster Components

The Apiserver is the only entry point for adding, deleting, and modifying any resource. Each component communicates with the Apiserver using the list-watch pattern. To reduce the load on the Apiserver, each component caches data locally; in some cases a functional module does not query the Apiserver directly but reaches it indirectly by reading this cache.

Kubelet&Apiserver

At regular intervals, the kubelet on each node calls the Apiserver's REST interface to report its status. The kubelet also watches pod information through the watch interface, listening for create, delete, and update events.

Controller-manager&Apiserver

The controller-manager contains several controllers. For example, the Node Controller watches Node information through the watch interface provided by the Apiserver and handles changes accordingly.

Scheduler&Apiserver

The Scheduler watches through the Apiserver's watch interface. When it observes a newly created pod replica, it retrieves the list of all Nodes that meet the pod's requirements and runs the scheduling logic. Once scheduling succeeds, the pod is bound to a specific node.
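The two phases described here, filtering the Node list and then binding the pod, can be sketched as below. The `Node` and `Pod` types, the CPU/memory-only fit check, and the "most free CPU" tie-break are simplifying assumptions for illustration; the real kube-scheduler runs a configurable set of filter and score plugins.

```go
package main

import (
	"errors"
	"fmt"
)

// Node and Pod are hypothetical, trimmed-down stand-ins for the API objects.
type Node struct {
	Name          string
	FreeCPUMilli  int64 // unreserved CPU in millicores
	FreeMemoryMiB int64 // unreserved memory in MiB
}

type Pod struct {
	Name      string
	CPUMilli  int64  // requested CPU
	MemoryMiB int64  // requested memory
	NodeName  string // empty until the pod is bound
}

// filterNodes keeps only the nodes with enough free resources for the pod.
func filterNodes(pod Pod, nodes []Node) []Node {
	var fit []Node
	for _, n := range nodes {
		if n.FreeCPUMilli >= pod.CPUMilli && n.FreeMemoryMiB >= pod.MemoryMiB {
			fit = append(fit, n)
		}
	}
	return fit
}

// schedule picks the feasible node with the most free CPU and binds the pod to it.
func schedule(pod *Pod, nodes []Node) error {
	candidates := filterNodes(*pod, nodes)
	if len(candidates) == 0 {
		return errors.New("no node satisfies the pod's requests")
	}
	best := candidates[0]
	for _, n := range candidates[1:] {
		if n.FreeCPUMilli > best.FreeCPUMilli {
			best = n
		}
	}
	pod.NodeName = best.Name // "binding": record the chosen node on the pod
	return nil
}

func main() {
	nodes := []Node{
		{Name: "node-a", FreeCPUMilli: 500, FreeMemoryMiB: 1024},
		{Name: "node-b", FreeCPUMilli: 2000, FreeMemoryMiB: 4096},
		{Name: "node-c", FreeCPUMilli: 3000, FreeMemoryMiB: 256},
	}
	pod := Pod{Name: "web-0", CPUMilli: 1000, MemoryMiB: 512}
	if err := schedule(&pod, nodes); err != nil {
		fmt.Println("unschedulable:", err)
		return
	}
	fmt.Println(pod.Name, "bound to", pod.NodeName) // web-0 bound to node-b
}
```

Here node-a lacks CPU and node-c lacks memory, so only node-b survives the filter. In a real cluster the binding is itself written back through the Apiserver, which is what the kubelet on that node then observes through its watch.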

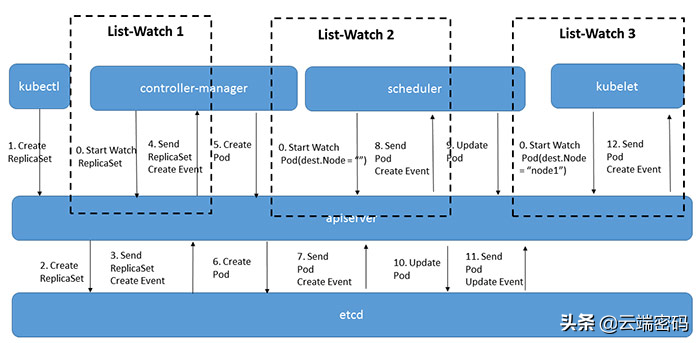

The figure shows the flow of creating a typical pod. As it makes clear, the Apiserver sits at the core: all metadata of every functional module in the cluster is written to etcd through kube-apiserver, and whenever that data needs to be read or modified, this is done through the Apiserver's REST interface.

list-watch mechanism

Unlike many other distributed systems, Kubernetes does not introduce a message queue (MQ), because its design is based on level triggering rather than edge triggering. It relies solely on the list-watch mechanism over HTTP + protobuf to deliver notifications between components. Therefore, before looking at how the components communicate, we must first understand how list-watch is used in Kubernetes.
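Why level triggering removes the need for guaranteed message delivery can be shown with a small sketch. The `Event` type and the replica-count scenario are hypothetical: an edge-triggered consumer applies each delta it receives, so one lost notification leaves it wrong, while a level-triggered consumer compares against the current desired state and converges regardless of which notifications arrived.

```go
package main

import "fmt"

// Event is a hypothetical notification that the desired replica count changed by Delta.
type Event struct{ Delta int }

// edgeTriggered applies each delta it receives; a lost event is never corrected.
func edgeTriggered(actual int, events []Event) int {
	for _, e := range events {
		actual += e.Delta
	}
	return actual
}

// levelTriggered ignores the event contents and drives the observed count to
// whatever the current desired state is.
func levelTriggered(actual, desired int) int {
	for actual < desired {
		actual++ // create a replica
	}
	for actual > desired {
		actual-- // delete a replica
	}
	return actual
}

func main() {
	// Desired state went 1 -> 2 -> 4 via deltas +1 and +2; the +1 event was lost.
	delivered := []Event{{Delta: 2}}
	desired := 4 // the latest desired state, as read from the Apiserver

	fmt.Println("edge-triggered:", edgeTriggered(1, delivered)) // 3, wrong
	fmt.Println("level-triggered:", levelTriggered(1, desired)) // 4, correct
}
```

Because a level-triggered component only needs the latest state, a plain HTTP watch that can occasionally drop or repeat events is good enough; a re-list restores correctness.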

List-watch is the unified asynchronous messaging mechanism in Kubernetes. List calls a resource's list API to enumerate resources, over a short-lived HTTP connection. Watch calls a resource's watch API to monitor change events, over a long-lived HTTP connection. Each component in Kubernetes keeps its view of resource state up to date by watching for changes on the Apiserver.
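The list-then-watch pattern can be sketched in plain Go with an in-memory store standing in for the Apiserver: the client lists once to get a snapshot and the resourceVersion it reflects, then asks only for events newer than that version. The `fakeAPIServer` type and its methods are hypothetical; real components use client-go's `cache.Reflector` and informers over HTTP, and a real watch is a long-lived stream rather than the slice returned here.

```go
package main

import (
	"fmt"
	"sync"
)

// Event is one change notification, carrying the resourceVersion it happened at.
type Event struct {
	Type            string // "ADDED", "MODIFIED" or "DELETED"
	Key             string
	Value           string
	ResourceVersion uint64
}

// fakeAPIServer is a hypothetical in-memory stand-in for the Apiserver + etcd.
type fakeAPIServer struct {
	mu      sync.Mutex
	rv      uint64            // global resourceVersion counter
	objects map[string]string // current state of every object
	history []Event           // every change, in order
}

// Put creates or updates an object and records the change as an event.
func (s *fakeAPIServer) Put(key, value string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	typ := "MODIFIED"
	if _, ok := s.objects[key]; !ok {
		typ = "ADDED"
	}
	s.rv++
	s.objects[key] = value
	s.history = append(s.history, Event{Type: typ, Key: key, Value: value, ResourceVersion: s.rv})
}

// Delete removes an object (if present) and records a DELETED event.
func (s *fakeAPIServer) Delete(key string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if _, ok := s.objects[key]; !ok {
		return
	}
	s.rv++
	delete(s.objects, key)
	s.history = append(s.history, Event{Type: "DELETED", Key: key, ResourceVersion: s.rv})
}

// List returns a copy of the current state and the resourceVersion it reflects.
func (s *fakeAPIServer) List() (map[string]string, uint64) {
	s.mu.Lock()
	defer s.mu.Unlock()
	snap := make(map[string]string, len(s.objects))
	for k, v := range s.objects {
		snap[k] = v
	}
	return snap, s.rv
}

// Watch returns every event newer than sinceRV. A real watch keeps the
// connection open and streams; returning the backlog keeps the sketch short.
func (s *fakeAPIServer) Watch(sinceRV uint64) []Event {
	s.mu.Lock()
	defer s.mu.Unlock()
	var out []Event
	for _, e := range s.history {
		if e.ResourceVersion > sinceRV {
			out = append(out, e)
		}
	}
	return out
}

// apply updates a component's local cache from one watch event.
func apply(cache map[string]string, e Event) {
	switch e.Type {
	case "ADDED", "MODIFIED":
		cache[e.Key] = e.Value
	case "DELETED":
		delete(cache, e.Key)
	}
}

func main() {
	api := &fakeAPIServer{objects: map[string]string{}}
	api.Put("pod/a", "Pending")
	cache, rv := api.List() // list: full snapshot at rv=1
	api.Put("pod/a", "Running")
	api.Put("pod/b", "Pending")
	api.Delete("pod/b")
	for _, e := range api.Watch(rv) { // watch: only the changes after rv
		apply(cache, e)
		rv = e.ResourceVersion
	}
	fmt.Println(cache, rv) // map[pod/a:Running] 4
}
```

Passing the listed resourceVersion into the watch is what closes the gap between the two calls: changes made after the snapshot are not lost, and changes already in the snapshot are not replayed.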

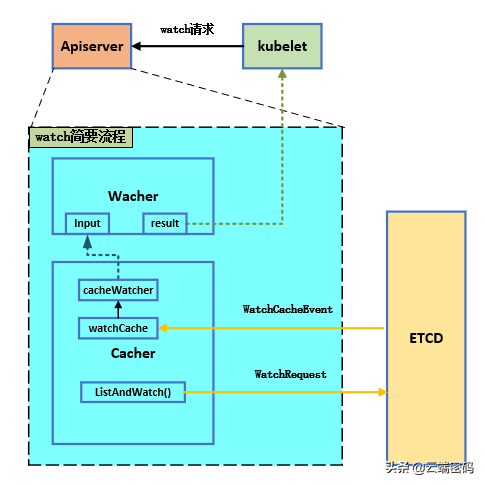

Here is a brief description of how watch works, following the flow chart.

This flow chart does not look complicated, but the implementation is quite subtle. A brief explanation based on the figure:

1. First, note that the data returned by both list and watch comes from etcd, so everything in the Apiserver is designed to obtain the latest etcd data and return it to the client.

2. When the Apiserver receives a watch request from a component, it first passes the request to the ListResource function for parsing, because list and watch requests have a similar format. If the request is parsed as a watch, a watcher structure is created to serve it. The watcher's lifecycle is tied to that single HTTP request.

```go
// Each watch request corresponds to a watcher structure
func (a *APIInstaller) registerResourceHandlers(path string, storage rest.Storage, ...) ... {
	...
	lister, isLister := storage.(rest.Lister)
	watcher, isWatcher := storage.(rest.Watcher)
	... (1)
	...
	case "LIST": // List all resources of a kind.
		...
}
```

3. A watcher has been created, but what receives and caches the etcd data? The Apiserver receives etcd events through the Cacher, which is also a Storage type. The Cacher can be thought of as an instance that monitors etcd: for a given resource type, it sends a watch request to etcd through ListAndWatch(), and the data of that type is synchronized into the watchCache structure. In other words, ListAndWatch() synchronizes the remote data source into the Cacher. The Cacher structure is as follows:

```go
type Cacher struct {
	incomingHWM storage.HighWaterMark
	incoming    chan watchCacheEvent

	sync.RWMutex

	// Before accessing the cacher's cache, wait for the ready to be ok.
	// This is necessary to prevent users from accessing structures that are
	// uninitialized or are being repopulated right now.
	// ready needs to be set to false when the cacher is paused or stopped.
	// ready needs to be set to true when the cacher is ready to use after
	// initialization.
	ready *ready

	// Underlying storage.Interface.
	storage storage.Interface

	// Expected type of objects in the underlying cache.
	objectType reflect.Type

	// "sliding window" of recent changes of objects and the current state.
	watchCache *watchCache
	reflector  *cache.Reflector

	// Versioner is used to handle resource versions.
	versioner storage.Versioner

	// newFunc is a function that creates new empty object storing a object of type Type.
	newFunc func() runtime.Object

	// indexedTrigger is used for optimizing amount of watchers that needs to process
	// an incoming event.
	indexedTrigger *indexedTriggerFunc
	// watchers is mapping from the value of trigger function that a
	// watcher is interested into the watchers
	watcherIdx int
	watchers   indexedWatchers

	// Defines a time budget that can be spend on waiting for not-ready watchers
	// while dispatching event before shutting them down.
	dispatchTimeoutBudget *timeBudget

	// Handling graceful termination.
	stopLock sync.RWMutex
	stopped  bool
	stopCh   chan struct{}
	stopWg   sync.WaitGroup

	clock clock.Clock
	// timer is used to avoid unnecessary allocations in underlying watchers.
	timer *time.Timer

	// dispatching determines whether there is currently dispatching of
	// any event in flight.
	dispatching bool
	// watchersBuffer is a list of watchers potentially interested in currently
	// dispatched event.
	watchersBuffer []*cacheWatcher
	// blockedWatchers is a list of watchers whose buffer is currently full.
	blockedWatchers []*cacheWatcher
	// watchersToStop is a list of watchers that were supposed to be stopped
	// during current dispatching, but stopping was deferred to the end of
	// dispatching that event to avoid race with closing channels in watchers.
	watchersToStop []*cacheWatcher
	// Maintain a timeout queue to send the bookmark event before the watcher times out.
	bookmarkWatchers *watcherBookmarkTimeBuckets
	// watchBookmark feature-gate
	watchBookmarkEnabled bool
}
```

The watchCache structure is as follows:

```go
type watchCache struct {
	sync.RWMutex // Synchronization lock
	cond *sync.Cond // Condition variable
	capacity int // Capacity of the history sliding window
	keyFunc func(runtime.Object) (string, error) // Computes the storage key of an object
	getAttrsFunc func(runtime.Object) (labels.Set, fields.Set, bool, error) // Gets the label and field sets of an object
	cache []watchCacheElement // Circular queue holding the event history
	startIndex int // Start index of the circular queue
	endIndex int // End index of the circular queue
	store cache.Store // Latest state of every object
	resourceVersion uint64
	onReplace func()
	// Called at the end of every Add/Update/Delete on the cache; the event it
	// receives also carries the previous value of the processed object.
	onEvent func(*watchCacheEvent)
	clock clock.Clock
	versioner storage.Versioner
}
```

All events are stored in cache (the circular queue that forms the sliding window), while the latest state of each object is kept in store.
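The sliding window formed by `cache`, `startIndex`, and `endIndex` can be sketched as a fixed-capacity circular buffer in which the indexes only grow and slot `i` lives at `cache[i % capacity]`. This is a simplified illustration with hypothetical names (`window`, `add`, `since`, `lastEvicted`); the real watchCache also maintains `store` and handles many more cases. When a watcher asks for a resourceVersion whose following events have already been overwritten, the request cannot be served from the window; in Kubernetes this surfaces as a "too old resource version" (HTTP 410 Gone) error.

```go
package main

import (
	"errors"
	"fmt"
)

// event is a hypothetical, trimmed-down watchCacheEvent.
type event struct {
	Key             string
	ResourceVersion uint64
}

// window mimics watchCache's cache/startIndex/endIndex: the indexes only grow,
// and slot i lives at cache[i%capacity], so [startIndex, endIndex) is the window.
type window struct {
	cache       []event
	startIndex  int    // index of the oldest event still held
	endIndex    int    // one past the index of the newest event
	lastEvicted uint64 // resourceVersion of the most recently overwritten event
}

// newWindow assumes capacity > 0.
func newWindow(capacity int) *window {
	return &window{cache: make([]event, capacity)}
}

var errTooOld = errors.New("too old resource version")

// add appends an event, overwriting the oldest one when the window is full.
func (w *window) add(e event) {
	if w.endIndex-w.startIndex == len(w.cache) {
		w.lastEvicted = w.cache[w.startIndex%len(w.cache)].ResourceVersion
		w.startIndex++
	}
	w.cache[w.endIndex%len(w.cache)] = e
	w.endIndex++
}

// since returns the events newer than rv, or errTooOld if an event the
// caller has not seen yet was already overwritten.
func (w *window) since(rv uint64) ([]event, error) {
	if rv < w.lastEvicted {
		return nil, errTooOld
	}
	var out []event
	for i := w.startIndex; i < w.endIndex; i++ {
		if e := w.cache[i%len(w.cache)]; e.ResourceVersion > rv {
			out = append(out, e)
		}
	}
	return out, nil
}

func main() {
	w := newWindow(3)
	for rv := uint64(1); rv <= 5; rv++ {
		w.add(event{Key: fmt.Sprintf("pod-%d", rv), ResourceVersion: rv})
	}
	evs, _ := w.since(2)
	fmt.Println(len(evs)) // 3: versions 3, 4 and 5 are still in the window
	_, err := w.since(1)
	fmt.Println(err) // too old resource version
}
```

A fixed-size ring keeps memory bounded no matter how many events flow through; the cost is that clients which fall too far behind must re-list instead of resuming their watch.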

4. The cacheWatcher takes all events since a given resourceVersion from the watchCache (initEvents), puts them into its input channel, passes them through its filter into the result channel, and returns them to the client.

```go
type cacheWatcher struct {
	sync.Mutex // Synchronization lock
	input chan *watchCacheEvent // Input channel: new events are broadcast into it as they occur
	result chan watch.Event // Output channel: events delivered to the client
	done chan struct{}
	filter filterWithAttrsFunc // Filter applied to each event
	stopped bool
	forget func(bool)
	versioner storage.Versioner
}
```
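The flow of a single cacheWatcher, from `input` through `filter` to `result`, can be sketched with two channels and a goroutine: it first replays the initEvents taken from the watchCache, then forwards live events, and only events that pass the filter reach the client. The `watchEvent` type, `filterFunc`, and `newWatcher` are hypothetical simplifications; the real cacheWatcher also handles bookmarks, timeouts, and stopping.

```go
package main

import "fmt"

// watchEvent is a hypothetical stand-in for watchCacheEvent / watch.Event.
type watchEvent struct {
	Type      string
	Namespace string
	Name      string
}

// filterFunc decides whether the watching client cares about an event.
type filterFunc func(watchEvent) bool

type cacheWatcher struct {
	input  chan watchEvent // events broadcast by the cacher
	result chan watchEvent // events delivered to the client
	filter filterFunc
}

// newWatcher starts the processing goroutine for one watch request.
func newWatcher(initEvents []watchEvent, filter filterFunc) *cacheWatcher {
	w := &cacheWatcher{
		input:  make(chan watchEvent, 10),
		result: make(chan watchEvent, 10),
		filter: filter,
	}
	go w.process(initEvents)
	return w
}

// process replays initEvents, then forwards live events from input.
// It closes result once input has been closed and drained.
func (w *cacheWatcher) process(initEvents []watchEvent) {
	defer close(w.result)
	for _, e := range initEvents {
		if w.filter(e) {
			w.result <- e
		}
	}
	for e := range w.input {
		if w.filter(e) {
			w.result <- e
		}
	}
}

func main() {
	inDefault := func(e watchEvent) bool { return e.Namespace == "default" }
	initEvents := []watchEvent{{"ADDED", "default", "web-0"}, {"ADDED", "kube-system", "dns-0"}}
	w := newWatcher(initEvents, inDefault)
	w.input <- watchEvent{"MODIFIED", "default", "web-0"}
	w.input <- watchEvent{"ADDED", "kube-system", "proxy-0"}
	close(w.input)
	for e := range w.result {
		fmt.Println(e.Type, e.Namespace+"/"+e.Name)
	}
	// ADDED default/web-0
	// MODIFIED default/web-0
}
```

Replaying initEvents before reading `input` preserves ordering: the client sees the backlog first and the live stream afterwards, with the filter applied uniformly to both.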

Viewing Kubernetes component communication through the pod creation process

Let's return to the pod creation flow chart above. It shows the following:

1. First, each component sends a watch request to the Apiserver when it initializes; this is the step marked 0 in the figure. The routes that handle watch requests are registered when kube-apiserver is created and registers its API routes.

2. From kubectl to the Apiserver, every create and update operation along the way is stored in etcd.

3. Each component sends a watch request to the Apiserver, which retrieves the latest data from etcd and returns it.

Note: When an event occurs, the Apiserver pushes it into the channels of these watchers. Each watcher has its own filter; if the event matches what it is watching for, the watcher sends the data to the corresponding component through its channel.
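The fan-out in this note can be sketched as a dispatcher that offers each event to every registered watcher, with each watcher's own filter deciding whether the event is delivered. This is a hypothetical simplification of the cacher's dispatch loop; the names are illustrative, and the real implementation indexes watchers by trigger values and bounds how long it waits on slow consumers (the `dispatchTimeoutBudget` field in the Cacher struct).

```go
package main

import (
	"fmt"
	"sync"
)

// event is a hypothetical change notification.
type event struct {
	Kind string // e.g. "Pod" or "Node"
	Name string
}

// watcher is one component's subscription with its own filter and channel.
type watcher struct {
	name   string
	filter func(event) bool
	ch     chan event
}

// dispatcher is a hypothetical stand-in for the Cacher's dispatch loop.
type dispatcher struct {
	mu       sync.Mutex
	watchers []*watcher
}

// register adds a watcher with a buffered channel and returns it.
func (d *dispatcher) register(name string, filter func(event) bool) *watcher {
	w := &watcher{name: name, filter: filter, ch: make(chan event, 16)}
	d.mu.Lock()
	d.watchers = append(d.watchers, w)
	d.mu.Unlock()
	return w
}

// dispatch offers the event to every watcher; each watcher's filter decides
// whether it is delivered. A full buffer blocks here; the real cacher
// bounds this wait with a time budget.
func (d *dispatcher) dispatch(e event) {
	d.mu.Lock()
	defer d.mu.Unlock()
	for _, w := range d.watchers {
		if w.filter(e) {
			w.ch <- e
		}
	}
}

func main() {
	d := &dispatcher{}
	scheduler := d.register("scheduler", func(e event) bool { return e.Kind == "Pod" })
	nodeCtl := d.register("node-controller", func(e event) bool { return e.Kind == "Node" })
	d.dispatch(event{"Pod", "web-0"})
	d.dispatch(event{"Node", "node-a"})
	fmt.Println(scheduler.name, <-scheduler.ch) // scheduler {Pod web-0}
	fmt.Println(nodeCtl.name, <-nodeCtl.ch)     // node-controller {Node node-a}
}
```

Filtering per watcher means a single watch on etcd can serve many components, each seeing only the events it asked for.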