Introduction to RAID

RAID stands for Redundant Array of Independent Disks. The basic idea is to combine multiple disks into a single disk array, which can greatly improve performance. RAID was originally designed to replace large, expensive disks with a combination of small, inexpensive ones. It was also intended that a disk failure should not compromise access to data, which led to the development of several levels of data protection.

RAID makes full use of multiple hard disks. It increases speed and capacity and, at redundant levels, provides fault tolerance to keep data safe. It is also easy to manage: a redundant array keeps working when one of its disks fails and is not affected by the damaged disk.

Introduction to RAID Disk Array

RAID combines a set of hard disks into an array. At redundant RAID levels, the failure of a single disk does not cause data loss, and the array offers higher availability and fault tolerance than a single disk.

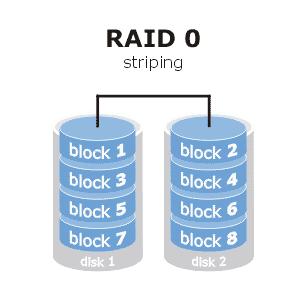

Introduction to RAID 0

RAID 0, commonly known as a striped volume, works the same way as a striped volume in Windows. It combines two or more hard disks into one logical disk, splits data into segments (chunks) stored across the disks, and reads and writes them in parallel. As a result, read and write speed can approach N times that of a single disk, where N is the number of disks. RAID 0 has no redundancy: if any one disk fails, the data on the whole array becomes unavailable.
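The following minimal Python sketch makes the striping concrete. It is an illustration, not mdadm or kernel code. It maps a byte offset on a RAID 0 array to a member disk and an offset on that disk, assuming equal-sized member disks and the 512K chunk size that mdadm chooses by default in the session below. The function name `raid0_locate` is hypothetical.

```python
from typing import Tuple

CHUNK_SIZE = 512 * 1024  # bytes; mdadm reports "chunk size defaults to 512K"


def raid0_locate(offset: int, num_disks: int, chunk_size: int = CHUNK_SIZE) -> Tuple[int, int]:
    """Map a byte offset on a RAID 0 array to (disk index, byte offset on that disk).

    Assumes equal-sized member disks, so chunks are dealt out round-robin:
    chunk 0 -> disk 0, chunk 1 -> disk 1, ..., chunk N -> disk 0 again.
    """
    chunk_index, within_chunk = divmod(offset, chunk_size)
    stripe, disk = divmod(chunk_index, num_disks)
    return disk, stripe * chunk_size + within_chunk


if __name__ == "__main__":
    # Two member disks, as with sdb1 and sdc1 in this article.
    for chunk in range(4):
        disk, disk_offset = raid0_locate(chunk * CHUNK_SIZE, num_disks=2)
        print(f"array chunk {chunk} -> disk {disk}, offset {disk_offset // 1024}K")
```

Consecutive chunks alternate between the disks. This is why large sequential reads and writes keep both disks busy at once.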


Characteristics:

- High read and write performance

- Unreliable (no fault tolerance)

- Two or more disks of the same size

- Capacity is the sum of the capacities of all member disks

Building RAID 0

1. First, add two hard disks of 20 GB each to the virtual machine. Restart Linux so that the new disks are detected. Then partition each new disk and change the partition type (system ID) to fd (Linux raid autodetect).

[root@localhost ~]# cd /dev

[root@localhost dev]# ls

...

cdrom lp2 sda4 tty16 tty38 tty6 vcs6

char lp3 sda5 tty17 tty39 tty60 vcsa

console mapper sdb tty18 tty4 tty61 vcsa1

core mcelog sdc tty19 tty40 tty62 vcsa2

cpu mem sg0 tty2 tty41 tty63 vcsa3

...

[root@localhost dev]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

...

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x1570d5d5

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

// Enter w to write the partition table and exit, then partition the sdc hard disk the same way.

[root@localhost ~]# fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

...

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x1570d5d5

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sdc: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xe4b54d43

Device Boot Start End Blocks Id System

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

2. Check whether the mdadm package (the tool used to build software RAID) is installed, because the RAID arrays are created with the mdadm command. If it is not installed, install it with yum (yum install mdadm).

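Command format: mdadm -C -v [/dev/name of the RAID device to create] -lN -nN [disk devices] -xN [spare disk devices], where each N is the RAID level or the number of disks

Common Options

- -C: Create a new array

- -v: Verbose output (show the detailed process)

- -l: RAID level

- -n: Number of active disks

- -x: Number of spare (hot standby) disks

- -D: Display details of a RAID array

- -f: Mark a disk in the array as faulty (-r then removes it from the array)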
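To illustrate the command format above, the hypothetical Python helper below assembles the same argument list from a RAID level, the active devices and optional spares. It is a sketch that only builds the argv. It does not call mdadm or validate the devices.

```python
from typing import List, Sequence


def build_mdadm_create(array: str, level: int, devices: Sequence[str],
                       spares: Sequence[str] = ()) -> List[str]:
    """Hypothetical helper: build the argv for
    mdadm -C -v <array> -l<level> -n<count> <devices> [-x<count> <spares>].

    It only assembles arguments; it does not run mdadm or check the devices.
    """
    if not devices:
        raise ValueError("at least one active device is required")
    argv = ["mdadm", "-C", "-v", array, f"-l{level}", f"-n{len(devices)}", *devices]
    if spares:
        argv += [f"-x{len(spares)}", *spares]
    return argv


if __name__ == "__main__":
    cmd = build_mdadm_create("/dev/md1", 1, ["/dev/sdb1", "/dev/sdc1"], spares=["/dev/sdd1"])
    print(" ".join(cmd))
```

In this article, the shell glob /dev/sd[b-c]1 simply expands to the two device names passed explicitly here.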

[root@localhost ~]# rpm -q mdadm

mdadm-4.0-5.el7.x86_64

3. Create the RAID 0 array and check that it was created successfully. You can inspect it with mdadm -D [RAID device] or by viewing /proc/mdstat.

[root@localhost ~]# mdadm -C -v /dev/md0 -l0 -n2 /dev/sd[b-c]1 //Build a RAID 0 array from sdb1 and sdc1

mdadm: chunk size defaults to 512K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

[root@localhost ~]# mdadm -D /dev/md0 //View the details of the RAID array

/dev/md0:

Version : 1.2

Creation Time : Sat Aug 24 09:10:19 2019

Raid Level : raid0

Array Size : 41908224 (39.97 GiB 42.91 GB) //capacity

Raid Devices : 2 //Number of disks

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sat Aug 24 09:10:19 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : 9b56f231:d4687383:83705a03:7d5d606a

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1 //Disks that make up RAID

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# cat /proc/mdstat //View the RAID array status

Personalities : [raid0]

md0 : active raid0 sdc1[1] sdb1[0] //Information about the newly created array

41908224 blocks super 1.2 512k chunks

unused devices: <none>

4. Create a file system on the RAID 0 array (format it), then mount it so it can be used.

[root@localhost ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
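mkfs.xfs picked up the array's stripe geometry automatically: sunit and swidth are given in filesystem blocks (bsize=4096). Here is a small worked check in Python. It assumes the 512K chunk size and counts only the disks that hold data. The helper name is illustrative.

```python
from typing import Tuple


def xfs_stripe_geometry(chunk_kib: int, data_disks: int, block_size: int = 4096) -> Tuple[int, int]:
    """Return (sunit, swidth) in filesystem blocks for a striped md array.

    sunit  = one chunk expressed in filesystem blocks
    swidth = sunit times the number of disks that carry data in each stripe
    """
    sunit = chunk_kib * 1024 // block_size
    swidth = sunit * data_disks
    return sunit, swidth


if __name__ == "__main__":
    print(xfs_stripe_geometry(512, data_disks=2))      # RAID 0, two disks -> (128, 256)
    print(xfs_stripe_geometry(512, data_disks=3 - 1))  # RAID 5, three disks, one parity -> (128, 256)
```

The three-disk RAID 5 array later in this article also has two data disks per stripe, so mkfs.xfs reports the same sunit=128 swidth=256. RAID 1 does no striping, and there mkfs.xfs reports sunit=0 swidth=0.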

[root@localhost ~]# mkdir /opt/md01

[root@localhost ~]# mount /dev/md0 /opt/md01

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 20G 4.3G 16G 22% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 9.0M 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda5 10G 50M 10G 1% /home

/dev/sda1 2.0G 174M 1.9G 9% /boot

tmpfs 378M 12K 378M 1% /run/user/42

tmpfs 378M 0 378M 0% /run/user/0

/dev/md0 40G 33M 40G 1% /opt/md01

Introduction to RAID 1

RAID 1 works like a mirrored volume in Windows. It consists of at least two hard disks that store identical copies of the data, which provides redundancy. No parity is calculated. Every write goes to all member disks, so writing is relatively slow, while reading is relatively fast because any copy can serve a request.
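Below is a toy Python model of the mirroring behaviour described above. It assumes a simple in-memory list per disk; the Mirror class is hypothetical and not part of mdadm. Each write goes to every healthy member, and a read needs just one healthy copy.

```python
from typing import List, Optional, Set


class Mirror:
    """Toy RAID 1 model: every write goes to all healthy members (hypothetical class)."""

    def __init__(self, members: int, blocks: int):
        self.disks: List[List[Optional[bytes]]] = [[None] * blocks for _ in range(members)]
        self.failed: Set[int] = set()

    def write(self, block: int, data: bytes) -> None:
        # One write per healthy member: this duplication is why mirrored writes are slower.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                disk[block] = data

    def read(self, block: int) -> Optional[bytes]:
        # Any healthy member holds a full copy. This sketch simply uses the first one;
        # the real md driver can spread reads across members.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                return disk[block]
        raise IOError("all mirror members have failed")

    def fail(self, index: int) -> None:
        self.failed.add(index)


if __name__ == "__main__":
    mirror = Mirror(members=2, blocks=4)
    mirror.write(0, b"siti1.txt")
    mirror.fail(0)           # comparable to marking sdb1 faulty with mdadm -f
    print(mirror.read(0))    # b'siti1.txt', served by the surviving member
```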


Characteristics:

- High reliability

- Not scalable

- Two or more disks of the same size

- Usable capacity equals that of one disk, i.e. half of the total for a two-disk mirror

Building RAID 1

1. First, add three hard disks of 20 GB each to the virtual machine. Restart Linux so that the new disks are detected, partition each one, and change the partition type to fd (Linux raid autodetect). I use three disks here because production RAID deployments usually include one or more spare disks. When an active disk fails, a spare automatically takes its place and the array keeps running.

[root@localhost ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x95ce2aa8.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x95ce2aa8

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

// Partition sdc and sdd the same way.

[root@localhost ~]# fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

...

Disk /dev/sdc: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xac9b4564

Device Boot Start End Blocks Id System

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x7be39280

Device Boot Start End Blocks Id System

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x95ce2aa8

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

2. Build the RAID 1 array with mdadm, adding a spare disk, then check that it was built successfully.

[root@localhost ~]# mdadm -C -v /dev/md1 -l1 -n2 /dev/sd[b-c]1 -x1 /dev/sdd1 //Build a RAID 1 array with a spare disk

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20954112K

Continue creating array? //mdadm asks for confirmation

Continue creating array? (y/n) y //Type y and press Enter to confirm

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@localhost ~]# cat /proc/mdstat //View the RAID status

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20954112 blocks super 1.2 [2/2] [UU]

[========>............] resync = 41.0% (8602240/20954112) finish=0.9min speed=206300K/sec //The two mirror disks are being synchronized; redundancy is not complete until the resync finishes

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20954112 blocks super 1.2 [2/2] [UU] //Synchronization complete; the array is ready for use

unused devices: <none>
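The [2/2] [UU] field is the quickest health check. The first bracket shows how many member devices the array expects and how many are working. In the second bracket, U marks a working member and _ marks a missing or failed one. The minimal Python sketch below extracts this field, plus any resync or recovery progress, from /proc/mdstat text. It assumes the text describes a single array, and the regular expressions are my own rather than an official format specification.

```python
import re
from typing import Any, Dict

STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")
PROGRESS_RE = re.compile(r"(resync|recovery)\s*=\s*[\d.]+%\s*\((\d+)/(\d+)\)")


def parse_md_status(text: str) -> Dict[str, Any]:
    """Summarise member health and resync/recovery progress for one array in /proc/mdstat."""
    result: Dict[str, Any] = {}
    status = STATUS_RE.search(text)
    if status:
        expected, working, flags = int(status.group(1)), int(status.group(2)), status.group(3)
        result["expected"] = expected
        result["working"] = working
        result["degraded"] = working < expected
        # "_" marks a slot with no working member, e.g. [_U] means slot 0 is down.
        result["missing_slots"] = [i for i, flag in enumerate(flags) if flag == "_"]
    progress = PROGRESS_RE.search(text)
    if progress:
        done, total = int(progress.group(2)), int(progress.group(3))
        result["operation"] = progress.group(1)
        # Truncated (not rounded) to one decimal place, matching the percentages in this article.
        result["percent"] = done * 1000 // total / 10
    return result


if __name__ == "__main__":
    sample = """md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)
      20954112 blocks super 1.2 [2/1] [_U]
      [========>............]  recovery = 41.0% (8602368/20954112) finish=0.9min speed=206284K/sec"""
    print(parse_md_status(sample))
```

The sample text is the output that appears after sdb1 is marked faulty. The sketch reports the array as degraded, with slot 0 missing and recovery at 41.0%.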

[root@localhost ~]# mdadm -D /dev/md1 //View the RAID details

/dev/md1:

Version : 1.2

Creation Time : Sat Aug 24 10:23:31 2019

Raid Level : raid1

Array Size : 20954112 (19.98 GiB 21.46 GB) //capacity

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 2 //Number of disks used

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sat Aug 24 10:25:16 2019

State : clean

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 459d9233:201d4c25:73f1b967:3b477186

Events : 17

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1 //Disks that make up the RAID array

1 8 33 1 active sync /dev/sdc1

2 8 49 - spare /dev/sdd1 //The spare disk

3. Create a file system on the RAID 1 array (format it), then mount it so it can be used.

[root@localhost ~]# mkfs.xfs /dev/md1

meta-data=/dev/md1 isize=512 agcount=4, agsize=1309632 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5238528, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@localhost ~]# mkdir /opt/si1

[root@localhost ~]# mount /dev/md1 /opt/si1

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 20G 4.3G 16G 22% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 9.0M 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda5 10G 50M 10G 1% /home

/dev/sda1 2.0G 174M 1.9G 9% /boot

tmpfs 378M 12K 378M 1% /run/user/42

tmpfs 378M 0 378M 0% /run/user/0

/dev/md1 20G 33M 20G 1% /opt/si1

4. Write some files to the mount point, then use mdadm to mark one disk as faulty. Check whether the spare disk automatically takes its place and whether the files can still be read.

[root@localhost ~]# touch /opt/si1/siti{1..10}.txt //Create ten txt files in si1

[root@localhost ~]# ls /opt/si1 //View the files

siti10.txt siti2.txt siti4.txt siti6.txt siti8.txt

siti1.txt siti3.txt siti5.txt siti7.txt siti9.txt

[root@localhost ~]# mdadm -f /dev/md1 /dev/sdb1 //Mark sdb1 in the array as faulty

mdadm: set /dev/sdb1 faulty in /dev/md1

[root@localhost ~]# cat /proc/mdstat //View the RAID array status

Personalities : [raid1]

md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)

20954112 blocks super 1.2 [2/1] [_U]

[========>............] recovery = 41.0% (8602368/20954112) finish=0.9min speed=206284K/sec //Start resynchronizing disks

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1 //View the details of the RAID 1 array

/dev/md1:

Version : 1.2

Creation Time : Sat Aug 24 10:23:31 2019

Raid Level : raid1

Array Size : 20954112 (19.98 GiB 21.46 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sat Aug 24 11:12:25 2019

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 1

Spare Devices : 1

Consistency Policy : resync

Rebuild Status : 86% complete

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 459d9233:201d4c25:73f1b967:3b477186

Events : 33

Number Major Minor RaidDevice State

2 8 49 0 spare rebuilding /dev/sdd1 //The spare disk replaces the failed disk

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1 //sdb1 is marked faulty

[root@localhost ~]# ls /opt/si1 //View the files in si1

siti10.txt siti2.txt siti4.txt siti6.txt siti8.txt //Display files, you can view and use them properly

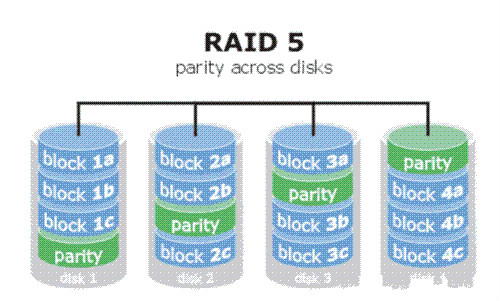

siti1.txt siti3.txt siti5.txt siti7.txt siti9.txtIntroduction to RAID 5

RAID 5 consists of at least three hard disks. It distributes data across all the disks in the array together with parity information, which is rotated among the disks rather than kept on one dedicated disk. The parity is computed from the data blocks, so when any one disk is lost, the RAID controller (for software RAID, the Linux md driver) can reconstruct the missing data from the remaining data and parity.
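The reconstruction described above relies on XOR parity. The minimal Python sketch below uses two 4-byte data blocks to stand in for the data disks and their XOR as the parity block. The block contents are made up for illustration.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length blocks byte by byte; RAID 5 parity is the XOR of a stripe's data blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must all have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


if __name__ == "__main__":
    d0 = b"RAID"                      # data block on disk 0 (made-up contents)
    d1 = b"five"                      # data block on disk 1
    parity = xor_blocks(d0, d1)       # parity block on disk 2

    # Disk 0 fails: XOR of everything that survived rebuilds the lost block.
    rebuilt = xor_blocks(d1, parity)
    print(rebuilt)                    # b'RAID'
```

This works because XOR-ing a value with itself cancels it out: d1 ^ (d0 ^ d1) leaves d0. The same holds with more data disks per stripe.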


Characteristics:

- High read performance, moderate write performance, high reliability

- At least 3 disks of the same size

- Usable capacity is (n-1)/n of the total disk capacity, where n is the number of disks
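The capacity rules in the three characteristic lists can be checked against the array sizes that mdadm reports in this article. The small Python sketch below assumes equal-sized members and the minimum disk counts stated above. Spare disks are not counted.

```python
def usable_capacity(level: int, disks: int, disk_size: int) -> int:
    """Usable capacity of an md array built from `disks` equal members of `disk_size` each.

    RAID 0: every member holds data               -> n * size
    RAID 1: every member holds the same copy      -> size
    RAID 5: one member's worth of space is parity -> (n - 1) * size
    Spare disks are not counted; they hold no data until a member fails.
    """
    minimum_disks = {0: 2, 1: 2, 5: 3}  # minimums as stated in this article
    if level not in minimum_disks:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum_disks[level]:
        raise ValueError(f"RAID {level} needs at least {minimum_disks[level]} disks")
    if level == 0:
        return disks * disk_size
    if level == 1:
        return disk_size
    return (disks - 1) * disk_size


if __name__ == "__main__":
    member_kib = 20954112  # "Used Dev Size" from mdadm -D, in 1 KiB blocks
    for level, count in [(0, 2), (1, 2), (5, 3)]:
        print(f"RAID {level} with {count} disks: {usable_capacity(level, count, member_kib)} KiB")
```

The example uses a per-member size of 20954112, which is the Used Dev Size that mdadm -D reports for the RAID 1 and RAID 5 arrays. With it, the results 41908224, 20954112 and 41908224 match the Array Size values shown for md0, md1 and md5.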

Building RAID 5

1. First, add four hard disks of 20 GB each to the virtual machine. Restart Linux so that the new disks are detected, partition each one, and change the partition type to fd (Linux raid autodetect). As with RAID 1 above, three disks build the RAID 5 array and the fourth serves as a spare.

[root@localhost ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x6247f95d.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x6247f95d

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

// Partition sdc, sdd and sde the same way.

[root@localhost ~]# fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

...

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x88c98a9f

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xf265b801

Device Boot Start End Blocks Id System

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sdc: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x7922c5a3

Device Boot Start End Blocks Id System

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

Disk /dev/sde: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x54d78d57

Device Boot Start End Blocks Id System

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

2. Build the RAID 5 array with mdadm, adding a spare disk, then check that it was built successfully.

[root@localhost ~]# mdadm -C -v /dev/md5 -l5 -n3 /dev/sd[b-d]1 -x1 /dev/sde1 //Build a RAID 5 array with a spare disk

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20954112K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.
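mdadm chose the left-symmetric layout, which /proc/mdstat later shows as "algorithm 2". The sketch below prints where data blocks (D) and parity (P) land on three disks under that layout. It follows my reading of the placement rule in the Linux md driver, so treat it as an illustration rather than a reference implementation.

```python
from typing import List


def left_symmetric_layout(raid_disks: int, stripes: int) -> List[List[str]]:
    """Return, per stripe, which block sits on each disk in a RAID 5 left-symmetric layout.

    Parity for stripe s goes on disk (data_disks - s % raid_disks), so it rotates
    from the last disk towards the first; data blocks start on the disk just
    after the parity disk and wrap around.
    """
    data_disks = raid_disks - 1
    rows = []
    block = 0
    for stripe in range(stripes):
        row = [""] * raid_disks
        parity_disk = data_disks - stripe % raid_disks
        row[parity_disk] = "P"
        for i in range(data_disks):
            row[(parity_disk + 1 + i) % raid_disks] = f"D{block}"
            block += 1
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Columns are disk 0, disk 1, disk 2 (sdb1, sdc1, sdd1 in this article).
    for row in left_symmetric_layout(raid_disks=3, stripes=3):
        print("  ".join(f"{cell:>3}" for cell in row))
```

Because the parity block moves to a different disk on every stripe, no single disk has to absorb all the parity writes.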

[root@localhost ~]# cat /proc/mdstat //View the status

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[=======>.............] recovery = 37.6% (7898112/20954112) finish=1.0min speed=200093K/sec //Synchronizing...

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5 //View the details

/dev/md5:

Version : 1.2

Creation Time : Sat Aug 24 11:59:51 2019

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB) //Capacity 40G

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Aug 24 12:01:29 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 99% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 968fa4f5:020f5fec:4c726d63:df4b4b9b

Events : 16

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1 //Building RAID 5 disks

1 8 33 1 active sync /dev/sdc1

4 8 49 2 spare rebuilding /dev/sdd1 //Still being synchronized

3 8 65 - spare /dev/sde1 //Spare disk

[root@localhost ~]# cat /proc/mdstat //Check the status again

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

//Synchronization complete

unused devices: <none>

3. Mark sdb1 in the RAID 5 array as faulty to see whether the spare disk replaces it.

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdb1 //Mark sdb1 in the RAID 5 array as faulty

mdadm: set /dev/sdb1 faulty in /dev/md5

[root@localhost ~]# cat /proc/mdstat //View the status

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[=>...................] recovery = 5.7% (1212928/20954112) finish=1.3min speed=242585K/sec //Start resynchronizing

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5 //View the details

/dev/md5:

Version : 1.2

Creation Time : Sat Aug 24 11:59:51 2019

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Aug 24 12:13:10 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 14% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 968fa4f5:020f5fec:4c726d63:df4b4b9b

Events : 22

Number Major Minor RaidDevice State

3 8 65 0 spare rebuilding /dev/sde1 //The spare disk replaces sdb1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1 //sdb1 is marked faulty

4. Create a file system on the RAID 5 array (format it), then mount it so it can be used.

[root@localhost ~]# mkfs.xfs /dev/md5

meta-data=/dev/md5 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@localhost ~]# mkdir /opt/siti02

[root@localhost ~]# mount /dev/md5 /opt/siti02

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 20G 4.3G 16G 22% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 9.1M 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda5 10G 50M 10G 1% /home

/dev/sda1 2.0G 174M 1.9G 9% /boot

tmpfs 378M 12K 378M 1% /run/user/42

tmpfs 378M 0 378M 0% /run/user/0

/dev/md5 40G 33M 40G 1% /opt/siti02

Note:

Before creating each RAID array, I restored the Linux system in the virtual machine from a snapshot taken earlier, so every example starts from a clean system. You can also use your own setup. I hope this helps!