As described in an earlier article, virtual nodes can be deployed on an ACK cluster with Helm to improve the elasticity of the cluster. ECI elastic container instances deployed through virtual nodes now also support unified collection of stdout output and log files into Aliyun Log Service (SLS). All logs can be collected in the same Log Service project. Moreover, logs are collected in the same way as for regular containers on the cluster, so the two combine seamlessly.

This article introduces log collection in combination with the elasticity of virtual nodes.

Deployment of Log Service Support Components in ACK Cluster

On the ACK cluster installation page, check the option to use Log Service, and the cluster will install the components required for log collection.

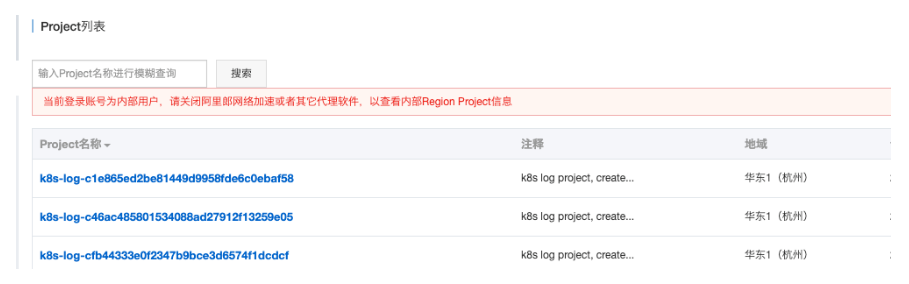

After the cluster is installed, you can view the project named k8s-log-{Kubernetes Cluster ID} in the Log Service console. The collected business container logs are stored under this project.

For existing clusters, deploy the related components by following the Aliyun help document. If the Log Service components are not deployed in the cluster, the logs of ECI instances are collected into a project whose name begins with eci-log-default-project.

Deployment of virtual nodes

You can deploy virtual nodes in the cluster by following the article "ACK Container Service Publishes Virtual Node Addon to Rapidly Deploy Virtual Nodes to Improve Cluster Elasticity".

Use YAML template to collect common business container logs

The YAML template uses standard Kubernetes syntax. To specify the collection configuration for a container, add env entries that define the collection configuration and custom tags, and create the corresponding volumeMounts and volumes according to the collection configuration. The following is a simple Deployment example:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: alpine
      name: alpine
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: alpine
      template:
        metadata:
          labels:
            app: alpine
        spec:
          containers:
          - image: alpine
            imagePullPolicy: Always
            args:
            - ping
            - 127.0.0.1
            name: alpine
            env:
            ######### Configure environment variables ###########
            - name: aliyun_logs_test-stdout
              value: stdout
            - name: aliyun_logs_test-file
              value: /log/*.log
            - name: aliyun_logs_log_tags
              value: tag1=v1
            #################################
            ######### Configure volume mounts #######
            volumeMounts:
            - name: volume-sls
              mountPath: /log
          volumes:
          - name: volume-sls
          ###############################

Three parts need to be configured according to your needs, generally in the following order.

- The first part uses environment variables to create your collection configurations and custom tags. All configuration-related environment variables are prefixed with aliyun_logs_.

- The rules for creating a collection configuration are as follows:

      - name: aliyun_logs_{Logstore name}
        value: {log collection path}

  In the example, two collection configurations are created. The env named aliyun_logs_test-stdout creates a configuration with a Logstore named test-stdout and a log collection path of stdout, so the standard output of the container is collected into the test-stdout Logstore.

  Note: The Logstore name cannot contain an underscore (_); use a hyphen (-) instead.

- The rules for creating custom tags are as follows:

      - name: aliyun_logs_{any name that does not contain '_'}_tags
        value: {Tag name}={Tag value}

  After a tag is configured, the corresponding field is automatically attached to the container's logs when they are collected into Log Service.

- If you specify a non-stdout collection path in a collection configuration, you need to create the corresponding volumeMounts in this part.

  In the example, the collection configuration collects /log/*.log, so a volumeMounts entry for /log is added accordingly.
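The naming rules above can be checked mechanically before a manifest is applied. The following is a minimal sketch, not part of any Log Service tooling: `logstore_env_name` and `tag_env_name` are hypothetical helper names introduced here, and they encode only the rules stated in this article (the `aliyun_logs_` prefix, no underscore in the name, and the `_tags` suffix for tags).

```shell
# Hypothetical helpers that build env variable names from the rules in this article.

# Print the env name for a collection configuration.
# Fails if the Logstore name is empty or contains an underscore.
logstore_env_name() {
  local logstore="$1"
  case "$logstore" in
    ""|*_*)
      echo "invalid Logstore name: '$logstore' (must be non-empty, no '_')" >&2
      return 1
      ;;
  esac
  printf 'aliyun_logs_%s\n' "$logstore"
}

# Print the env name for a custom tag entry.
# Fails if the entry name is empty or contains an underscore.
tag_env_name() {
  local name="$1"
  case "$name" in
    ""|*_*)
      echo "invalid tag entry name: '$name' (must be non-empty, no '_')" >&2
      return 1
      ;;
  esac
  printf 'aliyun_logs_%s_tags\n' "$name"
}
```

For example, `logstore_env_name test-stdout` prints `aliyun_logs_test-stdout`, while `logstore_env_name test_stdout` returns a non-zero status because of the underscore.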

Save the above YAML as test.yaml and apply it to the cluster:

    $ kubectl create ns virtual
    $ kubectl create -f test.yaml -n virtual

    # View pod deployment
    $ kubectl get pods -n virtual -o wide
    NAME                      READY   STATUS    RESTARTS   AGE   IP             NODE                       NOMINATED NODE
    alpine-57c9977fd6-bsvwh   1/1     Running   0          10m   172.18.1.161   cn-hangzhou.10.1.190.228   <none>
    alpine-57c9977fd6-wc89v   1/1     Running   0          10m   172.18.0.169   cn-hangzhou.10.1.190.229   <none>
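Before looking for logs, it can help to confirm that every pod has actually reached the Running state. The sketch below is one possible way to do that from the table output; `all_pods_running` is a hypothetical helper name, and it assumes the default `kubectl get pods` layout in which STATUS is the third column.

```shell
# Hypothetical helper: read `kubectl get pods` table output on stdin and
# succeed only if there is at least one pod and every pod is Running.
all_pods_running() {
  awk '
    $1 == "NAME" { next }            # skip the header row
    NF == 0 { next }                 # skip blank lines
    { total++ }
    $3 != "Running" { bad++ }        # STATUS is the third column
    END { exit (total > 0 && bad == 0) ? 0 : 1 }
  '
}

# Example (requires cluster access):
#   kubectl get pods -n virtual -o wide | all_pods_running && echo "all pods are Running"
```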

View the logs

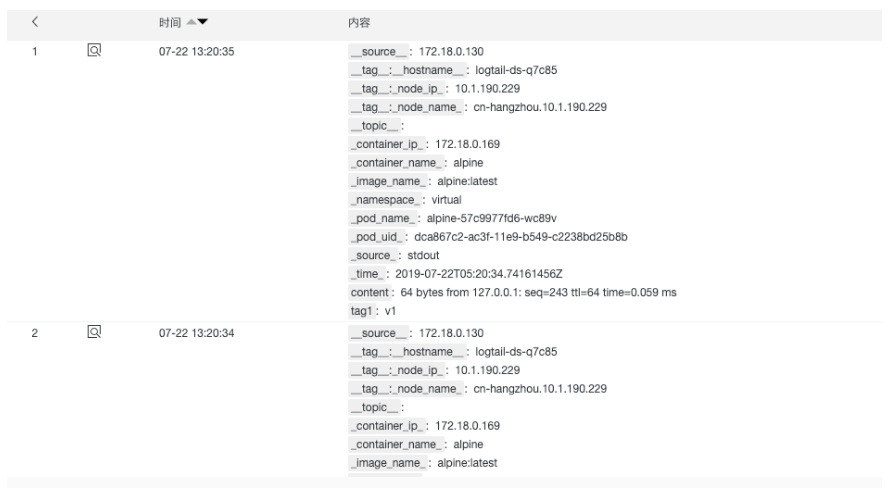

In the Log Service console, find the test-stdout Logstore under the corresponding project and run a query to see the collected stdout logs of the regular containers.

Expanding Business Containers to Virtual Nodes

Label the namespace virtual created above so that pods in it are deployed through virtual nodes, then scale the deployment out by two pods, which are placed on the virtual nodes.

    # Mark namespace
    $ kubectl label namespace virtual virtual-node-affinity-injection=enabled

    # scale deployment/alpine
    $ kubectl scale --replicas=4 deployments/alpine -n virtual

    # Looking at the pod deployment, you can see that two are deployed in the normal node and two are deployed in the virtual node.
    $ kubectl get pods -n virtual -o wide
    NAME                      READY   STATUS    RESTARTS   AGE   IP             NODE                       NOMINATED NODE
    alpine-57c9977fd6-2ctp7   1/1     Running   0          23s   10.1.190.231   virtual-kubelet            <none>
    alpine-57c9977fd6-b4445   1/1     Running   0          23s   10.1.190.230   virtual-kubelet            <none>
    alpine-57c9977fd6-bsvwh   1/1     Running   0          10m   172.18.1.161   cn-hangzhou.10.1.190.228   <none>
    alpine-57c9977fd6-wc89v   1/1     Running   0          10m   172.18.0.169   cn-hangzhou.10.1.190.229   <none>
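The split between regular and virtual nodes can also be counted rather than read off the table. This is a rough sketch under two assumptions: the virtual node is named `virtual-kubelet`, as in the output above, and the input has two columns (pod name, node name). `count_pods_by_node_type` is a hypothetical helper name.

```shell
# Hypothetical helper: read "POD NODE" pairs on stdin and print how many pods
# run on the virtual node versus regular nodes.
# Assumption: the virtual node is named "virtual-kubelet", as in this article.
count_pods_by_node_type() {
  awk '
    NF < 2 { next }                               # skip blank or incomplete lines
    $2 == "virtual-kubelet" { virtual++; next }   # second column is the node name
    { regular++ }
    END { printf "virtual=%d regular=%d\n", virtual, regular }
  '
}

# Example (requires cluster access):
#   kubectl get pods -n virtual --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName | count_pods_by_node_type
```

For the four pods listed above, this prints `virtual=2 regular=2`. Using `custom-columns` instead of the wide table keeps the node name in a fixed column regardless of how other columns are rendered.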

View the logs again

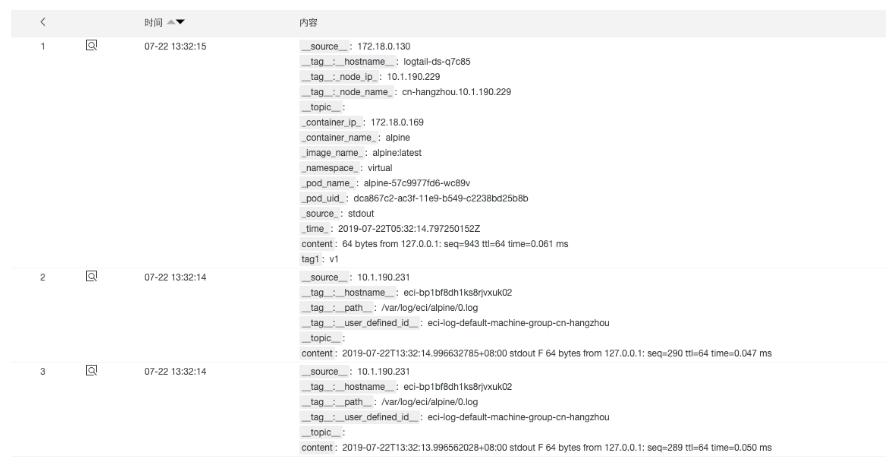

Open the test-stdout Logstore again. It now contains the stdout logs of both the regular containers and the ECI instances.

Note: Across different clusters under your account, different Logstores cannot be configured to collect ECI instance logs with the same matching rule, such as stdout. Within the same cluster, different Logstores cannot be configured to collect regular container logs and ECI instance logs with the same matching rule.

This article is original content of the Yunqi Community and may not be reproduced without permission.