Most explanations of Android's Touch event delivery start once the event has already reached the Activity; how the event gets to the Activity in the first place is usually left unclear. This article goes back and sorts out that earlier part of the path.

Previous articles in this series walked through the source code for how Views are created and loaded, and how they are drawn:

Hand-in-hand teaching you to read the source code, View loading process detailed analysis

Hand-in-hand teaching you to read the source code, View drawing process detailed analysis

Today we follow the Android source code to see how View Touch events are actually registered and received. Some experts have written about this before, but against fairly old source code, and working through it yourself gives a more thorough understanding. This article covers only the Java layer, not the underlying C++ layer.

The main job of the Android input system is to read raw events from the device nodes, process and wrap them, and then dispatch them to a specific window and to a control inside that window, such as a Button in the currently active Activity.

Android runs on the Linux kernel, and its event handling is built on top of Linux, so we will trace the general flow step by step from the kernel up to the application. First, let's look at how events are registered.

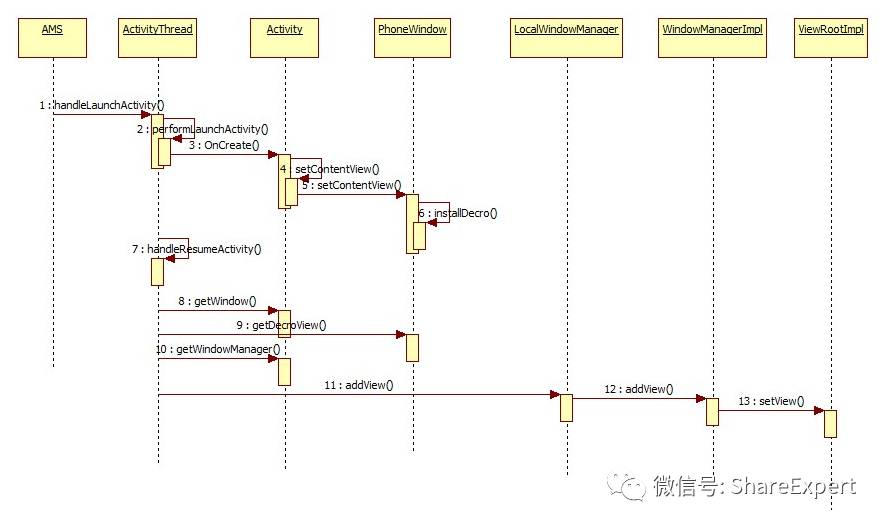

1. Event Registration

From the earlier source analysis of the View loading process (if it is not clear, it is worth reviewing that article first), we know that ActivityThread.handleResumeActivity calls wm.addView() to add the DecorView through the window manager. That call lands in WindowManagerImpl.addView, which simply delegates to its proxy, WindowManagerGlobal. WindowManagerGlobal.addView first creates a ViewRootImpl object and then calls ViewRootImpl.setView().
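The delegation chain described above can be modeled in plain Java. This is a minimal sketch, not AOSP code: the class names mirror the real ones, but their bodies are hypothetical simplifications that only record the call order (the real WindowManagerGlobal also tracks its views, roots and params lists, checks tokens, and so on).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, heavily simplified model of the addView delegation chain.
// Only the call order mirrors AOSP; none of these bodies are the real code.
class AddViewChainSketch {
    static final List<String> calls = new ArrayList<>();

    static class View {
        final String name;
        View(String name) { this.name = name; }
    }

    static class ViewRootImpl {
        View mView;
        void setView(View view) {
            calls.add("ViewRootImpl.setView(" + view.name + ")");
            mView = view; // the real setView also creates the InputChannel and input stages
        }
    }

    // Process-wide singleton, like WindowManagerGlobal.getInstance().
    static class WindowManagerGlobal {
        private static final WindowManagerGlobal INSTANCE = new WindowManagerGlobal();
        final List<ViewRootImpl> roots = new ArrayList<>();
        static WindowManagerGlobal getInstance() { return INSTANCE; }

        void addView(View view) {
            calls.add("WindowManagerGlobal.addView");
            ViewRootImpl root = new ViewRootImpl(); // one ViewRootImpl per top-level window
            roots.add(root);
            root.setView(view);
        }
    }

    // Stand-in for what Activity.getWindowManager() returns; it only delegates.
    static class WindowManagerImpl {
        private final WindowManagerGlobal mGlobal = WindowManagerGlobal.getInstance();
        void addView(View view) {
            calls.add("WindowManagerImpl.addView");
            mGlobal.addView(view);
        }
    }

    // Stand-in for ActivityThread.handleResumeActivity calling wm.addView(decor, l).
    static List<String> handleResumeActivity(String decorName) {
        calls.clear();
        new WindowManagerImpl().addView(new View(decorName));
        return new ArrayList<>(calls);
    }

    public static void main(String[] args) {
        System.out.println(handleResumeActivity("DecorView"));
    }
}
```

Running main prints the three hops in order, ending with ViewRootImpl.setView(DecorView), which is where the input-related setup discussed next begins.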

setView Method Call Sequence Diagram

The following code is found in the setView method:

```java
/**
 * We have one child
 */
public void setView(View view, WindowManager.LayoutParams attrs, View panelParentView) {
    synchronized (this) {
        if (mView == null) {
            mView = view;
            ...
            // Schedule the first layout -before- adding to the window
            // manager, to make sure we do the relayout before receiving
            // any other events from the system.
            requestLayout();
            if ((mWindowAttributes.inputFeatures
                    & WindowManager.LayoutParams.INPUT_FEATURE_NO_INPUT_CHANNEL) == 0) {
                mInputChannel = new InputChannel();
            }
            mForceDecorViewVisibility = (mWindowAttributes.privateFlags
                    & PRIVATE_FLAG_FORCE_DECOR_VIEW_VISIBILITY) != 0;
            try {
                mOrigWindowType = mWindowAttributes.type;
                mAttachInfo.mRecomputeGlobalAttributes = true;
                collectViewAttributes();
                res = mWindowSession.addToDisplay(mWindow, mSeq, mWindowAttributes,
                        getHostVisibility(), mDisplay.getDisplayId(),
                        mAttachInfo.mContentInsets, mAttachInfo.mStableInsets,
                        mAttachInfo.mOutsets, mInputChannel);
            } catch (RemoteException e) {
                mAdded = false;
                mView = null;
                mAttachInfo.mRootView = null;
                mInputChannel = null;
                mFallbackEventHandler.setView(null);
                unscheduleTraversals();
                setAccessibilityFocus(null, null);
                throw new RuntimeException("Adding window failed", e);
            } finally {
                if (restore) {
                    attrs.restore();
                }
            }
            ...
            if (view instanceof RootViewSurfaceTaker) {
                mInputQueueCallback =
                        ((RootViewSurfaceTaker)view).willYouTakeTheInputQueue();
            }
            if (mInputChannel != null) {
                if (mInputQueueCallback != null) {
                    mInputQueue = new InputQueue();
                    mInputQueueCallback.onInputQueueCreated(mInputQueue);
                }
                mInputEventReceiver = new WindowInputEventReceiver(mInputChannel,
                        Looper.myLooper());
            }
            view.assignParent(this);
            mAddedTouchMode = (res & WindowManagerGlobal.ADD_FLAG_IN_TOUCH_MODE) != 0;
            mAppVisible = (res & WindowManagerGlobal.ADD_FLAG_APP_VISIBLE) != 0;
            if (mAccessibilityManager.isEnabled()) {
                mAccessibilityInteractionConnectionManager.ensureConnection();
            }
            if (view.getImportantForAccessibility() == View.IMPORTANT_FOR_ACCESSIBILITY_AUTO) {
                view.setImportantForAccessibility(View.IMPORTANT_FOR_ACCESSIBILITY_YES);
            }

            // Set up the input pipeline.
            CharSequence counterSuffix = attrs.getTitle();
            mSyntheticInputStage = new SyntheticInputStage();
            InputStage viewPostImeStage = new ViewPostImeInputStage(mSyntheticInputStage);
            InputStage nativePostImeStage = new NativePostImeInputStage(viewPostImeStage,
                    "aq:native-post-ime:" + counterSuffix);
            InputStage earlyPostImeStage = new EarlyPostImeInputStage(nativePostImeStage);
            InputStage imeStage = new ImeInputStage(earlyPostImeStage,
                    "aq:ime:" + counterSuffix);
            InputStage viewPreImeStage = new ViewPreImeInputStage(imeStage);
            InputStage nativePreImeStage = new NativePreImeInputStage(viewPreImeStage,
                    "aq:native-pre-ime:" + counterSuffix);
            mFirstInputStage = nativePreImeStage;
            mFirstPostImeInputStage = earlyPostImeStage;
            mPendingInputEventQueueLengthCounterName = "aq:pending:" + counterSuffix;
        }
    }
}
```

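After requestLayout(), setView creates an InputChannel object (unless the window sets INPUT_FEATURE_NO_INPUT_CHANNEL) and then calls mWindowSession.addToDisplay(). Following that call into the Session class on the system side: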

```java
@Override
public int addToDisplay(IWindow window, int seq, WindowManager.LayoutParams attrs,
        int viewVisibility, int displayId, Rect outContentInsets, Rect outStableInsets,
        Rect outOutsets, InputChannel outInputChannel) {
    return mService.addWindow(this, window, seq, attrs, viewVisibility, displayId,
            outContentInsets, outStableInsets, outOutsets, outInputChannel);
}
```

This method simply forwards to the addWindow method of WindowManagerService:

```java
public int addWindow(Session session, IWindow client, int seq,
        WindowManager.LayoutParams attrs, int viewVisibility, int displayId,
        Rect outContentInsets, Rect outStableInsets, Rect outOutsets,
        InputChannel outInputChannel) {
    int[] appOp = new int[1];
    int res = mPolicy.checkAddPermission(attrs, appOp);
    if (res != WindowManagerGlobal.ADD_OKAY) {
        return res;
    }

    boolean reportNewConfig = false;
    WindowState attachedWindow = null;
    long origId;
    final int callingUid = Binder.getCallingUid();
    final int type = attrs.type;

    synchronized(mWindowMap) {
        ...
        WindowState win = new WindowState(this, session, client, token,
                attachedWindow, appOp[0], seq, attrs, viewVisibility, displayContent);
        if (win.mDeathRecipient == null) {
            // Client has apparently died, so there is no reason to
            // continue.
            Slog.w(TAG_WM, "Adding window client " + client.asBinder()
                    + " that is dead, aborting.");
            return WindowManagerGlobal.ADD_APP_EXITING;
        }

        if (win.getDisplayContent() == null) {
            Slog.w(TAG_WM, "Adding window to Display that has been removed.");
            return WindowManagerGlobal.ADD_INVALID_DISPLAY;
        }

        mPolicy.adjustWindowParamsLw(win.mAttrs);
        win.setShowToOwnerOnlyLocked(mPolicy.checkShowToOwnerOnly(attrs));

        res = mPolicy.prepareAddWindowLw(win, attrs);
        if (res != WindowManagerGlobal.ADD_OKAY) {
            return res;
        }

        final boolean openInputChannels = (outInputChannel != null
                && (attrs.inputFeatures & INPUT_FEATURE_NO_INPUT_CHANNEL) == 0);
        if (openInputChannels) {
            win.openInputChannel(outInputChannel);
        }
        ...
    }

    if (reportNewConfig) {
        sendNewConfiguration();
    }

    Binder.restoreCallingIdentity(origId);

    return res;
}
```

This method first creates a WindowState object and then, when the caller passed an InputChannel, calls its openInputChannel method:

```java
void openInputChannel(InputChannel outInputChannel) {
    if (mInputChannel != null) {
        throw new IllegalStateException("Window already has an input channel.");
    }
    String name = makeInputChannelName();
    InputChannel[] inputChannels = InputChannel.openInputChannelPair(name);
    mInputChannel = inputChannels[0];
    mClientChannel = inputChannels[1];
    mInputWindowHandle.inputChannel = inputChannels[0];
    if (outInputChannel != null) {
        mClientChannel.transferTo(outInputChannel);
        mClientChannel.dispose();
        mClientChannel = null;
    } else {
        // If the window died visible, we setup a dummy input channel, so that taps
        // can still be detected by input monitor channel, and we can relaunch the app.
        // Create dummy event receiver that simply reports all events as handled.
        mDeadWindowEventReceiver = new DeadWindowEventReceiver(mClientChannel);
    }
    mService.mInputManager.registerInputChannel(mInputChannel, mInputWindowHandle);
}
```

The main purpose of this code is to create a pair of InputChannels, which together form a full-duplex connection. Of the pair, inputChannels[0] stays on the WindowManagerService side and is registered with the input manager through registerInputChannel, while inputChannels[1] (the client channel) is transferred into outInputChannel; this is what initializes the InputChannel object on the ViewRootImpl side. Once InputChannel objects exist at both ends, in ViewRootImpl and in WindowManagerService, the communication channel of the event delivery system is established. The native details behind it are not the focus here and may be analyzed in a later article.
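To make the "pair of channels" idea concrete, here is an in-memory model. It is only an analogy and assumes nothing about the real native implementation (which, in the version analyzed here, is a Unix-domain socket pair created in native code): each end can both send and receive, the server end stays in the system process, and the client end is handed to the app, much as openInputChannelPair and transferTo do above. The `Endpoint` and `openPair` names are hypothetical.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-memory model of a full-duplex channel pair.
// Two one-way queues wired crosswise give each endpoint both directions.
class ChannelPairSketch {
    static final class Endpoint {
        final String name;
        private final BlockingQueue<String> inbox;
        private final BlockingQueue<String> peerInbox;

        Endpoint(String name, BlockingQueue<String> inbox, BlockingQueue<String> peerInbox) {
            this.name = name;
            this.inbox = inbox;
            this.peerInbox = peerInbox;
        }

        void send(String message) {
            peerInbox.add(message); // delivered to the other end
        }

        String receive() {
            try {
                return inbox.take(); // blocks until the other end sends
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
    }

    // Loosely analogous to InputChannel.openInputChannelPair(name).
    static Endpoint[] openPair(String name) {
        BlockingQueue<String> a = new LinkedBlockingQueue<>();
        BlockingQueue<String> b = new LinkedBlockingQueue<>();
        Endpoint server = new Endpoint(name + " (server)", a, b);
        Endpoint client = new Endpoint(name + " (client)", b, a);
        return new Endpoint[] {server, client};
    }

    public static void main(String[] args) {
        Endpoint[] pair = openPair("demo-window");
        Endpoint server = pair[0]; // kept by the system side (like WindowState.mInputChannel)
        Endpoint client = pair[1]; // handed to the app (like outInputChannel in ViewRootImpl)

        server.send("MotionEvent ACTION_DOWN");
        System.out.println(client.receive());
        client.send("finished seq=1 handled=true");
        System.out.println(server.receive());
    }
}
```

The reverse direction matters in the real system too: the app reports back whether each event was handled (finishInputEvent), which is why a one-way pipe would not be enough.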

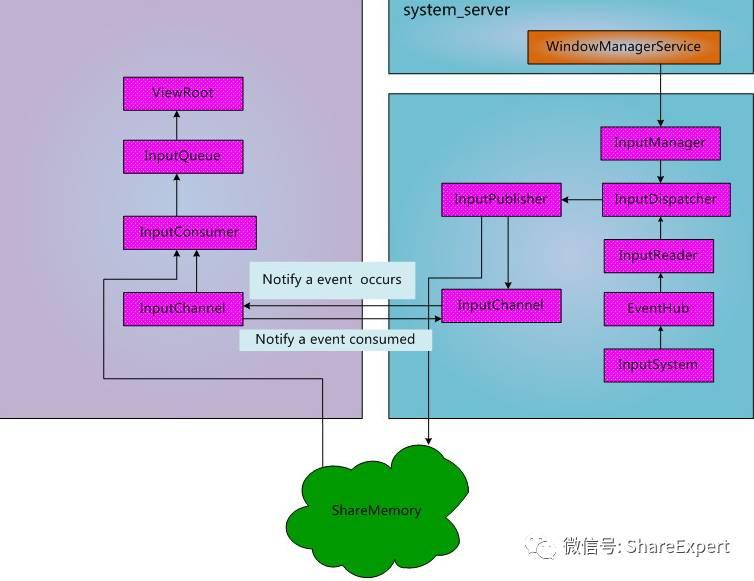

Key presses, touches and other input events are captured on the system_server side (by InputManagerService, which relies on WindowManagerService to know the target window) and passed to ViewRootImpl through this channel; ViewRootImpl then dispatches them into the application. In other words, when a hardware device produces input, system_server detects it and notifies ViewRootImpl through the channel, and ViewRootImpl then reads the event data. (In the version analyzed here the channel is a Unix-domain socket pair; older versions used shared memory plus a pipe.)
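The "notify through a file descriptor, then read" pattern can be tried with plain java.nio. This sketch uses a java.nio.channels.Pipe and a Selector as a stand-in for the descriptor the app's Looper watches; it is an analogy only (in Android the path is native: the Looper watches the InputChannel's fd and NativeInputEventReceiver reads the message). The `deliverOnce` helper is hypothetical.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

// Analogy: a "dispatcher" thread writes an event into a pipe; the "app thread"
// sleeps in select() until the read end becomes readable, then reads it.
class PipeWakeupSketch {
    static String deliverOnce(String event) throws IOException, InterruptedException {
        Pipe pipe = Pipe.open();
        try (Selector selector = Selector.open();
             Pipe.SourceChannel source = pipe.source();
             Pipe.SinkChannel sink = pipe.sink()) {
            source.configureBlocking(false);
            source.register(selector, SelectionKey.OP_READ);

            // "system_server side": write the event from another thread.
            Thread dispatcher = new Thread(() -> {
                try {
                    sink.write(ByteBuffer.wrap(event.getBytes(StandardCharsets.UTF_8)));
                } catch (IOException e) {
                    throw new RuntimeException(e);
                }
            });
            dispatcher.start();

            // "app side": block until the pipe is readable (like a Looper waiting on an fd).
            selector.select();
            dispatcher.join(); // make sure the whole message has been written
            ByteBuffer buf = ByteBuffer.allocate(256);
            source.read(buf);
            buf.flip();
            return StandardCharsets.UTF_8.decode(buf).toString();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(deliverOnce("ACTION_DOWN x=10 y=20"));
    }
}
```

The point of the design is that the receiving thread does no polling: it sleeps until the kernel reports the descriptor as readable, which is also how the UI thread's Looper can service input and other messages on one thread.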

The following structural diagram, found online, is a classic one. Some of its class names are out of date: InputManager has since become InputManagerService, and ViewRoot has become ViewRootImpl.

Android Event Processing Structural Diagram

2. Event Reception

From the analysis above, the channel for event delivery is set up during addView. When the Linux kernel detects an input event, it is passed up layer by layer until it reaches ViewRootImpl. Next we analyze how the event travels from the native layer to the Activity.

Back in ViewRootImpl.setView: near the end of the method, it first creates a receiver object, mInputEventReceiver, bound to the InputChannel created for the current window, and finally builds the chain of InputStage objects that each incoming input event passes through in turn, with each stage handling the kinds of events it is responsible for.

As analyzed above, two InputChannels are created; one of them is transferred to the current top-level ViewRootImpl, which wraps it in the mInputEventReceiver object. Let's follow this thread:

```java
final class WindowInputEventReceiver extends InputEventReceiver {
    public WindowInputEventReceiver(InputChannel inputChannel, Looper looper) {
        super(inputChannel, looper);
    }

    @Override
    public void onInputEvent(InputEvent event) {
        enqueueInputEvent(event, this, 0, true);
    }

    @Override
    public void onBatchedInputEventPending() {
        if (mUnbufferedInputDispatch) {
            super.onBatchedInputEventPending();
        } else {
            scheduleConsumeBatchedInput();
        }
    }

    @Override
    public void dispose() {
        unscheduleConsumeBatchedInput();
        super.dispose();
    }
}

WindowInputEventReceiver mInputEventReceiver;
```

WindowInputEventReceiver extends the InputEventReceiver class:

```java
/**
 * Provides a low-level mechanism for an application to receive input events.
 * @hide
 */
public abstract class InputEventReceiver {
    ...
    /**
     * Called when an input event is received.
     * The recipient should process the input event and then call {@link #finishInputEvent}
     * to indicate whether the event was handled.  No new input events will be received
     * until {@link #finishInputEvent} is called.
     *
     * @param event The input event that was received.
     */
    public void onInputEvent(InputEvent event) {
        finishInputEvent(event, false);
    }
    ...
    // Called from native code.
    @SuppressWarnings("unused")
    private void dispatchInputEvent(int seq, InputEvent event) {
        mSeqMap.put(event.getSequenceNumber(), seq);
        onInputEvent(event);
    }

    // Called from native code.
    @SuppressWarnings("unused")
    private void dispatchBatchedInputEventPending() {
        onBatchedInputEventPending();
    }

    public static interface Factory {
        public InputEventReceiver createInputEventReceiver(
                InputChannel inputChannel, Looper looper);
    }
}
```

Notice the dispatchInputEvent method of InputEventReceiver: native code calls it when an input event arrives, and it records the event's sequence number and then calls onInputEvent(event). Since WindowInputEventReceiver overrides onInputEvent, the event is handed to ViewRootImpl's enqueueInputEvent method.
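The hand-off from native code into enqueueInputEvent is a plain template-method pattern: the base class owns the entry point and a default "not handled" behavior, and the subclass overrides one hook. A minimal sketch, assuming simplified stand-in types (integers for sequence numbers and event ids, strings for events); `ReceiverBase`, `WindowReceiver` and the `finished` list are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch of the InputEventReceiver -> WindowInputEventReceiver hand-off.
class ReceiverSketch {
    static final List<String> finished = new ArrayList<>();

    abstract static class ReceiverBase {
        // event id -> native sequence number, like mSeqMap
        final Map<Integer, Integer> seqMap = new HashMap<>();

        // In AOSP the equivalent entry point is private and "called from native code".
        final void dispatchInputEvent(int seq, int eventId, String event) {
            seqMap.put(eventId, seq);
            onInputEvent(eventId, event);
        }

        // Default behavior: report the event as not handled.
        void onInputEvent(int eventId, String event) {
            finishInputEvent(eventId, false);
        }

        final void finishInputEvent(int eventId, boolean handled) {
            Integer seq = seqMap.remove(eventId);
            finished.add("seq=" + seq + " handled=" + handled);
        }
    }

    // Mirrors WindowInputEventReceiver: overrides onInputEvent to enqueue instead.
    static final class WindowReceiver extends ReceiverBase {
        final List<String> queue = new ArrayList<>();

        @Override
        void onInputEvent(int eventId, String event) {
            queue.add(event); // stands in for enqueueInputEvent(event, this, 0, true)
        }
    }

    public static void main(String[] args) {
        WindowReceiver r = new WindowReceiver();
        r.dispatchInputEvent(7, 1, "ACTION_DOWN");
        System.out.println(r.queue);         // [ACTION_DOWN]
        System.out.println(r.seqMap.get(1)); // 7
    }
}
```

In this model, as in the real class, the sequence number stays in the map until finishInputEvent is called, which is how the receiver can later tell the sender which event it is acknowledging.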

```java
void enqueueInputEvent(InputEvent event,
        InputEventReceiver receiver, int flags, boolean processImmediately) {
    adjustInputEventForCompatibility(event);
    QueuedInputEvent q = obtainQueuedInputEvent(event, receiver, flags);

    // Always enqueue the input event in order, regardless of its time stamp.
    // We do this because the application or the IME may inject key events
    // in response to touch events and we want to ensure that the injected keys
    // are processed in the order they were received and we cannot trust that
    // the time stamp of injected events are monotonic.
    QueuedInputEvent last = mPendingInputEventTail;
    if (last == null) {
        mPendingInputEventHead = q;
        mPendingInputEventTail = q;
    } else {
        last.mNext = q;
        mPendingInputEventTail = q;
    }
    mPendingInputEventCount += 1;
    Trace.traceCounter(Trace.TRACE_TAG_INPUT, mPendingInputEventQueueLengthCounterName,
            mPendingInputEventCount);

    if (processImmediately) {
        doProcessInputEvents();
    } else {
        scheduleProcessInputEvents();
    }
}
```

This method first wraps the event in a QueuedInputEvent object and appends it to the tail of the pending input event queue. Because WindowInputEventReceiver passes processImmediately = true, it then calls doProcessInputEvents directly:

```java
void doProcessInputEvents() {
    // Deliver all pending input events in the queue.
    while (mPendingInputEventHead != null) {
        QueuedInputEvent q = mPendingInputEventHead;
        mPendingInputEventHead = q.mNext;
        if (mPendingInputEventHead == null) {
            mPendingInputEventTail = null;
        }
        q.mNext = null;

        mPendingInputEventCount -= 1;
        Trace.traceCounter(Trace.TRACE_TAG_INPUT, mPendingInputEventQueueLengthCounterName,
                mPendingInputEventCount);

        long eventTime = q.mEvent.getEventTimeNano();
        long oldestEventTime = eventTime;
        if (q.mEvent instanceof MotionEvent) {
            MotionEvent me = (MotionEvent)q.mEvent;
            if (me.getHistorySize() > 0) {
                oldestEventTime = me.getHistoricalEventTimeNano(0);
            }
        }
        mChoreographer.mFrameInfo.updateInputEventTime(eventTime, oldestEventTime);

        deliverInputEvent(q);
    }

    // We are done processing all input events that we can process right now
    // so we can clear the pending flag immediately.
    if (mProcessInputEventsScheduled) {
        mProcessInputEventsScheduled = false;
        mHandler.removeMessages(MSG_PROCESS_INPUT_EVENTS);
    }
}
```
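Stripped of tracing and frame-timing bookkeeping, enqueueInputEvent and doProcessInputEvents maintain a FIFO singly linked list with head and tail pointers. A minimal sketch under that simplification (`Node` stands in for QueuedInputEvent and the `deliver` callback for deliverInputEvent; both are hypothetical):

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Simplified model of ViewRootImpl's pending input event queue.
class PendingQueueSketch {
    static final class Node {
        final String event;
        Node next;
        Node(String event) { this.event = event; }
    }

    private Node head;
    private Node tail;
    private int count;

    // Like enqueueInputEvent: always append at the tail, regardless of timestamp.
    void enqueue(String event, boolean processImmediately, Consumer<String> deliver) {
        Node q = new Node(event);
        if (tail == null) {
            head = q;
            tail = q;
        } else {
            tail.next = q;
            tail = q;
        }
        count++;
        if (processImmediately) {
            drain(deliver);
        } // otherwise the real code posts MSG_PROCESS_INPUT_EVENTS to its Handler
    }

    // Like doProcessInputEvents: pop from the head until the queue is empty.
    void drain(Consumer<String> deliver) {
        while (head != null) {
            Node q = head;
            head = q.next;
            if (head == null) {
                tail = null;
            }
            q.next = null;
            count--;
            deliver.accept(q.event);
        }
    }

    int size() { return count; }

    public static void main(String[] args) {
        PendingQueueSketch queue = new PendingQueueSketch();
        List<String> delivered = new ArrayList<>();
        queue.enqueue("DOWN", false, delivered::add);
        queue.enqueue("MOVE", false, delivered::add);
        queue.enqueue("UP", true, delivered::add);
        System.out.println(delivered + " pending=" + queue.size()); // [DOWN, MOVE, UP] pending=0
    }
}
```

Keeping a tail pointer makes each append O(1), and draining from the head preserves arrival order, which is exactly the guarantee the comment in enqueueInputEvent asks for.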

While the pending queue still contains events, doProcessInputEvents removes them one at a time from the head and calls deliverInputEvent on each:

```java
private void deliverInputEvent(QueuedInputEvent q) {
    Trace.asyncTraceBegin(Trace.TRACE_TAG_VIEW, "deliverInputEvent",
            q.mEvent.getSequenceNumber());
    if (mInputEventConsistencyVerifier != null) {
        mInputEventConsistencyVerifier.onInputEvent(q.mEvent, 0);
    }

    InputStage stage;
    if (q.shouldSendToSynthesizer()) {
        stage = mSyntheticInputStage;
    } else {
        stage = q.shouldSkipIme() ? mFirstPostImeInputStage : mFirstInputStage;
    }

    if (stage != null) {
        stage.deliver(q);
    } else {
        finishInputEvent(q);
    }
}
```

The starting stage is chosen based on q.shouldSendToSynthesizer() and q.shouldSkipIme(), and then that stage's deliver method is called. InputStage itself is defined as follows:

```java
/**
 * Base class for implementing a stage in the chain of responsibility
 * for processing input events.
 * <p>
 * Events are delivered to the stage by the {@link #deliver} method.  The stage
 * then has the choice of finishing the event or forwarding it to the next stage.
 * </p>
 */
abstract class InputStage {
    private final InputStage mNext;

    protected static final int FORWARD = 0;
    protected static final int FINISH_HANDLED = 1;
    protected static final int FINISH_NOT_HANDLED = 2;

    /**
     * Creates an input stage.
     * @param next The next stage to which events should be forwarded.
     */
    public InputStage(InputStage next) {
        mNext = next;
    }

    /**
     * Delivers an event to be processed.
     */
    public final void deliver(QueuedInputEvent q) {
        if ((q.mFlags & QueuedInputEvent.FLAG_FINISHED) != 0) {
            forward(q);
        } else if (shouldDropInputEvent(q)) {
            finish(q, false);
        } else {
            apply(q, onProcess(q));
        }
    }

    /**
     * Marks the input event as finished then forwards it to the next stage.
     */
    protected void finish(QueuedInputEvent q, boolean handled) {
        q.mFlags |= QueuedInputEvent.FLAG_FINISHED;
        if (handled) {
            q.mFlags |= QueuedInputEvent.FLAG_FINISHED_HANDLED;
        }
        forward(q);
    }

    /**
     * Forwards the event to the next stage.
     */
    protected void forward(QueuedInputEvent q) {
        onDeliverToNext(q);
    }

    /**
     * Applies a result code from {@link #onProcess} to the specified event.
     */
    protected void apply(QueuedInputEvent q, int result) {
        if (result == FORWARD) {
            forward(q);
        } else if (result == FINISH_HANDLED) {
            finish(q, true);
        } else if (result == FINISH_NOT_HANDLED) {
            finish(q, false);
        } else {
            throw new IllegalArgumentException("Invalid result: " + result);
        }
    }

    /**
     * Called when an event is ready to be processed.
     * @return A result code indicating how the event was handled.
     */
    protected int onProcess(QueuedInputEvent q) {
        return FORWARD;
    }

    /**
     * Called when an event is being delivered to the next stage.
     */
    protected void onDeliverToNext(QueuedInputEvent q) {
        if (DEBUG_INPUT_STAGES) {
            Log.v(mTag, "Done with " + getClass().getSimpleName() + ". " + q);
        }
        if (mNext != null) {
            mNext.deliver(q);
        } else {
            finishInputEvent(q);
        }
    }

    protected boolean shouldDropInputEvent(QueuedInputEvent q) {
        if (mView == null || !mAdded) {
            Slog.w(mTag, "Dropping event due to root view being removed: " + q.mEvent);
            return true;
        } else if ((!mAttachInfo.mHasWindowFocus
                && !q.mEvent.isFromSource(InputDevice.SOURCE_CLASS_POINTER)) || mStopped
                || (mIsAmbientMode && !q.mEvent.isFromSource(InputDevice.SOURCE_CLASS_BUTTON))
                || (mPausedForTransition && !isBack(q.mEvent))) {
            // This is a focus event and the window doesn't currently have input focus or
            // has stopped. This could be an event that came back from the previous stage
            // but the window has lost focus or stopped in the meantime.
            if (isTerminalInputEvent(q.mEvent)) {
                // Don't drop terminal input events, however mark them as canceled.
                q.mEvent.cancel();
                Slog.w(mTag, "Cancelling event due to no window focus: " + q.mEvent);
                return false;
            }

            // Drop non-terminal input events.
            Slog.w(mTag, "Dropping event due to no window focus: " + q.mEvent);
            return true;
        }
        return false;
    }

    void dump(String prefix, PrintWriter writer) {
        if (mNext != null) {
            mNext.dump(prefix, writer);
        }
    }

    private boolean isBack(InputEvent event) {
        if (event instanceof KeyEvent) {
            return ((KeyEvent) event).getKeyCode() == KeyEvent.KEYCODE_BACK;
        } else {
            return false;
        }
    }
}
```

This should look familiar: these are the stage objects that setView builds at the end of the method to handle the different types of input events. setView creates six InputStage subclasses, namely NativePreImeInputStage, ViewPreImeInputStage, ImeInputStage, EarlyPostImeInputStage, NativePostImeInputStage and ViewPostImeInputStage, plus a SyntheticInputStage at the tail, and links them through mNext in that order. Each stage's onProcess either finishes the event or forwards it: an event a stage does not handle is passed on to the next stage, and once an event has been finished, the remaining stages simply forward it without processing it again.
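The chain-of-responsibility logic of InputStage (deliver, apply, finish, forward) can be exercised outside Android. A minimal sketch: the stage tags and the `handles` predicates are hypothetical, the drop check (shouldDropInputEvent) is left out, and the deferral that some real stages (such as the IME stage) perform is not modeled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Standalone model of ViewRootImpl.InputStage's chain of responsibility.
class StageChainSketch {
    static final int FORWARD = 0;
    static final int FINISH_HANDLED = 1;
    static final int FINISH_NOT_HANDLED = 2;

    static final class Event {
        final String name;
        boolean finished;
        boolean handled;
        final List<String> trace = new ArrayList<>(); // stages that ran onProcess
        Event(String name) { this.name = name; }
    }

    static class Stage {
        private final String tag;
        private final Stage next;
        private final Predicate<Event> handles;

        Stage(String tag, Stage next, Predicate<Event> handles) {
            this.tag = tag;
            this.next = next;
            this.handles = handles;
        }

        final void deliver(Event q) {
            if (q.finished) {
                forward(q); // already finished: just pass it along
            } else {
                apply(q, onProcess(q));
            }
        }

        int onProcess(Event q) {
            q.trace.add(tag);
            return handles.test(q) ? FINISH_HANDLED : FORWARD;
        }

        void apply(Event q, int result) {
            if (result == FORWARD) {
                forward(q);
            } else if (result == FINISH_HANDLED) {
                finish(q, true);
            } else if (result == FINISH_NOT_HANDLED) {
                finish(q, false);
            } else {
                throw new IllegalArgumentException("Invalid result: " + result);
            }
        }

        void finish(Event q, boolean handled) {
            q.finished = true;
            q.handled |= handled;
            forward(q);
        }

        void forward(Event q) {
            if (next != null) {
                next.deliver(q);
            } else {
                q.finished = true; // end of chain, like finishInputEvent(q)
            }
        }
    }

    // Same order as the chain built at the end of setView.
    static Stage buildChain(Predicate<Event> viewPostImeHandles) {
        Predicate<Event> none = e -> false;
        Stage synthetic = new Stage("Synthetic", null, none);
        Stage viewPostIme = new Stage("ViewPostIme", synthetic, viewPostImeHandles);
        Stage nativePostIme = new Stage("NativePostIme", viewPostIme, none);
        Stage earlyPostIme = new Stage("EarlyPostIme", nativePostIme, none);
        Stage ime = new Stage("Ime", earlyPostIme, none);
        Stage viewPreIme = new Stage("ViewPreIme", ime, none);
        return new Stage("NativePreIme", viewPreIme, none); // like mFirstInputStage
    }

    public static void main(String[] args) {
        Event touch = new Event("touch");
        buildChain(e -> e.name.equals("touch")).deliver(touch);
        System.out.println(touch.trace + " handled=" + touch.handled);
    }
}
```

In this model a touch handled by the ViewPostIme stage is processed by six stages; the Synthetic stage only forwards it because it is already finished. An event nobody handles runs through all seven and ends up finished but not handled.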

Here we are analyzing Touch events, so we only focus on ViewPostImeInputStage.

```java
/**
 * Delivers post-ime input events to the view hierarchy.
 */
final class ViewPostImeInputStage extends InputStage {
    public ViewPostImeInputStage(InputStage next) {
        super(next);
    }

    @Override
    protected int onProcess(QueuedInputEvent q) {
        if (q.mEvent instanceof KeyEvent) {
            return processKeyEvent(q);
        } else {
            final int source = q.mEvent.getSource();
            if ((source & InputDevice.SOURCE_CLASS_POINTER) != 0) {
                return processPointerEvent(q);
            } else if ((source & InputDevice.SOURCE_CLASS_TRACKBALL) != 0) {
                return processTrackballEvent(q);
            } else {
                return processGenericMotionEvent(q);
            }
        }
    }
    ...
}
```

For touch events, (source & InputDevice.SOURCE_CLASS_POINTER) != 0 is true, so processPointerEvent is called.
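The routing in ViewPostImeInputStage.onProcess is a bitmask test on the event's source. The sketch below reproduces that test using constant values that mirror android.view.InputDevice (SOURCE_CLASS_POINTER = 0x2, SOURCE_CLASS_TRACKBALL = 0x4, SOURCE_TOUCHSCREEN = 0x1002, SOURCE_MOUSE = 0x2002, SOURCE_TRACKBALL = 0x10004); the `route` method and its string results are hypothetical, and key events (checked first with instanceof) are omitted.

```java
// Reproduces the source-class test from ViewPostImeInputStage.onProcess.
// Constant values mirror android.view.InputDevice; route() is a hypothetical helper.
class SourceRoutingSketch {
    static final int SOURCE_CLASS_POINTER = 0x00000002;
    static final int SOURCE_CLASS_TRACKBALL = 0x00000004;
    static final int SOURCE_CLASS_JOYSTICK = 0x00000010;

    // A concrete source = device-specific bits | its class bit.
    static final int SOURCE_TOUCHSCREEN = 0x00001000 | SOURCE_CLASS_POINTER;
    static final int SOURCE_MOUSE = 0x00002000 | SOURCE_CLASS_POINTER;
    static final int SOURCE_TRACKBALL = 0x00010000 | SOURCE_CLASS_TRACKBALL;
    static final int SOURCE_JOYSTICK = 0x01000000 | SOURCE_CLASS_JOYSTICK;

    static String route(int source) {
        if ((source & SOURCE_CLASS_POINTER) != 0) {
            return "processPointerEvent";
        } else if ((source & SOURCE_CLASS_TRACKBALL) != 0) {
            return "processTrackballEvent";
        } else {
            return "processGenericMotionEvent";
        }
    }

    public static void main(String[] args) {
        System.out.println(route(SOURCE_TOUCHSCREEN)); // processPointerEvent
        System.out.println(route(SOURCE_MOUSE));       // processPointerEvent
        System.out.println(route(SOURCE_TRACKBALL));   // processTrackballEvent
        System.out.println(route(SOURCE_JOYSTICK));    // processGenericMotionEvent
    }
}
```

Touchscreen and mouse share the pointer class bit, which is why both reach processPointerEvent; the mouse case is then singled out inside it by the SOURCE_MOUSE check on mCapturingView.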

```java
private int processPointerEvent(QueuedInputEvent q) {
    final MotionEvent event = (MotionEvent)q.mEvent;

    mAttachInfo.mUnbufferedDispatchRequested = false;
    final View eventTarget =
            (event.isFromSource(InputDevice.SOURCE_MOUSE) && mCapturingView != null) ?
                    mCapturingView : mView;
    mAttachInfo.mHandlingPointerEvent = true;
    boolean handled = eventTarget.dispatchPointerEvent(event);
    maybeUpdatePointerIcon(event);
    mAttachInfo.mHandlingPointerEvent = false;
    if (mAttachInfo.mUnbufferedDispatchRequested && !mUnbufferedInputDispatch) {
        mUnbufferedInputDispatch = true;
        if (mConsumeBatchedInputScheduled) {
            scheduleConsumeBatchedInputImmediately();
        }
    }
    return handled ? FINISH_HANDLED : FORWARD;
}
```

Here mView is the window's top-level view, the DecorView (unless a mouse event is being captured by mCapturingView), so next we look at View.dispatchPointerEvent:

```java
/**
 * Dispatch a pointer event.
 * <p>
 * Dispatches touch related pointer events to {@link #onTouchEvent(MotionEvent)} and all
 * other events to {@link #onGenericMotionEvent(MotionEvent)}.  This separation of concerns
 * reinforces the invariant that {@link #onTouchEvent(MotionEvent)} is really about touches
 * and should not be expected to handle other pointing device features.
 * </p>
 *
 * @param event The motion event to be dispatched.
 * @return True if the event was handled by the view, false otherwise.
 * @hide
 */
public final boolean dispatchPointerEvent(MotionEvent event) {
    if (event.isTouchEvent()) {
        return dispatchTouchEvent(event);
    } else {
        return dispatchGenericMotionEvent(event);
    }
}
```

For touch events, dispatchTouchEvent is called here. Since DecorView overrides this method, we continue with DecorView's dispatchTouchEvent:

```java
@Override
public boolean dispatchTouchEvent(MotionEvent ev) {
    final Window.Callback cb = mWindow.getCallback();
    return cb != null && !mWindow.isDestroyed() && mFeatureId < 0
            ? cb.dispatchTouchEvent(ev) : super.dispatchTouchEvent(ev);
}
```

There is a check here: when cb != null, the window has not been destroyed, and mFeatureId < 0, cb.dispatchTouchEvent(ev) is executed; otherwise super.dispatchTouchEvent(ev) is executed, which is the dispatchTouchEvent method that FrameLayout inherits from ViewGroup.
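The branch in DecorView.dispatchTouchEvent can be restated as a small decision function. This is a plain-Java sketch with hypothetical stand-ins (`Callback`, `dispatch` and the string results are not Android types); it only reproduces the three-part condition. For context, PhoneWindow creates the main window's DecorView with a feature id of -1, so the callback path is the one taken for an ordinary Activity window.

```java
// Sketch of the condition in DecorView.dispatchTouchEvent, outside Android.
// Callback stands in for Window.Callback; everything here is hypothetical.
class DecorDispatchSketch {
    interface Callback {
        boolean dispatchTouchEvent(String ev);
    }

    static String dispatch(Callback cb, boolean windowDestroyed, int featureId, String ev) {
        if (cb != null && !windowDestroyed && featureId < 0) {
            cb.dispatchTouchEvent(ev); // main window: hand the event to the Activity (or Dialog)
            return "callback";
        }
        return "ViewGroup"; // super.dispatchTouchEvent(ev): ordinary view-tree dispatch
    }

    public static void main(String[] args) {
        Callback activity = ev -> true;
        System.out.println(dispatch(activity, false, -1, "DOWN")); // callback
        System.out.println(dispatch(activity, false, 0, "DOWN"));  // ViewGroup
        System.out.println(dispatch(activity, true, -1, "DOWN"));  // ViewGroup
        System.out.println(dispatch(null, false, -1, "DOWN"));     // ViewGroup
    }
}
```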

The cb object is obtained from mWindow.getCallback(), where mWindow is the PhoneWindow object. The callback is assigned in Activity.attach: right after the PhoneWindow is created, attach calls mWindow.setCallback(this):

```java
final void attach(Context context, ActivityThread aThread,
        Instrumentation instr, IBinder token, int ident,
        Application application, Intent intent, ActivityInfo info,
        CharSequence title, Activity parent, String id,
        NonConfigurationInstances lastNonConfigurationInstances,
        Configuration config, String referrer, IVoiceInteractor voiceInteractor,
        Window window) {
    attachBaseContext(context);

    mFragments.attachHost(null /*parent*/);

    mWindow = new PhoneWindow(this, window);
    mWindow.setWindowControllerCallback(this);
    mWindow.setCallback(this);
    mWindow.setOnWindowDismissedCallback(this);
    mWindow.getLayoutInflater().setPrivateFactory(this);
    if (info.softInputMode != WindowManager.LayoutParams.SOFT_INPUT_STATE_UNSPECIFIED) {
        mWindow.setSoftInputMode(info.softInputMode);
    }
    ...
}
```

So cb.dispatchTouchEvent(ev) here is the dispatchTouchEvent method of the Activity class.
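The wiring is ordinary callback registration: attach stores the Activity in the window, and DecorView later reads it back with getCallback. A minimal sketch with hypothetical stand-in classes (`FakeWindow`, `FakeDecorView`, `FakeActivity`); the destroyed and feature-id checks are omitted. It also shows how the event re-enters the view tree: in AOSP, Activity.dispatchTouchEvent calls getWindow().superDispatchTouchEvent(ev), which ends up in DecorView's superDispatchTouchEvent and from there in ViewGroup.dispatchTouchEvent.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins showing how the Activity becomes DecorView's callback.
class CallbackWiringSketch {
    static final List<String> log = new ArrayList<>();

    interface Callback {
        boolean dispatchTouchEvent(String ev);
    }

    static final class FakeWindow {
        private Callback callback;
        final FakeDecorView decor = new FakeDecorView(this);

        void setCallback(Callback cb) { callback = cb; }
        Callback getCallback() { return callback; }

        // Like PhoneWindow.superDispatchTouchEvent: go back into the view tree.
        boolean superDispatchTouchEvent(String ev) {
            return decor.superDispatchTouchEvent(ev);
        }
    }

    static final class FakeDecorView {
        private final FakeWindow window;
        FakeDecorView(FakeWindow window) { this.window = window; }

        // Entry point reached from ViewRootImpl via dispatchPointerEvent.
        boolean dispatchTouchEvent(String ev) {
            Callback cb = window.getCallback();
            return cb != null ? cb.dispatchTouchEvent(ev) : superDispatchTouchEvent(ev);
        }

        // Stands in for ViewGroup.dispatchTouchEvent on the child views.
        boolean superDispatchTouchEvent(String ev) {
            log.add("ViewGroup.dispatchTouchEvent(" + ev + ")");
            return true;
        }
    }

    static final class FakeActivity implements Callback {
        FakeWindow mWindow;

        void attach() {
            mWindow = new FakeWindow();
            mWindow.setCallback(this); // same idea as mWindow.setCallback(this) in Activity.attach
        }

        @Override
        public boolean dispatchTouchEvent(String ev) {
            log.add("Activity.dispatchTouchEvent(" + ev + ")");
            return mWindow.superDispatchTouchEvent(ev); // real code falls back to onTouchEvent if false
        }
    }

    public static void main(String[] args) {
        FakeActivity activity = new FakeActivity();
        activity.attach();
        activity.mWindow.decor.dispatchTouchEvent("DOWN");
        System.out.println(log);
    }
}
```

Running main logs the Activity first and the view tree second, which is the round trip DecorView -> Activity -> DecorView's ViewGroup dispatch.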

Once the Touch event reaches the Activity, what follows is the familiar Activity-level event dispatch process. For reasons of space, that will be analyzed in detail in the next article.


Copyright of this article is owned by Share Expert. If you reproduce this article, please credit the source.