This article covers the following topics: Python crawlers, MySQL databases, HTML/CSS/JS basics, Selenium and PhantomJS basics, the MVC design pattern, the Django framework (a Python web development framework), the Apache server, and basic Linux operations (using CentOS 7 as an example). It is therefore best suited to readers who already have some background in these areas.

Disclaimer: This post is purely for technical exchange, and sensitive information has been filtered out. I am not responsible for any problems on the website of the Yangtze University Academic Affairs Office, whatever their cause.

Approach: The Academic Affairs system offers no data API (to protect student information), so we have to write a crawler that simulates logging in to it and then scrapes the data. So that a crawler failure does not take the service down, we cache the data: later requests read it directly from our own database, and all we need to do is re-crawl periodically to keep it in sync with the Academic Affairs system.
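The caching idea amounts to a read-through cache with a maximum age and a stale fallback. Below is a minimal in-memory sketch (Python 3), assuming a hypothetical `fetch` callable that wraps the crawler; in the real setup the store would be the MariaDB tables designed below, and the refresh could also run on a schedule instead of on demand.

```python
import time


class GradeCache:
    """Serve grades from a local store and re-crawl only when an entry is stale.

    fetch: hypothetical callable student_id -> data, standing in for the crawler.
    """

    def __init__(self, fetch, max_age_seconds=24 * 3600, clock=time.time):
        self.fetch = fetch
        self.max_age = max_age_seconds
        self.clock = clock
        self._store = {}  # student_id -> (timestamp, data)

    def get(self, student_id):
        now = self.clock()
        cached = self._store.get(student_id)
        if cached is not None and now - cached[0] < self.max_age:
            return cached[1]  # fresh enough: no request to the office website
        try:
            data = self.fetch(student_id)
        except Exception:
            if cached is not None:
                return cached[1]  # crawler broke: serve the stale copy instead
            raise  # nothing cached yet, so the failure has to surface
        self._store[student_id] = (now, data)
        return data
```

The stale fallback is what keeps the site usable when the office website changes or goes down: users see the last synchronized data instead of an error.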

Technical architecture: CentOS 7 + Apache 2.4 + MariaDB 5.5 + Python 2.7.5 + mod_wsgi 3.4 + Django 1.11

------------------------------------------------------------------------

1. Python crawler:

1. First, look at the login page.

Here we use Firefox to analyze the network traffic. The login form is submitted by POST with seven parameters, and there is a captcha. At this point there are two options: use deep learning (currently a popular technique) to recognize the image, or download the captcha image and let the user type it in. The first is relatively costly; try it when you have free time (Python's Pillow/PIL library is useful for the image-processing part). I plan to try TensorFlow over the summer vacation. The second costs very little.
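The second option takes only a few lines. A minimal sketch (Python 3), assuming a captcha image URL that you would read from the login page's `<img>` tag; the URL, the `.png` file name and the `solve_captcha_manually` helper are hypothetical, not part of the site. The image is fetched with the same session that will later send the login POST, because the server usually ties the captcha to the session cookie.

```python
import os
import tempfile


def solve_captcha_manually(session, captcha_url, ask=input, save_dir=None):
    """Download the captcha image and let the user type what it shows.

    session: a requests.Session (or anything with a compatible .get()).
    captcha_url: hypothetical; take the real one from the login page.
    ask: function used to prompt the user (input() by default).
    """
    response = session.get(captcha_url)
    response.raise_for_status()
    save_dir = save_dir or tempfile.gettempdir()
    path = os.path.join(save_dir, "captcha.png")  # extension assumed
    with open(path, "wb") as f:
        f.write(response.content)  # raw image bytes
    return ask("Open %s and type the captcha: " % path).strip()
```

The returned text then goes into the captcha field of the login form data; that field's name is not shown in this post, so read it from the page.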

2. There is also a fancier trick that bypasses the captcha altogether, but I won't go into it here. Let's simulate the login:

```python
#coding:utf8
from bs4 import BeautifulSoup
import requests
import sys
reload(sys)
sys.setdefaultencoding('gbk')

loginURL = "Login URL of the Academic Affairs Office"
cjcxURL = "http://jwc2.yangtzeu.edu.cn:8080/cjcx.aspx"  # grade query page

header = {
    # "Host": "jwc2.yangtzeu.edu.cn:8080",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0;... Gecko/20100101 Firefox/54.0",
    "Accept": "text/html,application/xhtml+x...lication/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3",
    "Accept-Encoding": "gzip, deflate",
    "Content-Type": "application/x-www-form-urlencoded",
    # "Content-Length": "644",
    "Referer": "http://jwc2.yangtzeu.edu.cn:8080/login.aspx",
    # "Cookie": "ASP.NET_SessionId=3zjuqi0cnk5514l241csejgx",
    # "Connection": "keep-alive",
    # "Upgrade-Insecure-Requests": "1",
}

UserSession = requests.Session()
# Fetch the login page with the same session that posts the form, so the
# hidden ASP.NET fields and the session cookie belong together.
html = UserSession.get(loginURL, headers=header).content
soup = BeautifulSoup(html, "lxml")
__VIEWSTATE = soup.find(id="__VIEWSTATE")["value"]
__EVENTVALIDATION = soup.find(id="__EVENTVALIDATION")["value"]

data = {
    "__VIEWSTATE": __VIEWSTATE,
    "__EVENTVALIDATION": __EVENTVALIDATION,
    "txtUid": "Account number",
    # GBK bytes of the button label "登录" (Log in); requests URL-encodes them
    # to %B5%C7%C2%BC. Passing "%B5%C7%C2%BC" itself would be encoded twice.
    "btLogin": "\xb5\xc7\xc2\xbc",
    "txtPwd": "Password",
    "selKind": "1",
}

# headers must be a keyword argument: the third positional parameter of post() is json
Request = UserSession.post(loginURL, data=data, headers=header)
# The session keeps the login cookie, so no cookies= argument is needed.
Response = UserSession.get(cjcxURL, headers=header)
soup = BeautifulSoup(Response.content, "lxml")
print soup
```

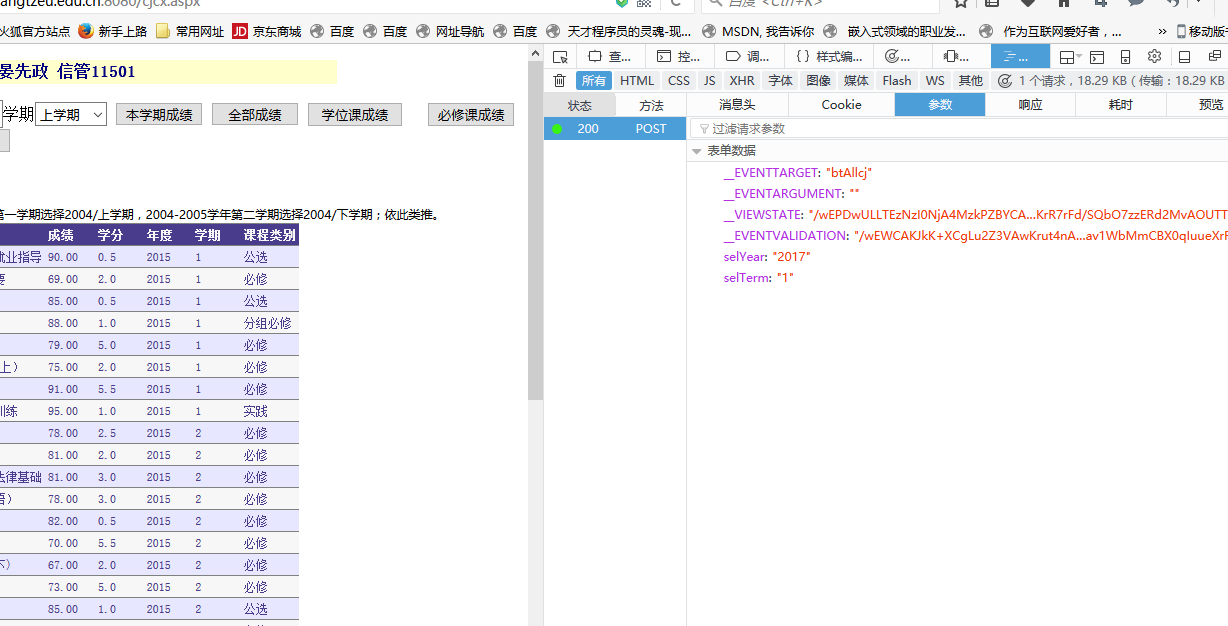

Next, we POST the request for all grades (this code continues the script above):

```python
# Continues the script above: reuses UserSession and the soup of the grade page.
__VIEWSTATE2 = soup.find(id="__VIEWSTATE")["value"]
__EVENTVALIDATION2 = soup.find(id="__EVENTVALIDATION")["value"]
AllcjData = {
    "__EVENTTARGET": "btAllcj",
    "__EVENTARGUMENT": "",
    "__VIEWSTATE": __VIEWSTATE2,
    "__EVENTVALIDATION": __EVENTVALIDATION2,
    "selYear": "2017",
    "selTerm": "1",
    # "Button2": "%B1%D8%D0%DE%BF%CE%B3%C9%BC%A8",
}
AllcjHeader = {
    # "Host": "jwc2.yangtzeu.edu.cn:8080",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0;... Gecko/20100101 Firefox/54.0",
    "Accept": "text/html,application/xhtml+x...lication/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3",
    "Accept-Encoding": "gzip, deflate",
    "Content-Type": "application/x-www-form-urlencoded",
    # "Content-Length": "644",
    "Referer": "http://jwc2.yangtzeu.edu.cn:8080/cjcx.aspx",
    # "Cookie": ,
    "Connection": "keep-alive",
    "Upgrade-Insecure-Requests": "1",
}
Request1 = UserSession.post(cjcxURL, data=AllcjData, headers=AllcjHeader)
# Note: an ASP.NET postback returns its result in the POST response itself
# (Request1.content); a fresh GET renders the page in its default state again.
Response1 = UserSession.get(cjcxURL, headers=AllcjHeader)
soup = BeautifulSoup(Response1.content, "lxml")
print soup
```

No luck... The page returned by the GET is still the original one. I see two possible reasons for the failed POST: either ASP.NET's __VIEWSTATE and __EVENTVALIDATION fields make it fail, or the form has several buttons that are told apart by JavaScript, which a crawler that only sends HTTP requests never runs. For pages driven by JavaScript, an ordinary crawler is not enough...
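For reference, the standard ASP.NET `__doPostBack(eventTarget, eventArgument)` (it appears in the commented-out JavaScript further down) only copies its two arguments into the hidden `__EVENTTARGET`/`__EVENTARGUMENT` fields and submits the whole form, every other hidden field included. The sketch below (Python 3, stdlib `html.parser` instead of BeautifulSoup; `build_postback_data` is a hypothetical helper) builds the same form data from the current page. It assumes this site uses the standard postback script and has not been tested against it.

```python
from html.parser import HTMLParser


class _HiddenInputCollector(HTMLParser):
    """Collects name/value pairs of <input type="hidden"> elements."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        a = dict(attrs)
        if (a.get("type") or "").lower() == "hidden" and a.get("name"):
            self.fields[a["name"]] = a.get("value") or ""


def build_postback_data(page_html, event_target, event_argument="", extra=None):
    """Return form data equivalent to __doPostBack(event_target, event_argument)."""
    collector = _HiddenInputCollector()
    collector.feed(page_html)
    data = dict(collector.fields)  # __VIEWSTATE, __EVENTVALIDATION, ...
    data["__EVENTTARGET"] = event_target
    data["__EVENTARGUMENT"] = event_argument
    data.update(extra or {})  # visible fields such as selYear / selTerm
    return data
```

With the variables of the script above, usage would look like `data = build_postback_data(Response.text, "btAllcj", extra={"selYear": "2017", "selTerm": "1"})` followed by `UserSession.post(cjcxURL, data=data, headers=AllcjHeader)`, parsing the POST response rather than a follow-up GET.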

3. The fancy approach: Selenium + PhantomJS (PhantomJS is a headless browser, i.e. one without a GUI, and is usually faster than Chrome or Firefox)

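Selenium installation: pip install "selenium<4" (the PhantomJS driver used below was removed in Selenium 4, and the 3.x series is also the last to support Python 2.7)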

phantomjs installation:

(1) Download: http://phantomjs.org/download.html (I used the Linux 64-bit build)

(2) Extract: tar -jxvf phantomjs-2.1.1-linux-x86_64.tar.bz2 -C /usr/share/

(3) Install the dependencies: yum install fontconfig freetype libfreetype.so.6 libfontconfig.so.1

(4) Set the environment variable: export PATH=$PATH:/usr/share/phantomjs-2.1.1-linux-x86_64/bin (this only lasts for the current shell; add the line to ~/.bashrc to make it permanent)

(5) Run phantomjs in the shell. If it drops you into the phantomjs prompt, the installation succeeded.
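Step (5) can also be checked from Python, which helps when the crawler runs under a process (such as Apache) that does not inherit the `export` from your interactive shell. A minimal Python 3 sketch using `shutil.which`; the helper names are hypothetical:

```python
import shutil
import subprocess


def find_phantomjs(search_path=None):
    """Return the full path of the phantomjs executable, or None if not found.

    search_path defaults to $PATH, i.e. it checks what step (4) configured.
    """
    return shutil.which("phantomjs", path=search_path)


def phantomjs_version(executable):
    """Run `<executable> --version` and return its output as text."""
    out = subprocess.check_output([executable, "--version"])
    return out.decode().strip()
```

If `find_phantomjs()` returns a path, Selenium 3 also accepts it explicitly as `webdriver.PhantomJS(executable_path=...)`, so the crawler no longer depends on PATH.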

Please ignore the commented-out lines in my code:

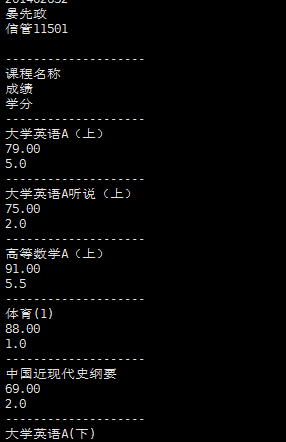

```python
#coding:utf8
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.common.keys import Keys
import time
import sys
reload(sys)
sys.setdefaultencoding('utf8')

driver = webdriver.PhantomJS()  # Selenium 3.x only; removed in Selenium 4
driver.get("Login URL of the Academic Affairs Office")
driver.find_element_by_name('txtUid').send_keys('Account number')
driver.find_element_by_name('txtPwd').send_keys('Password')
driver.find_element_by_id('btLogin').click()
cookie = driver.get_cookies()
driver.get("http://jwc2.yangtzeu.edu.cn:8080/cjcx.aspx")
#print driver.page_source
#driver.find_element_by_xpath("//input[@name='btAllcj'][@type='button']")
#js = "document.getElementById('btAllcj').onclick=function(){__doPostBack('btAllcj','')}"
#js = "var ob; ob=document.getElementById('btAllcj');ob.focus();ob.click();)"
#driver.execute_script("document.getElementById('btAllcj').click();")
#time.sleep(2)  # pause briefly
#driver.find_element_by_link_text("Total Achievements").click()  # find the link and click it
#time.sleep(2)
#js1 = "document.Form1.__EVENTTARGET.value='btAllcj';"
#js2 = "document.Form1.__EVENTARGUMENT.value='';"
#driver.execute_script(js1)
#driver.execute_script(js2)
#driver.find_element_by_name('__EVENTTARGET').send_keys('btAllcj')
#driver.find_element_by_name('__EVENTARGUMENT').send_keys('')
#js = "var input = document.createElement('input');input.setAttribute('type', 'hidden');input.setAttribute('name', '__EVENTTARGET');input.setAttribute('value', '');document.getElementById('Form1').appendChild(input);var input = document.createElement('input');input.setAttribute('type', 'hidden');input.setAttribute('name', '__EVENTARGUMENT');input.setAttribute('value', '');document.getElementById('Form1').appendChild(input);var theForm = document.forms['Form1'];if (!theForm) { theForm = document.Form1;}function __doPostBack(eventTarget, eventArgument) { if (!theForm.onsubmit || (theForm.onsubmit() != false)) { theForm.__EVENTTARGET.value = eventTarget; theForm.__EVENTARGUMENT.value = eventArgument; theForm.submit(); } }__doPostBack('btAllcj', '')"
#js = "var script = document.createElement('script');script.type = 'text/javascript';script.text='if (!theForm) { theForm = document.Form1;}function __doPostBack(eventTarget, eventArgument) { if (!theForm.onsubmit || (theForm.onsubmit() != false)) { theForm.__EVENTTARGET.value = eventTarget; theForm.__EVENTARGUMENT.value = eventArgument; theForm.submit(); }}';document.body.appendChild(script);"
#driver.execute_script(js)
driver.find_element_by_name("Button2").click()  # the compulsory-course grades button
html = driver.page_source
driver.quit()  # shut down the PhantomJS process once the page is captured
soup = BeautifulSoup(html, "lxml")
print soup
tables = soup.findAll("table")
for tab in tables:
    for tr in tab.findAll("tr"):
        print "--------------------"
        for td in tr.findAll("td")[0:3]:
            print td.getText()
```
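The nested loop above only prints the cells. To store them in the database designed next, it is more useful to collect the first three cells of each row as tuples. A dependency-free sketch (Python 3, stdlib `html.parser` instead of BeautifulSoup); `TableRowParser` and `first_columns` are hypothetical names, and which columns hold the course number, name and grade on the real page is an assumption to check. Unlike the BeautifulSoup loop it flattens all tables into one list and skips rows without `<td>` cells.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read
        self._cell = None  # text chunks of the cell being read

    def _close_cell(self):
        if self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None

    def _close_row(self):
        self._close_cell()
        if self._row:  # ignore rows without <td>, e.g. <th> header rows
            self.rows.append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._close_row()  # HTML allows omitting </tr>
            self._row = []
        elif tag == "td" and self._row is not None:
            self._close_cell()  # HTML allows omitting </td>
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td":
            self._close_cell()
        elif tag == "tr":
            self._close_row()

    def close(self):
        super().close()
        self._close_row()  # flush a row left open at the end of the page


def first_columns(page_html, n=3):
    """Return the first n cells of each table row as tuples."""
    parser = TableRowParser()
    parser.feed(page_html)
    parser.close()
    return [tuple(row[:n]) for row in parser.rows]
```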

For now we can only get the grades of compulsory courses... The "all grades" view is triggered by JavaScript generated by ASP.NET rather than by submitting the form directly, and I am still looking for a solution. Let's move on to the design of our database.

2. MariaDB student database design. Here I reuse the schema from our Database Principles course, which used SQL Server...

My SQL statements:

```sql
create database jwc character set utf8;
use jwc;

create table Student(
    Sno char(9) primary key,
    Sname varchar(20) unique,
    Sdept char(20),
    Spwd char(20)
);

create table Course(
    Cno char(2) primary key,
    Cname varchar(30) unique,
    Credit numeric(2,1)
);

create table SC(
    Sno char(9) not null,
    Cno char(2) not null,
    -- MariaDB 5.5 parses this CHECK but does not enforce it (enforced from 10.2.1)
    Grade int check(Grade>=0 and Grade<=100),
    primary key(Sno,Cno),
    foreign key(Sno) references Student(Sno),
    foreign key(Cno) references Course(Cno)
);
```
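To show how crawled rows might land in these tables, here is a sketch that uses Python's built-in `sqlite3` module as a stand-in for MariaDB so it runs anywhere; the table definitions are the ones above, while `create_demo_db` and `save_grade` are hypothetical helpers. With MariaDB (for example through MySQLdb) the placeholders would be `%s`, and SQLite's `insert or ignore` / `insert or replace` correspond to `INSERT IGNORE` / `REPLACE INTO` (or `INSERT ... ON DUPLICATE KEY UPDATE`).

```python
import sqlite3

SCHEMA = """
create table Student(Sno char(9) primary key, Sname varchar(20) unique,
                     Sdept char(20), Spwd char(20));
create table Course(Cno char(2) primary key, Cname varchar(30) unique,
                    Credit numeric(2,1));
create table SC(Sno char(9) not null, Cno char(2) not null,
                Grade int check(Grade>=0 and Grade<=100),
                primary key(Sno,Cno),
                foreign key(Sno) references Student(Sno),
                foreign key(Cno) references Course(Cno));
"""


def create_demo_db():
    """In-memory SQLite database with the schema above."""
    conn = sqlite3.connect(":memory:")
    conn.execute("pragma foreign_keys = on")  # SQLite leaves FKs off by default
    conn.executescript(SCHEMA)
    return conn


def save_grade(conn, sno, cno, cname, credit, grade):
    """Store one crawled grade, creating the course row if it is missing."""
    with conn:  # one transaction: commit on success, roll back on error
        conn.execute("insert or ignore into Course(Cno, Cname, Credit) "
                     "values (?, ?, ?)", (cno, cname, credit))
        conn.execute("insert or replace into SC(Sno, Cno, Grade) "
                     "values (?, ?, ?)", (sno, cno, grade))
```

Re-running the crawler simply overwrites a student's grade for a course, which is what periodic synchronization needs. SQLite enforces the CHECK constraint here, whereas MariaDB 5.5 would silently accept an out-of-range grade.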

3. Building the Python web environment (Linux + Apache + MariaDB + Python):

Because the chosen HTTP server is Apache, we need to install mod_wsgi, which implements WSGI (the Python Web Server Gateway Interface), so that Apache can talk to Python programs. If you use nginx instead, install and configure uWSGI... Its role is similar to that of Java servlets or PHP-FPM.
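WSGI itself is small: the server calls one Python callable per request, passing the request environment and a `start_response` function. Below is a minimal sketch of such a callable (the greeting is made up for illustration); by default mod_wsgi looks for an object named `application` in the file that `WSGIScriptAlias` points to, and Django's generated `jwc/wsgi.py` exposes exactly such an object.

```python
def application(environ, start_response):
    """Smallest useful WSGI app: echo the requested path as plain text."""
    path = environ.get("PATH_INFO", "/")
    body = ("Hello from WSGI, you asked for %s\n" % path).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]  # an iterable of byte strings
```

To try it without Apache, the standard library's reference server can host it: `wsgiref.simple_server.make_server("127.0.0.1", 8000, application).serve_forever()`.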

Installation: yum install mod_wsgi

Configuration: vim /etc/httpd/conf/httpd.conf

This configuration cost me a lot of time and thought... Many of the configurations found online are wrong. Here is the Django configuration that finally worked for me; feel free to take it.

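```apache
# config python web
# On CentOS 7 the mod_wsgi package usually loads the module already from a file
# under /etc/httpd/conf.modules.d/; keep only one LoadModule line for it.
LoadModule wsgi_module modules/mod_wsgi.so
# Apache must also listen on this port ("Listen 8080").
# If Django cannot import the project package, add outside <VirtualHost>:
# WSGIPythonPath /var/www/html/jwc
<VirtualHost *:8080>
    ServerAdmin root@Vito-Yan
    ServerName www.yuol.online
    ServerAlias yuol.online

    Alias /media/ /var/www/html/jwc/media/
    Alias /static/ /var/www/html/jwc/static/
    <Directory /var/www/html/jwc/media/>
        Require all granted
    </Directory>
    <Directory /var/www/html/jwc/static/>
        Require all granted
    </Directory>

    WSGIScriptAlias / /var/www/html/jwc/jwc/wsgi.py
    # DocumentRoot "/var/www/html/jwc/jwc"

    ErrorLog "logs/www.yuol.online-error_log"
    CustomLog "logs/www.yuol.online-access_log" common

    <Directory "/var/www/html/jwc/jwc">
        <Files wsgi.py>
            # AllowOverride is not allowed inside <Files>; granting access is enough
            Require all granted
        </Files>
    </Directory>
</VirtualHost>
```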