When a Python 3 crawler runs into anti-crawling measures

You excitedly open a web page, find that the resources on it are great, and want to download them in batches, so you write a crawler to do it. Then, after a round of work, you discover that the site has anti-crawling measures. Awkward.

In the next few articles, we will study all kinds of anti-crawling tricks. Of course, no anti-crawling measure on the Internet is 100% effective: any page you can open in your browser can also be crawled. No one can stop crawling outright; they can only raise its cost to some extent. Put plainly, they try to make crawling too hard for your skills~

The fight between crawlers and anti-crawlers has long been a staple for programmers in this field. From the simplest User-Agent (UA) restrictions to somewhat more complex IP-based and account-based restrictions, the techniques keep evolving. But as the saying goes, it's not the theft you should fear, but the thief who has his eye on you: as long as your site's content is valuable, how can you rest easy with a crowd of crawler coders focused on it?

emmmm....

Front-end JavaScript encryption

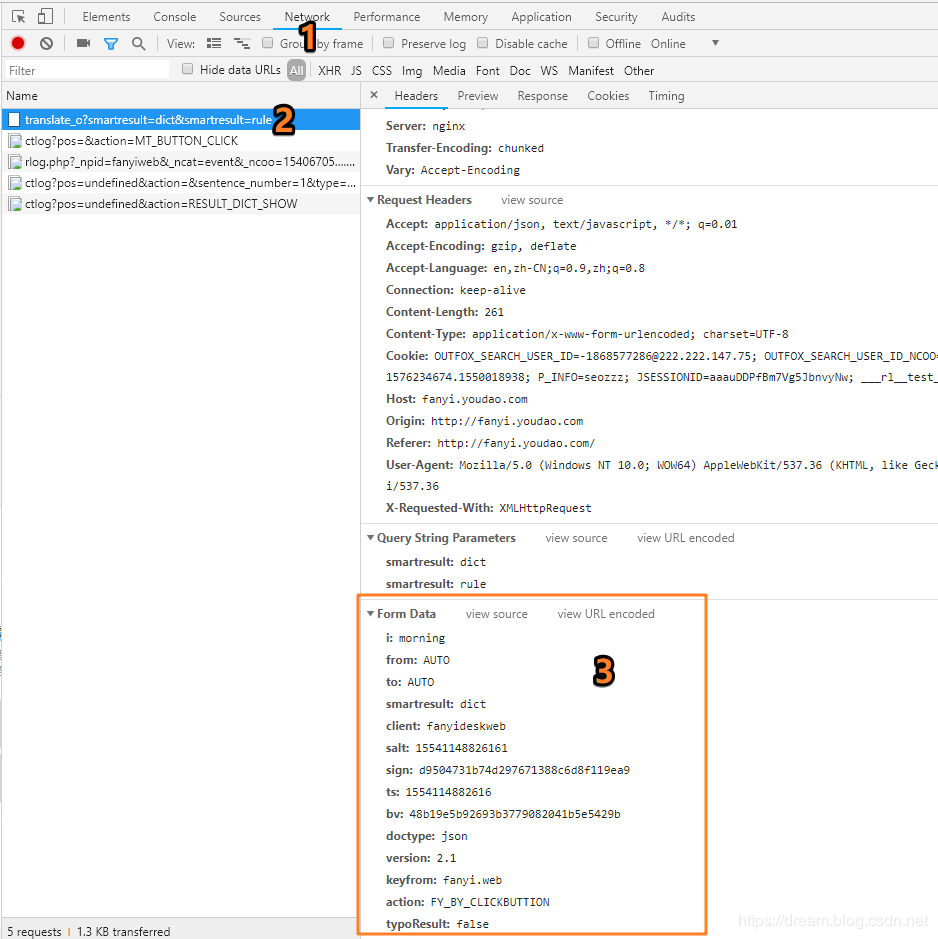

The simplest form of JS "encryption" is an MD5 signature. This post demonstrates it on http://fanyi.youdao.com/ (Youdao Translate).
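Strictly speaking, MD5 is a one-way hash rather than encryption: the page hashes a string and sends the 32-character hex digest along with the request. Python's standard `hashlib` module produces the same lowercase hex digest, assuming the site's JS `md5()` helper hashes the UTF-8 bytes of the string (the usual behavior of JS MD5 libraries). A minimal sketch, where `md5_hex` is a hypothetical helper name:

```python
import hashlib


def md5_hex(text: str) -> str:
    """Return the lowercase hex MD5 digest of the UTF-8 bytes of `text`."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


print(md5_hex("hello"))  # 5d41402abc4b2a76b9719d911017c592
```

Every signed value in the rest of this post (`bv` and `sign`) is just this call applied to a particular string.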

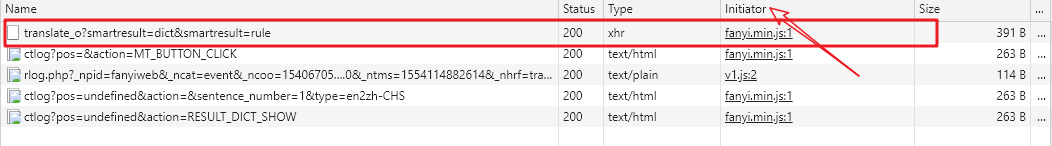

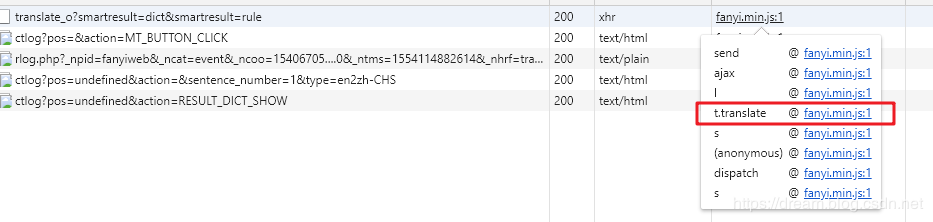

Next, note which JS file initiated the request (the Initiator column in the Network panel of the browser's developer tools).

The request was initiated by fanyi.min.js. Keep tracing: clicking on the file name shows basic information about the call. Click the file link for the method related to the request to jump into that method.

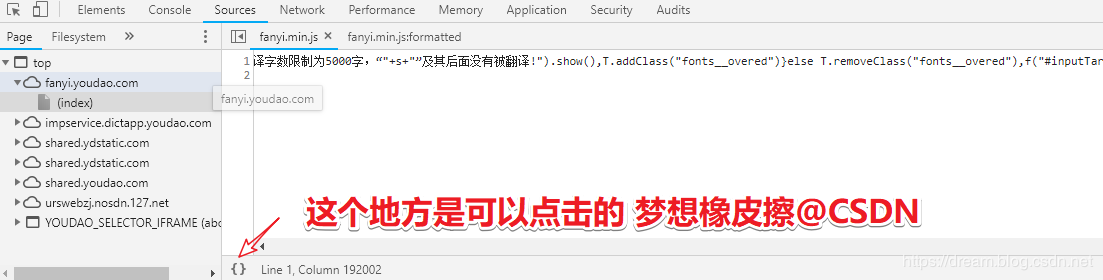

There is one practical detail here: the JS file you jump to after clicking is minified, so you need to learn to format (pretty-print) it before reading it.

Get Source Code

```javascript
t.translate = function(e, t) {
    _ = f("#language").val();
    var n = x.val()
      , r = g.generateSaltSign(n)
      , i = n.length;
    if (F(),
    T.text(i),
    i > 5e3) {
        var a = n;
        n = a.substr(0, 5e3),
        r = g.generateSaltSign(n);
        var s = a.substr(5e3);
        s = (s = s.trim()).substr(0, 3),
        f("#inputTargetError").text("Translation is limited to 5000 characters; \"" + s + "\" and the text after it were not translated!").show(),
        T.addClass("fonts__overed")
    } else
        T.removeClass("fonts__overed"),
        f("#inputTargetError").hide();
    d.isWeb(n) ? o() : l({
        i: n,
        from: C,
        to: S,
        smartresult: "dict",
        client: k,
        salt: r.salt,
        sign: r.sign,
        ts: r.ts,
        bv: r.bv,
        doctype: "json",
        version: "2.1",
        keyfrom: "fanyi.web",
        action: e || "FY_BY_DEFAULT",
        typoResult: !1
    }, t)
}
```

Parameter analysis

- `i`: the text to translate
- `from`: set to `AUTO`
- `to`: set to `AUTO`
- `smartresult`: defaults to `dict`
- `client`: the translation client, `fanyideskweb` by default
- `salt`: the first variable; we need to find its generation rule
- `sign`: the second variable; we also need to find its generation rule
- `ts`
- `bv`
- Keep the remaining parameters at their defaults
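One detail in `t.translate` affects `i`: when the input is longer than `5e3` (5000) characters, the page keeps only the first 5000, calls `generateSaltSign` again on that truncated text, and shows the first three characters of the trimmed remainder in its error message. A crawler should therefore sign exactly the text it sends. A minimal Python sketch of that rule; `prepare_input` and `MAX_CHARS` are hypothetical names, not from the site:

```python
from typing import Tuple

MAX_CHARS = 5000  # 5e3 in fanyi.min.js


def prepare_input(text: str) -> Tuple[str, str]:
    """Mimic the length check in t.translate.

    Returns (text to sign and send, preview of the dropped part).
    """
    if len(text) <= MAX_CHARS:
        return text, ""
    kept = text[:MAX_CHARS]
    # The page trims the remainder and shows its first 3 characters in the warning
    dropped_preview = text[MAX_CHARS:].strip()[:3]
    return kept, dropped_preview
```

Note that JS `length` counts UTF-16 code units while Python `len` counts code points, so the two can disagree on text containing emoji or other characters outside the Basic Multilingual Plane.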

Key parameters

- salt

- sign

- ts

- bv

Tracing the parameters back through the code, we find where they are generated:

```javascript
var r = function(e) {
    var t = n.md5(navigator.appVersion)
      , r = "" + (new Date).getTime()
      , i = r + parseInt(10 * Math.random(), 10);
    return {
        ts: r,
        bv: t,
        salt: i,
        sign: n.md5("fanyideskweb" + e + i + "1L5ja}w$puC.v_Kz3@yYn")
    }
};
```

OK, we've got the parameters:

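- `ts` is `r`, the current timestamp in milliseconds, as a string
- `salt` is `r` with one random digit from 0 to 9 appended (`r` is a string, so `+` concatenates rather than adds)
- `sign` is an MD5 of `"fanyideskweb"`, the text, the salt and a fixed secret string; the key part in the middle is `e`, which is the word you want to translate
- `bv` is the MD5 of `navigator.appVersion`, which is easy to get by running `navigator.appVersion` in the developer tools console:

`5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36`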
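Comparing that value with the User-Agent header used in the request later on shows that, in Chrome, `navigator.appVersion` is just the User-Agent string with the leading `Mozilla/` removed. If you change the User-Agent you send, it seems safer to derive `bv` from that same string so the two stay consistent (whether the server actually cross-checks them is an assumption we have not verified). A minimal sketch; `app_version_from_ua` and `bv_from_user_agent` are hypothetical helper names:

```python
import hashlib


def app_version_from_ua(user_agent: str) -> str:
    """Approximate Chrome's navigator.appVersion: the UA without the 'Mozilla/' prefix."""
    prefix = "Mozilla/"
    return user_agent[len(prefix):] if user_agent.startswith(prefix) else user_agent


def bv_from_user_agent(user_agent: str) -> str:
    # bv = md5(navigator.appVersion), as in the site's JS
    return hashlib.md5(app_version_from_ua(user_agent).encode("utf-8")).hexdigest()


UA = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36")
print(app_version_from_ua(UA))
print(bv_from_user_agent(UA))
```

Other browsers (Firefox, for example) report a much shorter `appVersion`, so this shortcut only holds for Chrome-style User-Agent strings.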

Code Attempt

With all this ready, all that's left is writing the code. Let's get started. Here we simply convert the JS source to Python; there's nothing particularly difficult.

Generation of parameters

```python
import hashlib
import random
import time


def generate_salt_sign(translate):
    # var t = n.md5(navigator.appVersion)
    app_version = "5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36"
    bv = hashlib.md5(app_version.encode('utf-8')).hexdigest()
    # r = "" + (new Date).getTime()  -> millisecond timestamp as a string
    ts = str(int(time.time() * 1000))
    # i = r + parseInt(10 * Math.random(), 10);  -> append a single digit, 0-9
    salt = ts + str(random.randint(0, 9))
    # sign: n.md5("fanyideskweb" + e + i + "1L5ja}w$puC.v_Kz3@yYn")
    sign = hashlib.md5(("fanyideskweb" + translate + salt + "1L5ja}w$puC.v_Kz3@yYn").encode('utf-8')).hexdigest()
    return salt, sign, ts, bv
```

Assembling the parameters and preparing the headers

```python
def params():
    data = {}
    translate = 'morning'  # the text to translate
    client = 'fanyideskweb'
    data['i'] = translate
    data['from'] = 'AUTO'
    data['to'] = 'AUTO'
    data['smartresult'] = 'dict'
    data['client'] = client
    data['salt'], data['sign'], data['ts'], data['bv'] = generate_salt_sign(translate)
    data['doctype'] = 'json'
    data['version'] = '2.1'
    data['keyfrom'] = 'fanyi.web'
    data['action'] = 'FY_BY_REALTIME'
    data['typoResult'] = 'false'
    return data
```

Sending the request

```python
import requests


def tran():
    data = params()
    headers = {}
    headers["User-Agent"] = "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36"
    headers["Referer"] = "http://fanyi.youdao.com/"
    headers["Cookie"] = "OUTFOX_SEARCH_USER_ID=-1868577286@222.222.147.75;"
    # POST the signed form data to the same endpoint the page calls
    res = requests.post("http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule",
                        headers=headers, data=data, timeout=10)
    print(res.text)


if __name__ == '__main__':
    tran()
```

Result

```json
{"translateResult":[[{"tgt":"Good morning!....","src":"morning"}]],"errorCode":0,"type":"en2zh-CHS","smartResult":{"entries":["","n. Morning; dawn; early days\r\n"],"type":1}}
```

Once we have the data, we're done~
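To pull just the translated text out of that response, parse the JSON and read the `tgt` fields. A minimal sketch based only on the field names in the sample response above; `extract_translation` is a hypothetical helper, and treating the outer list as paragraphs and the inner lists as segments is an assumption drawn from the nesting:

```python
import json


def extract_translation(body: str) -> str:
    """Join the 'tgt' fields of a translate_o JSON response."""
    payload = json.loads(body)
    if payload.get("errorCode") != 0:
        # A non-zero errorCode means the request was not accepted
        raise ValueError(f"request failed, errorCode={payload.get('errorCode')}")
    # Assumed layout: list of paragraphs, each a list of {"src", "tgt"} segments
    return "\n".join(
        "".join(segment["tgt"] for segment in paragraph)
        for paragraph in payload["translateResult"]
    )


sample = '{"translateResult":[[{"tgt":"Good morning!....","src":"morning"}]],"errorCode":0,"type":"en2zh-CHS"}'
print(extract_translation(sample))  # Good morning!....
```

Checking `errorCode` first makes a rejected signature fail loudly instead of surfacing later as a `KeyError`.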

That's all the anti-crawling content for this post~
