Replication environment preparation

In theory, read-write separation has nothing to do with the replication mode. Atlas is responsible for redirecting read-write. As for the replication mode itself, this is the test environment. Before testing the single-machine multi-instance of MGR, the replication mode of single-master mode of MGR, it is convenient to use MGR to do read-write separation based on atlas.

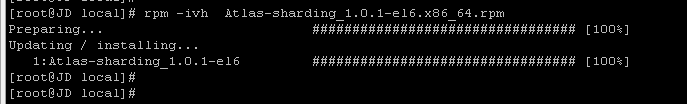

atlas installation

rpm installation, instantaneous completion

The configuration file for atlas (default / usr/local/mysql_proxy/conf/test.cnf) feels very refreshing, and basically the notes for the configuration nodes are very clear.

Because it is a single machine with multiple instances, the slave library instances can be distinguished only by different port numbers. They can be single slave or multiple slave, and they can be separated according to priority.

The IP and port of MySQL slave library connected by # Atlas backend, and the number after @ represents the weight, which is used for load balancing. If omitted, it defaults to 1. It can be set up many times, separated by commas.

proxy-read-only-backend-addresses = ***.***.***.***:8002@1, ***.***.***.***::8003@2

It should be noted that the corresponding user name must exist in each utilization and the password is the same, otherwise there will be errors.

# Username and its corresponding encrypted MySQL password, password encrypt using PREFIX/bin directory encryption program encrypt encryption, downlink user1 and user2 as examples, replace it with your MySQL username and encrypted password!

pwds = username1:DAJnl8cVzy8=, username2:DAJnl8cVzy8=

[mysql-proxy]

#belt#No. 1 is an unnecessary configuration item

#User name of management interface

admin-username = user

#Password of Management Interface

admin-password = pwd

#The IP and port of MySQL main library connected by Atlas backend can be set up in many ways, separated by commas.

proxy-backend-addresses = ***.***.***.***:8001

#The IP and port of MySQL slave library connected by the back end of Atlas, @ the number after @ represents the weight, which is used for load balancing. If omitted, it defaults to 1. It can be set up many times, separated by commas.

proxy-read-only-backend-addresses = proxy-read-only-backend-addresses =***.***.***.***:8002@1,***.***.***.***:8003@2

#Username and its corresponding encrypted MySQL password, password using PREFIX/bin directory encryption program encrypt encryption, downlink user1 and user2 as an example, replace it with your MySQL username and encrypted password!

pwds = user1:DAJnl8cVzy8=, user2:DAJnl8cVzy8=

#Set the running mode of Atlas as true, daemon mode when true, foreground mode when false, false when developing and debugging, true when running online, no space behind true.

daemon = true

#Setting the operation mode of Atlas to true, Atlas will start two processes, one is monitor and the other is worker. Monitor will automatically restart the worker after the worker exits unexpectedly. When set to false, only worker, no monitor, usually false when developing and debugging, true when running online, and no space after true.

keepalive = true

#The number of working threads has a great impact on the performance of Atlas and can be set appropriately according to the situation.

event-threads = 8

#Log level, divided into message, warning, critical, error, debug five levels

log-level = message

#The path of log storage

log-path = /usr/local/mysql-proxy/log

#The switch of the SQL log can be set to OFF, ON and REALTIME. OFF means not to record the SQL log, ON means to record the SQL log, REALTIME means to record the SQL log and write it to disk in real time. The default is OFF.

#sql-log = OFF

#Slow log output settings. When this parameter is set, the log only outputs log records with execution time exceeding sql-log-slow (ms). If this parameter is not set, all logs are output.

#sql-log-slow = 10

#Instance name, used to distinguish between multiple Atlas instances on the same machine

#instance = test

#Work Interface IP and Port for Atlas Monitoring

proxy-address = ***.***.***.***:1234

#Management Interface IP and Port for Atlas Monitoring

admin-address = ***.***.***.***:2345

#In this case, person is the library name, mt is the table name, id is the table field, and 3 is the number of sub-tables. It can be set up many times, separated by commas. If the table is not separated, it is not necessary to set this item.

#tables = person.mt.id.3

#Default Character Set. After setting this item, the client no longer needs to execute SET NAMES statements

#charset = utf8

#The IP that allows clients to connect to Atlas can be either exact IP or IP segments separated by commas. If this item is not set, all IP connections are allowed, otherwise only IP connections in the list are allowed.

#client-ips = 127.0.0.1, 192.168.1

#The IP of the physical network card of LVS attached before Atlas (note that it is not virtual IP). If LVS exists and client-ips is set, this must be set, otherwise it can not be set.

#lvs-ips = 192.168.1.1

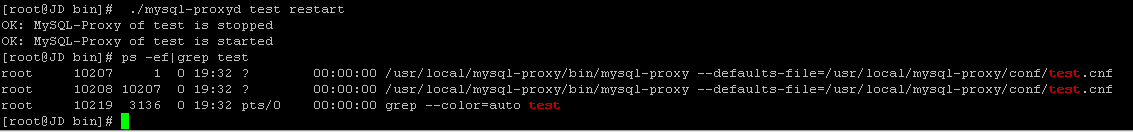

Start atlas

Test Read-Write Separation

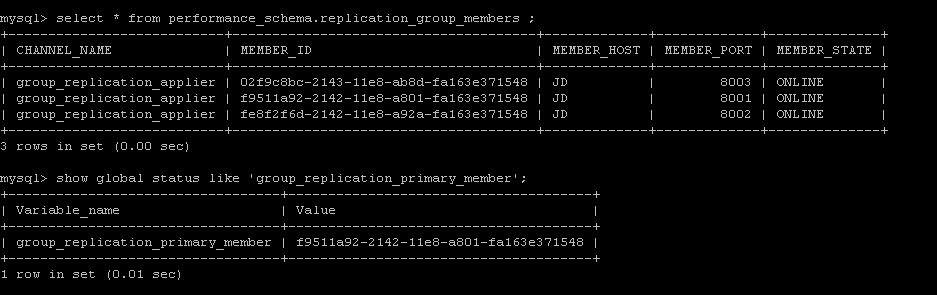

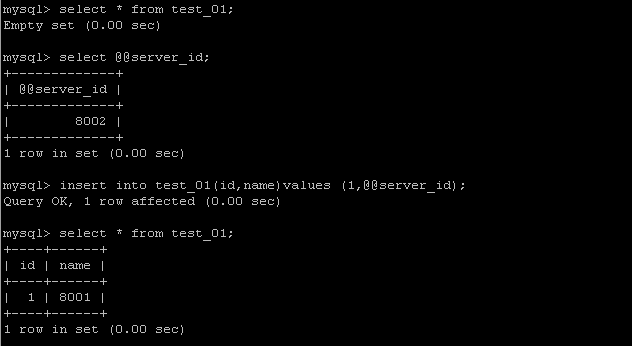

MGR is a master-slave, the master node Server_id is 8001, and the slave node Server_id is 8002 and 8003, respectively.

It can be found that the read information is redirected to 8002 nodes and the write information is redirected to 8001 nodes, thus realizing the separation of read and write.

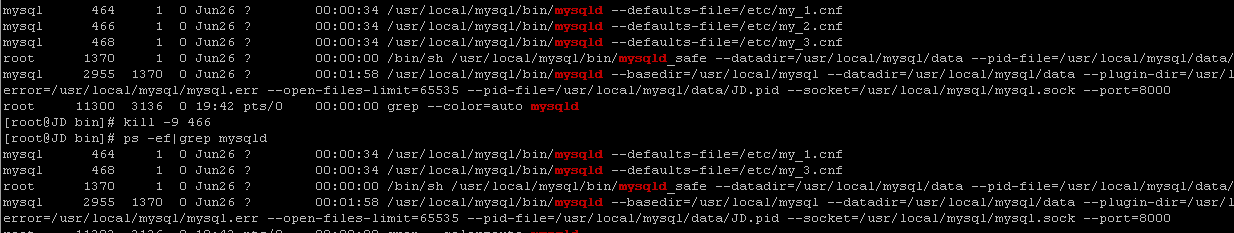

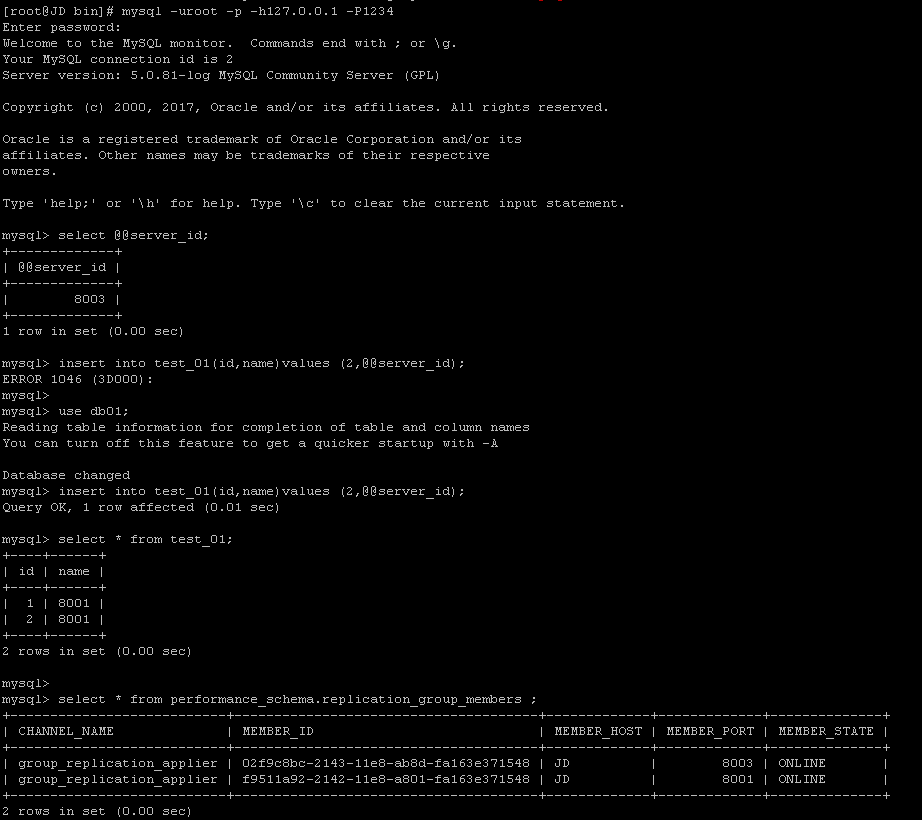

Force a slave node to be turned off and redirect the read to a secondary read node.

Read redirection to 8003 nodes, write is still the main node, MGR status is normal, if you try to close all read nodes, read will automatically redirect to write nodes, indicating that the basic errors are compatible.

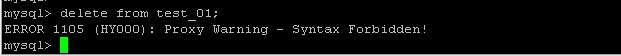

Atlas middleware automatically filters out dangerous operations, such as delete s that cannot be performed without where conditions

There is no sub-table test yet.

Problems encountered:

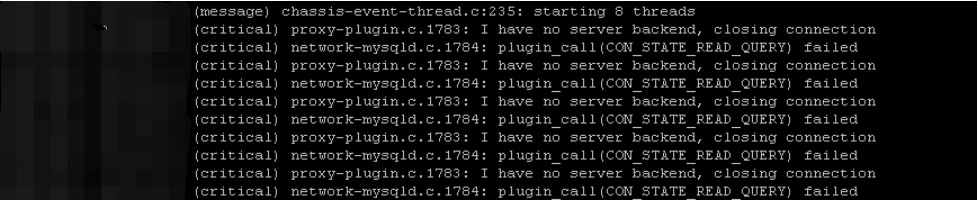

Initially, the service could not start, and the error log proxy-plugin.c.1783: I have no server backend, closing connection was caused by the inconsistency of the configured user information at each node.

Later, the user information of pwds nodes was modified, in which the user's new information was consistent at each node, including user name and password, and the service started normally.

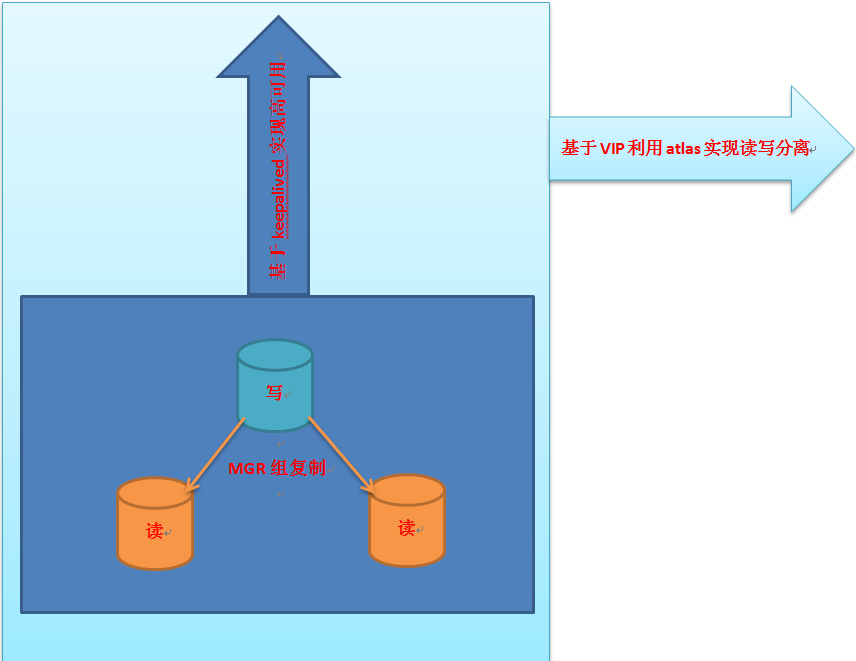

High Availability Add-Read-Write Separation Based on MGR+Keepalived+Atlas

Follow-up can try to do an automatic fault transfer based on MGR and write nodes can do automatic fault transfer based on VIP.

Then on this basis, based on VIP + other nodes to do read-write separation, in theory, it can perfectly achieve high availability + read-write separation of automatic failover.

If YY gets up like this, it feels a little bit like this. Automatic failover and separation of reading and writing are also available. In theory, as long as there is a surviving node, it can provide normal service to the outside world.