Tags

PostgreSQL, HTAP, OLTP, OLAP, Scenario and Performance Testing

Background

PostgreSQL is a database with a long history that can be traced back to 1973. It was originally created by Michael Stonebraker, winner of the 2014 Turing Award and a pioneer of relational databases. PostgreSQL is comparable to Oracle in functionality, performance, architecture, and stability.

The PostgreSQL community has many contributors from all walks of life around the world. For years PostgreSQL has shipped one major version per year, and it is known for its lasting vitality and stability.

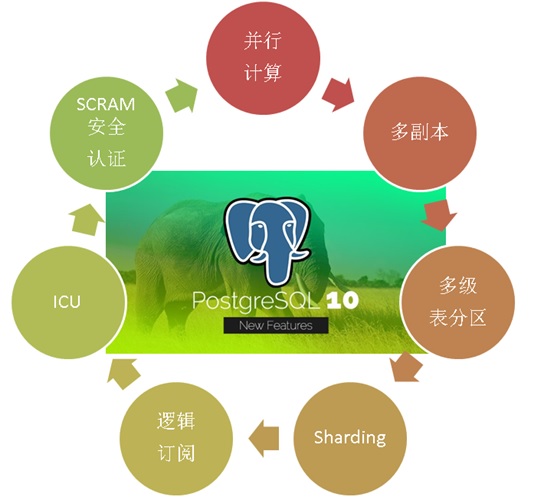

In October 2017, PostgreSQL released version 10, which brought many notable features aimed at mixed OLTP and OLAP (HTAP) workloads:

"PostgreSQL 10 features: the most popular HTAP database for developers"

1. Multi-core parallelism enhancements

2. FDW aggregate push-down

3. Logical replication (publish/subscribe)

4. Partitioning

5. Financial-grade multi-replica support

6. Full-text search on json and jsonb

7. Features delivered as plug-ins, such as vector computing, JIT, SQL graph computing, SQL stream computing, distributed parallel computing, time series processing, gene sequencing, chemical analysis, image analysis, etc.

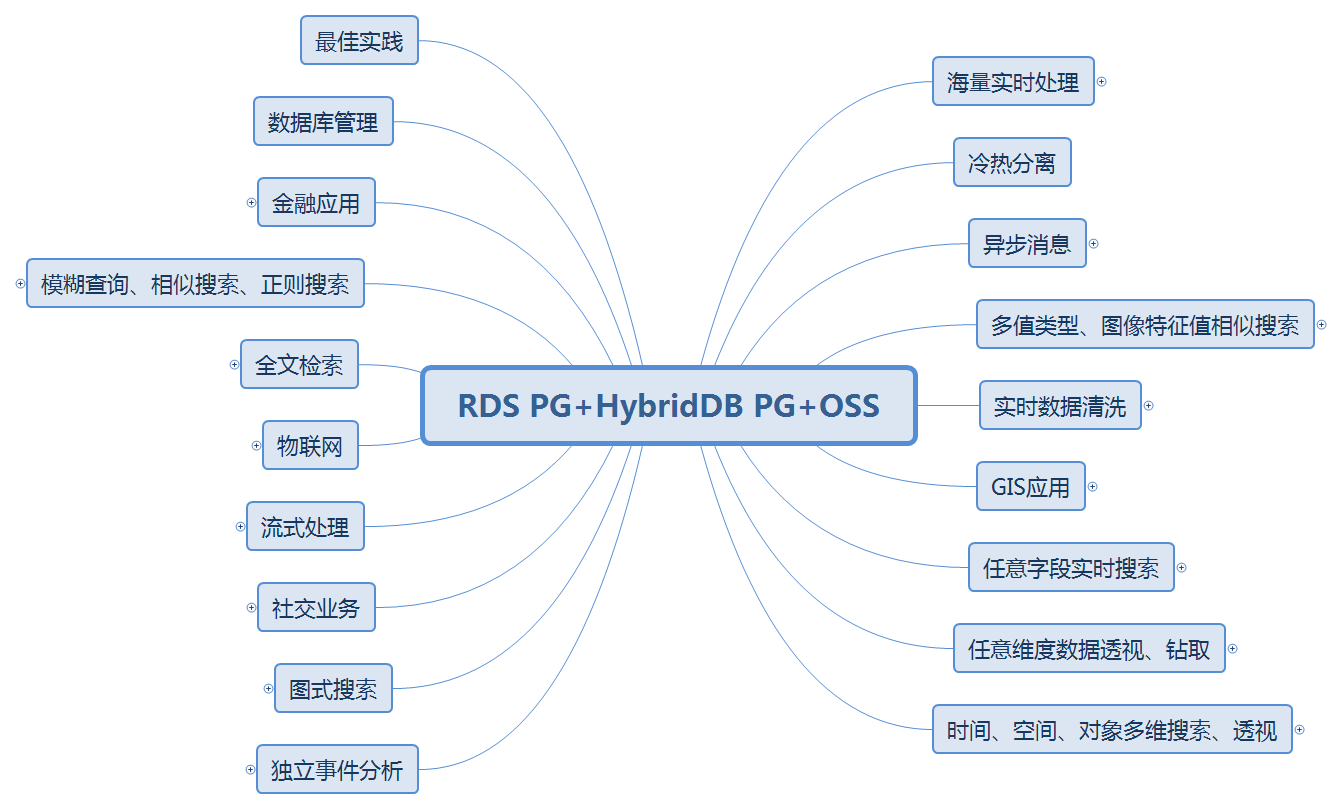

PostgreSQL can be seen in various application scenarios:

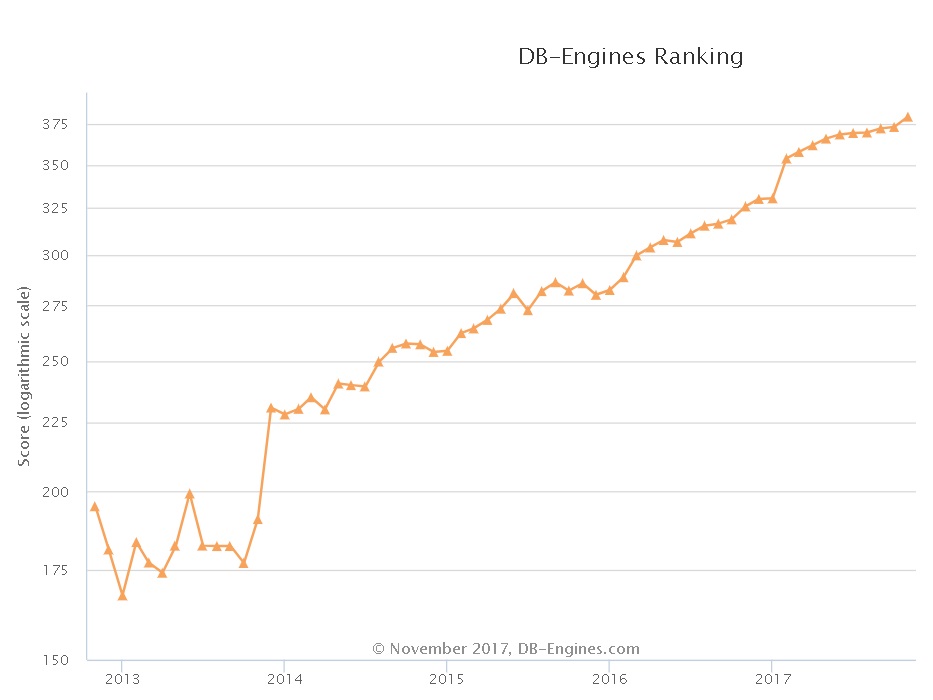

PostgreSQL has developed very rapidly in recent years. The database score trend published by dbranking, a well-known database ranking website, shows PostgreSQL's upward trajectory:

The annual conferences held by the PostgreSQL China community show the same trend. More and more companies attend and present, and the range of topics keeps growing. Participants span traditional enterprises, the Internet, healthcare, finance, state-owned enterprises, logistics, e-commerce, social networking, connected vehicles, the sharing economy, cloud, gaming, public transport, aviation, railways, the military, training, consulting services, and more.

The next series of articles will introduce various application scenarios and corresponding performance indicators of PostgreSQL.

Environment

Environment deployment reference:

Alibaba Cloud ECS: 56 cores, 224 GB RAM, 2 × 1.5 TB SSD cloud disks.

Operating System: CentOS 7.4 x64

Database version: PostgreSQL 10

PS: The CPU and I/O performance of ECS is lower than that of a physical machine; as a rule of thumb, assume roughly half. To estimate the performance on a physical machine, multiply the results measured here by 2.

Scenario - batch writes to multiple unlogged tables without indexes (OLTP+OLAP)

1. Background

No indexes, multiple tables (1024 tables), and several records written per request. This is a typical test for both TP and AP scenarios: it measures how fast data can be ingested in real time.

Unlogged tables are tables whose changes are not written to the write-ahead log (WAL). Unlike temporary tables, they are visible to all sessions, and they are often used for data that does not need to be durable.

2. Design

Multiple unlogged tables (1024 tables), no indexes, multiple rows written per transaction (1000 rows at a time), high concurrency.

3. Prepare the test tables

    create unlogged table t_sensor(
      id int8,
      c1 int8 default 0,
      c2 int8 default 0,
      c3 int8 default 0,
      c4 float8 default 0,
      c5 text default 'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa',
      ts timestamp default clock_timestamp()
    ) with (autovacuum_enabled=off, toast.autovacuum_enabled=off);

    -- create index idx_t_sensor_ts on t_sensor using btree (ts) tablespace tbs1;

    -- Create 1024 child tables; even-numbered tables go to tablespace tbs1,
    -- which must already exist.
    do language plpgsql $$
    declare
    begin
      for i in 1..1024 loop
        execute format(
          'create unlogged table t_sensor%s (like t_sensor including all) inherits (t_sensor) with (autovacuum_enabled=off, toast.autovacuum_enabled=off) '
          || case when mod(i,2)=0 then 'tablespace tbs1' else '' end,
          i
        );
      end loop;
    end;
    $$;
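As a quick sanity check after creating the tables, something like the following could confirm that the child tables exist, are unlogged, and are split across tablespaces. This is only a sketch and not part of the original test: the file name check_tables.sql is a hypothetical choice, and the query assumes the tables were created exactly as in this step.

```shell
# Write a verification query to a file (check_tables.sql is an illustrative name).
# The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
cat > check_tables.sql <<'EOF'
select count(*)                                       as child_tables,
       count(*) filter (where c.relpersistence = 'u') as unlogged_tables,
       count(*) filter (where c.reltablespace <> 0)   as in_named_tablespace
from pg_inherits i
join pg_class c on c.oid = i.inhrelid
where i.inhparent = 't_sensor'::regclass;
EOF
```

Running it with `psql -f check_tables.sql` against the test database should report 1024 child tables, all of them unlogged, and 512 in a named tablespace, assuming tbs1 is not the database's default tablespace.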

4. Prepare the test function (optional)

    create or replace function ins_sensor(int, int) returns void as $$
    declare
    begin
      execute format('insert into t_sensor%s (id) select generate_series(1,%s)', $1, $2);
      -- The table name has to be spliced in, so dynamic SQL is used; every call is hard-parsed, which takes time.
      -- This distorts the test results; real performance should be at least no worse than indexed single-table writes.
      -- Writing in batches masks the hard-parsing cost.
    end;
    $$ language plpgsql strict;

5. Prepare the test data

6. Prepare the test script

    vi test.sql

    \set sid random(1,1024)
    select ins_sensor(:sid, 1000);
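For scripted or repeated runs, the same file can be written without opening an editor. A minimal sketch, assuming the script lives in the current directory under the name test.sql as used in this step:

```shell
# Create the pgbench script non-interactively.
# The quoted 'EOF' keeps the backslash and :sid from being touched by the shell.
cat > test.sql <<'EOF'
\set sid random(1,1024)
select ins_sensor(:sid, 1000);
EOF
```

Each pgbench transaction then picks one of the 1024 tables at random and inserts 1000 rows into it.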

Stress test

    CONNECTS=56
    TIMES=300
    export PGHOST=$PGDATA
    export PGPORT=1999
    export PGUSER=postgres
    export PGPASSWORD=postgres
    export PGDATABASE=postgres

    pgbench -M prepared -n -r -f ./test.sql -P 5 -c $CONNECTS -j $CONNECTS -T $TIMES

7. Test

    transaction type: ./test.sql
    scaling factor: 1
    query mode: prepared
    number of clients: 56
    number of threads: 56
    duration: 300 s
    number of transactions actually processed: 2440478
    latency average = 6.883 ms
    latency stddev = 32.267 ms
    tps = 8132.806500 (including connections establishing)
    tps = 8133.783409 (excluding connections establishing)
    script statistics:
     - statement latencies in milliseconds:
             0.002  \set sid random(1,1024)
             6.882  select ins_sensor(:sid, 1000);
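When comparing several runs, it can help to pull the headline numbers out of the pgbench report automatically. The sketch below assumes the report was saved to a file named pgbench.log (a hypothetical name) and that it uses the PostgreSQL 10 wording shown in this test; newer pgbench versions word the tps line differently. The sample lines are copied from the report so the snippet runs on its own.

```shell
# Sample lines copied from the pgbench report; in practice,
# redirect the real pgbench output into this file instead.
cat > pgbench.log <<'EOF'
latency average = 6.883 ms
tps = 8132.806500 (including connections establishing)
tps = 8133.783409 (excluding connections establishing)
EOF

# Take the tps figure that excludes connection setup, and the average latency.
tps=$(awk '/^tps = .*excluding/ {print $3}' pgbench.log)
latency_ms=$(awk '/^latency average/ {print $4}' pgbench.log)
echo "tps=$tps latency_ms=$latency_ms"
```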

TPS: 8133 (= 8,133,000 rows/s)

Multiple unlogged tables (1024 tables), no indexes, multiple rows written per transaction (1000 rows at a time), high concurrency.

Main bottleneck: disk I/O throughput.
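The rows-per-second figure follows directly from the TPS and the batch size. A small sketch of the arithmetic, which also applies the rule of thumb from the environment section (physical machine roughly 2x the ECS result); the variable names are illustrative only:

```shell
tps=8133             # rounded TPS from the pgbench report
rows_per_txn=1000    # second argument passed to ins_sensor in test.sql
rows_per_sec=$((tps * rows_per_txn))

# Rule of thumb from the environment section: physical machine is about 2x ECS.
physical_estimate=$((rows_per_sec * 2))
echo "ECS: $rows_per_sec rows/s, physical (estimated): $physical_estimate rows/s"
```

The physical-machine number is an estimate derived from that rule of thumb, not a measured result.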

Average response time: 6.883 milliseconds

Multiple unlogged tables (1024 tables), no indexes, multiple rows written per transaction (1000 rows at a time), high concurrency.

Main bottleneck: disk I/O throughput.
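The reported latency and TPS can be cross-checked against each other: when all clients are kept busy, throughput is roughly the number of clients divided by the average latency. A minimal sketch of that check, using the client count and latency from this test:

```shell
clients=56         # pgbench -c value
latency_ms=6.883   # average latency from the pgbench report

# With every client always busy, throughput is about clients / average latency.
expected_tps=$(awk -v c="$clients" -v l="$latency_ms" 'BEGIN { printf "%.0f", c / (l / 1000) }')
echo "expected tps ~ $expected_tps"
```

The result, about 8136, is close to the measured 8133 TPS, which suggests the two figures are consistent with each other.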

References

"PostgreSQL and Greenplum Application Case Book 'Tathagata Palm' - Catalogue"

"Database Selection - Elephant Eighteen Touches - To Architects and Developers"

"Using pgbench in PostgreSQL to run sysbench-related test cases"