OkHttp Source Code Summary (V): The Core Scheduler, Dispatcher

Tags: OkHttp source code learning notes

Preface

- The following summary is based on what I learned from the Mu Course Network (imooc) lessons, plus my own understanding. If needed, you can refer to the corresponding Mu Course Network lessons, which I found worthwhile.

How does OkHttp implement synchronous and asynchronous requests?

Dispatcher

The role of Dispatcher

- Every synchronous and asynchronous request that is sent is managed by the Dispatcher.

What exactly is Dispatcher?

- It maintains the state of requests, i.e. which requests are waiting and which are running.

- It maintains a thread pool that executes the (asynchronous) requests.

Dispatcher source code

Member variables

    // Maximum number of requests executing at the same time
    private int maxRequests = 64;
    // Maximum number of requests executing at the same time against the same host
    private int maxRequestsPerHost = 5;
    // Invoked each time the dispatcher becomes idle (no running calls)
    private @Nullable Runnable idleCallback;
    // Thread pool that executes asynchronous requests (created lazily)
    private @Nullable ExecutorService executorService;
    // Asynchronous waiting (ready) queue
    private final Deque<AsyncCall> readyAsyncCalls = new ArrayDeque<>();
    // Asynchronous running queue
    private final Deque<AsyncCall> runningAsyncCalls = new ArrayDeque<>();
    // Synchronous running queue
    private final Deque<RealCall> runningSyncCalls = new ArrayDeque<>();

Why do asynchronous requests require two queues?

As we can see, asynchronous requests use two queues: an asynchronous running queue and an asynchronous waiting queue.

This can be understood as a producer-consumer model:

- Dispatcher -> producer (by default on the main thread)

- ExecutorService -> consumer

- readyAsyncCalls -> asynchronous waiting (cache) queue

- runningAsyncCalls -> asynchronous running queue

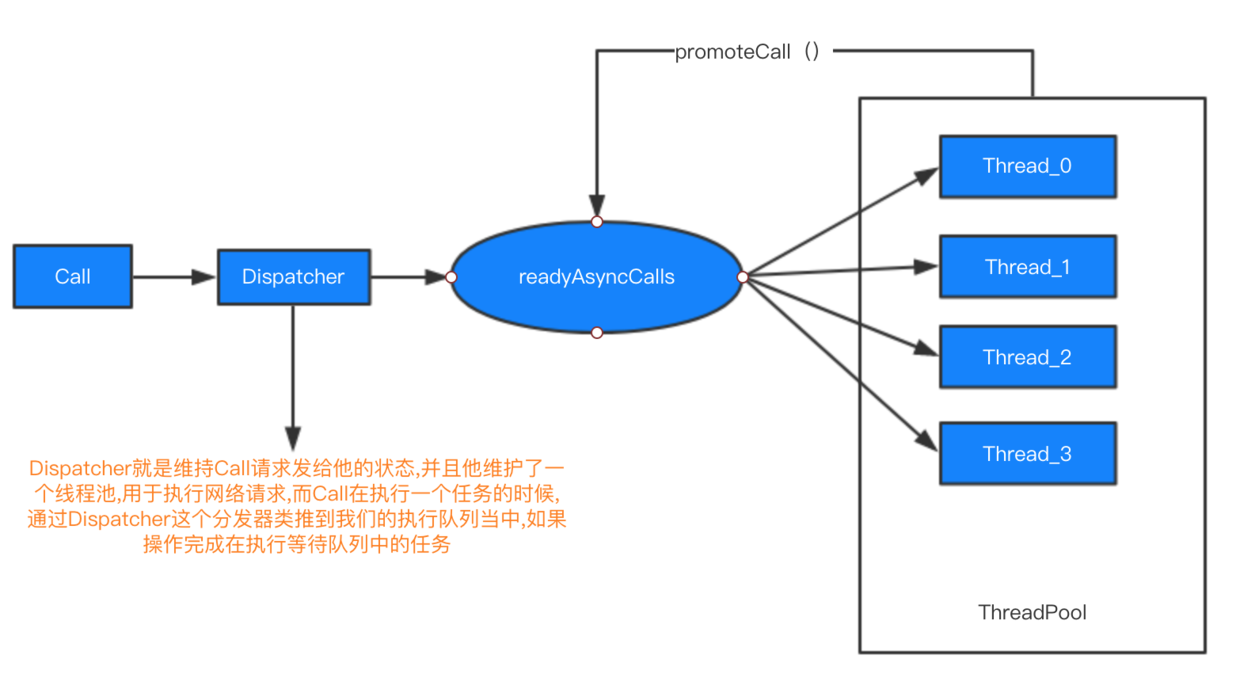

This makes the flow chart above easy to follow. When the client calls call.enqueue(callback), the Dispatcher dispatches the request. If the number of running asynchronous requests is below maxRequests and the number of running requests to the same host is below maxRequestsPerHost, the request is added to the asynchronous running queue and handed to the thread pool, which runs it on a worker thread. If either limit is reached, the request goes into the asynchronous waiting queue instead. Later, when a running call finishes, it is removed from the running queue and promoteCalls() is called, which moves the requests that are now allowed to run from the waiting queue into the running queue.
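The flow above can be modeled in a few dozen lines. The following is a minimal, single-threaded sketch, not OkHttp's code. It makes three simplifying assumptions. `MiniDispatcher` is a hypothetical class. A call is represented only by its host name. "Execution" is simulated by recording the host in a list instead of submitting to a thread pool.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

// Hypothetical model of the Dispatcher's two async queues; calls are just host names.
class MiniDispatcher {
  private final int maxRequests;
  private final int maxRequestsPerHost;
  final Deque<String> readyAsyncCalls = new ArrayDeque<>();   // waiting queue
  final Deque<String> runningAsyncCalls = new ArrayDeque<>(); // running queue
  final List<String> started = new ArrayList<>();             // stands in for executorService().execute(call)

  MiniDispatcher(int maxRequests, int maxRequestsPerHost) {
    this.maxRequests = maxRequests;
    this.maxRequestsPerHost = maxRequestsPerHost;
  }

  // Counts running calls that target the same host.
  private int runningCallsForHost(String host) {
    int result = 0;
    for (String h : runningAsyncCalls) {
      if (h.equals(host)) result++;
    }
    return result;
  }

  // Producer side: run now if both limits allow it, otherwise wait.
  synchronized void enqueue(String host) {
    if (runningAsyncCalls.size() < maxRequests && runningCallsForHost(host) < maxRequestsPerHost) {
      runningAsyncCalls.add(host);
      started.add(host);
    } else {
      readyAsyncCalls.add(host);
    }
  }

  // A running call completed: remove it, then promote waiting calls.
  synchronized void finished(String host) {
    if (!runningAsyncCalls.remove(host)) throw new AssertionError("Call wasn't in-flight!");
    promoteCalls();
  }

  private void promoteCalls() {
    if (runningAsyncCalls.size() >= maxRequests) return;
    if (readyAsyncCalls.isEmpty()) return;
    for (Iterator<String> i = readyAsyncCalls.iterator(); i.hasNext(); ) {
      String host = i.next();
      if (runningCallsForHost(host) < maxRequestsPerHost) {
        i.remove();
        runningAsyncCalls.add(host);
        started.add(host);
      }
      if (runningAsyncCalls.size() >= maxRequests) return;
    }
  }
}

class MiniDispatcherDemo {
  public static void main(String[] args) {
    MiniDispatcher d = new MiniDispatcher(64, 5);
    for (int i = 0; i < 6; i++) d.enqueue("a.com"); // the 6th call exceeds the per-host limit
    System.out.println(d.runningAsyncCalls.size() + " running, " + d.readyAsyncCalls.size() + " waiting"); // prints "5 running, 1 waiting"
    d.finished("a.com"); // frees a slot, so the waiting call is promoted
    System.out.println(d.runningAsyncCalls.size() + " running, " + d.readyAsyncCalls.size() + " waiting"); // prints "5 running, 0 waiting"
  }
}
```

The per-host limit of 5 is what sends the sixth `a.com` call to the waiting queue here. The global limit of 64 is far from being reached.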

For a synchronous request, calling execute() eventually calls the Dispatcher's executed() method:

    synchronized void executed(RealCall call) {
      runningSyncCalls.add(call);
    }

This method is simple: it just adds the call to the synchronous running queue. The request itself then runs on the calling thread, not in the thread pool.
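The bookkeeping for a synchronous call can be sketched as follows. `SyncDispatcher` and `SyncCall` are hypothetical names, and the request is modeled as a `Supplier` that runs on the caller's own thread. The key points are that the call is registered before the request runs and is always removed in a `finally` block.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Supplier;

// Hypothetical sketch of only the Dispatcher's bookkeeping for synchronous calls.
class SyncDispatcher {
  final Deque<Object> runningSyncCalls = new ArrayDeque<>();

  synchronized void executed(Object call) {
    runningSyncCalls.add(call);
  }

  synchronized void finished(Object call) {
    if (!runningSyncCalls.remove(call)) throw new AssertionError("Call wasn't in-flight!");
  }
}

// Hypothetical call whose "request" is a Supplier run on the caller's thread.
class SyncCall {
  private final SyncDispatcher dispatcher;
  private final Supplier<String> request;

  SyncCall(SyncDispatcher dispatcher, Supplier<String> request) {
    this.dispatcher = dispatcher;
    this.request = request;
  }

  String execute() {
    dispatcher.executed(this);   // record the call as running
    try {
      return request.get();      // blocks the calling thread; no thread pool involved
    } finally {
      dispatcher.finished(this); // always removed, even if the request throws
    }
  }
}

class SyncCallDemo {
  public static void main(String[] args) {
    SyncDispatcher dispatcher = new SyncDispatcher();
    String body = new SyncCall(dispatcher, () -> "response body").execute();
    System.out.println(body + ", still running: " + dispatcher.runningSyncCalls.size()); // prints "response body, still running: 0"
  }
}
```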

For an asynchronous request, calling enqueue() eventually runs the Dispatcher's enqueue() method:

    synchronized void enqueue(AsyncCall call) {
      if (runningAsyncCalls.size() < maxRequests && runningCallsForHost(call) < maxRequestsPerHost) {
        // Both limits allow it: the AsyncCall can run immediately
        runningAsyncCalls.add(call);
        executorService().execute(call);
      } else {
        // A limit has been reached: the call must wait in the ready queue
        readyAsyncCalls.add(call);
      }
    }

ExecutorService

    public synchronized ExecutorService executorService() {
      if (executorService == null) {
        // Core pool size 0, maximum Integer.MAX_VALUE, 60 s keep-alive for idle threads,
        // and a SynchronousQueue as the task queue: each task is handed straight to a thread,
        // and when there are no requests, all idle threads are terminated after 60 s.
        executorService = new ThreadPoolExecutor(0, Integer.MAX_VALUE, 60, TimeUnit.SECONDS,
            new SynchronousQueue<Runnable>(), Util.threadFactory("OkHttp Dispatcher", false));
      }
      return executorService;
    }

The Dispatcher's main job here is to schedule the running queue and the waiting queue. When a call in the running queue completes, calls from the waiting queue that fit within the limits are moved, in FIFO order, into the running queue and removed from the waiting queue. This keeps network requests flowing efficiently.
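To see what this pool configuration means in practice, here is a small experiment with the same `ThreadPoolExecutor` parameters. OkHttp's `Util.threadFactory` is internal, so `namedFactory` below is a hypothetical stand-in. The tasks block on a `CountDownLatch` to simulate in-flight requests.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class DispatcherPoolDemo {
  // Hypothetical stand-in for Util.threadFactory("OkHttp Dispatcher", false): named, non-daemon threads.
  static ThreadFactory namedFactory(String name) {
    return runnable -> {
      Thread t = new Thread(runnable, name);
      t.setDaemon(false);
      return t;
    };
  }

  // Same parameters as Dispatcher.executorService().
  static ThreadPoolExecutor newDispatcherPool() {
    return new ThreadPoolExecutor(0, Integer.MAX_VALUE, 60, TimeUnit.SECONDS,
        new SynchronousQueue<Runnable>(), namedFactory("OkHttp Dispatcher"));
  }

  // Submits `tasks` tasks that block until released, and returns the pool size observed meanwhile.
  static int poolSizeWhileBlocked(int tasks) {
    ThreadPoolExecutor pool = newDispatcherPool();
    CountDownLatch release = new CountDownLatch(1);
    for (int i = 0; i < tasks; i++) {
      pool.execute(() -> {
        try {
          release.await(); // keep the worker busy until released
        } catch (InterruptedException e) {
          Thread.currentThread().interrupt();
        }
      });
    }
    // The SynchronousQueue cannot hold tasks and no worker is idle,
    // so each execute() had to start a new thread.
    int size = pool.getPoolSize();
    release.countDown();
    pool.shutdown();
    try {
      pool.awaitTermination(5, TimeUnit.SECONDS);
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
    }
    return size;
  }

  public static void main(String[] args) {
    System.out.println(poolSizeWhileBlocked(3)); // prints 3
  }
}
```

Because this pool never queues or rejects work on its own, the real limits on asynchronous concurrency are the Dispatcher's maxRequests and maxRequestsPerHost checks.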

When a call finishes executing, it must be removed from the runningAsyncCalls queue.

- So when are the calls in the readyAsyncCalls queue executed?

For an asynchronous request, the AsyncCall (a Runnable) built earlier is put into a queue. Look at AsyncCall's execute() method, which plays the role of run(). Its parent class, NamedRunnable, implements run() itself and delegates the actual work to an abstract execute() method. The last statement executed in AsyncCall.execute() is client.dispatcher().finished(this). Following that call into the source, it ends up running the code below:

    // Dispatcher.finished(AsyncCall call) delegates to the generic finished():
    finished(runningAsyncCalls, call, true);

    // Inside finished(Deque<T> calls, T call, boolean promoteCalls):
    // first remove the current request from the running queue
    if (!calls.remove(call)) throw new AssertionError("Call wasn't in-flight!");
    // promoteCalls is always true for asynchronous requests
    if (promoteCalls) promoteCalls();
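The NamedRunnable, execute(), and finished() chain described above can be sketched as follows. This is a minimal sketch, assuming a simplified `NamedRunnable` that takes a plain name string. `SketchAsyncCall` is a hypothetical stand-in for AsyncCall whose `finally` block only logs where OkHttp would call `client.dispatcher().finished(this)`.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified shape of a NamedRunnable: run() is final and delegates to execute().
abstract class NamedRunnable implements Runnable {
  protected final String name;

  NamedRunnable(String name) {
    this.name = name;
  }

  @Override
  public final void run() {
    String oldName = Thread.currentThread().getName();
    Thread.currentThread().setName(name); // the worker thread is renamed while the call runs
    try {
      execute();
    } finally {
      Thread.currentThread().setName(oldName); // restore the original thread name
    }
  }

  protected abstract void execute();
}

// Hypothetical stand-in for AsyncCall.
class SketchAsyncCall extends NamedRunnable {
  final List<String> log;

  SketchAsyncCall(String name, List<String> log) {
    super(name);
    this.log = log;
  }

  @Override
  protected void execute() {
    try {
      log.add("request on " + Thread.currentThread().getName());
    } finally {
      log.add("finished"); // in OkHttp: client.dispatcher().finished(this)
    }
  }
}

class NamedRunnableDemo {
  public static void main(String[] args) throws InterruptedException {
    List<String> log = new ArrayList<>();
    Thread worker = new Thread(new SketchAsyncCall("OkHttp example call", log), "pool-thread");
    worker.start();
    worker.join(); // join() makes the worker's writes to log visible here
    System.out.println(log); // prints [request on OkHttp example call, finished]
  }
}
```

Putting finished() in a `finally` block guarantees the call leaves runningAsyncCalls even when the request fails, so a waiting call always gets a chance to be promoted.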

    // Adjust the asynchronous request queues
    private void promoteCalls() {
      // Is the number of running asynchronous requests already at the maximum?
      if (runningAsyncCalls.size() >= maxRequests) return; // Already running max capacity.
      // There is spare capacity; if the waiting queue is empty, there is nothing to promote
      if (readyAsyncCalls.isEmpty()) return; // No ready calls to promote.
      // Iterate over the waiting (ready) queue
      for (Iterator<AsyncCall> i = readyAsyncCalls.iterator(); i.hasNext(); ) {
        // Get the AsyncCall instance
        AsyncCall call = i.next();
        // Is the number of running calls to this call's host below the per-host limit?
        if (runningCallsForHost(call) < maxRequestsPerHost) {
          // If so, remove the AsyncCall from the waiting queue
          i.remove();
          // Add it to the running queue
          runningAsyncCalls.add(call);
          // Execute the request on the ExecutorService thread pool
          executorService().execute(call);
        }
        // Stop as soon as the global limit is reached
        if (runningAsyncCalls.size() >= maxRequests) return; // Reached max capacity.
      }
    }
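Note that the loop walks the waiting queue in FIFO order but skips, rather than stops at, a call whose host is already at maxRequestsPerHost. One busy host therefore does not block calls to other hosts. The sketch below illustrates this with a hypothetical static helper, `PromoteDemo.promote`, that mirrors the loop above, with calls represented as host-name strings.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

class PromoteDemo {
  // Hypothetical helper mirroring promoteCalls(); returns the hosts that were promoted.
  static List<String> promote(Deque<String> running, Deque<String> ready,
                              int maxRequests, int maxRequestsPerHost) {
    List<String> promoted = new ArrayList<>();
    if (running.size() >= maxRequests) return promoted;
    if (ready.isEmpty()) return promoted;
    for (Iterator<String> i = ready.iterator(); i.hasNext(); ) {
      String host = i.next();
      if (countHost(running, host) < maxRequestsPerHost) {
        i.remove();
        running.add(host);
        promoted.add(host); // OkHttp would call executorService().execute(call) here
      }
      if (running.size() >= maxRequests) return promoted;
    }
    return promoted;
  }

  // Counts running calls to the given host (the role of runningCallsForHost).
  static int countHost(Deque<String> running, String host) {
    int n = 0;
    for (String h : running) {
      if (h.equals(host)) n++;
    }
    return n;
  }

  public static void main(String[] args) {
    Deque<String> running = new ArrayDeque<>();
    for (int i = 0; i < 5; i++) running.add("a.com"); // a.com is at its per-host limit
    Deque<String> ready = new ArrayDeque<>(Arrays.asList("a.com", "b.com"));
    System.out.println(promote(running, ready, 64, 5)); // prints [b.com]
    System.out.println(ready); // prints [a.com]
  }
}
```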