Label

PostgreSQL, Internet of Things, Shared Charging Pool, Business Analysis System, Multi-table Association, Detailed Completion, Banning Association, ltree Tree Type, Parallel Computing, PostgreSQL 10 Black Technology, Sales Management

background

Share rechargeable treasures, bicycles, umbrellas, girlfriends, cars, etc. Shared economy has developed very rapidly in recent years.

Sharing must involve the management of shared objects, members and so on. In fact, it also belongs to an Internet of Things system.

This paper takes the scenario of shared charging treasure as an example to share the business analysis of shared charging treasure and the design of background database of sales management system. (The boss is concerned about the overall sales performance, as well as the perspective of various channels. The sales manager is concerned about the sales performance of the area under his jurisdiction, and the operation and maintenance personnel are concerned about the status of the equipment.)

Data Structure and Data Quantity

What is the business model?

Charging treasure can be seen in hotels, shopping malls, railway stations, foot baths and other places. Each rechargeable treasure will have a relatively fixed location (for example, in the grandmother's restaurant), each fixed location will have a relatively fixed sales (just like the protection fee of the ancient bamboo), and each sales has a fixed superior.

Users borrow charging treasure operation is very simple, users sweep code, order, borrow; some can not be borrowed, then sweep code, order, charge.

(In addition to charging business, in fact, we can also cooperate with merchants to do some user portraits, advertising and promotion business. Of course, the premise is user portraits.)

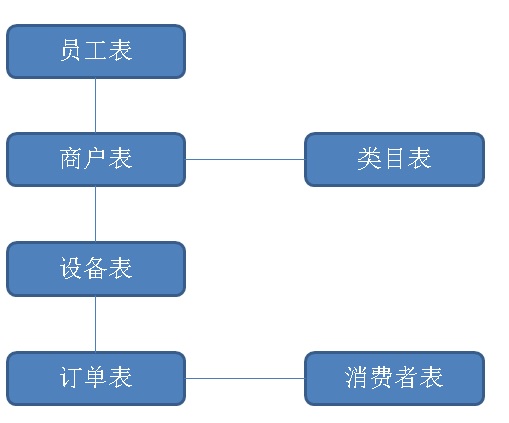

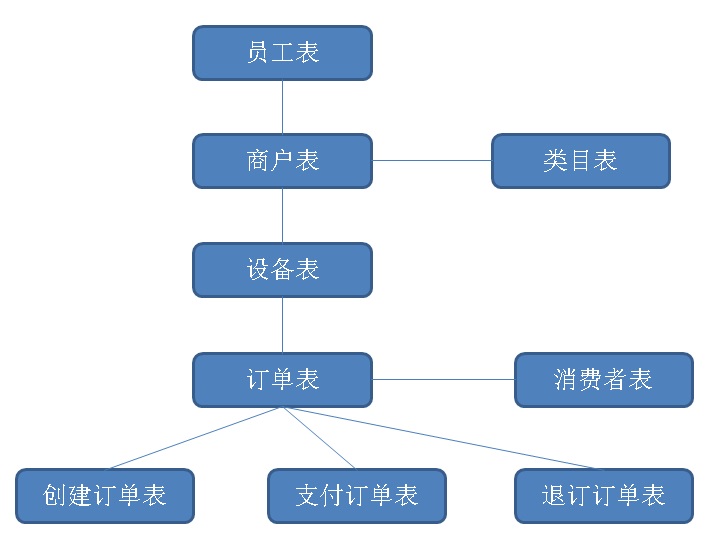

Data structure abstraction

1. Personnel table (BOSS, Sales Director, Store Manager).

Data volume prediction: 3000 +, rarely updated.

2. Category List (Foot Bath Shop, Hotel, Railway Station, Hotel.)

Data volume estimates: 100 +, rarely updated

3. Store List

Data Volume Prediction: Within Million Level, rarely updated

4. Equipment Table

Data Volume Estimation: Million-level, each device reports a heartbeat every N minutes

5. Order Form

Data Volume Estimation: Million level per day, insert and update each order at least once (create order, pay order, return order, etc.) and the order has final status.

II. Analysis of Needs

1. Real-time analysis requirements:

In addition, the perspective is based on the dimensions of day, month and year, as well as the overall situation, employees, employees at the first level, all employees, categories, stores, equipment and other dimensions.

2. Polymerization index:

The number of new equipment, online equipment, off-line equipment, new orders, turnover orders, refund orders, account flow and so on.

3. Time requirements:

There are statistical requirements for orders on the same day of inquiry, for uniform time points on the same day of inquiry and for the previous day, and they are calculated on a year-on-year basis. There are also monthly and annual needs.

4. Query concurrency:

Query concurrency in analysis systems is usually not too high, because it is used by others. It may not exceed 3000 in a minute.

5. Query timeliness:

Monthly and annual statistics are generated offline every day. (This is recommended, because there is no need to look at the business indicators in real time last month.)

Daily dimension statistics are generated in real time. (Daily data volume is not large, real-time generation, real-time query, can meet the needs of concurrency and response time. It also meets the needs of the business.

Response time requirements: tens of milliseconds.

Concurrent requirements: less than 100.

3. Database Selection

PostgreSQL 10: HTAP database, supporting 10TB OLTP and OLAP hybrid requirements. TP has strong performance and rich functions. Support multi-core parallel computing, HASH JOIN and a series of powerful functions, AP performance is also moderate.

HybridDB for PostgreSQL: PB level, pure analytical database, supporting multi-machine parallel computing. AP performance is strong, but TP performance is very weak.

For more details, please refer to:

Which scene to choose in the end? Let's do a DEMO design for both. Compare them.

PostgreSQL 10 Scheme 1

Design table structure

create table a ( -- Employee Hierarchy Information id int primary key, -- number ID nick name, -- Name pid int -- Superior ID ); create table c ( -- Category id int primary key, -- Category ID comment text -- Category name ); create table b ( -- Terminal store id int primary key, -- number nick text, -- Name cid int, -- Category aid int -- store manager ID ); create table d ( -- equipment id int primary key, -- Equipment number bid int, -- Shop number alive_ts timestamp -- Heart beat time of equipment ); create table log ( -- Order log did int, -- equipment ID state int2, -- Order Final Status crt_time timestamp, -- Order creation time mod_time timestamp -- Order revision time ) partition by range (crt_time); create table log_201701 partition of log for values from ('2017-01-01') to ('2017-02-01') with (parallel_workers =32); create table log_201702 partition of log for values from ('2017-02-01') to ('2017-03-01') with (parallel_workers =32); create table log_201703 partition of log for values from ('2017-03-01') to ('2017-04-01') with (parallel_workers =32); create table log_201704 partition of log for values from ('2017-04-01') to ('2017-05-01') with (parallel_workers =32); create table log_201705 partition of log for values from ('2017-05-01') to ('2017-06-01') with (parallel_workers =32); create table log_201706 partition of log for values from ('2017-06-01') to ('2017-07-01') with (parallel_workers =32); create table log_201707 partition of log for values from ('2017-07-01') to ('2017-08-01') with (parallel_workers =32); create table log_201708 partition of log for values from ('2017-08-01') to ('2017-09-01') with (parallel_workers =32); create table log_201709 partition of log for values from ('2017-09-01') to ('2017-10-01') with (parallel_workers =32); create table log_201710 partition of log for values from ('2017-10-01') to ('2017-11-01') with (parallel_workers =32); create table log_201711 partition of log for values from ('2017-11-01') to ('2017-12-01') with (parallel_workers =32); create table log_201712 partition of log for values from ('2017-12-01') to ('2018-01-01') with (parallel_workers =32); create table log_201801 partition of log for values from ('2018-01-01') to ('2018-02-01') with (parallel_workers =32); create index idx_log_201701_1 on log_201701 using btree (crt_time) ; create index idx_log_201702_1 on log_201702 using btree (crt_time) ; create index idx_log_201703_1 on log_201703 using btree (crt_time) ; create index idx_log_201704_1 on log_201704 using btree (crt_time) ; create index idx_log_201705_1 on log_201705 using btree (crt_time) ; create index idx_log_201706_1 on log_201706 using btree (crt_time) ; create index idx_log_201707_1 on log_201707 using btree (crt_time) ; create index idx_log_201708_1 on log_201708 using btree (crt_time) ; create index idx_log_201709_1 on log_201709 using btree (crt_time) ; create index idx_log_201710_1 on log_201710 using btree (crt_time) ; create index idx_log_201711_1 on log_201711 using btree (crt_time) ; create index idx_log_201712_1 on log_201712 using btree (crt_time) ; create index idx_log_201801_1 on log_201801 using btree (crt_time) ;

Initialization data

1. Initialize employee hierarchy (0 is the boss, 1-30 is the sales director, 31-3000 is the store manager). )

do language plpgsql $$ declare begin truncate a; insert into a select generate_series(0,3000); update a set pid=0 where id between 1 and 30; for i in 1..30 loop update a set pid=i where id between 31+100*(i-1) and 31+100*i-1; end loop; end; $$;

2. Initialization Category

insert into c select generate_series(1,100);

3. Initialization of stores

insert into b select generate_series(1,500000), '', ceil(random()*100), 30+ceil(random()*(3000-30));

4. Initialization equipment

insert into d select generate_series(1,1000000), ceil(random()*500000);

5. Generate one-year orders, about 365 million, and actually write 378 million (1 million orders per day, 90% payment, 10% refund)

do language plpgsql $$ declare s date := '2017-01-01'; e date := '2017-12-31'; begin for x in 0..(e-s) loop insert into log select ceil(random()*1000000), case when random()<0.1 then 0 else 1 end, s + x + (i||' second')::interval from generate_series(0,86399) t(i), generate_series(1,12); -- 12 It's a million days divided by 86400. It's mainly convenient to write test data. end loop; end; $$; postgres=# select count(*) from log; count ----------- 378432001 (1 row)

6. Index (Optional Operations, Optimized Items)

It is recommended that real-time data be indexed by btree, static data by BRIN block-level index and static data by BTREE index.

Example

When the order data becomes static historical data, delete the old btree index of the static table and add the following brin index.

create index idx_log_201701_1 on log_201701 using brin (crt_time) ; create index idx_log_201702_1 on log_201702 using brin (crt_time) ; create index idx_log_201703_1 on log_201703 using brin (crt_time) ; create index idx_log_201704_1 on log_201704 using brin (crt_time) ; create index idx_log_201705_1 on log_201705 using brin (crt_time) ; create index idx_log_201706_1 on log_201706 using brin (crt_time) ; create index idx_log_201707_1 on log_201707 using brin (crt_time) ; create index idx_log_201708_1 on log_201708 using brin (crt_time) ; create index idx_log_201709_1 on log_201709 using brin (crt_time) ; create index idx_log_201710_1 on log_201710 using brin (crt_time) ; create index idx_log_201711_1 on log_201711 using brin (crt_time) ; create index idx_log_201712_1 on log_201712 using brin (crt_time) ; create index idx_log_201801_1 on log_201801 using brin (crt_time) ;

Create the necessary UDF functions

1. Create immutable function to get the current time, the day before yesterday and the year before. (Using the immutable function, the optimizer filters partitions that do not need to be queried. If you want to support parallelism, set it to parallel safe.

create or replace function cdate() returns date as $$ select current_date; $$ language sql strict immutable PARALLEL safe; create or replace function cts(interval default '0') returns timestamp as $$ select (now() - $1)::timestamp; $$ language sql strict immutable PARALLEL safe;

Perspective SQL Design

By person, query all levels of subordinate, related stores, related equipment, related orders.

Output statistics:

1. Aggregate items:

Today's total order, today's payment order, year-on-year total order, year-on-year payment order, year-on-year total order, year-on-year payment order

Total orders closed in the same month, payment orders closed in the same month, total orders closed in the previous month, payment orders closed in the previous month

Total orders for the year ended, payment orders for the year ended, total orders for the year ended, payment orders for the year ended, and payment orders for the year ended.

2. Aggregation dimension:

Full volume, TOP

Category, TOP

Store, TOP

All subordinates, TOP

All subordinates, classes, TOP

All subordinates, stores, TOP

Store Manager, TOP

Store Manager, Category, TOP

Store Manager, Store, TOP

Examples of Perspective SQL Performance Indicators

1. Full fluoroscopy, 32 concurrent, 77 milliseconds.

select t1.cnt, t1.succ_cnt, t2.cnt, t2.succ_cnt from ( select count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() ) t1, ( select count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate()-1 and cts(interval '1 day') ) t2; cnt | succ_cnt | cnt | succ_cnt --------+----------+--------+---------- 796621 | 716974 | 796620 | 716930 (1 row) Time: 76.697 ms

2. Class TOP, 32 concurrent, 446 milliseconds.

select c.id, count(*) cnt, sum(state) succ_cnt from c join b on (c.id=b.cid) join d on (b.id=d.bid) join log on (d.id=log.did) where crt_time between cdate() and cts() group by c.id order by cnt desc limit 10; id | cnt | succ_cnt ----+------+---------- 39 | 8369 | 7543 70 | 8346 | 7517 64 | 8281 | 7488 13 | 8249 | 7412 29 | 8222 | 7427 3 | 8217 | 7370 90 | 8200 | 7387 79 | 8199 | 7346 71 | 8175 | 7348 75 | 8169 | 7373 (10 rows) Time: 446.977 ms

3. My total sales (including all subordinates), 464 milliseconds.

with recursive recursive grammar is used here. According to the ID of the current logged-in user, the tree queries all subordinates.

with recursive tmp as ( select * from a where id=31 -- Enter my USER ID union all select a.* from a join tmp on (a.pid=tmp.id) ) select count(*) cnt, sum(state) succ_cnt from tmp join b on (tmp.id=b.aid) join d on (b.id=d.bid) join log on (d.id=log.did) where crt_time between cdate() and cts() ; cnt | succ_cnt -----+---------- 296 | 268 (1 row) Time: 463.970 ms

4. My direct subordinate, TOP, 2.6 seconds.

with recursive recursive grammar is used here. According to the ID of the current logged-in user, the tree queries all subordinates.

Regular expressions are also used to aggregate direct subordinates in groups. Get their sales.

with recursive tmp as ( select id::text from a where id=0 -- Enter my USER ID union all select tmp.id||'.'||a.id as id from a join tmp on (a.pid=substring(tmp.id, '([\d]+)$')::int) ) select substring(tmp.id, '^[\d]*\.?([\d]+)'), count(*) cnt, sum(state) succ_cnt from tmp join b on (substring(tmp.id, '([\d]+)$')::int=b.aid) join d on (b.id=d.bid) join log on (d.id=log.did) where crt_time between cdate() and cts() group by 1 order by cnt desc limit 10 ; substring | cnt | succ_cnt -----------+-------+---------- 15 | 27341 | 24615 19 | 27242 | 24500 17 | 27190 | 24481 26 | 27184 | 24481 9 | 27179 | 24466 3 | 27157 | 24323 6 | 27149 | 24481 1 | 27149 | 24402 21 | 27141 | 24473 12 | 27140 | 24439 (10 rows) Time: 2661.556 ms (00:02.662)

5. All my subordinates (recursive), TOP, 642 milliseconds.

with recursive recursive grammar is used here. According to the ID of the current logged-in user, the tree queries all subordinates.

with recursive tmp as ( select * from a where id=30 -- Enter my USER ID union all select a.* from a join tmp on (a.pid=tmp.id) ) select tmp.id, count(*) cnt, sum(state) succ_cnt from tmp join b on (tmp.id=b.aid) join d on (b.id=d.bid) join log on (d.id=log.did) where crt_time between cdate() and cts() group by tmp.id order by cnt desc limit 10 ; id | cnt | succ_cnt ------+-----+---------- 2996 | 385 | 353 2969 | 339 | 301 2935 | 335 | 312 2936 | 332 | 304 2988 | 326 | 290 2986 | 321 | 295 2960 | 319 | 293 2964 | 313 | 276 2994 | 309 | 268 2975 | 308 | 276 (10 rows) Time: 641.719 ms

5. PostgreSQL 10 Scheme Design 2 - Limit Optimization

Optimal Point Analysis of Scheme 1

As you can see, although parallel is used, the efficiency of some perspective queries is not as high as 100 milliseconds.

The main consumption is at the JOIN level. Although hashing JOIN has been done in parallel, the next optimization method is wonderful. It can automatically fill up the real upstream information (ltree, categories, stores, etc.) when the order is written.

After completing the information, the perspective without JOIN can be realized.

How to make it up?

When completing, the sales staff must include all hierarchical relationships, so we chose the PostgreSQL ltree tree type to store this relationship.

When writing an order, it can be automatically filled according to the device number (user ID(ltree), category and store) by trigger.

1. Creating Tree Types

create extension ltree;

2. Create composite types, including tree, category, store information.

create type ntyp as (lt ltree, cid int, bid int);

Adding new fields to the order form

alter table log add column addinfo ntyp;

3. Create materialized view 1 to store real-time employee structure. After materialization, there is no need to query recursively.

CREATE MATERIALIZED VIEW mv1 as select id, ( with recursive tmp as ( select id::text as path from a where id=t.id union all select a.pid||'.'||tmp.path as path from a join tmp on (a.id=substring(tmp.path, '^([\d]+)')::int) ) select * from tmp order by length(path) desc nulls last limit 1 ) from a as t;

3.1. Create UK

create unique index mv1_uk1 on mv1 (id);

3.2. Refresh the new method, when the employee structure changes, just brush it. The refresh speed is very fast.

refresh materialized view CONCURRENTLY mv1;

4. Create materialized view 2, real-time device complement value (category and store ID). After physicalization, categories and stores can be taken out directly by means of equipment number.

CREATE MATERIALIZED VIEW mv2 as select a.id as aid, c.id as cid, b.id as bid, d.id as did from a join b on (a.id=b.aid) join c on (c.id=b.cid) join d on (d.bid=b.id) ;

4.1. Create UK

create unique index mv2_uk1 on mv2(did);

4.2. Incrementally refresh the materialized view. When the relationship between equipment and stores or categories changes, refresh it. The refresh speed is very fast.

refresh materialized view CONCURRENTLY mv2;

5. Create a function to get the information of the device number by the device number: (user ID(ltree), category, store)

create or replace function gen_res (vdid int) returns ntyp as $$ select (mv1.path, mv2.cid, mv2.bid)::ntyp from mv1 join mv2 on (mv1.id=mv2.aid) where mv2.did=vdid; $$ language sql strict;

7. Create triggers for order forms and automatically complete relationships (equipment - > Stores - > categories and sales - > hierarchical relationships)

create or replace function tg() returns trigger as $$ declare begin NEW.addinfo := gen_res(NEW.did); return NEW; end; $$ language plpgsql strict; create trigger tg before insert on log_201701 for each row execute procedure tg(); create trigger tg before insert on log_201702 for each row execute procedure tg(); create trigger tg before insert on log_201703 for each row execute procedure tg(); create trigger tg before insert on log_201704 for each row execute procedure tg(); create trigger tg before insert on log_201705 for each row execute procedure tg(); create trigger tg before insert on log_201706 for each row execute procedure tg(); create trigger tg before insert on log_201707 for each row execute procedure tg(); create trigger tg before insert on log_201708 for each row execute procedure tg(); create trigger tg before insert on log_201709 for each row execute procedure tg(); create trigger tg before insert on log_201710 for each row execute procedure tg(); create trigger tg before insert on log_201711 for each row execute procedure tg(); create trigger tg before insert on log_201712 for each row execute procedure tg(); create trigger tg before insert on log_201801 for each row execute procedure tg();

8, effect

postgres=# insert into log values (1,1,now()); INSERT 0 1 postgres=# select * from log_201709 where did=1; did | state | crt_time | mod_time | addinfo -----+-------+----------------------------+----------+----------------------- 1 | 1 | 2017-09-23 16:58:47.736402 | | (0.17.1702,60,417943)

9. Revise the old data, fill in the empty record of the device number (user ID(ltree), category, store) (for example, at some time, the device number is new, has not been refreshed to MV1, MV2).

update log set addinfo=gen_res(did) where addinfo is null;

Completed data perspective (completely avoiding JOIN), open parallel, fast thieves

1. Full amount (unchanged, performance bar), 74 milliseconds.

select t1.cnt, t1.succ_cnt, t2.cnt, t2.succ_cnt from ( select count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() ) t1, ( select count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate()-1 and cts(interval '1 day') ) t2; cnt | succ_cnt | cnt | succ_cnt --------+----------+--------+---------- 836965 | 753286 | 836964 | 753178 (1 row) Time: 74.205 ms

2. Category TOP, 41 milliseconds.

postgres=# select (log.addinfo).cid, count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() group by (log.addinfo).cid order by cnt desc limit 10; cid | cnt | succ_cnt -----+------+---------- 70 | 8796 | 7919 39 | 8793 | 7930 64 | 8700 | 7863 13 | 8659 | 7777 29 | 8621 | 7787 71 | 8613 | 7739 79 | 8613 | 7719 3 | 8597 | 7714 75 | 8590 | 7747 90 | 8579 | 7725 (10 rows) Time: 41.221 ms

3. My total sales (including all subordinates), 41 milliseconds

select count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() and (log.addinfo).lt ~ '*.1.*' -- seek USER ID = 1 Total sales (including all subordinates) ; cnt | succ_cnt -------+---------- 28502 | 25627 (1 row) Time: 41.065 ms

4. My direct subordinate, TOP

BOSS View, 111 milliseconds.

select substring(((log.addinfo).lt)::text, '\.?(0\.?[\d]*)'), -- USER ID = 0 Direct subordinates, please use the input user ID replace count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() and (log.addinfo).lt ~ '*.0.*' -- USER ID = 0,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; substring | cnt | succ_cnt -----------+-------+---------- 0.19 | 28656 | 25756 0.15 | 28655 | 25792 0.26 | 28560 | 25721 0.1 | 28548 | 25668 0.9 | 28545 | 25701 0.6 | 28506 | 25706 0.12 | 28488 | 25646 0.17 | 28485 | 25652 0.21 | 28469 | 25665 0.3 | 28459 | 25486 (10 rows) Time: 111.221 ms

Level 1 Sales Manager Perspective, 41 milliseconds

select substring(((log.addinfo).lt)::text, '\.?(1\.?[\d]*)'), -- USER ID = 1 Direct subordinates, please use the input user ID replace count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() and (log.addinfo).lt ~ '*.1.*' -- USER ID = 1,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; substring | cnt | succ_cnt -----------+-----+---------- 1.120 | 368 | 320 1.59 | 367 | 331 1.54 | 357 | 316 1.93 | 344 | 313 1.80 | 342 | 306 1.37 | 338 | 305 1.64 | 334 | 298 1.90 | 329 | 299 1.66 | 327 | 296 1.109 | 326 | 293 (10 rows) Time: 41.276 ms

5. All my subordinates (recursion), TOP

BOSS perspective (TOP for all end sales), 231 milliseconds.

select (log.addinfo).lt, -- All subordinates(recursion) count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() and (log.addinfo).lt ~ '*.0.*' -- USER ID = 0,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; lt | cnt | succ_cnt -----------+-----+---------- 0.30.2996 | 405 | 371 0.28.2796 | 402 | 350 0.21.2093 | 393 | 347 0.3.234 | 391 | 356 0.14.1332 | 381 | 347 0.13.1283 | 381 | 344 0.19.1860 | 380 | 347 0.16.1553 | 380 | 341 0.28.2784 | 377 | 346 0.7.672 | 377 | 347 (10 rows) Time: 230.630 ms

Level 1 Sales Manager Perspective, 41 milliseconds

select (log.addinfo).lt, -- All subordinates(recursion) count(*) cnt, sum(state) succ_cnt from log where crt_time between cdate() and cts() and (log.addinfo).lt ~ '*.1.*' -- USER ID = 1,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; lt | cnt | succ_cnt ---------+-----+---------- 0.1.59 | 367 | 331 0.1.120 | 367 | 320 0.1.54 | 355 | 315 0.1.93 | 344 | 313 0.1.80 | 341 | 305 0.1.37 | 338 | 305 0.1.64 | 334 | 298 0.1.90 | 328 | 298 0.1.66 | 327 | 296 0.1.109 | 325 | 293 (10 rows) Time: 41.558 ms

Benefits of replenishing order addinfo information

1. When the personnel structure, categories and stores change, the business side should decide whether it is necessary to revise the data in the order (user ID(ltree), categories and stores).

2. Actually, the original method is problematic, such as the equipment laid by manager A. After a month, the person in charge changes. When statistics are made, if the real-time JOIN is used, the order involving last month will be put on the head of the new person in charge, but there are obvious errors.

3. Feeling or filling up the method is more precise, who is who is who, will not make a mistake (mistake sales can be serious, affecting people's performance.)

Summary of PostgreSQL 10

What PostgreSQL database features are used?

1. Recursive Query

2. Parallel Query

3. JOIN Method

4. Inheritance (partition table)

5. Triggers

6. Compound Types

7. ltree tree type

https://www.postgresql.org/docs/9.6/static/ltree.html

7. Greenplum

Greenplum scheme 1

Note that Greenplum's TP capability is weak as mentioned earlier. If the device heartbeat is updated in real time, orders are written in real time, and updates in real time, it may not be able to withstand the pressure. (At present, green plum update, delete are all full-table locks, very large locks.)

Therefore, it is important to note in the design that the heartbeat of the device is updated in batches (e.g. from the TP database, exporting the full amount to Greenplum every few minutes). Insert the order updates (through RULE).

Table Structure Design

create table a ( -- Employee Hierarchy Information id int primary key, -- number ID nick name, -- Name pid int -- Superior ID ) DISTRIBUTED BY(id); create table c ( -- Category id int primary key, -- Category ID comment text -- Category name ) DISTRIBUTED BY(id); create table b ( -- Terminal store id int primary key, -- number nick text, -- Name cid int, -- Category aid int -- store manager ID ) DISTRIBUTED BY(id); create table d ( -- equipment id int primary key, -- Equipment number bid int, -- Shop number alive_ts timestamp -- Heart beat time of equipment ) DISTRIBUTED BY(id); create table log1 ( -- Order log, create order did int, -- equipment ID state int2, -- Order Final Status crt_time timestamp, -- Order creation time mod_time timestamp -- Order revision time ) DISTRIBUTED BY(did) PARTITION BY range (crt_time) (start (date '2017-01-01') inclusive end (date '2018-01-01') exclusive every (interval '1 month')); create table log2 ( -- Order log, final status did int, -- equipment ID state int2, -- Order Final Status crt_time timestamp, -- Order creation time mod_time timestamp -- Order revision time ) DISTRIBUTED BY(did) PARTITION BY range (crt_time) (start (date '2017-01-01') inclusive end (date '2018-01-01') exclusive every (interval '1 month')); -- Create rules, update to insert create rule r1 as on update to log1 do instead insert into log2 values (NEW.*);

Test heartbeat meter import speed

Importing 1 million device data takes about 1 second.

date +%F%T;psql -c "copy d to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy d from stdin"; date +%F%T; 2017-09-2319:42:22 COPY 1000000 2017-09-2319:42:23

Test order writing speed

Note that all write operations are recommended for batch operation.

Batch writing is about 870,000 lines/s.

date +%F%T; psql -c "copy (select did,state,crt_time,mod_time from log) to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy log1 from stdin"; date +%F%T; 2017-09-2320:04:44 COPY 378432001 2017-09-2320:12:03

Data import

psql -c "copy a to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy a from stdin" psql -c "copy b to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy b from stdin" psql -c "copy c to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy c from stdin" # psql -c "copy d to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy d from stdin" # psql -c "copy (select * from log) to stdout"|psql -h 127.0.0.1 -p 15432 -U digoal postgres -c "copy log1 from stdin"

Perspective of SQL Testing

1. Full perspective, 610 milliseconds.

select t1.cnt, t1.succ_cnt, t2.cnt, t2.succ_cnt from ( select count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') ) t1, ( select count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate()-1 and cts(interval '1 day') ) t2; cnt | succ_cnt | cnt | succ_cnt --------+----------+--------+---------- 876301 | 788787 | 876300 | 788564 (1 row) Time: 609.801 ms

2. Category TOP, 219 milliseconds.

select c.id, count(*) cnt, sum(state) succ_cnt from c join b on (c.id=b.cid) join d on (b.id=d.bid) join log1 on (d.id=log1.did) where crt_time between cdate() and cts(interval '0') group by c.id order by cnt desc limit 10; id | cnt | succ_cnt ----+------+---------- 70 | 9220 | 8311 39 | 9197 | 8303 64 | 9096 | 8220 79 | 9034 | 8095 13 | 9033 | 8114 29 | 9033 | 8151 75 | 9033 | 8148 3 | 9005 | 8084 71 | 9002 | 8098 90 | 8974 | 8079 (10 rows) Time: 218.695 ms

3. My total sales (including all subordinates), 208 milliseconds.

Returns all subordinates and the current user ID.

create or replace function find_low(int) returns int[] as $$ declare res int[] := array[$1]; tmp int[] := res; begin loop select array_agg(id) into tmp from a where pid = any (tmp); res := array_cat(res,tmp); if tmp is null then exit; end if; end loop; return res; end; $$ language plpgsql strict;

select count(*) cnt, sum(state) succ_cnt from (select unnest(find_low(31)) as id) as tmp join b on (tmp.id=b.aid) join d on (b.id=d.bid) join log1 on (d.id=log1.did) where crt_time between cdate() and cts(interval '0') ; cnt | succ_cnt -----+---------- 342 | 312 (1 row) Time: 208.585 ms

4. My direct subordinate, TOP.

Greenplum does not support recursive syntax for the time being and needs to customize UDF implementations.

5. All my subordinates (recursive), TOP.

Greenplum does not support recursive syntax for the time being and needs to customize UDF implementations.

Greenplus Scheme 2

Like PostgreSQL Solution 2, it materializes the data of "devices corresponding to stores, categories, sales, sales and all his superiors".

Dead work:

1. New fields

alter table log1 add column aid int; alter table log1 add column path text; alter table log1 add column cid int; alter table log1 add column bid int; alter table log2 add column aid int; alter table log2 add column path text; alter table log2 add column cid int; alter table log2 add column bid int;

2. Modify the rule defined before, the update of business is converted to INSERT, and the update operation of batch order completion is not converted.

drop rule r1 on log1; create rule r1 as on update to log1 where (NEW.aid is null) do instead insert into log2 values (NEW.*);

Materialization

1. Physical View 1: Equipment - > Stores - > Categories - > Sales

Create materialized view mv1:

create table mv1 (did int, bid int, cid int, aid int) distributed by (did); create index idx_mv1_did on mv1(did);

Initialize materialized view mv1:

insert into mv1 select d.id as did, b.id as bid, c.id as cid, a.id as aid from d join b on (d.bid=b.id) join c on (b.cid=c.id) join a on (a.id=b.aid);

Refresh materialized view mv1:

begin; update mv1 set bid=t1.bid , cid=t1.cid , aid=t1.aid from ( select d.id as did, b.id as bid, c.id as cid, a.id as aid from d join b on (d.bid=b.id) join c on (b.cid=c.id) join a on (a.id=b.aid) ) t1 where mv1.did=t1.did and (t1.bid<>mv1.bid or t1.cid<>mv1.cid or t1.aid<>mv1.aid); insert into mv1 select t1.* from ( select d.id as did, b.id as bid, c.id as cid, a.id as aid from d join b on (d.bid=b.id) join c on (b.cid=c.id) join a on (a.id=b.aid) ) t1 left join mv1 on (t1.did=mv1.did) where mv1.* is null; end; vacuum mv1;

2. Physical View 2: Sales - > Sales and all his superiors

Create functions that return sales and all of his superiors

create or replace function find_high(int) returns text as $$ declare res text := $1; tmp text := res; begin loop select pid into tmp from a where id = tmp::int; if tmp is null then exit; end if; res := tmp||'.'||res; end loop; return res; end; $$ language plpgsql strict;

Without recursive grammar, Greenplum's function call efficiency is not high:

postgres=# select find_high(id) from generate_series(100,110) t(id); find_high ----------- 0.1.100 0.1.101 0.1.102 0.1.103 0.1.104 0.1.105 0.1.106 0.1.107 0.1.108 0.1.109 0.1.110 (11 rows) Time: 1472.435 ms //The same operation in PostgreSQL takes only 0.5 milliseconds: postgres=# select find_high(id) from generate_series(100,110) t(id); find_high ----------- 0.1.100 0.1.101 0.1.102 0.1.103 0.1.104 0.1.105 0.1.106 0.1.107 0.1.108 0.1.109 0.1.110 (11 rows) Time: 0.524 ms

Verification

postgres=# select find_high(1); find_high ----------- 0.1 (1 row) postgres=# select find_high(0); find_high ----------- 0 (1 row) postgres=# select find_high(100); find_high ----------- 0.1.100 (1 row)

Create materialized view mv2

create table mv2 (aid int, path text) distributed by (aid); create index idx_mv2_did on mv2(aid);

Initialize and refresh materialized view mv2

-- GP It is simple to not support such an operation: insert into mv2 select id, find_high(id) from a; postgres=# select id, find_high(id) from a; ERROR: function cannot execute on segment because it accesses relation "postgres.a" (functions.c:155) (seg1 slice1 tb2a07543.sqa.tbc:25433 pid=106586) (cdbdisp.c:1328) DETAIL: SQL statement "select pid from a where id = $1 " PL/pgSQL function "find_high" line 7 at SQL statement

Create function

create or replace function refresh_mv2() returns void as $$ declare aid int[]; begin select array_agg(id) into aid from a; delete from mv2; insert into mv2 select id, find_high(id) from unnest(aid) t(id); end; $$ language plpgsql strict;

Call function refresh mv2, time is basically unacceptable.

select refresh_mv2();

PS: The proposed program generates this part of the staff tree structure data. Then insert it into GPDB. Because there are only 3001. Or you can generate it in PostgreSQL. PG is so convenient.

Revised order

Scheduling tasks, batch updates:

update log1 set aid=t1.aid, path=t1.path, cid=t1.cid, bid=t1.bid from ( select did, bid, cid, mv1.aid, mv2.path from mv1 join mv2 on (mv1.aid=mv2.aid) ) t1 where log1.did=t1.did and log1.aid is null; UPDATE 378432001 update log2 set aid=t1.aid, path=t1.path, cid=t1.cid, bid=t1.bid from ( select did, bid, cid, mv1.aid, mv2.path from mv1 join mv2 on (mv1.aid=mv2.aid) ) t1 where log2.did=t1.did and log2.aid is null; UPDATE 378432001

Perspective query

1. Full fluoroscopy, 205 milliseconds.

select t1.cnt, t1.succ_cnt, t2.cnt, t2.succ_cnt from ( select count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') ) t1, ( select count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate()-1 and cts(interval '1 day') ) t2; cnt | succ_cnt | cnt | succ_cnt --------+----------+--------+---------- 480228 | 432151 | 480228 | 432205 (1 row) Time: 205.436 ms

2. Category TOP, 254 milliseconds.

select c.id, count(*) cnt, sum(state) succ_cnt from c join b on (c.id=b.cid) join d on (b.id=d.bid) join log1 on (d.id=log1.did) where crt_time between cdate() and cts(interval '0') group by c.id order by cnt desc limit 10; id | cnt | succ_cnt ----+------+---------- 64 | 5052 | 4555 29 | 4986 | 4483 34 | 4982 | 4509 70 | 4968 | 4466 71 | 4964 | 4491 5 | 4953 | 4474 79 | 4937 | 4454 63 | 4936 | 4420 66 | 4934 | 4436 18 | 4922 | 4417 (10 rows) Time: 254.007 ms

3. My total sales (including all subordinates), 110 milliseconds.

select count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') and (path like '1.%' or path like '%.1' or path like '%.1.%') -- seek USER ID = 1 Total sales (including all subordinates) ; cnt | succ_cnt -------+---------- 16605 | 14964 (1 row) Time: 110.396 ms

4. My direct subordinate, TOP.

BOSS View, 180 milliseconds.

set escape_string_warning TO off; select substring(path, '\.?(0\.?[0-9]*)'), -- USER ID = 0 Direct subordinates, please use the input user ID replace count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') and (path like '0.%' or path like '%.0' or path like '%.0.%') -- USER ID = 0,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; substring | cnt | succ_cnt -----------+-------+---------- 0.3 | 17014 | 15214 0.15 | 17006 | 15285 0.11 | 16958 | 15285 0.22 | 16901 | 15231 0.19 | 16887 | 15217 0.21 | 16861 | 15160 0.6 | 16841 | 15075 0.9 | 16831 | 15123 0.26 | 16787 | 15060 0.14 | 16777 | 15048 (10 rows) Time: 179.950 ms

Level 1 Sales Manager Perspective, 176 milliseconds

select substring(path, '\.?(1\.?[0-9]*)'), -- USER ID = 1 Direct subordinates, please use the input user ID replace count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') and (path like '1.%' or path like '%.1' or path like '%.1.%') -- USER ID = 1,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; substring | cnt | succ_cnt -----------+-----+---------- 1.120 | 222 | 202 1.54 | 218 | 193 1.92 | 217 | 192 1.51 | 209 | 187 1.93 | 206 | 181 1.53 | 203 | 182 1.59 | 203 | 187 1.37 | 202 | 188 1.82 | 197 | 177 1.66 | 196 | 180 (10 rows) Time: 176.298 ms

5. All my subordinates (recursive), TOP.

BOSS perspective (TOP for all end sales), 155 milliseconds.

select path, -- All subordinates(recursion) count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') and (path like '0.%' or path like '%.0' or path like '%.0.%') -- USER ID = 0,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; path | cnt | succ_cnt -----------+-----+---------- 0.5.482 | 261 | 229 0.28.2796 | 248 | 229 0.24.2348 | 242 | 225 0.13.1318 | 240 | 213 0.21.2093 | 237 | 211 0.26.2557 | 235 | 210 0.4.346 | 233 | 205 0.30.2935 | 231 | 214 0.14.1332 | 229 | 205 0.26.2620 | 229 | 204 (10 rows) Time: 155.268 ms

First-level Sales Manager Perspective, 151 milliseconds

select path, -- All subordinates(recursion) count(*) cnt, sum(state) succ_cnt from log1 where crt_time between cdate() and cts(interval '0') and (path like '1.%' or path like '%.1' or path like '%.1.%') -- USER ID = 1,Please use the input user ID Replace. group by 1 -- The first field is grouping order by cnt desc limit 10 ; path | cnt | succ_cnt ---------+-----+---------- 0.1.120 | 222 | 202 0.1.92 | 218 | 193 0.1.54 | 218 | 193 0.1.51 | 209 | 187 0.1.93 | 207 | 182 0.1.59 | 204 | 187 0.1.53 | 203 | 182 0.1.37 | 202 | 188 0.1.82 | 198 | 178 0.1.66 | 196 | 180 (10 rows) Time: 150.883 ms

Summary of Greenplum

1. The use of Greenplum requires attention to data skew, so please refer to the selection of distribution keys:

2. Greenplum does not support recursive grammar for the time being, so UDF is needed to implement operations like finding all subordinates or completing all subordinates.

3. Scheme 2 of Greenplum. The key point is materialized view and filling (in fact, it doesn't matter if it is not filled in the order), as long as a table (equipment number - > store - > category and employee hierarchy) is generated, it will be much more convenient to query.

4. The delete and update operations of Greenplum lock the whole table and block the insert, delete and update operations of other tables. No blocking queries. Special attention is needed.

5. Batch updating is adopted for order replenishment.

Nine, summary

For this example, it is recommended to use PostgreSQL 10 (especially when the future volume is going to be 100 TB, migrating to PolarDB for PostgreSQL will be particularly convenient and fully compatible.) In terms of performance, both TP and AP meet the requirements. Functional aspects also fully meet the needs, and there are many features that can be used to enhance the user experience:

If you want to use the Greenplum(HybridDB for PostgreSQL) solution, it is recommended that you still use a design method similar to PostgreSQL 10 solution 2 (order completion is implemented using rules, or batch update).

1. Recursive query, which is used to retrieve tree-structured data, such as employee hierarchy, schema search, etc.

2. Parallel query can effectively utilize the ability of multiple CPU s, which is similar to the enlargement in the game, and accelerate the query.

3. JOIN methods, including hash, merge, nestloop and other JOIN methods, can handle any complex JOIN.

4. Inheritance (partition table), order partition by time.

5. Flip-flop, which is used to complete orders automatically.

6. Complete the information of "Equipment - > Store - > Category and Employee Hierarchy".

7. ltree tree type, store the completed employee-supervisor relationship.

https://www.postgresql.org/docs/9.6/static/ltree.html

8. Physical view, which is used to complement the employee rank. One-click refresh does not require business to handle complex personnel change logic. It also facilitates the realization of perspective analysis statements.

9. Regular expressions are used in the regular matching of ltree, such as aggregation by direct subordinate group, aggregation by current login user group, etc.

10. and many features not used in this scheme (such as SQL stream computing, oss_ext object storage external tables, etc.).

Next, Aliyun will launch PolarDB for PostgreSQL, 100TB level, shared storage, one-write multi-read architecture. AWS Aurora and Oracle RAC.

11. The real-time perspective QUERY performance comparison of three schemes (the same hardware resources, 32C):

| programme | use case | response time |

|---|---|---|

| PostgreSQL 10 Scheme 1 | Full perspective | 77 milliseconds |

| PostgreSQL 10 Scheme 1 | Category TOP | 446 milliseconds |

| PostgreSQL 10 Scheme 1 | My total sales (including all subordinates) | 464 milliseconds |

| PostgreSQL 10 Scheme 1 | My immediate subordinate, TOP | 2.6 seconds |

| PostgreSQL 10 Scheme 1 | All my subordinates (recursive), TOP | 642 milliseconds |

| - | - | - |

| PostgreSQL 10 Scheme 2 | Full perspective | 74 milliseconds |

| PostgreSQL 10 Scheme 2 | Category TOP | 41 milliseconds |

| PostgreSQL 10 Scheme 2 | My total sales (including all subordinates) | 41 milliseconds |

| PostgreSQL 10 Scheme 2 | My immediate subordinate, TOP | 41 milliseconds |

| PostgreSQL 10 Scheme 2 | All my subordinates (recursive), TOP | 41 milliseconds |

| - | - | - |

| Greenplum scheme 1 | Full perspective | 610 milliseconds |

| Greenplum scheme 1 | Category TOP | 219 milliseconds |

| Greenplum scheme 1 | My total sales (including all subordinates) | 208 milliseconds |

| Greenplum scheme 1 | My immediate subordinate, TOP | No recursive, untested support |

| Greenplum scheme 1 | All my subordinates (recursive), TOP | No recursive, untested support |

| - | - | - |

| Greenplus Scheme 2 | Full perspective | 205 milliseconds |

| Greenplus Scheme 2 | Category TOP | 254 milliseconds |

| Greenplus Scheme 2 | My total sales (including all subordinates) | 110 milliseconds |

| Greenplus Scheme 2 | My immediate subordinate, TOP | 176 milliseconds |

| Greenplus Scheme 2 | All my subordinates (recursive), TOP | 151 milliseconds |

12. The differences between Greenplum and PostgreSQL products and how to select them can be referred to:

Chapter: The features and selection guidance of Greenplum and PostgreSQL products.