Label

PostgreSQL, 10.0, Time Measurement Standby Delay, pg_stat_replication

background

Four fields in the pg_stat_replication view record WAL loci fed back from the standby. As follows:

postgres=# \d pg_stat_replication View "pg_catalog.pg_stat_replication" Column | Type | Modifiers ------------------+--------------------------+----------- pid | integer | usesysid | oid | usename | name | application_name | text | client_addr | inet | client_hostname | text | client_port | integer | backend_start | timestamp with time zone | backend_xmin | xid | state | text | sent_location | pg_lsn | -- Up to the date that the main library has been sent LSN position write_location | pg_lsn | -- The library has already been built. WRITE Of LSN position flush_location | pg_lsn | -- Standby has been persistent LSN position replay_location | pg_lsn | -- The library has already been built. APPLY Of LSN position sync_priority | integer | sync_state | text |

By calculating, we can get the delay (WAL bytes) of the current backup and main libraries in four dimensions.

pg_current_xlog_insert_location -- Get the WAL insertion site of the current database pg_xlog_location_diff -- Calculating the Gap between Two WAL LSN Loci -- SQL is as follows, calculating the delay of the main standby select pg_size_pretty(pg_xlog_location_diff(pg_current_xlog_insert_location(), sent_location)) from pg_stat_replication; For queries in other dimensions, change send_location.

Using the above method, the latency that can be queried is feedback in byte form.

So there is no good way to convert to time form at present, unless the corresponding LSN RECORD time information is read from the WAL file.

10.0 will add several fields to the pg_stat_replication view to represent time delays (write_lag, flush_lag and replace_lag).

Replication lag tracking for walsenders Adds write_lag, flush_lag and replay_lag cols to pg_stat_replication. Implements a lag tracker module that reports the lag times based upon measurements of the time taken for recent WAL to be written, flushed and replayed and for the sender to hear about it. These times represent the commit lag that was (or would have been) introduced by each synchronous commit level, if the remote server was configured as a synchronous standby. For an asynchronous standby, the replay_lag column approximates the delay before recent transactions became visible to queries. If the standby server has entirely caught up with the sending server and there is no more WAL activity, the most recently measured lag times will continue to be displayed for a short time and then show NULL. Physical replication lag tracking is automatic. Logical replication tracking is possible but is the responsibility of the logical decoding plugin. Tracking is a private module operating within each walsender individually, with values reported to shared memory. Module not used outside of walsender. Design and code is good enough now to commit - kudos to the author. In many ways a difficult topic, with important and subtle behaviour so this shoudl be expected to generate discussion and multiple open items: Test now! Author: Thomas Munro, following designs by Fujii Masao and Simon Riggs Review: Simon Riggs, Ian Barwick and Craig Ringer

principle

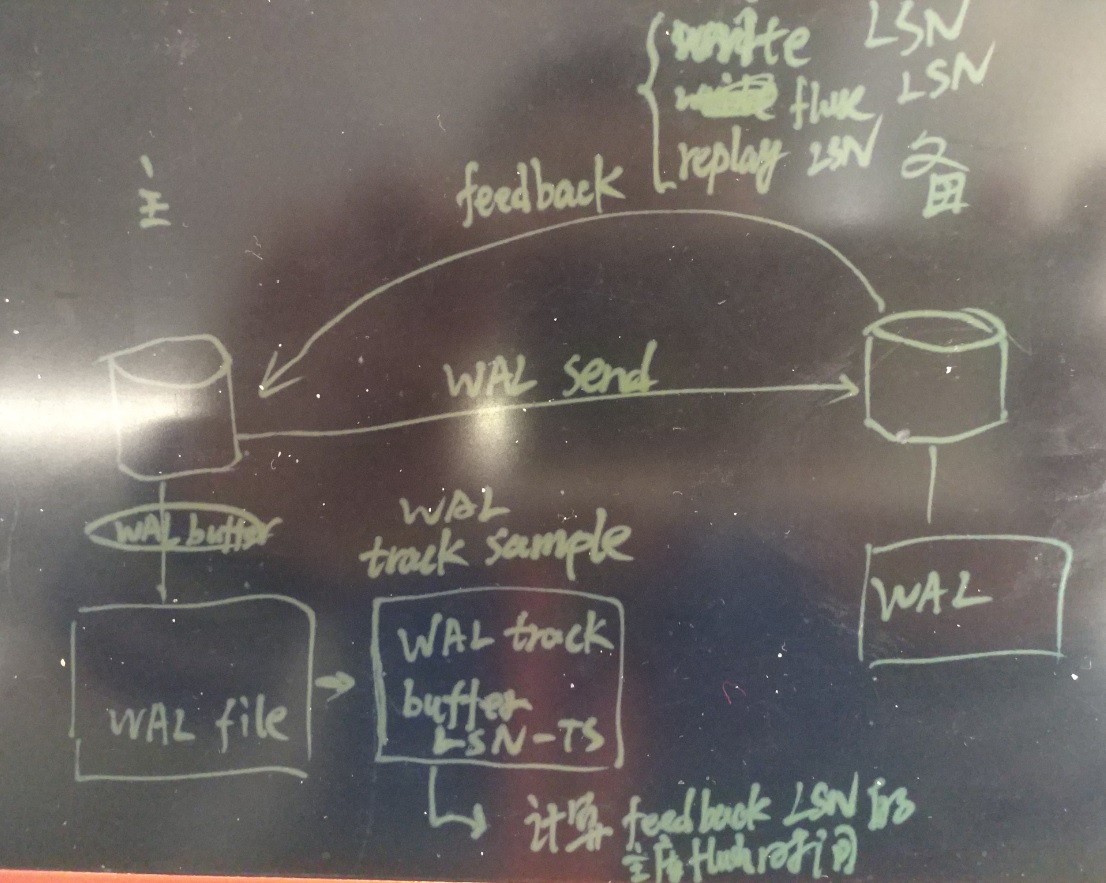

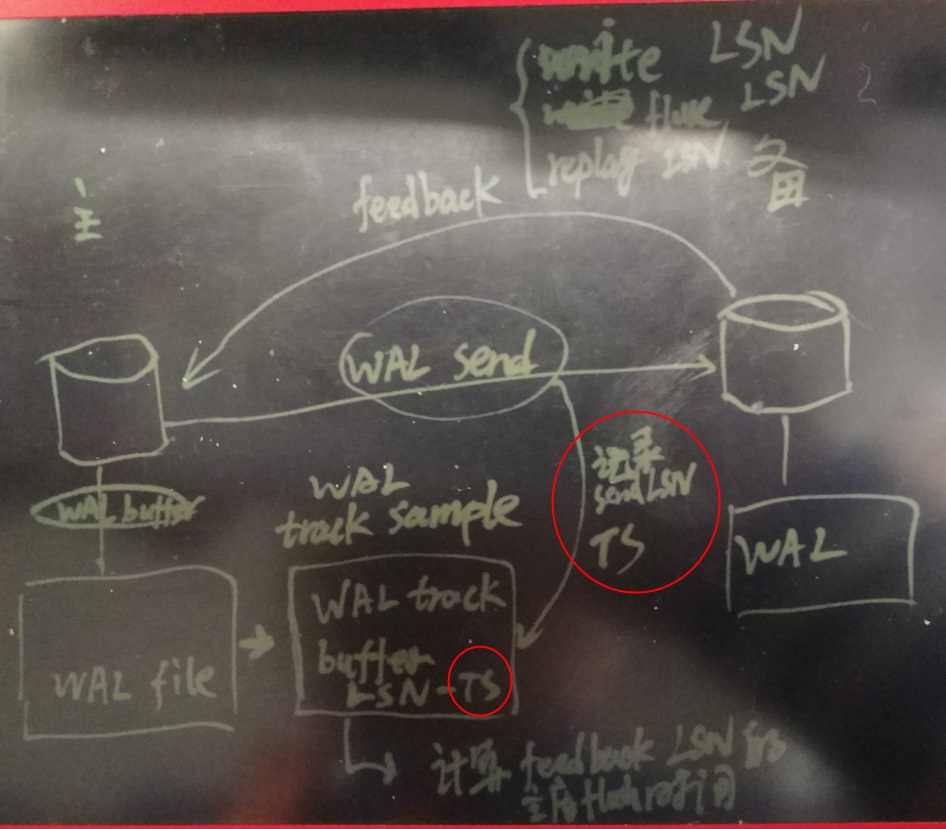

1. The main library opens up a buffer, at the same time sampling and recording the LSN location and corresponding time of the main library flush wal record.

+/* A sample associating a log position with the time it was written. */ +typedef struct +{ + XLogRecPtr lsn; + TimestampTz time; +} WalTimeSample; + +/* The size of our buffer of time samples. */ wal flush lsn And corresponding time sampling +#define LAG_TRACKER_BUFFER_SIZE 8192 + +/* A mechanism for tracking replication lag. */ +static struct +{ + XLogRecPtr last_lsn; + WalTimeSample buffer[LAG_TRACKER_BUFFER_SIZE]; + int write_head; + int read_heads[NUM_SYNC_REP_WAIT_MODE]; -- Corresponding reserve library write, flush, replay Three modes. + WalTimeSample last_read[NUM_SYNC_REP_WAIT_MODE]; -- +} LagTracker; /* SyncRepWaitMode */ #define SYNC_REP_NO_WAIT (-1) #define SYNC_REP_WAIT_WRITE 0 #define SYNC_REP_WAIT_FLUSH 1 #define SYNC_REP_WAIT_APPLY 2 #define NUM_SYNC_REP_WAIT_MODE 3

2. In fact, the current record is not FLUSH WAL record LSN location and corresponding time, but wal sender LSN time, so the current code, we should understand the delay from sending LSN to the standby write, flush, replace three stages of delay.

Instead of delays from main library FLUSH LSN to standby write, flusn, replace.

This is more accurate when the transmission delay of the main and standby WAL is not large. When the transmission delay of the main and standby WAL is large, this is not allowed.

The code is as follows.

/* + * Record the current system time as an approximation of the time at which + * this WAL position was written for the purposes of lag tracking. + * + * In theory we could make XLogFlush() record a time in shmem whenever WAL + * is flushed and we could get that time as well as the LSN when we call + * GetFlushRecPtr() above (and likewise for the cascading standby + * equivalent), but rather than putting any new code into the hot WAL path + * it seems good enough to capture the time here. We should reach this + * after XLogFlush() runs WalSndWakeupProcessRequests(), and although that + * may take some time, we read the WAL flush pointer and take the time + * very close to together here so that we'll get a later position if it + * is still moving. + * + * Because LagTrackerWriter ignores samples when the LSN hasn't advanced, + * this gives us a cheap approximation for the WAL flush time for this + * LSN. + * + * Note that the LSN is not necessarily the LSN for the data contained in + * the present message; it's the end of the the WAL, which might be + * further ahead. All the lag tracking machinery cares about is finding + * out when that arbitrary LSN is eventually reported as written, flushed + * and applied, so that it can measure the elapsed time. + */ + LagTrackerWrite(SendRqstPtr, GetCurrentTimestamp()); +/* + * Record the end of the WAL and the time it was flushed locally, so that + * LagTrackerRead can compute the elapsed time (lag) when this WAL position is + * eventually reported to have been written, flushed and applied by the + * standby in a reply message. + * Exported to allow logical decoding plugins to call this when they choose. + */ +void +LagTrackerWrite(XLogRecPtr lsn, TimestampTz local_flush_time) +{ + bool buffer_full; + int new_write_head; + int i; + + if (!am_walsender) + return; + + /* + * If the lsn hasn't advanced since last time, then do nothing. This way + * we only record a new sample when new WAL has been written. + */ + if (LagTracker.last_lsn == lsn) + return; + LagTracker.last_lsn = lsn; + + /* + * If advancing the write head of the circular buffer would crash into any + * of the read heads, then the buffer is full. In other words, the + * slowest reader (presumably apply) is the one that controls the release + * of space. + */ + new_write_head = (LagTracker.write_head + 1) % LAG_TRACKER_BUFFER_SIZE; + buffer_full = false; + for (i = 0; i < NUM_SYNC_REP_WAIT_MODE; ++i) + { + if (new_write_head == LagTracker.read_heads[i]) + buffer_full = true; + } + + /* + * If the buffer is full, for now we just rewind by one slot and overwrite + * the last sample, as a simple (if somewhat uneven) way to lower the + * sampling rate. There may be better adaptive compaction algorithms. + */ + if (buffer_full) + { + new_write_head = LagTracker.write_head; + if (LagTracker.write_head > 0) + LagTracker.write_head--; + else + LagTracker.write_head = LAG_TRACKER_BUFFER_SIZE - 1; + } + + /* Store a sample at the current write head position. */ + LagTracker.buffer[LagTracker.write_head].lsn = lsn; + LagTracker.buffer[LagTracker.write_head].time = local_flush_time; + LagTracker.write_head = new_write_head; +}

3. Each wal sender maintains a LagTracker.

4. The backup wal receiver process feedbacks the WAL write, flush, replay LSN loci of the backup. After the wal sender process of the main library receives the feedbacks, the backup delay is obtained by LagTrackerRead (LSN + timestamp recorded in BUFFER, and the current time).

The code is as follows

+/* + * Find out how much time has elapsed between the moment WAL position 'lsn' + * (or the highest known earlier LSN) was flushed locally and the time 'now'. + * We have a separate read head for each of the reported LSN locations we + * receive in replies from standby; 'head' controls which read head is + * used. Whenever a read head crosses an LSN which was written into the + * lag buffer with LagTrackerWrite, we can use the associated timestamp to + * find out the time this LSN (or an earlier one) was flushed locally, and + * therefore compute the lag. + * + * Return -1 if no new sample data is available, and otherwise the elapsed + * time in microseconds. + */ +static TimeOffset +LagTrackerRead(int head, XLogRecPtr lsn, TimestampTz now) +{ + TimestampTz time = 0; + + /* Read all unread samples up to this LSN or end of buffer. */ + while (LagTracker.read_heads[head] != LagTracker.write_head && + LagTracker.buffer[LagTracker.read_heads[head]].lsn <= lsn) + { + time = LagTracker.buffer[LagTracker.read_heads[head]].time; + LagTracker.last_read[head] = + LagTracker.buffer[LagTracker.read_heads[head]]; + LagTracker.read_heads[head] = + (LagTracker.read_heads[head] + 1) % LAG_TRACKER_BUFFER_SIZE; + } + + if (time > now) + { + /* If the clock somehow went backwards, treat as not found. */ + return -1; + } + else if (time == 0) + { + /* + * We didn't cross a time. If there is a future sample that we + * haven't reached yet, and we've already reached at least one sample, + * let's interpolate the local flushed time. This is mainly useful for + * reporting a completely stuck apply position as having increasing + * lag, since otherwise we'd have to wait for it to eventually start + * moving again and cross one of our samples before we can show the + * lag increasing. + */ + if (LagTracker.read_heads[head] != LagTracker.write_head && + LagTracker.last_read[head].time != 0) + { + double fraction; + WalTimeSample prev = LagTracker.last_read[head]; + WalTimeSample next = LagTracker.buffer[LagTracker.read_heads[head]]; + + Assert(lsn >= prev.lsn); + Assert(prev.lsn < next.lsn); + + if (prev.time > next.time) + { + /* If the clock somehow went backwards, treat as not found. */ + return -1; + } + + /* See how far we are between the previous and next samples. */ + fraction = + (double) (lsn - prev.lsn) / (double) (next.lsn - prev.lsn); + + /* Scale the local flush time proportionally. */ + time = (TimestampTz) + ((double) prev.time + (next.time - prev.time) * fraction); + } + else + { + /* Couldn't interpolate due to lack of data. */ + return -1; + } + } + + /* Return the elapsed time since local flush time in microseconds. */ + Assert(time != 0); + return now - time; +}

The above applies only to physical replication.

For logical replication, decode plugin is responsible for developing the corresponding code for delayed storage and acquisition.

The drawbacks of current implementation and other design ideas

The current 10.0 approach is to minimize the amount of code changes and achieve an approximate time measure of the backup delay. The disadvantage is that when WAL SENDER is disconnected or there is a large delay in transmission, the delay deviation of backup time calculated by this method will be larger.

Other ways of thinking, such as recording some WAL interpolation in WAL (e.g. recording LSN location and time through wal custom interface), because COMMIT\ROLLBACK WAL RECORD and WAL interpolation record time, backup can get LSN and time directly during parsing, so it can directly feedback the time of write, flush and replace. In this way, the main library does not need to open WAL to track LSN time. The time obtained by this method is relatively accurate, but it will increase the amount of log writing and may need to modify the stream replication protocol.

For this discussion of patch, see Mail Group, URL at the end of this article.

PostgreSQL community style is very rigorous, a patch may be discussed in the mail group for several months or even years, according to your opinion repeated amendments, patch merged into master is very mature, so the stability of PostgreSQL is well known.