Label

PostgreSQL, HTAP, OLTP, OLAP, Scenario and Performance Testing

background

PostgreSQL is a database with a long history, which can be traced back to 1973. It was first awarded by the 2014 Computer Turing Prize winner and the originator of relational database. Michael_Stonebraker PostgreSQL has similar functions, performance, architecture and stability as Oracle.

The PostgreSQL community has many contributors, from all walks of life around the world. Over the years, PostgreSQL releases a large version every year, known for its enduring vitality and stability.

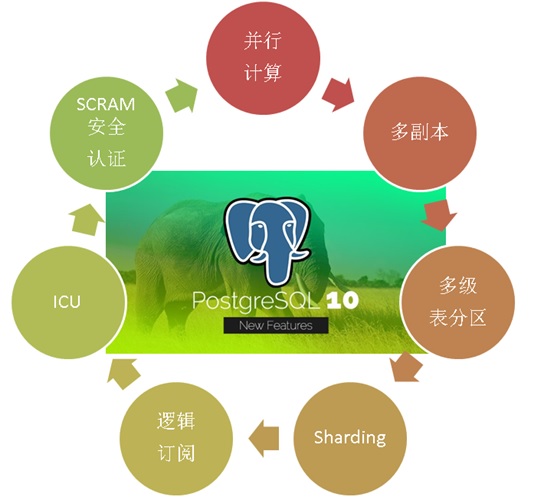

In October 2017, PostgreSQL released version 10, which carries many amazing features. The goal is to meet the requirements of mixed HTAP scenarios of OLAP and OLTP:

PostgreSQL 10 features, the most popular HTAP database for developers

1. Multi-core Parallel Enhancement

2. fdw aggregation push-down

3. Logical Subscription

4, zoning

5. Multi-copies at the financial level

6. json, jsonb full-text retrieval

7. There are also some features of plug-in form, such as vector computing, JIT, SQL graph computing, SQL stream computing, distributed parallel computing, time series processing, gene sequencing, chemical analysis, image analysis, etc.

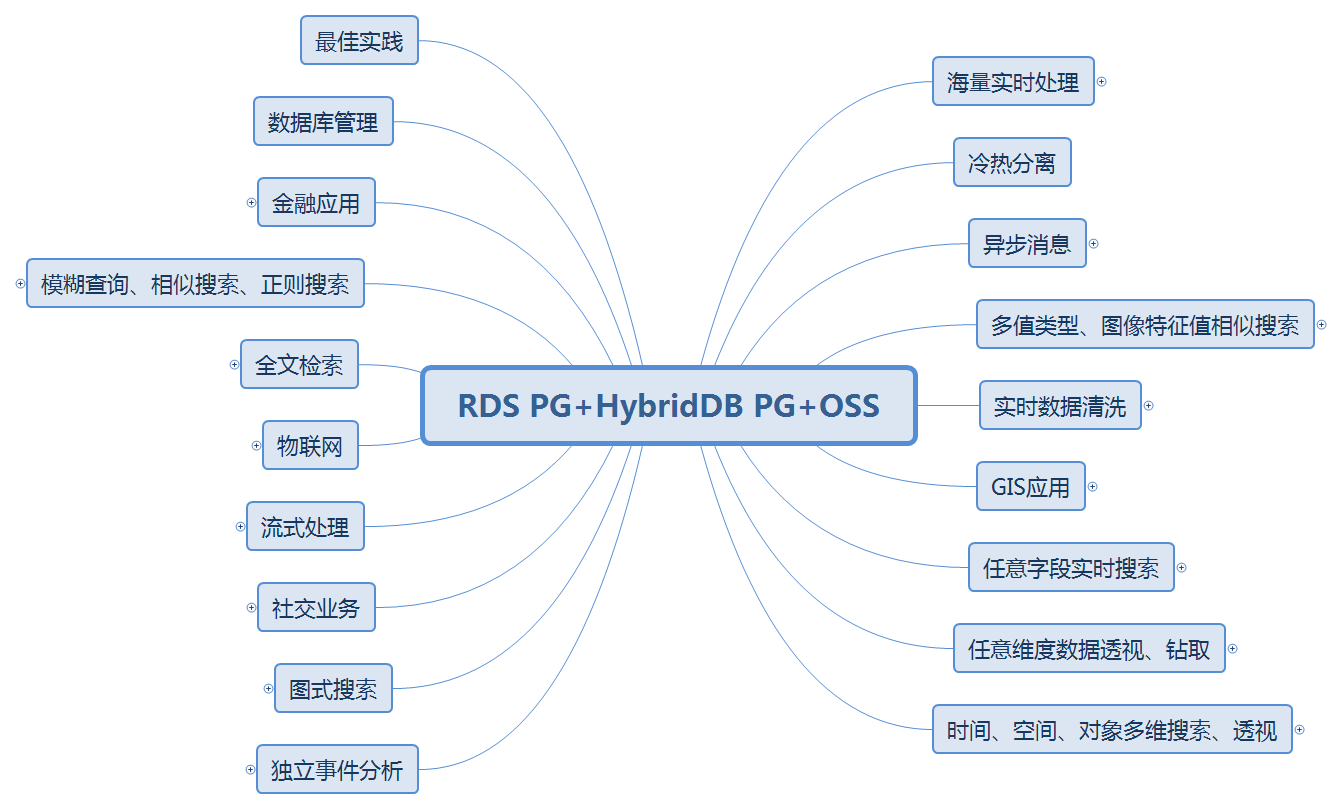

PostgreSQL can be seen in various application scenarios:

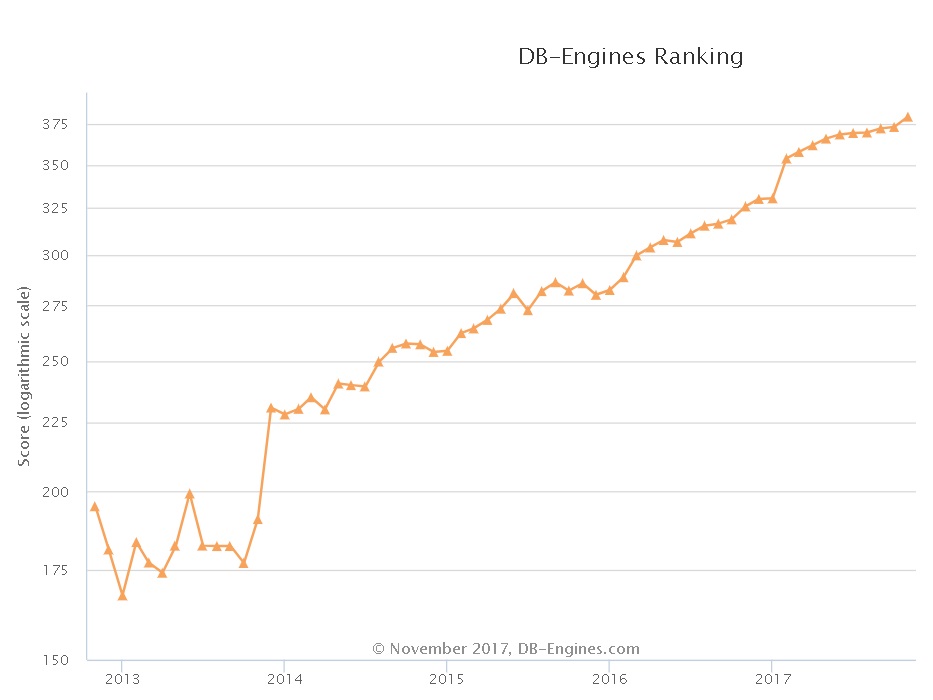

PostgreSQL has developed very rapidly in recent years. From the database scoring trend of dbranking, a well-known database evaluation website, we can see the upward trend of PostgreSQL:

From the annual community meetings held in PostgreSQL China, we can see the same trend. More and more companies are participating, more and more companies are sharing, and more and more subjects are shared. They span traditional enterprises, Internet, medical, financial, state-owned enterprises, logistics, e-commerce, social networking, vehicle networking, sharing XX, cloud, game, public transport, aviation, railway, military, training. Training, consulting services, etc.

The next series of articles will introduce various application scenarios and corresponding performance indicators of PostgreSQL.

Environmental Science

Reference to Environmental Deployment Method:

Aliyun ECS: 56 core, 224G, 1.5TB*2 SSD cloud disk.

Operating System: CentOS 7.4 x64

Database version: PostgreSQL 10

PS: The CPU and IO performance of ECS is discounted by physical opportunity, and can be estimated by one-fold performance decline. Running physical mainframe can be estimated by multiplying the performance tested here by 2.

Scenario-String Search-Front-Back Fuzzy Query (OLTP)

1, background

String search is a very common business requirement, which includes:

1. Prefix + Fuzzy Query. (b-tree index can be used)

2. Suffix + Fuzzy Query. (b-tree index can be used)

3. Fuzzy query before and after. (You can use pg_trgm and gin indexes)

https://www.postgresql.org/docs/10/static/pgtrgm.html

4. Full-text retrieval. (Full-text search types and gin or rum indexes can be used)

5. Regular query. (You can use pg_trgm and gin indexes)

6. Similar query. (You can use pg_trgm and gin indexes)

Generally speaking, the database does not have the ability to speed up after 3 years, but PostgreSQL is very powerful, it can perfectly support the acceleration of such queries. (Refers to queries and writes that do not conflict, and the index BUILD is real-time.)

Users do not need to synchronize data to search engines, and then query, and search engines can only do full-text retrieval, and you do not do regular, similar, ambiguous these requirements.

PostgreSQL can greatly simplify the user's architecture, cost of development, and ensure the absolute real-time data query.

2, design

There are 100 million texts, each of which is a random string of 128 Chinese characters. Fuzzy queries are made according to random provided strings.

PostgreSQL Fuzzy Query Best Practices

3. Preparing test sheets

create extension pg_trgm; create table t_likeall ( id int, info text ); create index idx_t_likeall_1 on t_likeall using gin (info gin_trgm_ops); -- select * from t_likeall where info like '%abcd%'; -- Find Arbitrary Location Containment abcd A string of.

4. Preparing test functions (optional)

-- Generating Random Chinese Character Strings create or replace function gen_hanzi(int) returns text as $$ declare res text; begin if $1 >=1 then select string_agg(chr(19968+(random()*20901)::int), '') into res from generate_series(1,$1); return res; end if; return null; end; $$ language plpgsql strict;

-- Using random strings like Query (for pressure measurement) create or replace function get_t_likeall_test(int) returns setof t_likeall as $$ declare str text; begin select info into str from t_likeall_test where id=$1; return query execute 'select * from t_likeall where info like ''%'||str||'%'' limit 1'; -- return query execute 'select * from t_likeall where info like ''%'||gen_hanzi(6)||'%'' limit 1'; -- select * from t_likeall where info like '%Random string%' limit 1; end; $$ language plpgsql strict;

5. Preparing test data

insert into t_likeall select id, gen_hanzi(128) from generate_series(1,100000000) t(id);

Generate 2.1 million valid test range strings and possibly invalid strings.

create table t_likeall_test (id serial primary key, info text); -- Intercept 6 Chinese characters starting at any position, 200 Ten thousand pieces insert into t_likeall_test (info) select substring(info, (random()*64)::int, 6) from t_likeall limit 2000000; -- Generating 6 Random Chinese Characters, 10 Ten thousand pieces insert into t_likeall_test (info) select gen_hanzi(6) from generate_series(2000001,2100000);

6. Preparing test scripts

vi test.sql \set id random(1,2100000) select * from get_t_likeall_test(:id);

7, test

The response time is less than 1 millisecond. (With bound variables and CACHE hits, the response time is lower. )

postgres=# Select * from t_like all where info like'% Lian tomb is among%'; id | info ----+--------------- 1 | Inlaid musk musk cockroaches are charming. (1 row) Time: 0.783 ms

Pressure measurement

CONNECTS=56 TIMES=300 export PGHOST=$PGDATA export PGPORT=1999 export PGUSER=postgres export PGPASSWORD=postgres export PGDATABASE=postgres pgbench -M prepared -n -r -f ./test.sql -P 5 -c $CONNECTS -j $CONNECTS -T $TIMES

8. Test results

transaction type: ./test.sql scaling factor: 1 query mode: prepared number of clients: 56 number of threads: 56 duration: 300 s number of transactions actually processed: 24437916 latency average = 0.686 ms latency stddev = 1.359 ms tps = 81456.177966 (including connections establishing) tps = 81569.429540 (excluding connections establishing) script statistics: - statement latencies in milliseconds: 0.002 \set id random(1,2100000) 0.702 select * from get_t_likeall_test(:id);

TPS: 81569

Average response time: 0.686 milliseconds

Reference resources

PostgreSQL, Greenplum Application Case Book "Tathagata Palm" - Catalogue

"Database Selection - Elephant Eighteen Touches - To Architects and Developers"

PostgreSQL uses pgbench to test sysbench related case s