Label

PostgreSQL, UDF, Dynamic Format, Format, JOIN, OSS External Table

background

Some businesses need to categorize data into dynamic outputs, such as some common log services, where all users'logs are recorded in a unified format, but each end user has different fields of interest, and even different needs for data conversion for each user.

For example, this business:

I. demand

1. Different formats can be output according to the definition of ToB users.

2. Each ToB user writes to one or more files.

3. One file cannot contain two users'content.

For other needs, see:

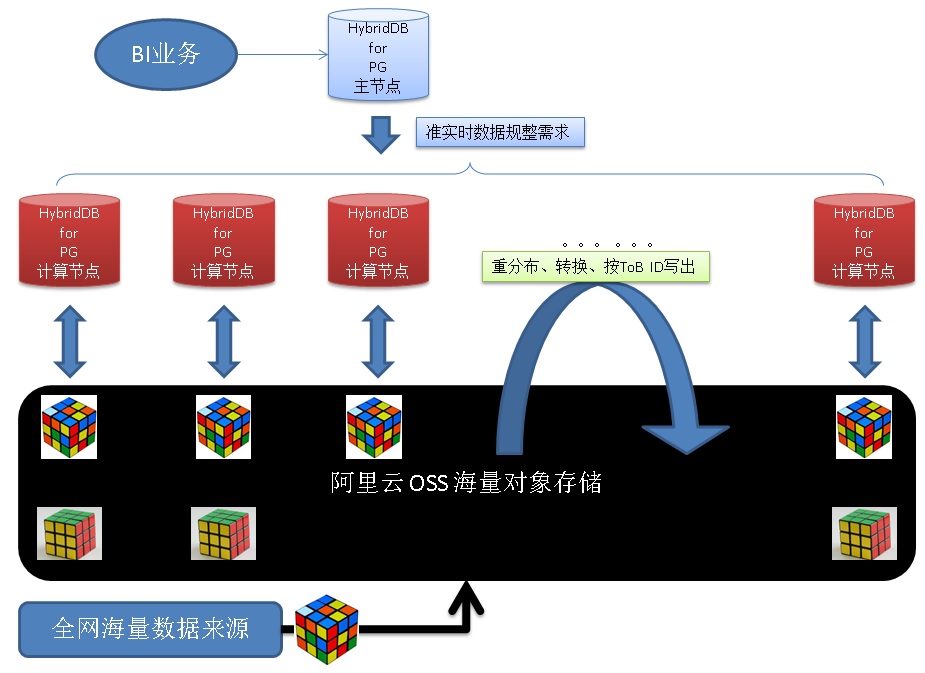

II. Architectural Design

1. Use OSS to store massive public logs loaded in real time.

2. The OSS external table interface of HybridDB for PostgreSQL or RDS PostgreSQL is used to read OSS files directly and in parallel.

3. By the window function of HDB PG, press ToB ID fully.

4. Through UDF, the format of public log is transformed into corresponding format according to the UDF corresponding to ToB ID.

5. Write the converted data into OSS. Automatically switch by ToB ID, absolutely guaranteeing each ToB user to write to one or more files. One file does not show the contents of two users.

The above functions are unique to Aliyun HybridDB for PostgreSQL or RDS PostgreSQL.

DEMO and Performance

Here we introduce how to transform data dynamically. For more information, see the case.

1. Create a common log table

create table t1 (tid int, c1 text, c2 text, c3 int, c4 timestamp, c5 numeric);

2. Write a batch of test data

insert into t1 select random()*100, md5(random()::text), 'test', random()*10000, clock_timestamp(), random() from generate_series(1,1000000);

3. Create UDF meta-information table and store the UDF name corresponding to each ToB ID.

create table t2(tid int, udf name);

4. To create UDF, you need to customize the ToB ID format and create the corresponding UDF.

create or replace function f1(t1) returns text as $$ select format('tid: %L , c2: %L , c4: %L', $1.tid, $1.c2, $1.c4); $$ language sql strict; create or replace function f2(t1) returns text as $$ declare res text := format('%L , %L , %L, %L', $1.tid, upper($1.c2), $1.c4, $1.c3); begin return res; end; $$ language plpgsql strict;

5. Create a dynamic UDF and call the corresponding UDF dynamically according to the input.

create or replace function ff(t1, name) returns text as $$ declare sql text := format('select %I(%L)', $2, $1); res text; begin execute sql into res; return res; end; $$ language plpgsql strict;

6. Write UDF mapping, such as ID of 1-100, use F1 for conversion, ID of 0 uses F2 for conversion.

insert into t2 select generate_series(1,100), 'f1'; insert into t2 values (0, 'f2');

7. Dynamic conversion queries are as follows:

postgres=# select ff(t1, t2.udf) from t1 join t2 on (t1.tid=t2.tid) where t1.tid=0 limit 10; ff ----------------------------------------------------- '0' , 'TEST' , '2017-08-03 18:55:00.48512', '9478' '0' , 'TEST' , '2017-08-03 18:55:00.486426', '9352' '0' , 'TEST' , '2017-08-03 18:55:00.487297', '4026' '0' , 'TEST' , '2017-08-03 18:55:00.488419', '736' '0' , 'TEST' , '2017-08-03 18:55:00.491082', '4334' '0' , 'TEST' , '2017-08-03 18:55:00.491097', '2394' '0' , 'TEST' , '2017-08-03 18:55:00.491839', '2076' '0' , 'TEST' , '2017-08-03 18:55:00.492648', '9935' '0' , 'TEST' , '2017-08-03 18:55:00.493505', '383' '0' , 'TEST' , '2017-08-03 18:55:00.493874', '8546' (10 rows) postgres=# select ff(t1, t2.udf) from t1 join t2 on (t1.tid=t2.tid) where t1.tid=1 limit 10; ff ---------------------------------------------------------- tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.484799' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.485209' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.485276' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.485744' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.48582' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.485967' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.486067' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.486281' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.486756' tid: '1' , c2: 'TEST' , c4: '2017-08-03 18:55:00.487714' (10 rows)

8. Compulsory full development in HDB PG:

postgres=# select ff(t1, t2.udf) as ff, row_number() over (partition by t1.tid order by c4) from t1 join t2 on (t1.tid=t2.tid) where t1.tid=0 limit 10; ff | row_number -----------------------------------------------------+------------ '0' , 'TEST' , '2017-08-03 18:55:00.48512', '9478' | 1 '0' , 'TEST' , '2017-08-03 18:55:00.486426', '9352' | 2 '0' , 'TEST' , '2017-08-03 18:55:00.487297', '4026' | 3 '0' , 'TEST' , '2017-08-03 18:55:00.488419', '736' | 4 '0' , 'TEST' , '2017-08-03 18:55:00.491082', '4334' | 5 '0' , 'TEST' , '2017-08-03 18:55:00.491097', '2394' | 6 '0' , 'TEST' , '2017-08-03 18:55:00.491839', '2076' | 7 '0' , 'TEST' , '2017-08-03 18:55:00.492648', '9935' | 8 '0' , 'TEST' , '2017-08-03 18:55:00.493505', '383' | 9 '0' , 'TEST' , '2017-08-03 18:55:00.493874', '8546' | 10 (10 rows)

IV. Technical Points

Here I will only talk about the technical points involved in this article.

1,UDF

PostgreSQL supports a variety of UDF languages (such as C, plpgsql, sql, plpython, pljava, plv8,...), and users define formats to be converted through UDF.

2. Dynamic Call

Through dynamic invocation, users can dynamically invoke the corresponding UDF to generate different formats in a request.

V. Cloud end products

Aliyun HybridDB for PostgreSQL

6. Similar scenarios and cases

Seven, summary

In some common log services, all users'logs are recorded in a uniform format, but each end user has different fields of interest, and even different needs for data conversion for each user.

PostgreSQL supports a variety of UDF languages (such as C, plpgsql, sql, plpython, pljava, plv8,...), and users define formats to be converted through UDF.

Through dynamic invocation, users can dynamically invoke the corresponding UDF to generate different formats in a request.