Label

PostgreSQL, HTAP, OLTP, OLAP, Scenario and Performance Testing

background

PostgreSQL is a database with a long history, which can be traced back to 1973. It was first awarded by the 2014 Computer Turing Prize winner and the originator of relational database. Michael_Stonebraker PostgreSQL has similar functions, performance, architecture and stability as Oracle.

The PostgreSQL community has many contributors, from all walks of life around the world. Over the years, PostgreSQL releases a large version every year, known for its enduring vitality and stability.

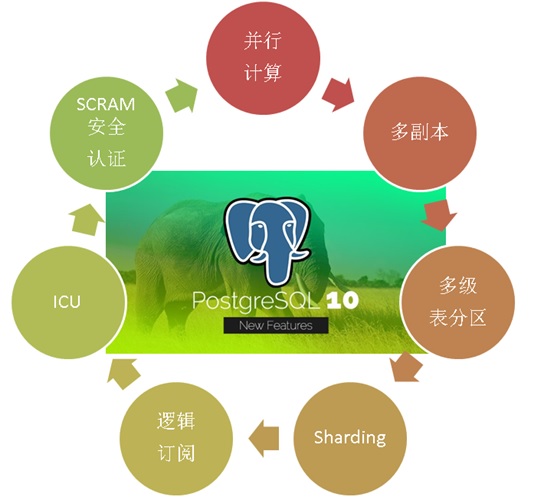

In October 2017, PostgreSQL released version 10, which carries many amazing features. The goal is to meet the requirements of mixed HTAP scenarios of OLAP and OLTP:

PostgreSQL 10 features, the most popular HTAP database for developers

1. Multi-core Parallel Enhancement

2. fdw aggregation push-down

3. Logical Subscription

4, zoning

5. Multi-copies at the financial level

6. json, jsonb full-text retrieval

7. There are also some features of plug-in form, such as vector computing, JIT, SQL graph computing, SQL stream computing, distributed parallel computing, time series processing, gene sequencing, chemical analysis, image analysis, etc.

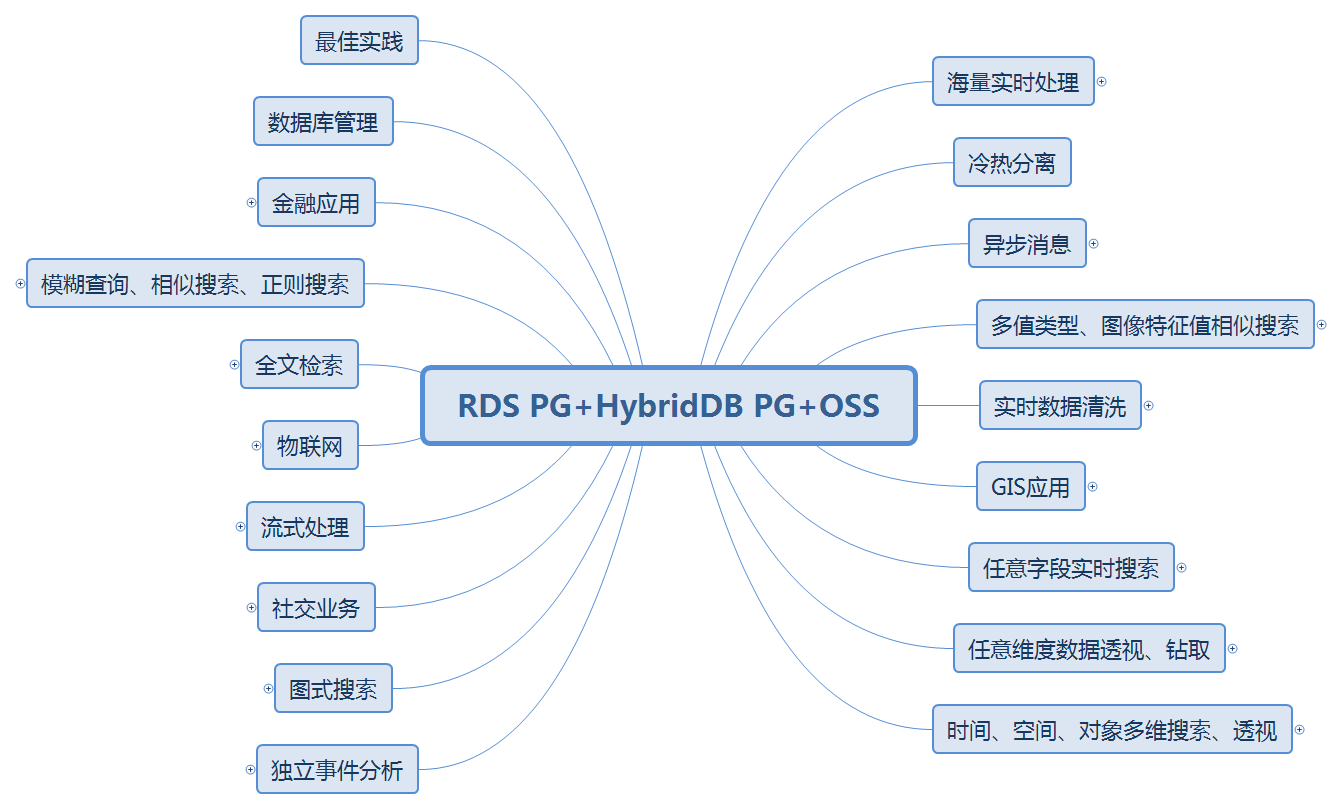

PostgreSQL can be seen in various application scenarios:

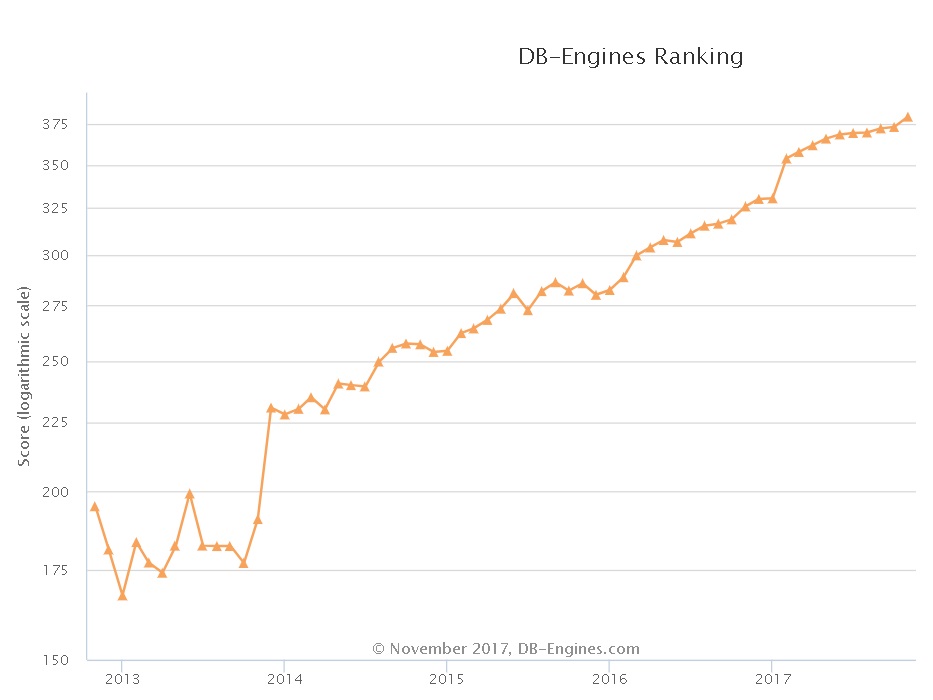

PostgreSQL has developed very rapidly in recent years. From the database scoring trend of dbranking, a well-known database evaluation website, we can see the upward trend of PostgreSQL:

From the annual community meetings held in PostgreSQL China, we can see the same trend. More and more companies are participating, more and more companies are sharing, and more and more subjects are shared. They span traditional enterprises, Internet, medical, financial, state-owned enterprises, logistics, e-commerce, social networking, vehicle networking, sharing XX, cloud, game, public transport, aviation, railway, military, training. Training, consulting services, etc.

The next series of articles will introduce various application scenarios and corresponding performance indicators of PostgreSQL.

Environmental Science

Reference to Environmental Deployment Method:

Aliyun ECS: 56 core, 224G, 1.5TB*2 SSD cloud disk.

Operating System: CentOS 7.4 x64

Database version: PostgreSQL 10

PS: The CPU and IO performance of ECS is discounted by physical opportunity, and can be estimated by one-fold performance decline. Running physical mainframe can be estimated by multiplying the performance tested here by 2.

Scenario-secondkill-high concurrent single point update (OLTP)

1, background

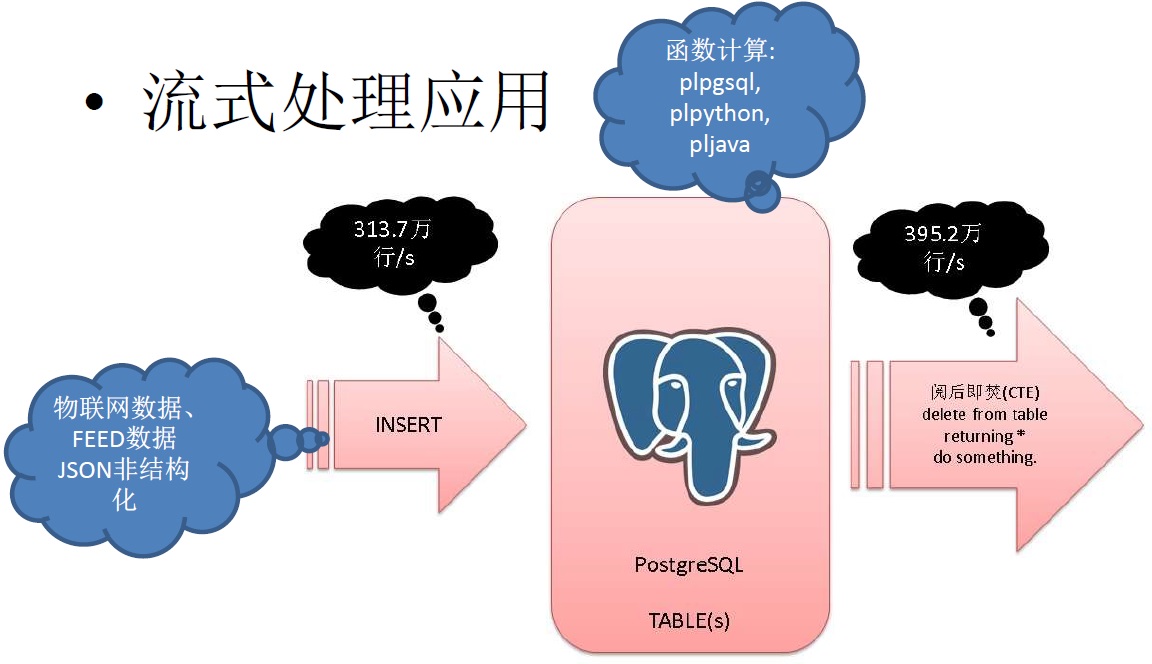

High throughput data writing and consumption are usually MQ's strengths and functional points, but MQ has no data storage capacity, nor computing capacity.

PostgreSQL has the ability of storage and computing, and PG also provides high throughput and reliability.

In environments where high throughput computing is required, PG is a good choice.

If the business needs FIFO mode, it can be achieved by adding a time index, which can achieve such efficiency. Writing and consumption are more than 3 million lines/s:

See:

If the business does not require a strong FIFO, and processing throughput is strong enough, in fact, PG can not need index, because it is a heap table, without index, write and consumption throughput can be greater.

This paper tests bare writing and consumption throughput (consumption, non-calculation) without index.

The next paper combines the ability of function calculation and JSON under high throughput of pressure measurement.

2, design

1. Heap table, multi-table, high throughput write

2. Heap table, multi-table and large throughput consumption

At the same time, pressure measurement writing and consumption.

3. Preparing test sheets

create table t_sensor (sid int, info text, crt_time timestamp) ;

Use 2048 sub-tables.

do language plpgsql $$ declare begin for i in 0..2047 loop execute 'create table t_sensor'||i||'(like t_sensor including all) inherits(t_sensor) '||case when mod(i,2)=0 then ' ' else ' tablespace tbs1' end; end loop; end; $$;

4. Preparing test functions (optional)

1. Function of Batch Generation of Sensor Test Data

CREATE OR REPLACE FUNCTION public.ins_batch(integer,integer) RETURNS void LANGUAGE plpgsql STRICT AS $function$ declare suffix int := mod($1, 2048); begin execute 'insert into t_sensor'||suffix||' select '||$1||', 0.1, now() from generate_series(1,'||$2||')'; end; $function$;

2. The function of mass consumption sensor data, according to the time, from the earliest consumption.

Processing logic can also be put in it, such as early warning logic (using PostgreSQL asynchronous message, CTE grammar).

CREATE OR REPLACE FUNCTION public.consume_batch(integer,integer) RETURNS void LANGUAGE plpgsql STRICT AS $function$ declare suffix int := mod($1, 2048); begin -- Examples of streaming business logic(Use CTE grammar): -- with t1 as (delete from t_sensor$suffix where ctid = any(array(select ctid from t_sensor$suffix limit 1000)) returning *) -- select pg_notify('channel_name', 'reason:xxx::::'||row_to_json(t1)) from t1 where ......; -- -- If there are multiple criteria, you can save them first. TMP TABLE,Until then TMP TABLE Handle. -- Use ordinary TMP table Or use memory TMP TABLE. -- [<PostgreSQL Memory table "](../201608/20160818_01.md) -- This example only tests the situation of consumption without processing logic, focusing on consumption speed. execute format('delete from t_sensor%s where ctid = any(array(select ctid from t_sensor%s limit %s))', suffix, suffix, $2); end; $function$;

5. Preparing test data

6. Preparing test scripts

At the same time, pressure measurement writing and consumption.

1. High throughput write test, 1 million sensors, 1000 in each batch.

vi test.sql \set sid random(1,1000000) select ins_batch(:sid, 1000);

Pressure measurement

CONNECTS=28 TIMES=300 export PGHOST=$PGDATA export PGPORT=1999 export PGUSER=postgres export PGPASSWORD=postgres export PGDATABASE=postgres pgbench -M prepared -n -r -f ./test.sql -P 5 -c $CONNECTS -j $CONNECTS -T $TIMES

2. High throughput consumption test, 1 million sensors, 1000 in each batch.

vi test.sql \set sid random(1,1000000) select consume_batch(:sid, 1000);

Pressure measurement

CONNECTS=28 TIMES=300 export PGHOST=$PGDATA export PGPORT=1999 export PGUSER=postgres export PGPASSWORD=postgres export PGDATABASE=postgres pgbench -M prepared -n -r -f ./test1.sql -P 5 -c $CONNECTS -j $CONNECTS -T $TIMES

7, test

1. High throughput write test, 1 million sensors, 1000 in each batch.

transaction type: ./test.sql scaling factor: 1 query mode: prepared number of clients: 28 number of threads: 28 duration: 300 s number of transactions actually processed: 581437 latency average = 14.446 ms latency stddev = 12.231 ms tps = 1937.869058 (including connections establishing) tps = 1937.999398 (excluding connections establishing) script statistics: - statement latencies in milliseconds: 0.002 \set sid random(1,1000000) 14.445 select ins_batch(:sid, 1000);

2. High throughput consumption test, 1 million sensors, 1000 in each batch.

transaction type: ./test1.sql scaling factor: 1 query mode: prepared number of clients: 28 number of threads: 28 duration: 300 s number of transactions actually processed: 1254322 latency average = 6.697 ms latency stddev = 10.925 ms tps = 4180.897450 (including connections establishing) tps = 4181.104213 (excluding connections establishing) script statistics: - statement latencies in milliseconds: 0.002 \set sid random(1,1000000) 6.696 select consume_batch(:sid, 1000);

I. TPS

At the same time, the throughput of pressure measurement writing and consumption is as follows:

1. Data writing speed: 193,000 lines/s.

2. Speed of data consumption: 4.18 million lines/s.

Average response time

At the same time, the throughput of pressure measurement writing and consumption is as follows:

1. Data writing speed: 14.4 milliseconds

2. Speed of data consumption: 6.7 milliseconds

Reference resources

PostgreSQL, Greenplum Application Case Book "Tathagata Palm" - Catalogue

"Database Selection - Elephant Eighteen Touches - To Architects and Developers"

PostgreSQL uses pgbench to test sysbench related case s