This article continues from the previous one, "Node.js+jade crawls all blog articles to generate static html files". That article implemented both the collection and the static file generation in a single pass. In a real collection project, the data should first be stored in a database, and static files should then be generated selectively from what was stored.

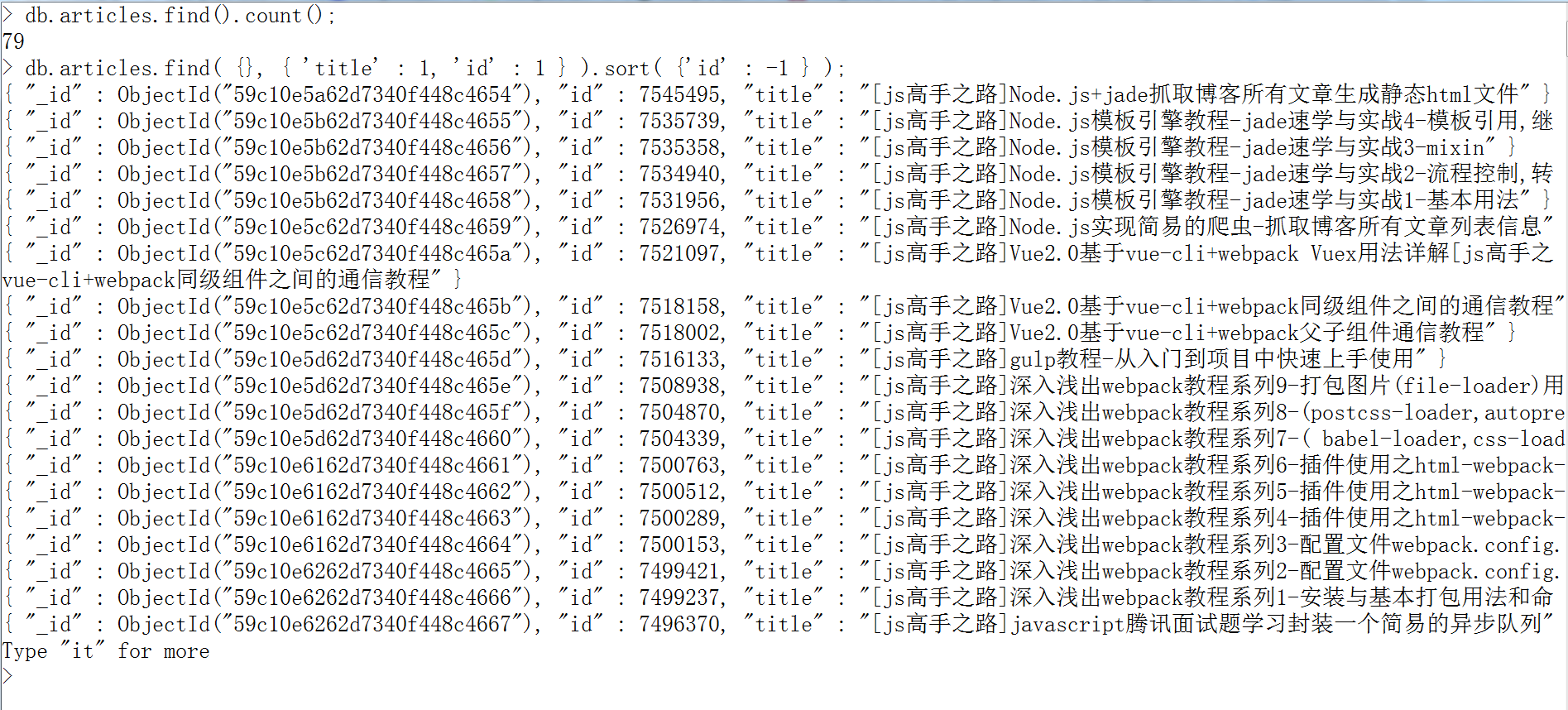

The database I chose is MongoDB. Why this one? MongoDB is organized around collections, and its documents are essentially JSON, which fits well with the DOM module cheerio: the plain objects built from cheerio's filtered data can be inserted into MongoDB directly, with no conversion step, which is very convenient. Its fit with Node.js goes without saying. Just as important, it performs well. This article does not cover the basic usage of MongoDB; I will write a separate article that introduces it from scratch.

At this stage, I have split the crawler into two modules: collection and storage (crawler.js) and static file generation (makeHtml.js).

crawler.js:

    var http = require('http');
    var cheerio = require('cheerio');
    var mongoose = require('mongoose');
    mongoose.Promise = global.Promise;
    var DB_URL = 'mongodb://localhost:27017/crawler';

    var aList = []; // List information of blog articles (one array per list page)
    var aUrl = [];  // URLs of all blog articles

    var db = mongoose.createConnection(DB_URL);
    db.on('connected', function () {
        console.log('db connected success');
    });
    db.on('error', function (err) {
        console.log(err);
    });

    var Schema = mongoose.Schema;
    var arcSchema = new Schema({
        id: Number,     // Article id
        title: String,  // Article title
        url: String,    // Article link
        body: String,   // Article content
        entry: String,  // Abstract
        listTime: Date  // Release time
    });
    var Article = db.model('Article', arcSchema);

    // Insert one article document into the collection
    function saveArticle(arcInfo) {
        var arcModel = new Article(arcInfo);
        arcModel.save(function (err, result) {
            if (err) {
                console.log(err);
            } else {
                console.log(`${arcInfo['title']} Insert success`);
            }
        });
    }

    // Extract id, title, link and body from an article page
    function filterArticle(html) {
        var $ = cheerio.load(html);
        var title = $("#cb_post_title_url").text();
        var href = $("#cb_post_title_url").attr("href");
        var match = href ? href.match(/\/(\d+)\.html/) : null;
        if (!match) return null; // Not a recognizable article page
        return {
            id: match[1],
            title: title,
            url: href,
            body: $("#cnblogs_post_body").html()
        };
    }

    // Fetch one article, save it, then move on to the next URL in the queue
    function crawlerArc(url) {
        var html = '';
        http.get(url, function (res) {
            res.setEncoding('utf8'); // Avoid splitting multi-byte characters across chunks
            res.on('data', function (chunk) {
                html += chunk;
            });
            res.on('end', function () {
                var arcDetail = filterArticle(html);
                if (arcDetail) saveArticle(arcDetail);
                if (aUrl.length) {
                    setTimeout(function () {
                        crawlerArc(aUrl.shift());
                    }, 100);
                } else {
                    console.log('Collection task completed');
                }
            });
        }).on('error', function (err) {
            console.log(err);
        });
    }

    // Extract title, link, abstract and release time of every post on a list page
    function filterHtml(html) {
        var $ = cheerio.load(html);
        var arcList = [];
        var aPost = $("#content").find(".post-list-item");
        aPost.each(function () {
            var ele = $(this);
            var title = ele.find("h2 a").text();
            var url = ele.find("h2 a").attr("href");
            ele.find(".c_b_p_desc a").remove();
            var entry = ele.find(".c_b_p_desc").text();
            ele.find("small a").remove();
            var re = /\d{4}-\d{2}-\d{2}\s*\d{2}[:]\d{2}/;
            var timeMatch = ele.find("small").text().match(re);

            arcList.push({
                title: title,
                url: url,
                entry: entry,
                listTime: timeMatch ? timeMatch[0] : null
            });
        });
        return arcList;
    }

    // Follow the pager; once there are no further pages, start collecting articles
    function nextPage(html) {
        var $ = cheerio.load(html);
        var nextUrl = $("#pager a:last-child").attr('href');
        if (!nextUrl) return getArcUrl(aList);
        // Compare page numbers as numbers: as strings, '9' < '10' is false
        var curNum = parseInt($("#pager .current").text(), 10) || 1;
        var nextNum = parseInt(nextUrl.substring(nextUrl.indexOf('=') + 1), 10);
        if (curNum < nextNum) {
            crawler(nextUrl);
        } else {
            getArcUrl(aList);
        }
    }

    // Fetch one list page
    function crawler(url) {
        http.get(url, function (res) {
            var html = '';
            res.setEncoding('utf8');
            res.on('data', function (chunk) {
                html += chunk;
            });
            res.on('end', function () {
                aList.push(filterHtml(html));
                nextPage(html);
            });
        }).on('error', function (err) {
            console.log(err);
        });
    }

    // Flatten the per-page lists into a queue of article URLs and start crawling
    function getArcUrl(arcList) {
        arcList.forEach(function (page) {
            page.forEach(function (arc) {
                aUrl.push(arc.url);
            });
        });
        if (aUrl.length) crawlerArc(aUrl.shift());
    }

    var url = 'http://www.cnblogs.com/ghostwu/';
    crawler(url);
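
Two parsing steps in crawler.js are easy to get wrong: pulling the numeric id out of an article link, and deciding whether the pager link points at a later page. Values read from the DOM are strings, so page numbers need to be converted before they are compared. The sketch below isolates both steps as pure functions that can be checked without a network or a database. The names `extractArticleId` and `isLaterPage` are hypothetical helpers, not part of the original script, and the sample URLs (including the `?page=N` shape) are illustrative assumptions.

```javascript
// Hypothetical helper: returns the numeric id from a link such as
// ".../p/1234567.html", or null when the link does not match.
function extractArticleId(href) {
  var match = /\/(\d+)\.html/.exec(href || '');
  return match ? parseInt(match[1], 10) : null;
}

// Hypothetical helper: true when the pager link points at a later page.
// Page numbers are parsed first, because the string comparison
// '9' < '10' is false (strings compare character by character).
function isLaterPage(curPageText, nextUrl) {
  var cur = parseInt(curPageText, 10) || 1; // empty text is treated as page 1
  var next = parseInt(nextUrl.substring(nextUrl.indexOf('=') + 1), 10);
  return !isNaN(next) && cur < next;
}

// Illustrative inputs only; the id and page numbers are made up.
console.log(extractArticleId('http://www.cnblogs.com/ghostwu/p/1234567.html')); // 1234567
console.log(isLaterPage('9', 'http://www.cnblogs.com/ghostwu/default.html?page=10')); // true
```

Returning `null` instead of throwing lets the caller skip a page it does not recognize and keep the queue moving.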

The core logic has not changed much. The main additions are the database connection, the database and its collection (a collection is the equivalent of a table in a relational database), and the Schema (the equivalent of a table structure in a relational database).
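
The Schema declares `entry` and `listTime`, which are read from the list pages, alongside `id` and `body`, which are read from each article page. One possible way to save a record that fills every Schema field is to look up the list entry by URL and merge it with the article detail before saving. This is a minimal sketch under stated assumptions, not what the script currently does: `buildListIndex` and `mergeArticle` are hypothetical names, the sample data is made up, and it assumes the link on the list page is identical to the link on the article page.

```javascript
// Hypothetical helper: index every list entry by its URL.
// aList has the same shape as in crawler.js: an array of pages,
// each page being an array of { title, url, entry, listTime }.
function buildListIndex(aList) {
  var index = Object.create(null);
  aList.forEach(function (page) {
    page.forEach(function (item) {
      index[item.url] = item;
    });
  });
  return index;
}

// Hypothetical helper: combine article-page data with list-page data
// into one object whose keys match the Schema fields.
function mergeArticle(index, detail) {
  var listInfo = index[detail.url] || {};
  return {
    id: detail.id,
    title: detail.title,
    url: detail.url,
    body: detail.body,
    entry: listInfo.entry || '',
    // Left as the scraped string, as in the original list data
    listTime: listInfo.listTime || null
  };
}

// Made-up sample data for illustration
var sampleIndex = buildListIndex([
  [{ title: 'A', url: 'u1', entry: 'summary of A', listTime: '2017-09-20 10:30' }]
]);
console.log(mergeArticle(sampleIndex, { id: 1, title: 'A', url: 'u1', body: '<p>A</p>' }));
```

The merged object could then be passed to `saveArticle` in place of the bare result of `filterArticle`.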

Mongoose handles the database operations (`save` inserts a document). The file generation module is now separate from the crawler.

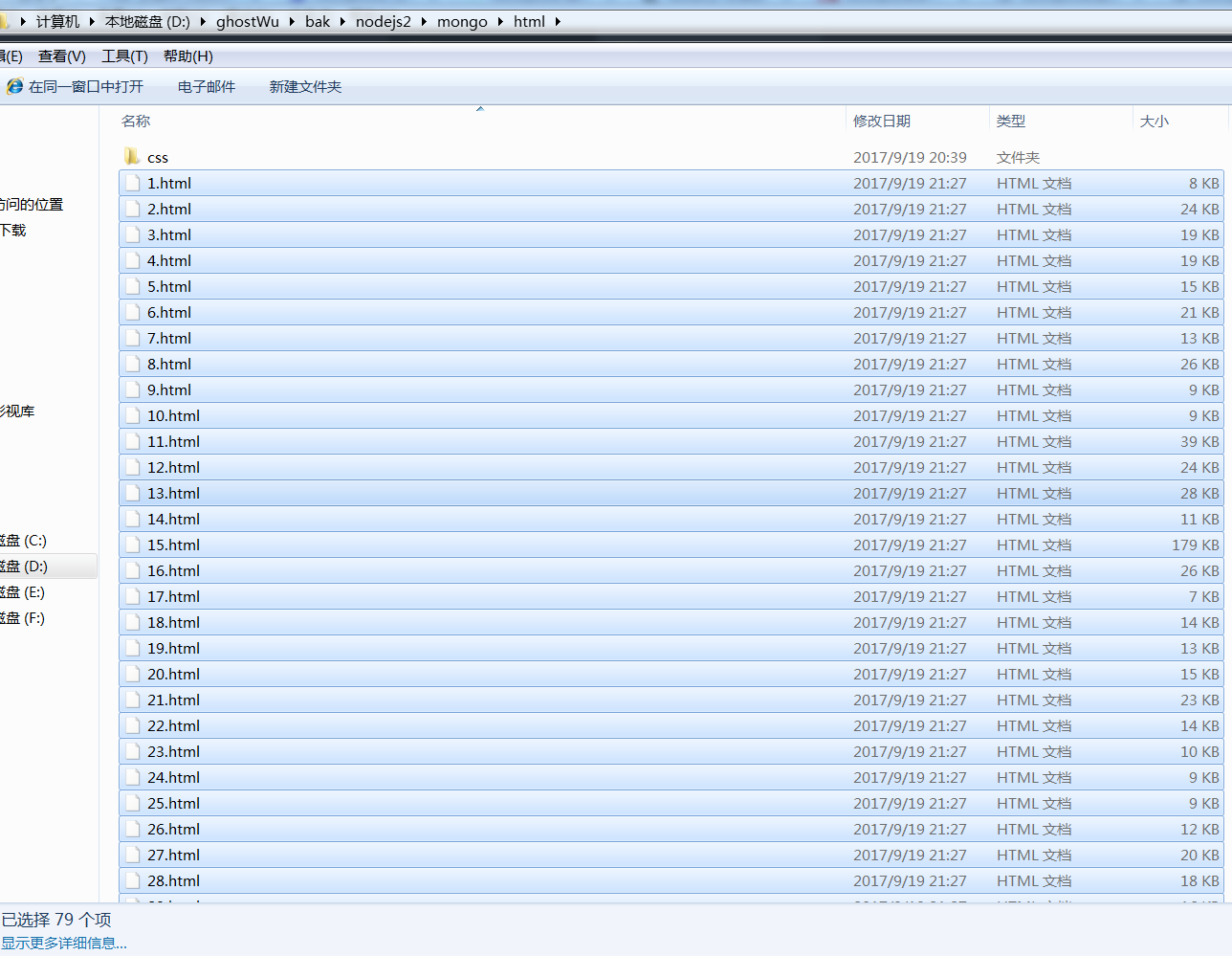

makeHtml.js:

    var fs = require('fs');
    var jade = require('jade');

    var mongoose = require('mongoose');
    mongoose.Promise = global.Promise;
    var DB_URL = 'mongodb://localhost:27017/crawler';

    var allArc = []; // Articles still waiting to be rendered
    var count = 0;   // Number of files written so far, also used as the file name

    var db = mongoose.createConnection(DB_URL);
    db.on('connected', function () {
        console.log('db connected success');
    });
    db.on('error', function (err) {
        console.log(err);
    });

    var Schema = mongoose.Schema;
    var arcSchema = new Schema({
        id: Number,     // Article id
        title: String,  // Article title
        url: String,    // Article link
        body: String,   // Article content
        entry: String,  // Abstract
        listTime: Date  // Release time
    });
    var Article = db.model('Article', arcSchema);

    // Render one article with the jade template, write it, then process the next one
    function makeHtml(arcDetail) {
        var str = jade.renderFile('./views/layout.jade', arcDetail);
        var fileName = (++count) + '.html';
        fs.writeFile('./html/' + fileName, str, function (err) {
            if (err) {
                console.log(err);
            } else {
                console.log(`${fileName} Create success (article id ${arcDetail['id']})`);
            }
            if (allArc.length) {
                setTimeout(function () {
                    makeHtml(allArc.shift());
                }, 100);
            }
        });
    }

    // Load all stored articles, ordered by id, and start generating files
    function getAllArc() {
        // Build the whole query (filter and sort) before executing it
        Article.find({}).sort({ 'id': 1 }).exec(function (err, arcs) {
            if (err) return console.log(err);
            allArc = arcs;
            if (allArc.length) makeHtml(allArc.shift());
        });
    }
    getAllArc();
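
Storing the articles first is what makes selective generation possible. As a rough illustration of that idea, the sketch below picks only the articles released on or after a given date and derives each output path from the article id. `selectArticles` and `htmlPath` are hypothetical names that do not appear in makeHtml.js, and plain objects with made-up values stand in for the documents that `Article.find` would return.

```javascript
// Hypothetical helper: keep articles released on or after `since`,
// ordered by id. The input array is not modified.
function selectArticles(arcs, since) {
  return arcs
    .filter(function (arc) {
      return arc.listTime >= since; // Date objects compare by timestamp
    })
    .sort(function (a, b) {
      return a.id - b.id;
    });
}

// Hypothetical helper: name the file after the article id, so that
// regenerating an article overwrites its earlier file.
function htmlPath(outDir, arc) {
  return outDir + '/' + arc.id + '.html';
}

// Made-up sample records for illustration
var stored = [
  { id: 3, title: 'C', listTime: new Date(2017, 8, 20) },
  { id: 1, title: 'A', listTime: new Date(2017, 5, 1) },
  { id: 2, title: 'B', listTime: new Date(2017, 9, 1) }
];
selectArticles(stored, new Date(2017, 8, 1)).forEach(function (arc) {
  console.log(htmlPath('./html', arc));
});
```

In practice the date filter would more likely be pushed into the database query itself, for example with a `$gte` condition on `listTime`, so that only the needed documents are loaded.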