In the previous article, we captured the RTSP exchange with Wireshark. In this article, we walk through the code to see how each step works.

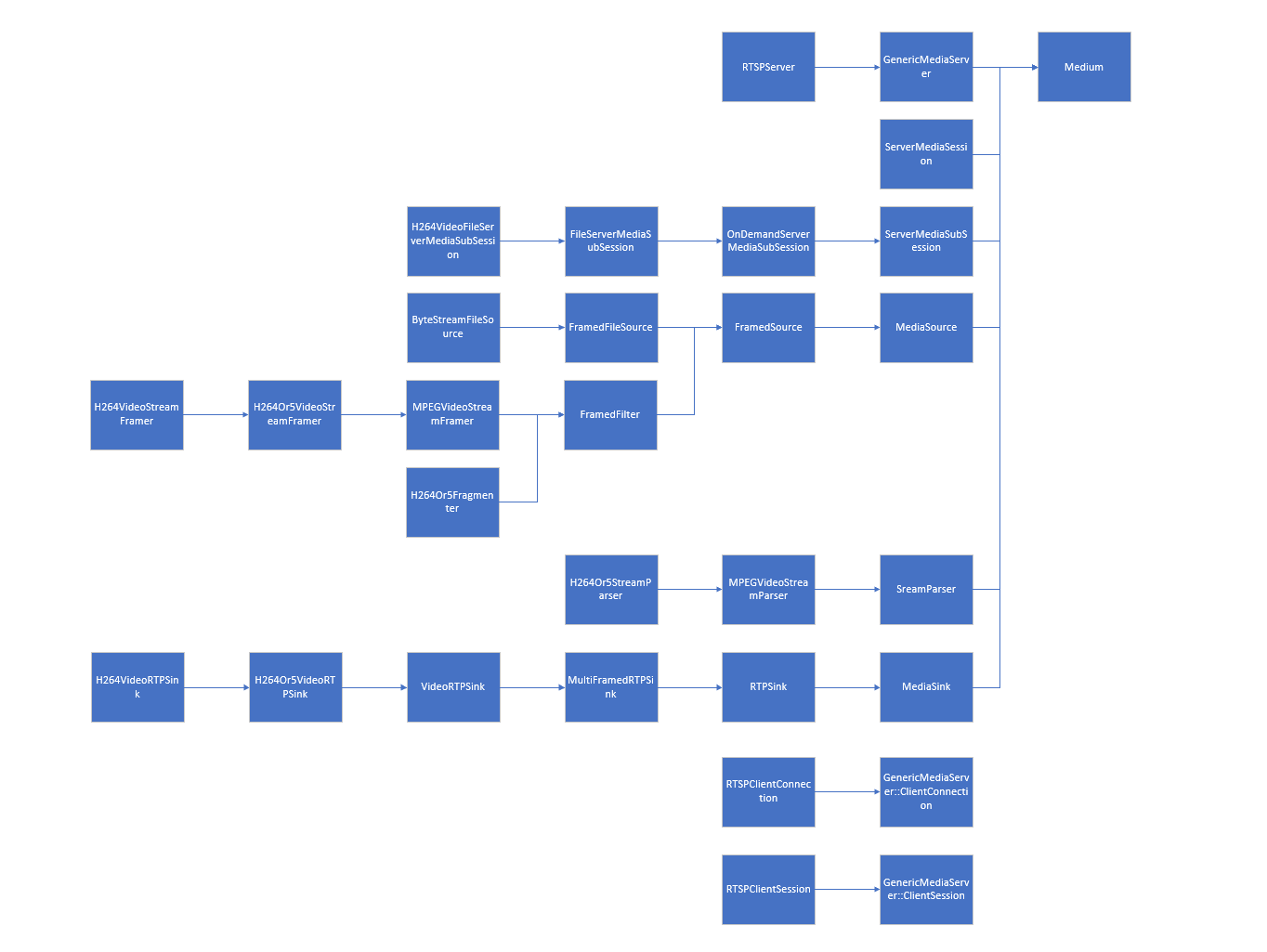

The class hierarchy of live555 is quite complex, so I made a diagram that records the inheritance relationships relevant to H.264 file streaming.

1, OPTIONS

OPTIONS is relatively simple: the client asks the server which methods are available. After receiving the OPTIONS request from the client, the server calls the function handleCmd_OPTIONS.

void RTSPServer::RTSPClientConnection::handleCmd_OPTIONS() {

snprintf((char*)fResponseBuffer, sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\nCSeq: %s\r\n%sPublic: %s\r\n\r\n",

fCurrentCSeq, dateHeader(), fOurRTSPServer.allowedCommandNames());

}

The server simply sends the commands it supports back to the client. The RTSP commands supported by live555 are:

OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE, GET_PARAMETER, SET_PARAMETER.

char const* RTSPServer::allowedCommandNames() {

return "OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE, GET_PARAMETER, SET_PARAMETER";

}
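To make the reply format concrete, here is a minimal standalone sketch that builds the same OPTIONS response outside of live555. The names buildOptionsResponse and allowedCommandNamesSketch are my own, and I am assuming the date header is passed in as a complete line ending in "\r\n"; this is only an illustration, not the library's code.

```cpp
#include <cstdio>
#include <string>

// Hypothetical stand-in for RTSPServer::allowedCommandNames().
static const char* allowedCommandNamesSketch() {
  return "OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE, GET_PARAMETER, SET_PARAMETER";
}

// Builds an OPTIONS reply with the same layout as the format string above.
// Assumption: "dateHeader" is a full header line that already ends in "\r\n".
std::string buildOptionsResponse(const std::string& cseq, const std::string& dateHeader) {
  char buf[512];
  std::snprintf(buf, sizeof buf,
                "RTSP/1.0 200 OK\r\nCSeq: %s\r\n%sPublic: %s\r\n\r\n",
                cseq.c_str(), dateHeader.c_str(), allowedCommandNamesSketch());
  return std::string(buf);
}
```

Calling buildOptionsResponse("2", "Date: ...\r\n") yields a status line, the echoed CSeq, the date line, and a Public header listing the commands, terminated by an empty line.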

2, DESCRIBE

Many classes in live555 use the same names for their key member variables, so I made a diagram that records the actual types of these variables in the H.264 RTP case. If you get confused while reading the code later, refer back to that figure.

In DESCRIBE, the client requests the media description from the server, and the server replies with the SDP information.

1. Process the DESCRIBE command

void RTSPServer::RTSPClientConnection

::handleCmd_DESCRIBE(char const* urlPreSuffix, char const* urlSuffix, char const* fullRequestStr) {

char urlTotalSuffix[2*RTSP_PARAM_STRING_MAX];

// enough space for urlPreSuffix/urlSuffix'\0'

urlTotalSuffix[0] = '\0';

if (urlPreSuffix[0] != '\0') {

strcat(urlTotalSuffix, urlPreSuffix);

strcat(urlTotalSuffix, "/");

}

strcat(urlTotalSuffix, urlSuffix);

if (!authenticationOK("DESCRIBE", urlTotalSuffix, fullRequestStr)) return;

// We should really check that the request contains an "Accept:" #####

// for "application/sdp", because that's what we're sending back #####

// Begin by looking up the "ServerMediaSession" object for the specified "urlTotalSuffix":

fOurServer.lookupServerMediaSession(urlTotalSuffix, DESCRIBELookupCompletionFunction, this);

}

This is the server's handleCmd_DESCRIBE function. It first looks up the ServerMediaSession that corresponds to urlTotalSuffix; once the lookup completes, DESCRIBELookupCompletionFunction is called.

void RTSPServer::RTSPClientConnection

::DESCRIBELookupCompletionFunction(void* clientData, ServerMediaSession* sessionLookedUp) {

RTSPServer::RTSPClientConnection* connection = (RTSPServer::RTSPClientConnection*)clientData;

connection->handleCmd_DESCRIBE_afterLookup(sessionLookedUp);

}

void RTSPServer::RTSPClientConnection

::handleCmd_DESCRIBE_afterLookup(ServerMediaSession* session) {

char* sdpDescription = NULL;

char* rtspURL = NULL;

do {

if (session == NULL) {

handleCmd_notFound();

break;

}

// Increment the "ServerMediaSession" object's reference count, in case someone removes it

// while we're using it:

session->incrementReferenceCount();

// Then, assemble a SDP description for this session:

sdpDescription = session->generateSDPDescription(fAddressFamily);

if (sdpDescription == NULL) {

// This usually means that a file name that was specified for a

// "ServerMediaSubsession" does not exist.

setRTSPResponse("404 File Not Found, Or In Incorrect Format");

break;

}

unsigned sdpDescriptionSize = strlen(sdpDescription);

// Also, generate our RTSP URL, for the "Content-Base:" header

// (which is necessary to ensure that the correct URL gets used in subsequent "SETUP" requests).

rtspURL = fOurRTSPServer.rtspURL(session, fClientInputSocket);

snprintf((char*)fResponseBuffer, sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\nCSeq: %s\r\n"

"%s"

"Content-Base: %s/\r\n"

"Content-Type: application/sdp\r\n"

"Content-Length: %d\r\n\r\n"

"%s",

fCurrentCSeq,

dateHeader(),

rtspURL,

sdpDescriptionSize,

sdpDescription);

} while (0);

if (session != NULL) {

// Decrement its reference count, now that we're done using it:

session->decrementReferenceCount();

if (session->referenceCount() == 0 && session->deleteWhenUnreferenced()) {

fOurServer.removeServerMediaSession(session);

}

}

delete[] sdpDescription;

delete[] rtspURL;

}
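The hand-off from the lookup to the completion function uses a common C++ idiom: a static function receives an opaque void* and casts it back to the object. The following is only a sketch of that idiom under my own assumptions; Session, lookupSession, Connection and the "404"/"200" strings are hypothetical placeholders, not live555 types or responses.

```cpp
#include <string>

struct Session { std::string name; };  // hypothetical stand-in for ServerMediaSession

// Completion callback type: opaque client data plus the lookup result.
typedef void LookupCompletionFunc(void* clientData, Session* sessionLookedUp);

// Hypothetical lookup that only knows a stream named "test.264".
void lookupSession(const std::string& name, LookupCompletionFunc* onDone, void* clientData) {
  static Session known{"test.264"};
  onDone(clientData, name == known.name ? &known : nullptr);
}

class Connection {
public:
  void handleDescribe(const std::string& urlSuffix) {
    // Pass "this" as the opaque client data.
    lookupSession(urlSuffix, lookupDone, this);
  }
  std::string lastResult;  // placeholder for the response that would be sent

private:
  // Static trampoline: recover the object from clientData, then forward.
  static void lookupDone(void* clientData, Session* s) {
    static_cast<Connection*>(clientData)->afterLookup(s);
  }
  void afterLookup(Session* s) {
    lastResult = (s == nullptr) ? "404" : "200";
  }
};
```

The static function is needed because a plain function pointer cannot carry the this pointer; passing it through clientData lets the same callback signature serve any caller.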

2. Generate SDP information

After the session is found, it is asked to generate its SDP description by calling generateSDPDescription:

for (subsession = fSubsessionsHead; subsession != NULL;

subsession = subsession->fNext) {

char const* sdpLines = subsession->sdpLines(addressFamily);

if (sdpLines == NULL) continue; // the media's not available

sdpLength += strlen(sdpLines);

}

When generating the SDP description, the session walks its list of subsessions and collects the SDP lines of each one.

In our example there is only one subsession, an H264VideoFileServerMediaSubsession.

H264VideoFileServerMediaSubsession inherits from OnDemandServerMediaSubsession, so the sdpLines of OnDemandServerMediaSubsession is called to generate the SDP information.
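The loop excerpt above only adds up the lengths. As a rough illustration of the whole idea (walk the list, skip unavailable media, append the rest), here is a small sketch that uses std::string instead of a measured buffer. SubsessionNode and collectSubsessionSDP are names I made up for this example.

```cpp
#include <string>

// Hypothetical stand-in for a ServerMediaSubsession in a singly linked list.
struct SubsessionNode {
  const char* sdp;        // nullptr means "the media is not available"
  SubsessionNode* next;
};

// Concatenates the SDP lines of every available subsession.
std::string collectSubsessionSDP(const SubsessionNode* head) {
  std::string out;
  for (const SubsessionNode* s = head; s != nullptr; s = s->next) {
    if (s->sdp == nullptr) continue;  // skip unavailable media
    out += s->sdp;
  }
  return out;
}
```

With a single video subsession, as in our example, the result is just that subsession's media block.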

3. Obtain sdp information of subsession

char const*

OnDemandServerMediaSubsession::sdpLines(int addressFamily) {

if (fSDPLines == NULL) {

// We need to construct a set of SDP lines that describe this

// subsession (as a unicast stream). To do so, we first create

// dummy (unused) source and "RTPSink" objects,

// whose parameters we use for the SDP lines:

unsigned estBitrate;

FramedSource* inputSource = createNewStreamSource(0, estBitrate);

if (inputSource == NULL) return NULL; // file not found

Groupsock* dummyGroupsock = createGroupsock(nullAddress(addressFamily), 0);

unsigned char rtpPayloadType = 96 + trackNumber()-1; // if dynamic

RTPSink* dummyRTPSink = createNewRTPSink(dummyGroupsock, rtpPayloadType, inputSource);

if (dummyRTPSink != NULL && dummyRTPSink->estimatedBitrate() > 0) estBitrate = dummyRTPSink->estimatedBitrate();

setSDPLinesFromRTPSink(dummyRTPSink, inputSource, estBitrate);

Medium::close(dummyRTPSink);

delete dummyGroupsock;

closeStreamSource(inputSource);

}

return fSDPLines;

}

The SPS and PPS of an H264VideoFileServerMediaSubsession are not known when it is created, so live555 obtains them by reading and parsing the H.264 file as if it were about to send an RTP stream.

First, it creates an input source.

Then it creates a dummy Groupsock with a null address and port 0.

Next, it uses the Groupsock and the input source to create an RTPSink. Because the RTPSink's address and port are dummies, no RTP stream is actually sent.

Finally, setSDPLinesFromRTPSink produces the SDP lines for this subsession.

Since the only purpose of this process is to obtain the SDP information, all of the dummy media objects are released once it has been obtained.
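The pattern here is "build temporary objects once, cache the result, then release them". A minimal sketch of that pattern, assuming a made-up DummySource class and a LazySubsession wrapper (neither is a live555 type), might look like this:

```cpp
#include <memory>
#include <string>

// Hypothetical temporary object whose only job is to describe itself.
struct DummySource {
  std::string describe() const { return "a=control:track1\r\n"; }
};

class LazySubsession {
public:
  int buildCount = 0;  // how many times the temporary object was created

  const std::string& sdpLines() {
    if (fSDPLines.empty()) {
      // Create the temporary object only to read its parameters...
      std::unique_ptr<DummySource> src(new DummySource());
      ++buildCount;
      fSDPLines = src->describe();
      // ...and let it be destroyed when this scope ends.
    }
    return fSDPLines;  // later calls reuse the cached text
  }

private:
  std::string fSDPLines;  // cached result
};
```

The second and later calls return the cached string without creating anything, which mirrors the fSDPLines == NULL check in sdpLines.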

4. Create media input source

The subsession is of type H264VideoFileServerMediaSubsession, so H264VideoFileServerMediaSubsession::createNewStreamSource is called.

FramedSource* H264VideoFileServerMediaSubsession::createNewStreamSource(unsigned /*clientSessionId*/, unsigned& estBitrate) {

estBitrate = 500; // kbps, estimate

// Create the video source:

ByteStreamFileSource* fileSource = ByteStreamFileSource::createNew(envir(), fFileName);

if (fileSource == NULL) return NULL;

fFileSize = fileSource->fileSize();

// Create a framer for the Video Elementary Stream:

return H264VideoStreamFramer::createNew(envir(), fileSource);

}

This function first creates the file source, a byte-stream source of type ByteStreamFileSource.

It then wraps this file source in an H264VideoStreamFramer.

H264VideoStreamFramer

::H264VideoStreamFramer(UsageEnvironment& env, FramedSource* inputSource, Boolean createParser,

Boolean includeStartCodeInOutput, Boolean insertAccessUnitDelimiters)

: H264or5VideoStreamFramer(264, env, inputSource, createParser,

includeStartCodeInOutput, insertAccessUnitDelimiters) {

}

H264VideoStreamFramer inherits from H264or5VideoStreamFramer.

H264or5VideoStreamFramer

::H264or5VideoStreamFramer(int hNumber, UsageEnvironment& env, FramedSource* inputSource,

Boolean createParser,

Boolean includeStartCodeInOutput, Boolean insertAccessUnitDelimiters)

: MPEGVideoStreamFramer(env, inputSource),

fHNumber(hNumber), fIncludeStartCodeInOutput(includeStartCodeInOutput),

fInsertAccessUnitDelimiters(insertAccessUnitDelimiters),

fLastSeenVPS(NULL), fLastSeenVPSSize(0),

fLastSeenSPS(NULL), fLastSeenSPSSize(0),

fLastSeenPPS(NULL), fLastSeenPPSSize(0) {

fParser = createParser

? new H264or5VideoStreamParser(hNumber, this, inputSource, includeStartCodeInOutput)

: NULL;

fFrameRate = 30.0; // We assume a frame rate of 30 fps, unless we learn otherwise (from parsing a VPS or SPS NAL unit)

}

When createParser is true, the constructor creates an H264or5VideoStreamParser to parse the H.264 file.

5. Obtain SDPLine

void OnDemandServerMediaSubsession

::setSDPLinesFromRTPSink(RTPSink* rtpSink, FramedSource* inputSource, unsigned estBitrate) {

if (rtpSink == NULL) return;

char const* mediaType = rtpSink->sdpMediaType();

unsigned char rtpPayloadType = rtpSink->rtpPayloadType();

struct sockaddr_storage const& addressForSDP = rtpSink->groupsockBeingUsed().groupAddress();

portNumBits portNumForSDP = ntohs(rtpSink->groupsockBeingUsed().port().num());

AddressString ipAddressStr(addressForSDP);

char* rtpmapLine = rtpSink->rtpmapLine();

char const* rtcpmuxLine = fMultiplexRTCPWithRTP ? "a=rtcp-mux\r\n" : "";

char const* rangeLine = rangeSDPLine();

char const* auxSDPLine = getAuxSDPLine(rtpSink, inputSource);

if (auxSDPLine == NULL) auxSDPLine = "";

char const* const sdpFmt =

"m=%s %u RTP/AVP %d\r\n"

"c=IN %s %s\r\n"

"b=AS:%u\r\n"

"%s"

"%s"

"%s"

"%s"

"a=control:%s\r\n";

unsigned sdpFmtSize = strlen(sdpFmt)

+ strlen(mediaType) + 5 /* max short len */ + 3 /* max char len */

+ 3/*IP4 or IP6*/ + strlen(ipAddressStr.val())

+ 20 /* max int len */

+ strlen(rtpmapLine)

+ strlen(rtcpmuxLine)

+ strlen(rangeLine)

+ strlen(auxSDPLine)

+ strlen(trackId());

char* sdpLines = new char[sdpFmtSize];

sprintf(sdpLines, sdpFmt,

mediaType, // m= <media>

portNumForSDP, // m= <port>

rtpPayloadType, // m= <fmt list>

addressForSDP.ss_family == AF_INET ? "IP4" : "IP6", ipAddressStr.val(), // c= address

estBitrate, // b=AS:<bandwidth>

rtpmapLine, // a=rtpmap:... (if present)

rtcpmuxLine, // a=rtcp-mux:... (if present)

rangeLine, // a=range:... (if present)

auxSDPLine, // optional extra SDP line

trackId()); // a=control:<track-id>

delete[] (char*)rangeLine; delete[] rtpmapLine;

delete[] fSDPLines; fSDPLines = strDup(sdpLines);

delete[] sdpLines;

}

This produces the media-level SDP fields: media type, payload type, IP address, and so on.
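To see what the resulting text looks like, here is a simplified sketch that assembles the same kind of media block with std::string. It leaves out the rtcp-mux and range lines for brevity, and buildMediaSDP with its parameters is a hypothetical helper of mine, not part of live555.

```cpp
#include <string>

// Assembles the m=, c=, b= and a=control lines, with optional rtpmap and
// auxiliary lines in between. Each optional line must end in "\r\n" if present.
std::string buildMediaSDP(const std::string& mediaType, unsigned port, unsigned payloadType,
                          bool ipv4, const std::string& address, unsigned bitrateKbps,
                          const std::string& rtpmapLine, const std::string& auxLine,
                          const std::string& trackId) {
  std::string s;
  s += "m=" + mediaType + " " + std::to_string(port) +
       " RTP/AVP " + std::to_string(payloadType) + "\r\n";
  s += std::string("c=IN ") + (ipv4 ? "IP4 " : "IP6 ") + address + "\r\n";
  s += "b=AS:" + std::to_string(bitrateKbps) + "\r\n";
  s += rtpmapLine;  // a=rtpmap:... (may be empty)
  s += auxLine;     // e.g. an a=fmtp line (may be empty)
  s += "a=control:" + trackId + "\r\n";
  return s;
}
```

For a dummy sink with a null address and port 0, the m= line carries port 0 and the c= line carries 0.0.0.0, which is what a client typically sees in an on-demand unicast description.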

The important part is the getAuxSDPLine function, which generates the SDP information specific to the H.264 file.

Because the subsession is an H264VideoFileServerMediaSubsession, its getAuxSDPLine is the one that gets called.

char const* H264VideoFileServerMediaSubsession::getAuxSDPLine(RTPSink* rtpSink, FramedSource* inputSource) {

if (fAuxSDPLine != NULL) return fAuxSDPLine; // it's already been set up (for a previous client)

if (fDummyRTPSink == NULL) { // we're not already setting it up for another, concurrent stream

// Note: For H264 video files, the 'config' information ("profile-level-id" and "sprop-parameter-sets") isn't known

// until we start reading the file. This means that "rtpSink"s "auxSDPLine()" will be NULL initially,

// and we need to start reading data from our file until this changes.

fDummyRTPSink = rtpSink;

// Start reading the file:

fDummyRTPSink->startPlaying(*inputSource, afterPlayingDummy, this);

// Check whether the sink's 'auxSDPLine()' is ready:

checkForAuxSDPLine(this);

}

envir().taskScheduler().doEventLoop(&fDoneFlag);

return fAuxSDPLine;

}

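This function checks whether the auxiliary SDP line has already been generated. If not, it starts the dummy RTPSink so that the line can be generated, and calls checkForAuxSDPLine to check whether it is ready. It then runs the event loop until fDoneFlag is set.

Let's first look at checkForAuxSDPLine. The static function passed to the scheduler forwards to the member function checkForAuxSDPLine1: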

void H264VideoFileServerMediaSubsession::checkForAuxSDPLine1() {

nextTask() = NULL;

char const* dasl;

if (fAuxSDPLine != NULL) {

// Signal the event loop that we're done:

setDoneFlag();

} else if (fDummyRTPSink != NULL && (dasl = fDummyRTPSink->auxSDPLine()) != NULL) {

fAuxSDPLine = strDup(dasl);

fDummyRTPSink = NULL;

// Signal the event loop that we're done:

setDoneFlag();

} else if (!fDoneFlag) {

// try again after a brief delay:

int uSecsToDelay = 100000; // 100 ms

nextTask() = envir().taskScheduler().scheduleDelayedTask(uSecsToDelay,

(TaskFunc*)checkForAuxSDPLine, this);

}

}

As you can see, this function repeatedly checks whether fAuxSDPLine, or the auxiliary SDP line of the dummy RTPSink, is available. If neither is, it checks again after 100 ms. Once the line is available, fDoneFlag is set, which makes the doEventLoop call in getAuxSDPLine return.
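The interplay between the rescheduled check and the watched flag can be hard to picture, so here is a toy model of it. MiniScheduler and Poller are hypothetical classes of mine; the real task scheduler uses timed delays and socket handling, which this sketch replaces with a plain FIFO of tasks.

```cpp
#include <functional>
#include <queue>
#include <utility>

// Minimal single-threaded "scheduler": a FIFO of pending tasks.
struct MiniScheduler {
  std::queue<std::function<void()>> tasks;

  void schedule(std::function<void()> t) { tasks.push(std::move(t)); }

  // Runs tasks until the watched flag becomes non-zero or no tasks remain.
  void doEventLoop(char* watchFlag) {
    while (*watchFlag == 0 && !tasks.empty()) {
      std::function<void()> t = std::move(tasks.front());
      tasks.pop();
      t();
    }
  }
};

struct Poller {
  MiniScheduler& sched;
  int polls = 0;
  int readyAfter;     // assumption: the result becomes ready on this poll
  char doneFlag = 0;

  Poller(MiniScheduler& s, int n) : sched(s), readyAfter(n) {}

  void check() {
    ++polls;
    if (polls >= readyAfter) {
      doneFlag = 1;                          // signal the event loop to stop
    } else {
      sched.schedule([this] { check(); });   // not ready: try again later
    }
  }
};
```

Calling check() once and then doEventLoop(&doneFlag) reproduces the shape of getAuxSDPLine: the loop keeps running rescheduled checks and returns as soon as the flag is set.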

char const* H264VideoRTPSink::auxSDPLine() {

// Generate a new "a=fmtp:" line each time, using our SPS and PPS (if we have them),

// otherwise parameters from our framer source (in case they've changed since the last time that

// we were called):

H264or5VideoStreamFramer* framerSource = NULL;

u_int8_t* vpsDummy = NULL; unsigned vpsDummySize = 0;

u_int8_t* sps = fSPS; unsigned spsSize = fSPSSize;

u_int8_t* pps = fPPS; unsigned ppsSize = fPPSSize;

if (sps == NULL || pps == NULL) {

// We need to get SPS and PPS from our framer source:

if (fOurFragmenter == NULL) return NULL; // we don't yet have a fragmenter (and therefore not a source)

framerSource = (H264or5VideoStreamFramer*)(fOurFragmenter->inputSource());

if (framerSource == NULL) return NULL; // we don't yet have a source

framerSource->getVPSandSPSandPPS(vpsDummy, vpsDummySize, sps, spsSize, pps, ppsSize);

if (sps == NULL || pps == NULL) return NULL; // our source isn't ready

}

// Set up the "a=fmtp:" SDP line for this stream:

u_int8_t* spsWEB = new u_int8_t[spsSize]; // "WEB" means "Without Emulation Bytes"

unsigned spsWEBSize = removeH264or5EmulationBytes(spsWEB, spsSize, sps, spsSize);

if (spsWEBSize < 4) { // Bad SPS size => assume our source isn't ready

delete[] spsWEB;

return NULL;

}

u_int32_t profileLevelId = (spsWEB[1]<<16) | (spsWEB[2]<<8) | spsWEB[3];

delete[] spsWEB;

char* sps_base64 = base64Encode((char*)sps, spsSize);

char* pps_base64 = base64Encode((char*)pps, ppsSize);

char const* fmtpFmt =

"a=fmtp:%d packetization-mode=1"

";profile-level-id=%06X"

";sprop-parameter-sets=%s,%s\r\n";

unsigned fmtpFmtSize = strlen(fmtpFmt)

+ 3 /* max char len */

+ 6 /* 3 bytes in hex */

+ strlen(sps_base64) + strlen(pps_base64);

char* fmtp = new char[fmtpFmtSize];

sprintf(fmtp, fmtpFmt,

rtpPayloadType(),

profileLevelId,

sps_base64, pps_base64);

delete[] sps_base64;

delete[] pps_base64;

delete[] fFmtpSDPLine; fFmtpSDPLine = fmtp;

return fFmtpSDPLine;

}

This function checks whether the SPS and PPS are available. If so, it builds the corresponding a=fmtp SDP line from them.
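Two steps in this function are worth isolating: stripping the emulation prevention bytes and deriving profile-level-id from bytes 1 to 3 of the SPS. The sketch below is my own standalone version of those two steps, written with std::vector; removeEmulationBytes and profileLevelId are illustrative names, not the live555 functions.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Drops each emulation prevention byte: the 0x03 in a 0x00 0x00 0x03 sequence.
std::vector<std::uint8_t> removeEmulationBytes(const std::vector<std::uint8_t>& in) {
  std::vector<std::uint8_t> out;
  std::size_t i = 0;
  while (i < in.size()) {
    if (i + 2 < in.size() && in[i] == 0 && in[i + 1] == 0 && in[i + 2] == 3) {
      out.push_back(0);
      out.push_back(0);
      i += 3;  // skip the 0x03
    } else {
      out.push_back(in[i]);
      ++i;
    }
  }
  return out;
}

// Formats bytes 1..3 of the SPS NAL unit as six uppercase hex digits.
// Returns an empty string if the SPS is too short to contain them.
std::string profileLevelId(const std::vector<std::uint8_t>& sps) {
  std::vector<std::uint8_t> web = removeEmulationBytes(sps);
  if (web.size() < 4) return "";
  unsigned id = (web[1] << 16) | (web[2] << 8) | web[3];
  char buf[7];
  std::snprintf(buf, sizeof buf, "%06X", id);
  return buf;
}
```

For an SPS that starts with 67 42 00 1E, this gives 42001E, i.e. profile_idc 0x42 (Baseline), constraint flags 0x00, and level_idc 0x1E (level 3.0).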

Next, let's go back and see how the SPS and PPS are obtained once the RTPSink has been started.

6. Start RTPSink

Boolean MediaSink::startPlaying(MediaSource& source,

afterPlayingFunc* afterFunc,

void* afterClientData) {

// Make sure we're not already being played:

if (fSource != NULL) {

envir().setResultMsg("This sink is already being played");

return False;

}

// Make sure our source is compatible:

if (!sourceIsCompatibleWithUs(source)) {

envir().setResultMsg("MediaSink::startPlaying(): source is not compatible!");

return False;

}

fSource = (FramedSource*)&source;

fAfterFunc = afterFunc;

fAfterClientData = afterClientData;

return continuePlaying();

}

startPlaying ends by calling the virtual function continuePlaying. Our RTPSink is an H264VideoRTPSink, which inherits from H264or5VideoRTPSink, so H264or5VideoRTPSink::continuePlaying is called.

Boolean H264or5VideoRTPSink::continuePlaying() {

// First, check whether we have a 'fragmenter' class set up yet.

// If not, create it now:

if (fOurFragmenter == NULL) {

fOurFragmenter = new H264or5Fragmenter(fHNumber, envir(), fSource, OutPacketBuffer::maxSize,

ourMaxPacketSize() - 12/*RTP hdr size*/);

} else {

fOurFragmenter->reassignInputSource(fSource);

}

fSource = fOurFragmenter;

// Then call the parent class's implementation:

return MultiFramedRTPSink::continuePlaying();

}

Here an H264or5Fragmenter is created (or, if one already exists, given the new input source) and installed as fSource. The parent class's MultiFramedRTPSink::continuePlaying is then called:

Boolean MultiFramedRTPSink::continuePlaying() {

// Send the first packet.

// (This will also schedule any future sends.)

buildAndSendPacket(True);

return True;

}

...

void MultiFramedRTPSink::buildAndSendPacket(Boolean isFirstPacket) {

nextTask() = NULL;

fIsFirstPacket = isFirstPacket;

// Set up the RTP header:

unsigned rtpHdr = 0x80000000; // RTP version 2; marker ('M') bit not set (by default; it can be set later)

rtpHdr |= (fRTPPayloadType<<16);

rtpHdr |= fSeqNo; // sequence number

fOutBuf->enqueueWord(rtpHdr);

// Note where the RTP timestamp will go.

// (We can't fill this in until we start packing payload frames.)

fTimestampPosition = fOutBuf->curPacketSize();

fOutBuf->skipBytes(4); // leave a hole for the timestamp

fOutBuf->enqueueWord(SSRC());

// Allow for a special, payload-format-specific header following the

// RTP header:

fSpecialHeaderPosition = fOutBuf->curPacketSize();

fSpecialHeaderSize = specialHeaderSize();

fOutBuf->skipBytes(fSpecialHeaderSize);

// Begin packing as many (complete) frames into the packet as we can:

fTotalFrameSpecificHeaderSizes = 0;

fNoFramesLeft = False;

fNumFramesUsedSoFar = 0;

packFrame();

}

void MultiFramedRTPSink::packFrame() {

// Get the next frame.

// First, skip over the space we'll use for any frame-specific header:

fCurFrameSpecificHeaderPosition = fOutBuf->curPacketSize();

fCurFrameSpecificHeaderSize = frameSpecificHeaderSize();

fOutBuf->skipBytes(fCurFrameSpecificHeaderSize);

fTotalFrameSpecificHeaderSizes += fCurFrameSpecificHeaderSize;

// See if we have an overflow frame that was too big for the last pkt

if (fOutBuf->haveOverflowData()) {

// Use this frame before reading a new one from the source

unsigned frameSize = fOutBuf->overflowDataSize();

struct timeval presentationTime = fOutBuf->overflowPresentationTime();

unsigned durationInMicroseconds = fOutBuf->overflowDurationInMicroseconds();

fOutBuf->useOverflowData();

afterGettingFrame1(frameSize, 0, presentationTime, durationInMicroseconds);

} else {

// Normal case: we need to read a new frame from the source

if (fSource == NULL) return;

fSource->getNextFrame(fOutBuf->curPtr(), fOutBuf->totalBytesAvailable(),

afterGettingFrame, this, ourHandleClosure, this);

}

}
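The first word of the RTP header built in buildAndSendPacket packs several fields into 32 bits. As a small illustration, the following sketch reproduces that bit layout; makeRtpHeaderWord and setMarkerBitSketch are names I chose for this example.

```cpp
#include <cstdint>

// Packs the first 32-bit word of an RTP header:
// version 2 (top two bits = 10), 7-bit payload type, 16-bit sequence number.
// Padding, extension, CSRC count and the marker bit are left at zero.
std::uint32_t makeRtpHeaderWord(std::uint8_t payloadType, std::uint16_t seqNo) {
  std::uint32_t w = 0x80000000u;
  w |= static_cast<std::uint32_t>(payloadType & 0x7F) << 16;
  w |= seqNo;
  return w;
}

// Sets the marker ('M') bit, which sits just above the payload type.
std::uint32_t setMarkerBitSketch(std::uint32_t w) { return w | 0x00800000u; }
```

With payload type 96 and sequence number 1, the word is 0x80600001; setting the marker bit turns it into 0x80E00001.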

continuePlaying calls buildAndSendPacket, which in turn calls packFrame. When there is no overflow data left over from the previous packet, packFrame calls fSource->getNextFrame. Remember that this fSource is the fragmenter created earlier, of type H264or5Fragmenter.

getNextFrame calls the virtual function doGetNextFrame, which each subclass implements.

So here H264or5Fragmenter::doGetNextFrame is called.

if (fNumValidDataBytes == 1) {

// We have no NAL unit data currently in the buffer. Read a new one:

fInputSource->getNextFrame(&fInputBuffer[1], fInputBufferSize - 1,

afterGettingFrame, this,

FramedSource::handleClosure, this);

}

H264or5Fragmenter::doGetNextFrame in turn calls fInputSource->getNextFrame.

This input source is of type H264VideoStreamFramer; see the diagram of key variable types.

So H264VideoStreamFramer::doGetNextFrame is invoked. H264VideoStreamFramer inherits from H264or5VideoStreamFramer and does not override this virtual function, so H264or5VideoStreamFramer::doGetNextFrame is what runs:

else {

// Do the normal delivery of a NAL unit from the parser:

MPEGVideoStreamFramer::doGetNextFrame();

}

This calls MPEGVideoStreamFramer::doGetNextFrame():

void MPEGVideoStreamFramer::doGetNextFrame() {

fParser->registerReadInterest(fTo, fMaxSize);

continueReadProcessing();

}
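The chain traced so far (sink, fragmenter, framer, file source) relies on one mechanism: a non-virtual getNextFrame that forwards to a virtual doGetNextFrame, with each stage pulling from the stage upstream. Here is a stripped-down sketch of that mechanism. SourceSketch, FileSourceSketch and FilterSketch are hypothetical classes, and the trace vector exists only to make the call order visible.

```cpp
#include <string>
#include <vector>

// Base class: the public entry point forwards to a virtual hook.
class SourceSketch {
public:
  virtual ~SourceSketch() {}
  void getNextFrame(std::vector<std::string>& trace) { doGetNextFrame(trace); }
protected:
  virtual void doGetNextFrame(std::vector<std::string>& trace) = 0;
};

// End of the chain: stands in for the file reader.
class FileSourceSketch : public SourceSketch {
protected:
  void doGetNextFrame(std::vector<std::string>& trace) override {
    trace.push_back("file");
  }
};

// A filter records its own name, then pulls from its upstream source.
class FilterSketch : public SourceSketch {
public:
  FilterSketch(const std::string& name, SourceSketch* input)
      : fName(name), fInput(input) {}
protected:
  void doGetNextFrame(std::vector<std::string>& trace) override {
    trace.push_back(fName);
    fInput->getNextFrame(trace);
  }
private:
  std::string fName;
  SourceSketch* fInput;
};
```

Stacking a "fragmenter" filter on a "framer" filter on a file source and calling getNextFrame on the outermost one visits them in that order, which is the same direction the real calls travel.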

continueReadProcessing then calls the parser's parse function:

unsigned acquiredFrameSize = fParser->parse();

fParser is of type H264or5VideoStreamParser, so H264or5VideoStreamParser::parse is called.

In parse, the file data is read and parsed. After parsing a NAL unit, the parser checks whether it is an SPS or a PPS and, if so, saves a copy of it. At this point the SPS and PPS are available.

usingSource()->saveCopyOfSPS(fStartOfFrame + fOutputStartCodeSize, curFrameSize() - fOutputStartCodeSize);

...

usingSource()->saveCopyOfPPS(fStartOfFrame + fOutputStartCodeSize, curFrameSize() - fOutputStartCodeSize);
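To illustrate how an SPS or PPS is recognized, the following sketch scans a complete Annex B buffer for start codes and keeps the NAL units whose type (the low 5 bits of the first byte) is 7 or 8. This is a simplification I wrote for this article: the real parser works incrementally on a stream, and findParameterSets and ParameterSets are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct ParameterSets {
  std::vector<std::uint8_t> sps;
  std::vector<std::uint8_t> pps;
};

// Scans for 00 00 01 start codes (a 4-byte 00 00 00 01 code ends in the same
// three bytes) and stores the most recent SPS (type 7) and PPS (type 8).
ParameterSets findParameterSets(const std::vector<std::uint8_t>& data) {
  ParameterSets result;
  std::vector<std::size_t> starts;  // index of the first byte after each start code
  for (std::size_t i = 0; i + 2 < data.size(); ++i) {
    if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
      starts.push_back(i + 3);
      i += 2;  // continue scanning after this start code
    }
  }
  for (std::size_t k = 0; k < starts.size(); ++k) {
    std::size_t begin = starts[k];
    // The NAL unit runs up to the next start code, or to the end of the data.
    std::size_t end = (k + 1 < starts.size()) ? starts[k + 1] - 3 : data.size();
    // Drop trailing zero bytes, e.g. the leading zero of a 4-byte start code.
    while (end > begin && data[end - 1] == 0) --end;
    if (begin >= end) continue;
    unsigned nalType = data[begin] & 0x1F;
    if (nalType == 7) result.sps.assign(data.begin() + begin, data.begin() + end);
    if (nalType == 8) result.pps.assign(data.begin() + begin, data.begin() + end);
  }
  return result;
}
```

Given a buffer containing an SPS, a PPS and an IDR slice, the function returns the first two without their start codes, which is the form that gets base64-encoded into sprop-parameter-sets.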

With the SPS and PPS available, the H264VideoRTPSink::auxSDPLine() function shown earlier can generate the corresponding SDP line. The complete SDP description is then assembled and sent to the client, which completes the DESCRIBE exchange.