Concurrent programming

Abstract

- This chapter discusses concurrent programming, introduces the concept of parallel computing, and points out why parallel computing is important;

- Sequential and parallel algorithms are compared, as are parallelism and concurrency;

- The principle of threads and their advantages over processes are explained;

- Thread operations in Pthreads are introduced through examples, including the thread management functions and thread synchronization tools such as mutexes, join, condition variables, and barriers;

- Concrete examples demonstrate how to program with concurrent threads, including matrix computation, quicksort, and solving systems of linear equations;

- The deadlock problem is explained, together with how to prevent deadlock in concurrent programs;

- Semaphores are discussed and their advantages over condition variables are demonstrated.

Parallelism and concurrency

- True parallel execution can only be implemented in systems with multiple processing components, such as multiprocessor or multi-core systems.

- On a single-CPU system, concurrency is achieved by multitasking: the CPU is switched among the tasks so that they take turns executing.
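Whether threads can actually execute in parallel depends on how many processing units are available. As a minimal sketch, assuming Linux/glibc or a BSD-derived system (the `_SC_NPROCESSORS_ONLN` name for `sysconf()` is a widely supported extension, not part of POSIX itself), the hypothetical helper `online_cpus()` reports that number:

```c
#include <unistd.h>

/* Hypothetical helper: number of processors currently online.
   _SC_NPROCESSORS_ONLN is a common extension (glibc, BSDs, macOS),
   not POSIX proper. Falls back to 1 if the value is unavailable. */
long online_cpus(void)
{
    long n = sysconf(_SC_NPROCESSORS_ONLN);
    return n > 0 ? n : 1;
}
```

On a machine where it returns 1, threads can still run concurrently, but only by taking turns on the single CPU.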

Thread

- Principle of threads

- In user mode, each process executes in a unique address space that is separate from those of other processes;

- In this process model, each process is an independent unit with only one execution path;

- A thread is an independent execution unit within the address space of a process. A process with only one (main) thread is essentially no different from a traditional process.
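Because all threads of a process share one address space, a global variable written by one thread is the very same object another thread reads. A minimal sketch (compile with `-pthread`); `shared_value`, `set_shared()`, and `shared_address_space_demo()` are hypothetical names used only for illustration:

```c
#include <pthread.h>
#include <stddef.h>

/* A global variable lives in the process address space,
   so every thread of the process sees the same object. */
static int shared_value = 0;

static void *set_shared(void *arg)
{
    shared_value = *(int *)arg;   /* written by the new thread */
    return NULL;
}

/* Hypothetical demo: a second thread stores v into the global;
   after pthread_join() the calling thread reads the same variable.
   Returns the value the caller observes, or -1 if creation fails. */
int shared_address_space_demo(int v)
{
    pthread_t t;

    if (pthread_create(&t, NULL, set_shared, &v) != 0)
        return -1;
    pthread_join(t, NULL);        /* join also orders the memory accesses */
    return shared_value;
}
```

Two processes created with `fork()` would instead each work on their own copy of such a variable.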

- Advantages of threads

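- Thread creation and switching are faster, because threads share the address space of their process, so no new address space has to be set up or switched.

- Threads respond faster: when one thread is suspended, other threads of the same process can continue to run, whereas a suspended single-path process stops entirely.

- Threads are better suited to parallel computing, because they can share data in a common address space.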

- Disadvantages of threads

- Because threads share the address space, the programmer must synchronize them explicitly.

- Many library functions may not be thread-safe; strtok(), for example, keeps its scanning state in static storage.

- On a single-CPU system, solving a problem with threads is actually slower than with a sequential program, because of the overhead of creating threads and switching contexts at run time.
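`strtok()` is a classic thread-unsafe function: it remembers its position in hidden static storage, so two threads tokenizing different strings interfere with each other. POSIX provides the reentrant `strtok_r()`, which keeps that position in a caller-supplied pointer. A minimal sketch; `count_words()` is a hypothetical helper, safe to call from several threads at once because all of its state is local (inputs longer than 255 characters are truncated in this sketch):

```c
#define _POSIX_C_SOURCE 200809L
#include <string.h>

/* Counts space/tab-separated words in s.
   Works on a local copy because strtok_r() modifies its input;
   the scan position lives in the local `save`, not in static
   storage as it does with strtok(). */
int count_words(const char *s)
{
    char buf[256];
    char *save = NULL;
    int n = 0;

    strncpy(buf, s, sizeof buf - 1);
    buf[sizeof buf - 1] = '\0';

    for (char *tok = strtok_r(buf, " \t", &save); tok != NULL;
         tok = strtok_r(NULL, " \t", &save))
        n++;
    return n;
}
```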

- Thread operations

- Threads can execute in kernel mode or in user mode.

- In user mode, threads execute in the same address space of the process, but each thread has its own execution stack.

- As independent execution units, threads can make system calls to the kernel, be suspended, and be reactivated to continue execution, all subject to the scheduling policy of the OS kernel.

- To take advantage of the shared address space, the kernel's scheduling policy may favor threads of the same process over threads of different processes.

- Thread management functions

- The Pthread library provides the following APIs for thread management:

- pthread_create(thread, attr, function, arg): create a thread

- pthread_exit(status): terminate the calling thread

- pthread_cancel(thread): cancel a thread

- pthread_attr_init(attr): initialize thread attributes

- pthread_attr_destroy(attr): destroy thread attributes
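Of these, `pthread_cancel()` is the least obvious: with the default (deferred) cancellation type, the request only takes effect when the target thread reaches a cancellation point such as `sleep()`, and a thread that joins it then receives the special status `PTHREAD_CANCELED`. A minimal sketch (compile with `-pthread`); `sleeper()` and `cancel_demo()` are hypothetical names:

```c
#include <pthread.h>
#include <unistd.h>

/* Worker that never finishes on its own; sleep() is a cancellation
   point, so a pending cancel request is acted upon there. */
static void *sleeper(void *arg)
{
    (void)arg;
    for (;;)
        sleep(1);
    return NULL;  /* not reached */
}

/* Hypothetical demo: create a thread, cancel it, and report whether
   pthread_join() saw PTHREAD_CANCELED (1 = yes, -1 = create failed). */
int cancel_demo(void)
{
    pthread_t t;
    void *status = NULL;

    if (pthread_create(&t, NULL, sleeper, NULL) != 0)
        return -1;
    pthread_cancel(t);            /* request cancellation (deferred) */
    pthread_join(t, &status);     /* wait until the thread is gone */
    return status == PTHREAD_CANCELED;
}
```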

- Creating a thread

- A thread is created with the pthread_create() function:

`int pthread_create(pthread_t *pthread_id, pthread_attr_t *attr, void *(*func)(void *), void *arg);`

- It returns 0 on success (storing the new thread's ID in *pthread_id) and an error number on failure; the new thread starts by executing func(arg).

- Of these parameters, attr is the most complex. It is used in the following steps:

- Define a pthread attribute variable: pthread_attr_t attr;

- Initialize it with pthread_attr_init(&attr);

- Set the desired attributes in attr and pass attr to the pthread_create() call;

- If necessary, release the resources of attr with pthread_attr_destroy(&attr).

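The four attr steps can be sketched as follows (compile with `-pthread`). This is an illustration under assumptions: `sum_to_n()` and `sum_with_attr()` are hypothetical names, and the joinable detach state and 1 MiB stack size are arbitrary example settings. Destroying attr right after `pthread_create()` returns is allowed and does not affect the running thread.

```c
#include <pthread.h>
#include <stddef.h>

/* Worker: replaces *arg (= n) with 1 + 2 + ... + n. */
static void *sum_to_n(void *arg)
{
    long *p = arg;
    long s = 0;
    for (long i = 1; i <= *p; i++)
        s += i;
    *p = s;
    return NULL;
}

/* Hypothetical demo of the attr steps; returns 1+...+n, or -1 on error. */
long sum_with_attr(long n)
{
    pthread_attr_t attr;                        /* 1. define */
    pthread_t t;
    long value = n;

    if (pthread_attr_init(&attr) != 0)          /* 2. initialize */
        return -1;
    pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE); /* 3. set */
    pthread_attr_setstacksize(&attr, 1024 * 1024);
    int rc = pthread_create(&t, &attr, sum_to_n, &value);        /* 3. use */
    pthread_attr_destroy(&attr);                /* 4. release attr */
    if (rc != 0)
        return -1;
    pthread_join(t, NULL);                      /* value is final after join */
    return value;
}
```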

- Thread termination

- A thread terminates when its thread function returns. A thread can also terminate explicitly by calling void pthread_exit(void *status);, where status is the exit status of the thread.
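`pthread_exit()` ends the calling thread even when it is called from a nested function rather than from the thread function itself. A minimal sketch (compile with `-pthread`); `finish()`, `worker()`, and `exit_status_demo()` are hypothetical names, and passing a small integer status through `void *` via `intptr_t` is a common but implementation-defined idiom:

```c
#include <pthread.h>
#include <stdint.h>

/* Called from inside the thread: pthread_exit() ends the calling
   thread immediately and makes `code` its exit status. */
static void finish(intptr_t code)
{
    pthread_exit((void *)code);
}

static void *worker(void *arg)
{
    finish((intptr_t)arg);   /* never returns */
    return NULL;             /* not reached */
}

/* Hypothetical demo: the thread exits with status `code`; the caller
   recovers it through pthread_join(). Returns -1 if creation fails. */
long exit_status_demo(long code)
{
    pthread_t t;
    void *status;

    if (pthread_create(&t, NULL, worker, (void *)(intptr_t)code) != 0)
        return -1;
    pthread_join(t, &status);
    return (long)(intptr_t)status;
}
```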

- Thread join

- One thread can wait for another thread to terminate by calling:

`int pthread_join(pthread_t thread, void **status_ptr);`

- The exit status of the terminated thread is stored in *status_ptr (pass NULL if the status is not needed).
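A typical use of join is to split work among threads and combine the partial results once every worker has finished, for example summing a matrix one row per thread. A minimal sketch (compile with `-pthread`); the 4×4 matrix filled with 1..16 and the names `sum_row()` and `matrix_sum()` are hypothetical:

```c
#include <pthread.h>

#define ROWS 4
#define COLS 4

/* Hypothetical data: each worker sums one row and writes only its own
   slot of row_sum[], so no two threads modify the same variable. */
static int matrix[ROWS][COLS];
static int row_sum[ROWS];

static void *sum_row(void *arg)
{
    int r = *(int *)arg;
    int s = 0;
    for (int j = 0; j < COLS; j++)
        s += matrix[r][j];
    row_sum[r] = s;
    return NULL;
}

/* Fills the matrix with 1..ROWS*COLS, sums each row in its own thread,
   and adds up the row sums after joining each thread. */
int matrix_sum(void)
{
    pthread_t t[ROWS];
    int ids[ROWS], started[ROWS];
    int total = 0;

    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            matrix[i][j] = i * COLS + j + 1;

    for (int i = 0; i < ROWS; i++) {
        ids[i] = i;
        started[i] = pthread_create(&t[i], NULL, sum_row, &ids[i]) == 0;
        if (!started[i])
            sum_row(&ids[i]);          /* fallback: sum the row here */
    }
    for (int i = 0; i < ROWS; i++) {
        if (started[i])
            pthread_join(t[i], NULL);  /* row_sum[i] is final after this */
        total += row_sum[i];
    }
    return total;
}
```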


Thread synchronization

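- When multiple threads try to modify the same shared variable or data structure and the final result depends on the order in which the threads execute, the situation is called a race condition. For example, counter++ is a load, an add, and a store, so two threads can both load the same old value and one of the increments is lost.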

Mutex

- In Pthreads, locks are called mutexes, short for mutual exclusion.

- Mutexes are declared with the pthread_mutex_t type and must be initialized before use.

- There are two ways to initialize a mutex:

- Static method: pthread_mutex_t m = PTHREAD_MUTEX_INITIALIZER; defines a mutex m and initializes it with the default attributes.

- Dynamic method: call the pthread_mutex_init() function, which can also set mutex attributes through its attr parameter.


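- Threads protect shared data objects with mutexes: a thread locks the mutex with pthread_mutex_lock() before accessing the shared data and unlocks it with pthread_mutex_unlock() afterwards, so only one thread at a time can be inside the critical section.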
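A shared counter is the smallest case where the mutex matters. A minimal sketch (compile with `-pthread`), using the static initializer; `NTHREADS`, `add_many()`, and `locked_count()` are hypothetical names. Because every increment happens while holding the lock, the result is always `NTHREADS * times`.

```c
#include <pthread.h>

#define NTHREADS 4

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;  /* static init */
static long counter = 0;                                 /* shared data */

static void *add_many(void *arg)
{
    long times = *(long *)arg;
    for (long i = 0; i < times; i++) {
        pthread_mutex_lock(&lock);    /* enter critical section */
        counter++;                    /* load/add/store cannot interleave */
        pthread_mutex_unlock(&lock);  /* leave critical section */
    }
    return NULL;
}

/* Hypothetical demo: NTHREADS threads each add `times` to counter. */
long locked_count(long times)
{
    pthread_t t[NTHREADS];
    int started[NTHREADS];

    counter = 0;                      /* no other threads exist yet */
    for (int i = 0; i < NTHREADS; i++) {
        started[i] = pthread_create(&t[i], NULL, add_many, &times) == 0;
        if (!started[i])
            add_many(&times);         /* fallback: do the work inline */
    }
    for (int i = 0; i < NTHREADS; i++)
        if (started[i])
            pthread_join(t[i], NULL);
    return counter;
}
```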


Deadlock prevention

- A deadlock is a state in which several execution entities wait for one another, so that none of them can proceed.

- Deadlock prevention tries to rule out deadlocks while the parallel algorithm is being designed.

- A simple prevention method is to order the mutexes and make every thread request them in the same direction only (for example, always in increasing order), so that the request sequences can never form a cycle.
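The ordering rule can be sketched with two hypothetical bank accounts, each protected by its own mutex, with the mutexes ordered by array index (compile with `-pthread`). The names `acct_lock`, `balance`, `transfer()`, and `ordered_transfer_demo()` are illustrative assumptions. Two threads transfer money in opposite directions at the same time, yet both always lock the lower-indexed mutex first.

```c
#include <pthread.h>

#define NACCOUNTS 2

static pthread_mutex_t acct_lock[NACCOUNTS] = {
    PTHREAD_MUTEX_INITIALIZER, PTHREAD_MUTEX_INITIALIZER
};
static long balance[NACCOUNTS] = {1000, 1000};

/* Move amount from account `from` to account `to` (from != to).
   Locks are always taken lower index first, whatever the direction. */
static void transfer(int from, int to, long amount)
{
    int first = from < to ? from : to;
    int second = from < to ? to : from;

    pthread_mutex_lock(&acct_lock[first]);
    pthread_mutex_lock(&acct_lock[second]);
    balance[from] -= amount;
    balance[to] += amount;
    pthread_mutex_unlock(&acct_lock[second]);
    pthread_mutex_unlock(&acct_lock[first]);
}

static void *zero_to_one(void *arg)
{
    (void)arg;
    for (int i = 0; i < 10000; i++)
        transfer(0, 1, 1);
    return NULL;
}

static void *one_to_zero(void *arg)
{
    (void)arg;
    for (int i = 0; i < 10000; i++)
        transfer(1, 0, 1);
    return NULL;
}

/* Runs opposite transfers concurrently; returns the total balance
   (unchanged at 2000), or -1 if a thread cannot be created. */
long ordered_transfer_demo(void)
{
    pthread_t t1, t2;

    if (pthread_create(&t1, NULL, zero_to_one, NULL) != 0)
        return -1;
    if (pthread_create(&t2, NULL, one_to_zero, NULL) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return balance[0] + balance[1];
}
```

If one thread instead locked 0 then 1 while the other locked 1 then 0, each could end up holding one mutex and waiting forever for the other.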


Condition variable

- Condition variables provide a way for threads to cooperate.

- In Pthreads, condition variables are declared with the type pthread_cond_t and must be initialized before use.

- Like mutexes, condition variables can be initialized in two ways.

- Static method: pthread_cond_t con = PTHREAD_COND_INITIALIZER; defines a condition variable con and initializes it with the default attributes.

- Dynamic method: the pthread_cond_init() function, which can set the attributes of the condition variable through its attr parameter. For simplicity, we always pass NULL as attr to get the default attributes.
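A condition variable is always used together with a mutex and a predicate (a shared flag) that the mutex protects. A minimal sketch (compile with `-pthread`); `ready`, `data`, `producer()`, and `wait_for_value()` are hypothetical names. The waiter rechecks the predicate in a loop, because `pthread_cond_wait()` may wake up spuriously and the signal may even arrive before the waiter starts waiting.

```c
#include <pthread.h>

static pthread_mutex_t m = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t ready_cv = PTHREAD_COND_INITIALIZER;  /* static init */
static int ready = 0;      /* the predicate, protected by m */
static int data = 0;       /* payload handed from producer to waiter */

static void *producer(void *arg)
{
    pthread_mutex_lock(&m);
    data = *(int *)arg;
    ready = 1;
    pthread_cond_signal(&ready_cv);   /* wake a waiter, if any */
    pthread_mutex_unlock(&m);
    return NULL;
}

/* Hypothetical demo: the calling thread waits until a producer thread
   has published `value`, then returns what it received (-1 on error). */
int wait_for_value(int value)
{
    pthread_t t;
    int got;

    ready = 0;                             /* producer not started yet */
    if (pthread_create(&t, NULL, producer, &value) != 0)
        return -1;

    pthread_mutex_lock(&m);
    while (!ready)                         /* recheck after every wakeup */
        pthread_cond_wait(&ready_cv, &m);  /* atomically unlocks m and sleeps */
    got = data;
    pthread_mutex_unlock(&m);

    pthread_join(t, NULL);
    return got;
}
```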


Semaphore

- Semaphores are a general mechanism for process synchronization.

- A semaphore is a data structure:

```c
struct sem {
    int value;              // semaphore value
    struct process *queue;  // queue of processes blocked on the semaphore
} s;
```
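In user-level C, POSIX offers unnamed semaphores (`sem_t` with `sem_init()`, `sem_wait()` as P, and `sem_post()` as V). The sketch below, assuming Linux (unnamed semaphores are not supported on macOS) and compiling with `-pthread`, solves a bounded-buffer producer-consumer problem with one hypothetical producer thread and the calling thread as the consumer; `BUFSIZE`, `NITEMS`, `produce()`, and `semaphore_pc_demo()` are illustrative names. With a single thread on each side, no extra mutex is needed because each buffer index is advanced by one thread only.

```c
#include <pthread.h>
#include <semaphore.h>

#define BUFSIZE 4
#define NITEMS 100

static int buf[BUFSIZE];
static sem_t empty_slots;   /* counts free slots, starts at BUFSIZE */
static sem_t full_slots;    /* counts filled slots, starts at 0 */

static void *produce(void *arg)
{
    (void)arg;
    int in = 0;
    for (int i = 1; i <= NITEMS; i++) {
        sem_wait(&empty_slots);       /* P: wait for a free slot */
        buf[in] = i;
        in = (in + 1) % BUFSIZE;
        sem_post(&full_slots);        /* V: one more item available */
    }
    return NULL;
}

/* Hypothetical demo: returns the sum of all consumed items
   (1 + 2 + ... + NITEMS), or -1 on error. */
long semaphore_pc_demo(void)
{
    pthread_t t;
    long sum = 0;
    int out = 0;

    if (sem_init(&empty_slots, 0, BUFSIZE) != 0 ||
        sem_init(&full_slots, 0, 0) != 0)
        return -1;
    if (pthread_create(&t, NULL, produce, NULL) != 0)
        return -1;
    for (int i = 0; i < NITEMS; i++) {
        sem_wait(&full_slots);        /* P: wait for an item */
        sum += buf[out];
        out = (out + 1) % BUFSIZE;
        sem_post(&empty_slots);       /* V: slot is free again */
    }
    pthread_join(t, NULL);
    sem_destroy(&empty_slots);
    sem_destroy(&full_slots);
    return sum;
}
```

The semaphore counters themselves remember how many posts have happened, so no separate predicate variable, mutex, and wait loop are needed as they are with a condition variable.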

Problems and Solutions

- The difference and relation between parallelism and concurrency

- The essence of concurrency is that one physical CPU (or several physical CPUs) is multiplexed among several programs;

- Every concurrent process goes through at least three steps: queuing, being woken up, and executing;

- Concurrency refers to the ability to handle multiple simultaneous activities;

- Concurrency forces multiple users to share limited physical resources in order to improve efficiency;

- Parallelism means that two or more events or activities occur at the same instant. In a multiprogramming environment, parallelism lets multiple programs execute simultaneously on different CPUs.

- Parallelism therefore implies concurrency: parallel events are concurrent events that really do occur at the same time. Concurrency does not imply parallelism, because concurrent events do not necessarily occur at the same time.


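Practice

The program below sorts a[] concurrently: each Qsort thread partitions its range and then creates two threads to sort the left and right subranges.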

```c
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

typedef struct {
    int upperbound;
    int lowerbound;
} PARM;

#define N 10
int a[N] = {5, 1, 6, 4, 7, 2, 9, 8, 0, 3};  // unsorted data

void print(void)  // print current a[] contents
{
    int i;
    printf("[");
    for (i = 0; i < N; i++)
        printf("%d ", a[i]);
    printf("]\n");
}

void *Qsort(void *aptr)
{
    PARM *ap, aleft, aright;
    int pivot, pivotIndex, left, right, temp;
    int upperbound, lowerbound;
    pthread_t me, leftThread, rightThread;

    me = pthread_self();
    ap = (PARM *)aptr;
    upperbound = ap->upperbound;
    lowerbound = ap->lowerbound;
    // 0 or 1 element: nothing to sort. Check before reading a[upperbound],
    // because upperbound can be -1 for an empty left subrange.
    if (lowerbound >= upperbound)
        pthread_exit(NULL);

    pivot = a[upperbound];   // pick the last (high) element as pivot
    left = lowerbound - 1;   // scan index from left side
    right = upperbound;      // scan index from right side
    while (left < right) {   // partition loop
        do { left++; } while (a[left] < pivot);  // stops at the pivot at the latest
        do { right--; } while (right > lowerbound && a[right] > pivot);  // stay in range
        if (left < right) {
            temp = a[left]; a[left] = a[right]; a[right] = temp;
        }
    }
    print();  // trace only: other threads may be changing other parts of a[] meanwhile

    pivotIndex = left;       // put pivot back
    temp = a[pivotIndex];
    a[pivotIndex] = pivot;
    a[upperbound] = temp;

    // start the "recursive threads" on the two disjoint subranges
    aleft.upperbound = pivotIndex - 1;
    aleft.lowerbound = lowerbound;
    aright.upperbound = upperbound;
    aright.lowerbound = pivotIndex + 1;
    printf("%lu: create left and right threads\n", (unsigned long)me);
    pthread_create(&leftThread, NULL, Qsort, (void *)&aleft);
    pthread_create(&rightThread, NULL, Qsort, (void *)&aright);

    // wait for left and right threads to finish; aleft and aright live
    // on this thread's stack, so it must not return before joining
    pthread_join(leftThread, NULL);
    pthread_join(rightThread, NULL);
    printf("%lu: joined with left & right threads\n", (unsigned long)me);
    return NULL;
}

int main(void)
{
    PARM arg;
    pthread_t me, thread;

    me = pthread_self();
    printf("main %lu: unsorted array = ", (unsigned long)me);
    print();
    arg.upperbound = N - 1;
    arg.lowerbound = 0;
    printf("main %lu create a thread to do QS\n", (unsigned long)me);
    pthread_create(&thread, NULL, Qsort, (void *)&arg);
    pthread_join(thread, NULL);  // wait for QS thread to finish
    printf("main %lu sorted array = ", (unsigned long)me);
    print();
    return 0;
}
```

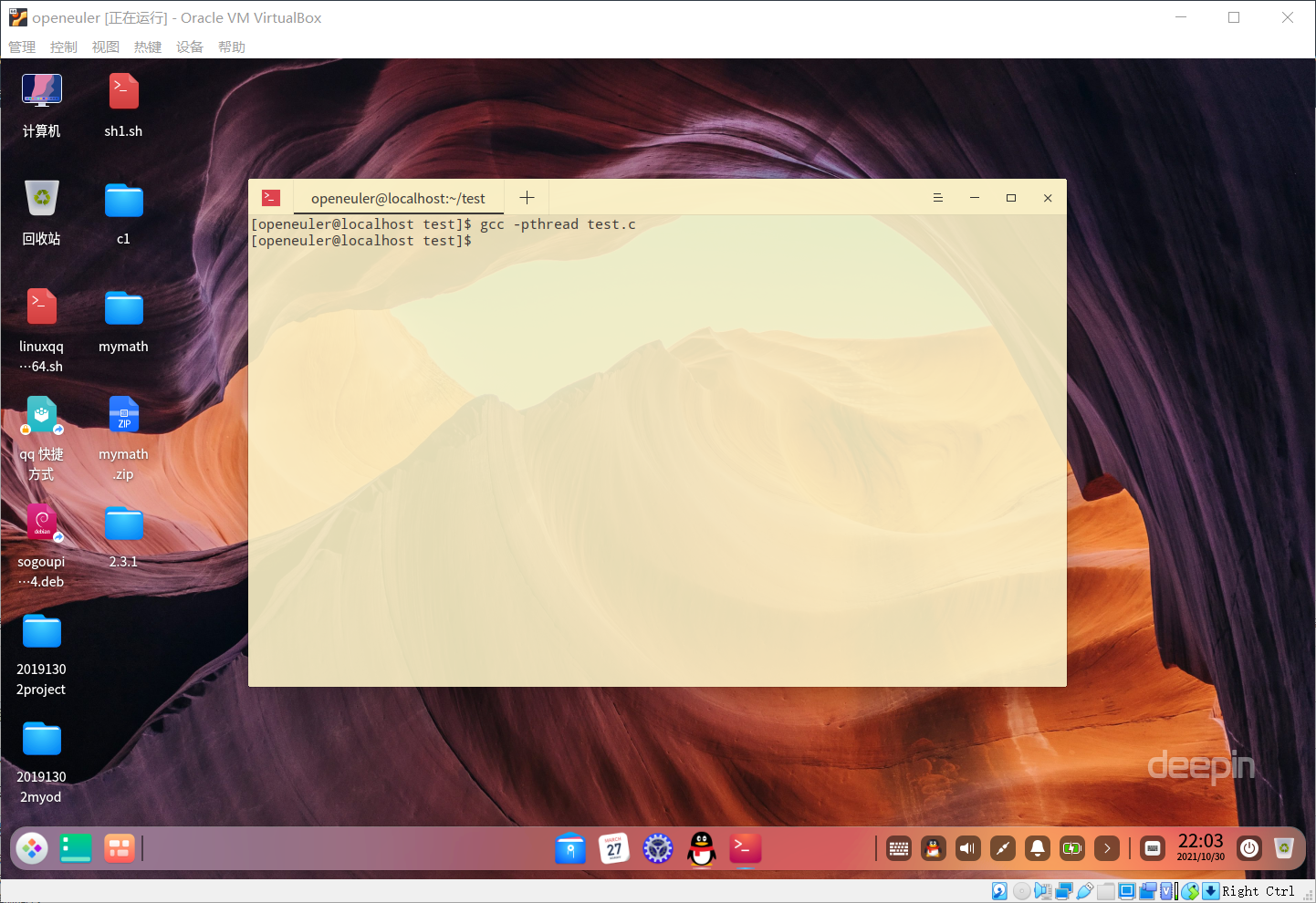

- Operation results