Chapter 1 Advantages of Clusters

Compute-intensive applications of national importance, such as weather forecasting and nuclear test simulation, demand very large amounts of computing power. With current technology, even a mainframe has limited computing power and can hardly complete such a task alone, because the computation may run for days or even far longer. For such complex workloads, computer cluster technology is used: tens, hundreds, or even tens of thousands of computers are pooled together to perform the computation.

Chapter 2 Common Classification of Clusters

- Load balancing clusters (LBC, or LB for short)

- High-availability clusters (HAC for short)

- High-performance computing clusters (HPC for short)

- Grid computing

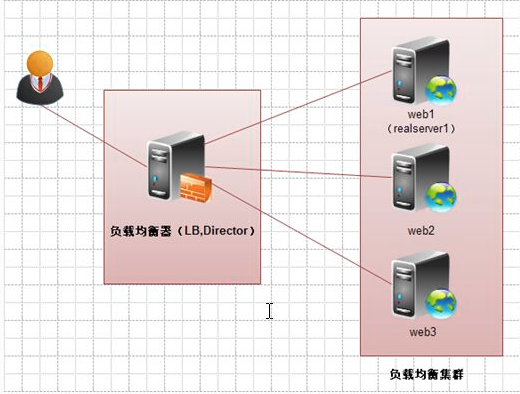

Chapter 3 The Role of Load Balancing Clusters

- Share user access requests and data traffic (load balancing).

- Maintain business continuity, i.e. 24/7 service (high availability).

- They are typically applied to web services and to read-only database replica (slave) servers.

- Typical open source software for load balancing clusters includes LVS, Nginx, and HAProxy.

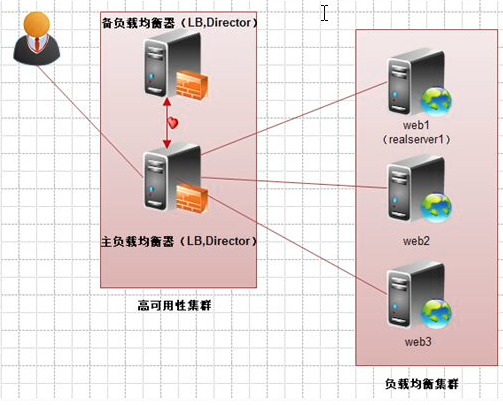

Chapter 4 High Availability Clusters

Open source software commonly used for high availability clusters includes Keepalived and Heartbeat.

Chapter 5 Introduction to Common Software and Hardware in Enterprises

Nginx, LVS, HAProxy, Keepalived, and Heartbeat are the open source cluster software commonly used by Internet enterprises.

Application Layer (http, https)

Transport Layer (tcp)

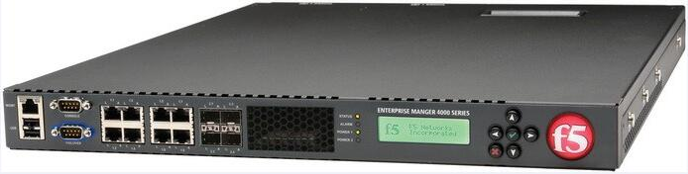

Commercial cluster hardware commonly used by Internet enterprises includes F5, NetScaler, Radware, and A10. Their working mode is comparable to that of HAProxy.

Chapter 6 Selection of Common Software and Hardware in Enterprises

- When the business is critical but in-house technical skills are weak, and the company is willing to pay for products and better service, hardware load balancing products such as F5, NetScaler, and Radware are a good choice. Most such companies are large traditional non-Internet enterprises, such as banks, securities and finance firms, BMW, and Mercedes-Benz.

- Portals such as Taobao, Tencent, and Sina mostly combine software and hardware products to spread the risk of depending on a single product. Companies that have received funding, such as online shopping sites, also tend to buy hardware products.

- Small and medium-sized Internet enterprises, which make little or no profit in their start-up stage, prefer to solve the problem with free open source solutions, so they employ dedicated operations and maintenance staff to maintain them.

Chapter 7 Reverse Proxy and Load Balancing Concepts

Strictly speaking, Nginx only acts as a reverse proxy (Nginx Proxy). Because the visible effect of this reverse proxy function is load balancing, this article calls it Nginx load balancing.

Ordinary load balancing software, such as LVS in DR mode, only forwards the request packet (possibly rewriting it along the way). The distinguishing feature of DR mode is that, from the point of view of the node servers behind the load balancer, the request still comes from the real client that accessed the load balancer. A reverse proxy is different: when it receives a request from a user, it acts on the user's behalf, issues a new request to a node server behind the proxy, and finally returns the data to the client. From the node server's point of view, the client accessing it is the reverse proxy server, not the real website user.

Chapter 8 Component Modules for Load Balancing

| Nginx http function module | Module description |
|---|---|
| ngx_http_proxy_module | The proxy module. It passes requests on to backend server nodes or to an upstream server pool. |
| ngx_http_upstream_module | The load balancing module. It implements load balancing for the website and health checks of the nodes, and defines the pool of web servers. |

Chapter 9 Configuration of nginx web Services

1. Configuration file modification

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

server {

listen 80;

server_name www.etiantian.org;

location / {

root html/www;

index index.html index.htm;

}

access_log logs/access_www.log main;

}

server {

listen 80;

server_name bbs.etiantian.org;

location / {

root html/bbs;

index index.html index.htm;

}

access_log logs/access_bbs.log main;

}

}

2. Create corresponding site directories and home page files

mkdir -p /application/nginx/html/{www,bbs}

for dir in www bbs;do echo "`ifconfig eth0|egrep -o "10.0.0.[0-9]+"` $dir" >/application/nginx/html/$dir/index.html;done

3. Graceful Restart

[root@web02-8 html]# nginx -t

nginx: the configuration file /application/nginx-1.10.2/conf/nginx.conf syntax is ok

nginx: configuration file /application/nginx-1.10.2/conf/nginx.conf test is successful

[root@web02-8 html]# nginx -s reload

4. Load balancing upstream

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream server_pools {

server 10.0.0.7;

server 10.0.0.8;

server 10.0.0.9;

}

server {

listen 80;

server_name www.etiantian.org;

location / {

proxy_pass http://server_pools;

}

}

}

5. weight (server weights)

[root@LB01_5 conf]# vim nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream server_pools {

server 10.0.0.7:80 weight=2;

server 10.0.0.8:80 weight=1;

server 10.0.0.9:80 weight=1;

}

server {

listen 80;

server_name www.etiantian.org;

location / {

proxy_pass http://server_pools;

}

}

}

6. max_fails fail_timeout

upstream server_pools {

server 10.0.0.7:80 weight=4 max_fails=3 fail_timeout=30s;

server 10.0.0.8:80 weight=4 max_fails=3 fail_timeout=30s;

}

max_fails: the maximum number of failed connection attempts. With max_fails=3, Nginx marks the server as unavailable after 3 failed attempts.

fail_timeout: the timeout applied after failed connections. With fail_timeout=30s, a server that has reached max_fails is left out for 30s, after which Nginx tries to connect to it again.

7. backup

upstream server_pools {

server 10.0.0.7:80 weight=4 max_fails=3 fail_timeout=30s;

server 10.0.0.8:80 weight=4 max_fails=3 fail_timeout=30s;

server 10.0.0.9:80 weight=4 max_fails=3 fail_timeout=30s backup;

}

backup: marks a standby server. Once backup is added, the server is not used in normal operation; when the other two servers go down, it is brought into use automatically.

8. server directive parameters in detail

| server parameter | Description |
|---|---|
| server 10.0.0.8:80 | The real server (RS) behind the load balancer; it can be an IP address or a domain name. If no port is written, port 80 is the default. In high-concurrency scenarios the IP can be replaced by a domain name, with DNS doing the load balancing. |
| weight=1 | The server weight; the default is 1. The larger the weight, the larger the share of requests the server receives. |
| max_fails=1 | The number of failed attempts by Nginx to connect to the backend host. This value works together with the three directives proxy_next_upstream, fastcgi_next_upstream, and memcached_next_upstream: when the backend server returns a status code defined by these directives (for example 404, 502, 503), Nginx forwards the request to a backend server that is working normally. The default value of max_fails is 1. In enterprise scenarios 2 to 3 is recommended; JD.com uses 1 and ChinaCache uses 10. Configure it according to business requirements. |
| fail_timeout=10s | The interval before the next check once the number of failures defined by max_fails has been reached; the default is 10s. If max_fails is 5, Nginx checks five times; if all five return 502, it waits 10 seconds (the fail_timeout value) before checking again. After that, as long as the 502 persists and the Nginx configuration is not reloaded, it checks only once every 10 seconds. |
| backup | Hot standby configuration (high availability of RS nodes): the hot standby RS is enabled automatically once all currently active RS have failed. The server acts as a backup, and requests are forwarded to it only when the primary servers are down. Note that backup cannot be combined with the ip_hash scheduling algorithm (and before Nginx 1.3.1/1.2.2, weight could not be either). |
| down | Marks the server as permanently unavailable, which is equivalent to commenting it out. It can be used together with ip_hash. |
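As a rough sketch of how the parameters in the table combine, the upstream block below reuses the pool name and addresses from the earlier examples. The specific values, the choice of which server to mark down, and the standby address 10.0.0.10:8080 are assumptions made only for illustration.

```nginx
upstream server_pools {
    # Receives roughly twice as many requests as 10.0.0.8.
    server 10.0.0.7:80 weight=2 max_fails=3 fail_timeout=30s;
    server 10.0.0.8:80 weight=1 max_fails=3 fail_timeout=30s;

    # Taken out of rotation, e.g. for maintenance (illustrative choice).
    server 10.0.0.9:80 down;

    # Hypothetical standby node; used only when the active servers are unavailable.
    server 10.0.0.10:8080 backup;
}
```

After editing, the configuration can be checked and applied with `nginx -t` and `nginx -s reload`, as in the graceful restart step earlier.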

Chapter 10 Upstream Module Scheduling Algorithms

1. rr, round robin (the default scheduling algorithm; a static scheduling algorithm)

Client requests are assigned one by one, in turn, to the different backend node servers, which is equivalent to the rr algorithm in LVS. If a backend node server goes down (by default Nginx only checks port 80), it is automatically removed from the node server pool so that client access is unaffected, and new requests are assigned to the servers that are still working.

2. wrr, weighted round robin

Weights are added on top of the rr algorithm, giving weighted round robin. With this algorithm, the share of requests a server receives is proportional to its weight: the larger the weight, the more requests are forwarded to it. Weights can be set according to each server's configuration and performance, which effectively solves the problem of distributing requests across new and old servers of uneven performance.

3. least_conn algorithm

The least_conn algorithm allocates requests according to the number of connections on each backend node: a new request goes to the node with the fewest connections.
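A minimal sketch of enabling this algorithm, assuming the same pool name and node addresses as the earlier examples:

```nginx
upstream server_pools {
    # Send each new request to the server with the fewest active connections.
    least_conn;

    server 10.0.0.7:80;
    server 10.0.0.8:80;
    server 10.0.0.9:80;
}
```

The `least_conn` directive takes no arguments and is placed once inside the `upstream` block, before or after the `server` lines.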

4. url_hash algorithm

Similar to ip_hash, except that requests are allocated according to the hash of the requested URL, so each URL is always directed to the same backend server. This is especially effective when the backend servers are cache servers. To use it, add a hash statement to the upstream block; other parameters such as weight cannot then be written in the server statements. hash_method sets the hash algorithm to use.
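The description above matches the `hash` / `hash_method` syntax. As an alternative sketch, the block below uses the `hash` directive that is built into Nginx since version 1.7.2, which is an assumption about the Nginx build in use rather than the exact syntax the text describes. The pool name and addresses are reused from the earlier examples.

```nginx
upstream server_pools {
    # Map each request URI to one backend; "consistent" selects
    # consistent (ketama) hashing so that adding or removing a server
    # remaps only part of the URIs.
    hash $request_uri consistent;

    server 10.0.0.7:80;
    server 10.0.0.8:80;
    server 10.0.0.9:80;
}
```

With cache servers as backends, this keeps each URL on one node, so the same object is not cached separately on every server.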

5. ip_hash (a static scheduling algorithm)

Each request is allocated according to the hash of the client IP. When a new request arrives, a hash value is computed from the client IP; subsequent requests whose client IP hashes to the same value are allocated to the same server. This scheduling algorithm can solve the session sharing problem of dynamic web pages, but it sometimes leads to uneven allocation of requests and cannot guarantee 1:1 load balancing. Most companies in China access the Internet through NAT, so many clients share one external IP and are all allocated to the same node server. The -p parameter of LVS load balancing and the persistence_timeout 50 parameter in the Keepalived configuration are similar to ip_hash in Nginx; they too can solve the session sharing problem of dynamic web pages.
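A minimal sketch of an ip_hash pool, again assuming the pool name and addresses used in the earlier examples:

```nginx
upstream server_pools {
    # Requests from the same client IP are sent to the same backend server.
    ip_hash;

    server 10.0.0.7:80;
    server 10.0.0.8:80;
    # The backup parameter cannot be used in an ip_hash pool.
    server 10.0.0.9:80;
}
```

If one server has to be taken out temporarily, marking it `down` instead of deleting the line preserves the existing mapping of client IPs to the remaining servers.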

Chapter 11 The proxy Module

| http proxy module parameter | Description |
|---|---|
| proxy_set_header | Used when the load balancer sends a request to a backend web server. It modifies the request header, setting HTTP request header fields that are passed to the backend server node. For example, it lets the server node behind the proxy obtain the real IP address of the client user. |
| client_body_buffer_size | Specifies the size of the buffer for the client request body. This is easy to follow once you understand the structure of an HTTP request, as covered in the previous article. |
| proxy_connect_timeout | The timeout for the connection between the reverse proxy and a backend node server, i.e. how long to wait for a response after initiating the handshake. |
| proxy_send_timeout | The timeout for transmitting a request to the backend server. It applies between two successive write operations; if the backend server receives nothing within this time, Nginx closes the connection. |
| proxy_read_timeout | The timeout for Nginx to read the response from the backend server, i.e. how long Nginx waits for the backend's response after the connection has been established successfully. In effect, it is the time a request spends queued at the backend waiting to be processed. |
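The parameters in the table can be sketched together in one server block. This assumes an upstream named server_pools is defined as in the earlier examples; the values shown are illustrative (60s is the Nginx default for all three timeouts, and 128k is an arbitrary buffer size).

```nginx
server {
    listen 80;
    server_name www.etiantian.org;

    location / {
        proxy_pass http://server_pools;

        # Pass the original Host header and the client address to the backend.
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;

        # Buffer size for the client request body (illustrative value).
        client_body_buffer_size 128k;

        # Timeouts toward the backend server (shown at their default values).
        proxy_connect_timeout 60s;
        proxy_send_timeout 60s;
        proxy_read_timeout 60s;
    }
}
```

These directives may also be set at the http or server level, where they apply to every location that does not override them.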

9. proxy_set_header Host

server {

listen 80;

server_name bbs.etiantian.org;

location / {

proxy_pass http://server_pools;

proxy_set_header Host $host;

}

}

10. proxy_set_header X-Forwarded-For

server {

listen 80;

server_name bbs.etiantian.org;

location / {

proxy_pass http://server_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}
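The configuration above overwrites X-Forwarded-For with the address of the directly connected client. If another proxy may sit in front of the load balancer (an assumption; the text does not describe such a setup), a common variant is Nginx's built-in variable `$proxy_add_x_forwarded_for`, which appends `$remote_addr` to any X-Forwarded-For header already present in the request. A minimal sketch, assuming the same server_pools upstream:

```nginx
server {
    listen 80;
    server_name bbs.etiantian.org;

    location / {
        proxy_pass http://server_pools;
        proxy_set_header Host $host;

        # Append the connecting client's address to an existing
        # X-Forwarded-For header instead of replacing it.
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

On the backend nodes, the `"$http_x_forwarded_for"` field in the log_format shown in Chapter 9 then records the forwarded address list.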

[root@LB01_5 conf]# vim nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream server_pools {

server 10.0.0.7:80 weight=4 max_fails=3 fail_timeout=30s;

server 10.0.0.8:80 weight=4 max_fails=3 fail_timeout=30s;

server 10.0.0.9:80 weight=4 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name www.etiantian.org;

location / {

proxy_pass http://server_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

server {

listen 80;

server_name bbs.etiantian.org;

location / {

proxy_pass http://server_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

}

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream upload_pools {

server 10.0.0.8:80;

}

upstream static_pools {

server 10.0.0.7:80;

}

upstream default_pools {

server 10.0.0.9:80;

}

server {

listen 80;

server_name www.etiantian.org;

location /upload {

proxy_pass http://upload_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

location /static {

proxy_pass http://static_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

location / {

proxy_pass http://default_pools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

}