Problem

I came across this line: "If you don't even understand NMS, how can you do detection?" That gave me quite a jolt... I probably understand the idea, but can I actually write the code???

In an object detection network, after the proposals are generated, the classification branch gives each box a confidence for every category, and the regression branch corrects the position of the box. Finally, NMS is used to remove detection boxes that, within the same category, overlap a higher-confidence box with a high IoU and have lower confidence themselves.

The following figure shows the effect of NMS in object detection. Emmm, at least it lets you see the face with far fewer boxes in the way, haha.

Background knowledge

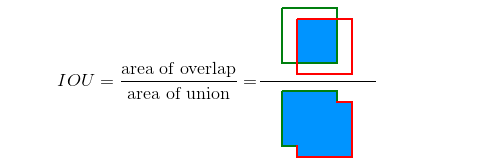

NMS stands for non-maximum suppression: a detection box that is not the maximum is suppressed. Suppressed according to what? In object detection, the criterion is IoU (Intersection over Union), that is, the area of the intersection of two boxes divided by the area of their union. The following figure shows how IoU is calculated for a green detection box and a red detection box:
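In code, the IoU of two boxes can be sketched roughly as follows. This is only an illustration: the helper name `iou` is made up here, boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the `+ 1` assumes inclusive pixel coordinates.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) in inclusive pixel coordinates."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the intersection rectangle, clamped to 0 when the boxes do not overlap
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1) + 1)
    inter_h = max(0, min(ay2, by2) - max(ay1, by1) + 1)
    inter = inter_w * inter_h

    area_a = (ax2 - ax1 + 1) * (ay2 - ay1 + 1)
    area_b = (bx2 - bx1 + 1) * (by2 - by1 + 1)

    # Union = area A + area B - intersection
    return inter / (area_a + area_b - inter)


# Two 10x10 boxes sharing a 5x5 corner: 25 / (100 + 100 - 25)
print(iou((0, 0, 9, 9), (5, 5, 14, 14)))
```

Identical boxes give an IoU of 1, and boxes that do not touch give 0.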

NMS principle and example

Note that NMS operates on one category at a time. For example, suppose a picture contains two kinds of targets to be detected, "face" and "cat", and 10 target boxes are detected before NMS. Each target box is expressed as $[x_1, y_1, x_2, y_2, score_1, score_2]$, where $(x_1, y_1)$ is the upper-left corner of the box, $(x_2, y_2)$ is the lower-right corner of the box, $score_1$ is the confidence of the "face" category, and $score_2$ is the confidence of the "cat" category. When $score_1$ is larger than $score_2$, the box is classified as "face"; otherwise it is classified as "cat". Finally, we assume that 6 of the 10 target boxes are classified as "face".
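To make the box layout concrete, here is a small sketch of splitting detections by category before NMS. The three detections and the label encoding (0 for "face", 1 for "cat") are made up for illustration.

```python
import numpy as np

# Hypothetical detections, one row per box: [x1, y1, x2, y2, score_1, score_2]
dets = np.array([
    [10, 10, 50, 50, 0.90, 0.05],
    [12, 12, 52, 52, 0.30, 0.60],
    [80, 80, 120, 120, 0.20, 0.70],
])

score_face, score_cat = dets[:, 4], dets[:, 5]

# 0 = "face" when score_1 is larger than score_2, otherwise 1 = "cat"
labels = np.where(score_face > score_cat, 0, 1)

# Boxes and confidences that would be passed to NMS for the "face" category
face_mask = labels == 0
face_boxes = dets[face_mask, :4]
face_scores = score_face[face_mask]

print(labels)        # [0 1 1]
print(face_scores)   # [0.9]
```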

Next, we will walk through NMS on the target boxes of the "face" category.

First, sort the six target boxes in descending order of their confidence $score_1$:

| Target box | score_1 |
|---|---|
| A | 0.9 |
| B | 0.85 |
| C | 0.7 |
| D | 0.6 |
| E | 0.4 |
| F | 0.1 |

(1) Take out target box A, which has the highest confidence, and keep it.

(2) Compute the IoU between A and each of the five remaining boxes B-F. Any box whose IoU with A is greater than the preset threshold (generally 0.5) is discarded. Suppose boxes C and F are discarded at this point, so only B, D and E are left in the sequence.

(3) Repeat the process on the remaining boxes: keep the one with the highest confidence (now B), discard the boxes that overlap it too much, and continue until the sorted sequence is empty.
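The steps above can be traced with a few lines of Python. This is only a sketch: the walkthrough gives no coordinates, so the IoU values below are invented. Only "A suppresses C and F" comes from the example; the B-D overlap is an extra assumption so that the second round also discards something.

```python
scores = {"A": 0.9, "B": 0.85, "C": 0.7, "D": 0.6, "E": 0.4, "F": 0.1}

# Hypothetical IoU values for overlapping pairs; any pair not listed is treated as IoU 0
pair_iou = {("A", "C"): 0.8, ("A", "F"): 0.7, ("B", "D"): 0.6}


def lookup_iou(p, q):
    """Look up the assumed IoU of two boxes regardless of argument order."""
    return pair_iou.get((p, q), pair_iou.get((q, p), 0.0))


thresh = 0.5
order = sorted(scores, key=scores.get, reverse=True)  # descending confidence
keep = []
while order:
    best, rest = order[0], order[1:]
    keep.append(best)  # step (1): keep the most confident box
    # step (2): drop every remaining box that overlaps it by more than the threshold
    order = [b for b in rest if lookup_iou(best, b) <= thresh]
    print(f"keep {best}, remaining {order}")

print(keep)  # ['A', 'B', 'E']
```

Under these assumed overlaps, the first round leaves B, D and E as in step (2), the second round keeps B and drops D, and the last round keeps E.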

Code implementation

    import numpy as np

    # bboxes has shape [N, 4] and scores has shape [N] or [N, 1]; both are np.ndarray
    def single_nms(bboxes, scores, thresh=0.5):
        # Corner coordinates of every box
        x1 = bboxes[:, 0]
        y1 = bboxes[:, 1]
        x2 = bboxes[:, 2]
        y2 = bboxes[:, 3]

        # Area of each detection box (the +1 treats coordinates as inclusive pixel indices)
        areas = (x2 - x1 + 1) * (y2 - y1 + 1)

        # Sort by confidence in descending order; order holds the sorted indices.
        # np.argsort sorts in ascending order by default, so the result is reversed.
        # Flatten first: argsort on an [N, 1] array would sort along the length-1 axis.
        order = np.asarray(scores).reshape(-1).argsort()[::-1]

        # Indices of the boxes that are kept
        keep = []

        # order.size is the number of candidate boxes still left
        while order.size > 0:
            # The highest-scoring remaining box is always kept
            i = order[0]
            keep.append(int(i))

            # Upper-left and lower-right corners of the intersection with each other remaining box
            xx1 = np.maximum(x1[i], x1[order[1:]])
            yy1 = np.maximum(y1[i], y1[order[1:]])
            xx2 = np.minimum(x2[i], x2[order[1:]])
            yy2 = np.minimum(y2[i], y2[order[1:]])

            # Area of the intersection, which is 0 when there is no overlap
            w = np.maximum(0.0, xx2 - xx1 + 1)
            h = np.maximum(0.0, yy2 - yy1 + 1)
            inter = w * h

            # IoU = overlapping area / (area 1 + area 2 - overlapping area)
            iou = inter / (areas[i] + areas[order[1:]] - inter)

            # Boxes whose IoU with box i does not exceed the threshold are kept as candidates
            inds = np.where(iou <= thresh)[0]

            # iou was computed over order[1:], so its indices are offset by one relative to order
            order = order[inds + 1]

        # Indices into bboxes; bboxes[keep] gives the final detection boxes
        return keep
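Since NMS works on one category at a time, a detector with several categories has to run it once per category. Below is a rough sketch of that idea under a few assumptions: `nms` is a compact restatement of the greedy procedure, `per_class_nms` is a hypothetical wrapper name, and the boxes, labels (0 for "face", 1 for "cat") and scores are made up.

```python
import numpy as np


def nms(boxes, scores, thresh=0.5):
    """Compact greedy NMS; returns indices into boxes, highest score first."""
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))
        w = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]) + 1)
        h = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]) + 1)
        inter = w * h
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= thresh]  # only boxes that overlap box i weakly stay as candidates
    return keep


def per_class_nms(boxes, labels, scores, thresh=0.5):
    """Run NMS separately per label so boxes of different categories never suppress each other."""
    keep = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)          # positions of this category's boxes
        kept = nms(boxes[idx], scores[idx], thresh)
        keep.extend(idx[kept].tolist())            # map back to indices in the full array
    return sorted(keep)


boxes = np.array([
    [10, 10, 50, 50],      # face, highest score
    [12, 12, 52, 52],      # face, heavily overlaps box 0
    [11, 11, 51, 51],      # cat, overlaps both faces but belongs to another category
    [100, 100, 140, 140],  # face, far away from the others
], dtype=float)
labels = np.array([0, 0, 1, 0])
scores = np.array([0.9, 0.8, 0.7, 0.6])

print(per_class_nms(boxes, labels, scores))  # [0, 2, 3]
print(nms(boxes, scores))                    # [0, 3]
```

Run per category, the cat box survives even though it overlaps the top face box. Run on all boxes at once, it would be suppressed, which is why the split by category matters.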

Reference material

Detailed explanation of NMS algorithm (with PyTorch implementation code)

Non maximum suppression (NMS)