Article directory

- 1. Understand multitask programming

- 2. Multi-process programming

- 2.1 Understanding processes

- 2.2 Creating a subprocess

- 2.2.1 Method 1: fork creates a subprocess

- 2.2.2 Method 2: Process creates a subprocess

- 2.2.3 Method 3: Pool creates a subprocess

- 2.3 Interprocess communication

- 3. Multithreaded programming

- 4. Coroutines

1. Understand multitask programming

What is multitasking?

Multitasking means that the operating system can run multiple tasks at the same time. For example, browsing the web, listening to music, and doing your homework in Word all at once is multitasking: at least three tasks are running at the same time. Many more tasks run quietly in the background without appearing on the desktop.

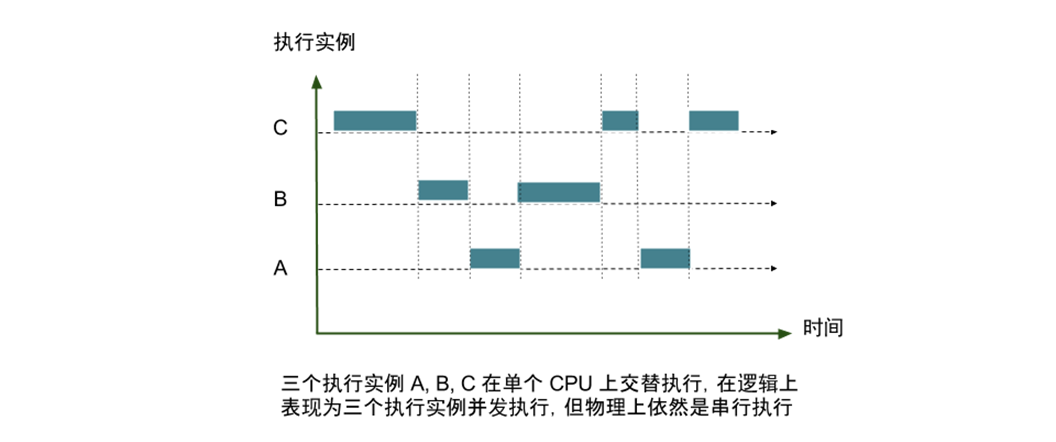

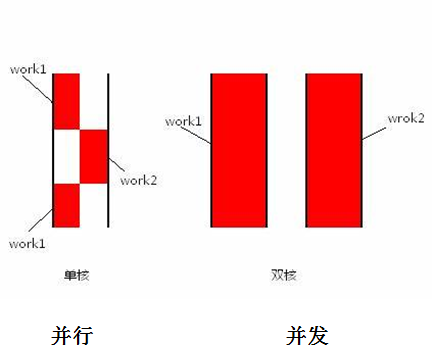

How does a single-core CPU achieve multitasking?

The operating system executes the tasks in turn: task 1 runs for 0.01 seconds, then task 2 runs for 0.01 seconds, then task 3, and so on, over and over. Strictly speaking the tasks run alternately, but the CPU switches so quickly that it feels as if all tasks run at the same time.
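The alternation described above can be sketched as a small round-robin simulation. This is only an illustration under simplifying assumptions: the task names, the required CPU times, and the 10 ms time slice are made up, and a real scheduler also considers priorities and blocking.

```python
from collections import deque


def round_robin(tasks, time_slice):
    """Simulate time slicing on a single core.

    tasks maps a task name to the CPU time it still needs (in milliseconds).
    Returns the order in which the tasks received a time slice.
    """
    queue = deque(tasks.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        schedule.append(name)              # this task runs for one slice
        remaining -= time_slice
        if remaining > 0:
            queue.append((name, remaining))  # not finished: back of the queue
    return schedule


if __name__ == '__main__':
    # Hypothetical workloads, in milliseconds of CPU time
    print(round_robin({"browser": 30, "music": 10, "word": 20}, time_slice=10))
```

Each task gets one slice per turn, so short tasks finish early and long tasks keep returning to the back of the queue.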

How is multitasking implemented on a multi-core CPU?

Truly parallel execution of multiple tasks is only possible on a multi-core CPU. However, because the number of tasks is usually far greater than the number of CPU cores, the operating system still schedules the tasks onto each core in turn.

2. Multi-process programming

2.1 Understanding processes

Program: the finished code while it is not running.

Process: the code while it is running. Besides the code itself, a process also needs a runtime environment (memory, open files, and so on), which is what distinguishes it from a program.

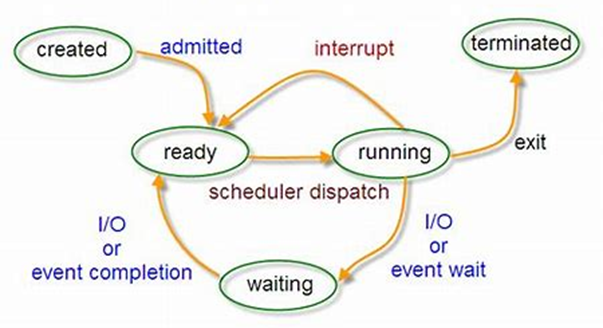

Five states of a process: new, ready, running, blocked (waiting), and terminated.

2.2 Creating a subprocess

2.2.1 Method 1: fork creates a subprocess

Code example:

```python
"""
In multiple processes, each process has its own copy of all data
(including global variables); the copies do not affect each other.
"""
import os

# Define a global variable
money = 100

print("PID of the current process:", os.getpid())
print("PID of the parent of the current process:", os.getppid())

# os.fork() is only available on Unix-like systems
p = os.fork()
if p == 0:
    # In the child process, fork() returns 0
    money = 200
    print("Message from the child process, money=%d" % money)
else:
    # In the parent process, fork() returns the PID of the child process
    print("Created child process %s, the parent process is %d, money=%d"
          % (p, os.getpid(), money))
```

[summary]

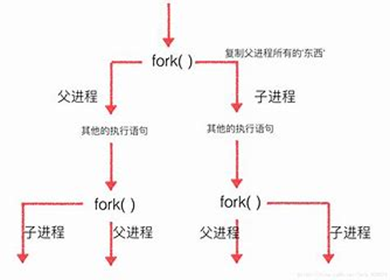

- When execution reaches os.fork(), the operating system creates a new process and copies all of the parent process's data (including global variables) into the child process. After that, the two copies do not affect each other.

- An ordinary function is called once and returns once, but fork() is called once and returns twice: once in the parent process and once in the child process.

- Both the parent process and the child process get a return value from fork(). In the child process the return value is 0; in the parent process it is the PID of the child process (> 0).
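The two return values and the separate copies of the data can be checked with a small sketch. It assumes a Unix-like system (os.fork() does not exist on Windows); the function name fork_once is made up for illustration, and the child reports its private value through its exit status, which only works here because 200 fits in the 0-255 range.

```python
import os


def fork_once(money=100):
    """Fork once; return (child_pid, parent_money, child_money)."""
    pid = os.fork()
    if pid == 0:
        # Child: fork() returned 0. Change the child's private copy.
        money = 200
        os._exit(money)  # report the value as the exit status and stop here
    # Parent: fork() returned the child's PID (> 0).
    _, status = os.waitpid(pid, 0)          # wait for the child to exit
    child_money = os.WEXITSTATUS(status)    # value the child ended up with
    return pid, money, child_money


if __name__ == '__main__':
    child_pid, parent_money, child_money = fork_once()
    print("child pid:", child_pid)
    print("money in parent:", parent_money, "money in child:", child_money)
```

The parent still sees its original value after the child has changed its own copy.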

2.2.2 Method 2: Process creates a subprocess

Windows has no fork call, and Python is cross-platform, so the multiprocessing module is used as the cross-platform way to write multi-process programs. The multiprocessing module provides a Process class to represent a process object.

Use of Process class:

The class is imported with from multiprocessing import Process, and its constructor is Process([group [, target [, name [, args [, kwargs]]]]]):

- target: the callable object that this process instance runs;

- args: the positional arguments of the target, as a tuple;

- kwargs: the keyword arguments of the target, as a dictionary;

- name: the name (alias) of this process instance;

- group: not used in most cases;

Common methods of Process class:

Given my_process = Process(target=my_func):

- my_process.is_alive(): checks whether the process instance is still executing;

- my_process.join([timeout]): waits for the process instance to finish executing, or waits at most timeout seconds;

- my_process.start(): starts the process instance (creates the child process);

- my_process.run(): the method that is executed in the child process after start() is called. By default it calls target; if no target is given, a subclass can override run() instead;

- my_process.terminate(): terminates the process immediately, whether or not its task is finished;

- Creating a child process with Process by instantiating an object, code example:

```python
"""Create child processes by instantiating Process objects."""
from multiprocessing import Process
import time


def task1():
    print("Listening to music......")
    time.sleep(1)


def task2():
    print("Programming now......")
    time.sleep(0.5)


def no_multi():
    # Run the two tasks one after the other
    task1()
    task2()


def use_multi():
    p1 = Process(target=task1)  # Instantiate the process objects
    p2 = Process(target=task2)
    p1.start()
    p2.start()
    # join() blocks the calling (main) process until the child process has
    # finished. The main process therefore waits here for p1 and p2, so the
    # end time is only taken after use_multi() has really completed, which
    # is what the time measurement needs.
    p1.join()
    p2.join()
    # With a list of processes: [process.join() for process in processes]


if __name__ == '__main__':
    # Main process
    start_time = time.time()
    # no_multi()
    use_multi()
    end_time = time.time()
    print(end_time - start_time)
```

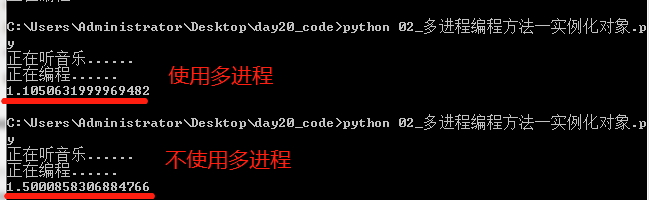

Execution result:

- Creating a child process with Process by subclassing, code example:

```python
"""Create a child process by subclassing Process."""
from multiprocessing import Process
import time


class MyProcess(Process):
    """A custom process class whose parent class is Process."""

    def __init__(self, music_name):
        super(MyProcess, self).__init__()  # Run the parent class's __init__
        self.music_name = music_name

    def run(self):
        """Override run(); its body is the task you want to perform."""
        print("Listening to music %s" % self.music_name)
        time.sleep(1)


# Starting a process: p.start() creates the child process, which calls p.run()
if __name__ == '__main__':
    for i in range(10):
        p = MyProcess("Music%d" % i)
        p.start()  # Executes the body of run() in the new process
```

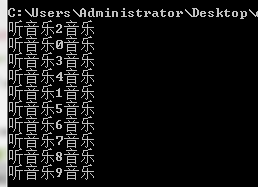

Execution result:

2.2.3 Method 3: Pool creates a subprocess

Why do I need a process pool?

- When there are only a few tasks, you can create the processes directly with Process from multiprocessing; a dozen or so is fine. With hundreds or thousands of tasks, limiting the number of processes by hand becomes too cumbersome, and this is where a process pool is useful.

- Pool provides a specified number of worker processes for the user to call. A request submitted to the pool is executed by an idle worker process; if all processes in the pool are busy, the request waits until one of them becomes free. This makes it easier to match the number of processes to the number of CPU cores and to achieve "real multitasking".

Code example:

```python
from multiprocessing import Pool, Process, cpu_count
import time


def is_prime(num):
    """Return True if num is a prime number."""
    if num < 2:
        return False
    for i in range(2, num):
        if num % i == 0:
            return False
    return True


def task(num):
    if is_prime(num):
        print("%d is a prime number" % num)


def use_multi():
    """One child process per number."""
    ps = []
    # Don't start too many processes: creating a child process costs
    # time and space (memory).
    for num in range(1, 10000):
        # Instantiate the child process object
        p = Process(target=task, args=(num,))
        # Start the child process
        p.start()
        # Store all child process objects
        ps.append(p)
    # Wait for all child processes to finish before the main process
    # goes on to calculate the running time.
    [p.join() for p in ps]


def no_multi():
    """A single process checks every number."""
    for num in range(1, 100000):
        task(num)


def use_pool():
    """Use a process pool."""
    p = Pool(cpu_count())  # cpu_count() was 4 on the author's machine
    p.map(task, list(range(1, 100000)))
    p.close()  # Close the process pool: no more tasks are accepted
    p.join()   # Wait for all worker processes to finish


if __name__ == '__main__':
    start_time = time.time()
    # Running times (seconds) for data sizes 1000-1200, 1-10000, 1-100000:
    # no_multi():  0.0077722072601, 1.7887046337127686, 90.75180315971375
    # use_multi(): 1.806459665298462
    # use_pool():  0.15455389022827148, 1.2682361602783203, 35.63375639915466
    use_pool()
    end_time = time.time()
    print(end_time - start_time)
```

2.3 Interprocess communication

In a multi-process program, each process has its own copy of all data (including global variables), and the copies do not affect each other. To exchange data, processes therefore have to communicate explicitly.

Purposes of process communication:

- Data transmission

- Data sharing

- Event notification

- Resource sharing

- Process control

Modes of process communication: [important]

- Pipe

- Signal

- Message queue

- Semaphore

- Socket
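As an illustration of the message queue mode, here is a minimal sketch that uses multiprocessing.Queue to send results from a child process back to its parent. The function names worker and collect_squares, and the use of None as an end marker, are assumptions made for this example.

```python
from multiprocessing import Process, Queue


def worker(numbers, results):
    """Runs in the child process: send the square of each number back."""
    for n in numbers:
        results.put(n * n)
    results.put(None)  # end marker: tells the parent there is no more data


def collect_squares(numbers):
    """Runs in the parent: start a child and read its results from the queue."""
    results = Queue()
    child = Process(target=worker, args=(numbers, results))
    child.start()
    squares = []
    while True:
        item = results.get()  # blocks until the child has put something
        if item is None:
            break
        squares.append(item)
    child.join()
    return squares


if __name__ == '__main__':
    print(collect_squares([1, 2, 3]))
```

The child cannot simply append to a list owned by the parent, because each process has its own copy of the data; the queue is what carries the values across the process boundary.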

3. Multithreaded programming

3.1 Understanding threads

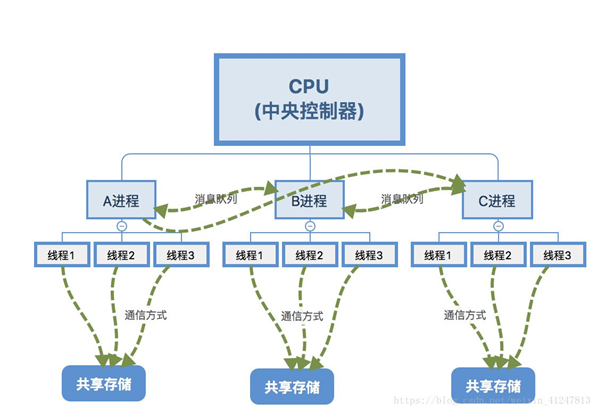

A thread is the smallest unit of execution that the operating system can schedule on the CPU. It is contained in a process and is the unit that actually does the work of the process.

Every process has at least one thread, the main thread. A process can start multiple threads, and the operating system runs these threads much like parallel "processes".

Differences between threads and processes: [important]

- A process is the smallest unit of resource allocation; a thread is the smallest unit of CPU scheduling.

- A program has at least one process, and a process has at least one thread.

- Each process has its own independent address space. Threads share the data of their process and use the same address space.

- Processes have to communicate through interprocess communication (IPC). Communication between threads is more convenient, because threads in the same process share global variables, static variables, and other data. The difficulty lies in handling synchronization and mutual exclusion.
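The shared address space can be seen in a short sketch: every thread below appends to the same module-level list, with no IPC involved. The names shared, record, and run_threads are made up for this example.

```python
import threading

shared = []  # module-level data, visible to every thread in this process


def record(name):
    # Each thread appends to the very same list object
    shared.append(name)


def run_threads(names):
    """Start one thread per name and return what they wrote, sorted."""
    shared.clear()
    threads = [threading.Thread(target=record, args=(n,)) for n in names]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(shared)  # sorted, because the start order is not guaranteed


if __name__ == '__main__':
    print(run_threads(["a", "b", "c"]))
```

With child processes instead of threads, each child would append to its own copy of the list and the parent's list would stay empty.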

3.2 Creating child threads

- Creating a child thread with Thread by instantiating an object, code example:

```python
"""Multithreading by instantiating Thread objects."""
import time
import threading


def task():
    """The task to perform."""
    print("Listening to music........")
    time.sleep(1)


if __name__ == '__main__':
    start_time = time.time()
    threads = []
    for count in range(5):
        t = threading.Thread(target=task)
        # Let the thread start executing the task
        t.start()
        threads.append(t)
    # Wait for all child threads to finish before the main thread continues
    [thread.join() for thread in threads]
    end_time = time.time()
    print(end_time - start_time)
```

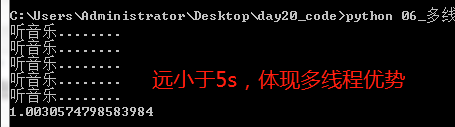

Execution result:

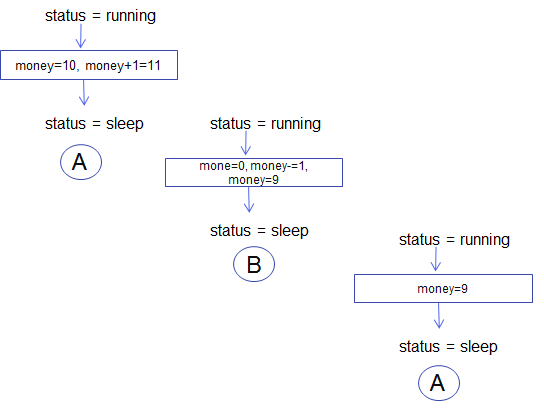

Principle analysis of multithreading:

- The execution order of a multithreaded program is not deterministic.

- When a thread executes a sleep statement, it becomes blocked. When the sleep ends, the thread enters the ready state and waits to be scheduled; the scheduler decides on its own which ready thread runs next.

- The code can only guarantee that each thread runs its complete run function. Neither the start order of the threads nor the order in which the loop iterations inside run are interleaved can be determined.

- Creating a child thread with Thread by subclassing, code example:

```python
"""
Create a thread by subclassing Thread.

Project case: batch host liveness detection based on multithreading

Project description: if you want to find out which addresses or computers
are active on your local network, you can use this script. It pings the
addresses and waits a short time for each reply. A version without threads
can be written with a for loop over the address range and
os.popen("ping -q -c2 " + ip).

Project bottleneck: a solution without threads is very inefficient, because
the script has to wait for every ping in turn.
"""
import os
from threading import Thread


class GetHostAliveThread(Thread):
    """Child thread whose task is to check whether the given IP is alive."""

    def __init__(self, ip):
        super(GetHostAliveThread, self).__init__()
        self.ip = ip

    def run(self):
        # Override run(): check whether the given IP is alive.
        # os.system() returns 0 if the command succeeded and a non-zero
        # value if it failed:
        # >>> os.system('ping -c1 -w1 172.25.254.49 > /dev/null 2>&1')
        # 0
        # >>> os.system('ping -c1 -w1 172.25.254.1 > /dev/null 2>&1')
        # 256
        # Shell command to execute. os.system() runs it with /bin/sh, where
        # the bash-only "&>" redirection is not portable, so the portable
        # form "> /dev/null 2>&1" is used.
        cmd = 'ping -c1 -w1 %s > /dev/null 2>&1' % self.ip
        result = os.system(cmd)
        if result != 0:
            print("Host %s did not respond to ping" % self.ip)


if __name__ == '__main__':
    print("IP addresses not in use on the 172.25.254.0 network segment".center(50, '*'))
    for i in range(1, 255):
        ip = '172.25.254.' + str(i)
        thread = GetHostAliveThread(ip)
        thread.start()
```
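The script above starts one thread per address. A possible variation, sketched here with the standard library's concurrent.futures.ThreadPoolExecutor (which the original script does not use), caps the number of threads. The function find_dead_hosts, its is_alive parameter, and the default of 32 workers are hypothetical; is_alive stands for any callable that returns True when a host answers, for example a wrapper around the ping command.

```python
from concurrent.futures import ThreadPoolExecutor


def find_dead_hosts(ips, is_alive, max_workers=32):
    """Return the addresses for which is_alive(ip) is false.

    is_alive is supplied by the caller; max_workers=32 is an arbitrary limit.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() calls is_alive concurrently and yields results in input order
        results = list(pool.map(is_alive, ips))
    return [ip for ip, alive in zip(ips, results) if not alive]


if __name__ == '__main__':
    # Stand-in checker so that the sketch runs without touching the network
    fake_alive = {'172.25.254.1', '172.25.254.3'}
    ips = ['172.25.254.%d' % i for i in range(1, 5)]
    print(find_dead_hosts(ips, lambda ip: ip in fake_alive))
```

Passing the checker in as a parameter keeps the threading logic separate from the ping command, which also makes it easy to try out without a network.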

3.3 Inter-thread resource management

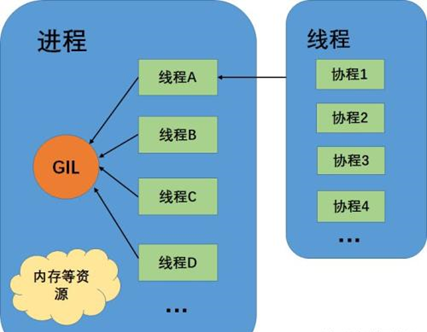

3.3.1 Global interpreter lock (GIL)

Sharing global variables among multiple threads

Advantage: all threads in a process share global variables, so data can be shared among threads without any additional mechanism (in this respect threads are more convenient than processes).

Disadvantage: any thread can change a global variable at will, which may leave shared global variables in an inconsistent state (that is, the code is not thread-safe).

Sharing global variables: how to solve thread insecurity?

GIL (global interpreter lock): only one thread executes in the Python interpreter at any time.

The execution of Python code is controlled by the Python virtual machine (also known as the interpreter main loop; this describes CPython). By design, only one thread executes in the interpreter main loop at any moment. Access to the Python virtual machine is controlled by the global interpreter lock (GIL), which ensures that only one thread is running at a time. The GIL protects the interpreter itself, not your data: a statement such as money += 1 still consists of several steps, and a thread switch can happen between them, so shared variables still need a thread lock.
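To see why the GIL alone does not make an update of a global variable safe, the statement can be disassembled with the standard dis module. This is a minimal sketch; the helper opnames is made up for illustration, and the exact instruction names between the load and the store differ between CPython versions.

```python
import dis

money = 0


def add_one():
    global money
    money += 1


def opnames(func):
    """Return the names of the bytecode instructions of func."""
    return [ins.opname for ins in dis.get_instructions(func)]


if __name__ == '__main__':
    # The list contains a LOAD_GLOBAL, an add instruction, and a STORE_GLOBAL
    print(opnames(add_one))
```

The value is loaded, incremented, and stored in separate instructions. If another thread runs between the load and the store, one of the two updates is lost.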

3.3.2 Thread synchronization -> thread lock

**Thread synchronization:** while one thread is operating on a piece of memory, no other thread may operate on that memory address until the first thread has completed its operation.

Synchronization means coordinating the pace so that operations run in a predetermined order: you finish speaking, then I speak.

The character "同" (tong) in the Chinese word for synchronization is easily read literally as "acting at the same time". Here it actually refers to coordination, assistance, and mutual cooperation.

How to achieve thread synchronization -> thread lock, code example:

```python
from threading import Thread, Lock

money = 0      # Global variable
lock = Lock()  # Create the thread lock


def add():
    global money
    for i in range(1000000):
        lock.acquire()  # Lock
        # money += 1 consists of two parts, the + 1 and the assignment, so
        # the lock ensures that one thread executes the whole statement
        money += 1
        lock.release()  # Unlock


def reduce():
    global money
    for i in range(1000000):
        lock.acquire()
        money -= 1
        lock.release()


if __name__ == '__main__':
    t1 = Thread(target=add)
    t2 = Thread(target=reduce)
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print(money)
```
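The acquire()/release() pair can also be written with a with statement, which releases the lock even if the protected code raises an exception. A minimal sketch of the same counter idea; the names counter, counter_lock, change, and run are chosen for this example.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def change(delta, times):
    global counter
    for _ in range(times):
        with counter_lock:  # acquired here, released when the block exits
            counter += delta


def run(times=100000):
    """Run one adding and one subtracting thread; return the final counter."""
    global counter
    counter = 0
    t1 = threading.Thread(target=change, args=(1, times))
    t2 = threading.Thread(target=change, args=(-1, times))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return counter


if __name__ == '__main__':
    print(run())
```

Because every update happens while the lock is held, the additions and subtractions cancel out exactly.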

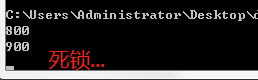

3.3.3 Deadlock

When threads share multiple resources, a deadlock occurs if two threads each hold part of the resources and wait for the other's resources at the same time.

Code example:

```python
"""
When threads share multiple resources, a deadlock occurs if two threads each
hold part of the resources and wait for the other's at the same time.
"""
import time
import threading


class Account(object):
    def __init__(self, id, money, lock):
        self.id = id
        self.money = money
        self.lock = lock

    def reduce(self, money):
        self.money -= money

    def add(self, money):
        self.money += money


def transfer(_from, to, money):
    if _from.lock.acquire():   # Lock the source account
        _from.reduce(money)
        time.sleep(1)
        if to.lock.acquire():  # Blocks if the other thread holds this lock
            to.add(money)
            to.lock.release()
        _from.lock.release()


if __name__ == '__main__':
    a = Account('a', 1000, threading.Lock())  # intended final balance: 900
    b = Account('b', 1000, threading.Lock())  # intended final balance: 1100
    t1 = threading.Thread(target=transfer, args=(a, b, 200))
    t2 = threading.Thread(target=transfer, args=(b, a, 100))
    t1.start()
    t2.start()
    print(a.money)
    print(b.money)
```

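Execution result: each thread takes the lock of its own source account and then waits forever for the lock held by the other thread, so neither transfer completes and the program never exits.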
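One common way to avoid this kind of deadlock is to make every thread acquire the locks in the same global order. The sketch below is a variation on the transfer example rather than the original code: ordering the accounts by their id is an assumption made for this illustration, and it requires the ids to be unique and comparable.

```python
import threading


class Account:
    def __init__(self, id, money):
        self.id = id
        self.money = money
        self.lock = threading.Lock()


def transfer(_from, to, money):
    # Always lock the account with the smaller id first, so that all threads
    # acquire the two locks in the same order and cannot wait on each other.
    first, second = sorted((_from, to), key=lambda acc: acc.id)
    with first.lock:
        with second.lock:
            _from.money -= money
            to.money += money


def run_transfers():
    """Run the two opposite transfers and return the final balances."""
    a = Account('a', 1000)
    b = Account('b', 1000)
    t1 = threading.Thread(target=transfer, args=(a, b, 200))
    t2 = threading.Thread(target=transfer, args=(b, a, 100))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return a.money, b.money


if __name__ == '__main__':
    print(run_transfers())
```

Both threads now ask for the lock of account a first, so one of them simply waits until the other has finished its transfer.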

4. Coroutines

4.1 Understanding coroutines

A coroutine, also known as a micro-thread or fiber, is an execution unit smaller than a thread, with its own CPU context. It looks like a subroutine, but during execution it can be interrupted inside the subroutine, switch to executing other subroutines, and return to continue at an appropriate time.

Relationships among processes, threads, and coroutines:

Advantages of coroutines:

- Execution efficiency is very high, because switching between subroutines (functions) is not a thread switch; it is controlled by the program itself.

- There is no thread-switching overhead. Compared with multithreading, the more threads there would be, the more obvious the performance advantage of coroutines.

- No multi-threaded locking mechanism is needed. Because there is only one thread, there are no conflicting simultaneous writes to variables, and shared resources can be controlled without locks, so execution efficiency is much higher.
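These points can be illustrated with a minimal sketch that uses asyncio, the standard library's coroutine framework. Note that asyncio is not used elsewhere in this article, and the names worker, main, and run are made up for this example. Two coroutines take turns inside a single thread, and each switch happens only where the code explicitly awaits.

```python
import asyncio
import threading


async def worker(name, steps, log):
    for i in range(steps):
        # Record which coroutine ran and in which thread
        log.append((name, i, threading.current_thread().name))
        await asyncio.sleep(0)  # voluntarily hand control to the event loop


async def main():
    log = []
    await asyncio.gather(worker("A", 2, log), worker("B", 2, log))
    return log


def run():
    return asyncio.run(main())


if __name__ == '__main__':
    for entry in run():
        print(entry)
```

Both coroutines append to the same list without a lock, because only one of them runs at any moment and control changes hands only at the await.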

4.2 Implementing coroutines

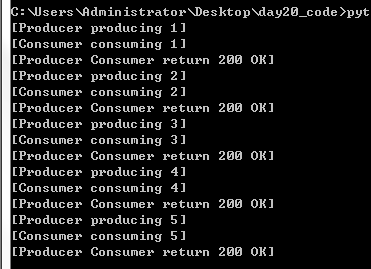

Method 1: implementation with yield (producer-consumer model)

Code example:

```python
import time


def consumer():
    r = ''
    while True:
        # yield hands r to the producer and waits for the next value sent in
        n = yield r
        if not n:
            return
        print("[Consumer consuming %s]" % n)
        time.sleep(1)
        r = '200 OK'


def producer(c):
    next(c)  # Start the generator: run it up to the first yield
    n = 0
    while n < 5:
        n += 1
        print("[Producer producing %s]" % n)
        r = c.send(n)  # Switch to the consumer and receive its reply
        print("[Producer Consumer return %s]" % r)
    c.close()


if __name__ == '__main__':
    c = consumer()
    producer(c)
```

Execution result:

Method 2: implementation with gevent

Code example:

```python
from gevent import monkey

# Patch the standard library before importing modules that use it (requests)
monkey.patch_all()

import json

import gevent
import requests
from sqlalchemy import create_engine, Column, Integer, String
from sqlalchemy.ext.declarative import declarative_base
from sqlalchemy.orm import sessionmaker


def task(ip):
    """Get the city and country of the given IP and store them in the database."""
    # Get the content returned by the URL
    url = 'http://ip-api.com/json/%s' % ip
    try:
        response = requests.get(url)
    except Exception as e:
        print("Page get error:", e)
    else:
        # A JSON string is returned by default, for example:
        """ {"as":"AS174 Cogent Communications","city":"Beijing","country":"China","countryCode":"CN","isp":"China Unicom Shandong Province network","lat":39.9042,"lon":116.407,"org":"NanJing XinFeng Information Technologies, Inc.","query":"114.114.114.114","region":"BJ","regionName":"Beijing","status":"success","timezone":"Asia/Shanghai","zip":""} """
        contentPage = response.text
        # Convert the JSON string of the page into a dictionary that is easy to handle
        data_dict = json.loads(contentPage)
        # Get the corresponding city and country
        city = data_dict.get('city', 'null')  # None
        country = data_dict.get('country', 'null')
        print(ip, city, country)
        # Store the IP information in the ips database table
        ipObj = IP(ip=ip, city=city, country=country)
        session.add(ipObj)
        session.commit()


if __name__ == '__main__':
    # Note: the encoding argument was deprecated in SQLAlchemy 1.4;
    # remove it if your SQLAlchemy version rejects it.
    engine = create_engine("mysql+pymysql://root:westos@172.25.254.123/pymysql",
                           encoding='utf8',
                           # echo=True
                           )
    # Create the session (cache) object
    Session = sessionmaker(bind=engine)
    session = Session()

    # Declarative base class
    Base = declarative_base()

    class IP(Base):
        __tablename__ = 'ips'
        id = Column(Integer, primary_key=True, autoincrement=True)
        ip = Column(String(20), nullable=False)
        city = Column(String(30))
        country = Column(String(30))

        def __repr__(self):
            return self.ip

    # Create the data table
    Base.metadata.create_all(engine)

    # gevent implementation: one greenlet per IP address
    gevents = [gevent.spawn(task, '1.1.1.' + str(ip + 1)) for ip in range(10)]
    gevent.joinall(gevents)
    print("end of execution....")
```