1. Deploy highly available kube-controller-manager

1.1 Introduction to highly available kube-controller-manager

In this experiment, a three-instance kube-controller-manager cluster is deployed. After startup, the instances compete in a leader election and one of them becomes the leader; the other instances stay on standby (blocked). When the leader becomes unavailable, the standby instances elect a new leader, which keeps the service available.

To secure communication, this document generates an x509 certificate and a private key, which kube-controller-manager uses in two cases:

- Communicating with the secure port of kube-apiserver;

- Serving Prometheus metrics on its secure port (HTTPS, 10252).

1.2 Create a kube-controller-manager certificate and private key

```shell
# Create the certificate signing request (CSR) file for kube-controller-manager
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cat > kube-controller-manager-csr.json <<EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "172.24.8.71",
    "172.24.8.72",
    "172.24.8.73"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}
EOF
```

Explanation:

The hosts list contains the IPs of all kube-controller-manager nodes;

Both CN and O are system:kube-controller-manager, so the built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs to function.
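The `hosts` entries become the certificate's subject alternative names, so a master IP missing from the list would make TLS verification against that node's secure port fail. A minimal sketch for checking the CSR file before signing it, assuming `MASTER_IPS` is the bash array set by `/opt/k8s/bin/environment.sh` (as in the loops in this document); the function name `check_csr_hosts` is hypothetical, and the check is a plain text search rather than a JSON parse:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every IP given as an argument that does not
# appear in the CSR JSON file. Returns non-zero if any IP is missing.
# Crude check: it looks for the IP as a quoted string anywhere in the file.
check_csr_hosts() {
  local csr_file="$1"; shift
  local missing=0 ip
  for ip in "$@"; do
    # Matching the surrounding quotes keeps 172.24.8.7 from matching 172.24.8.71.
    if ! grep -qF "\"${ip}\"" "$csr_file"; then
      echo "missing from hosts: ${ip}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage, from `/opt/k8s/work` after sourcing `environment.sh`: `check_csr_hosts kube-controller-manager-csr.json "${MASTER_IPS[@]}" && echo "all master IPs present"`.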

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
# Generates the private key (kube-controller-manager-key.pem) and the certificate (kube-controller-manager.pem), signed by the cluster CA
```
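`cfssljson -bare kube-controller-manager` writes `kube-controller-manager.pem`, `kube-controller-manager-key.pem` and `kube-controller-manager.csr`, and the next step copies the `.pem` files to every master. A small sketch that confirms all three files exist and are non-empty before distributing them; `check_bare_outputs` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that `cfssljson -bare <name>` produced the
# certificate, private key and CSR files in the current directory.
check_bare_outputs() {
  local name="$1" f rc=0
  for f in "${name}.pem" "${name}-key.pem" "${name}.csr"; do
    # -s is true only for files that exist and have a non-zero size.
    if [[ ! -s "$f" ]]; then
      echo "missing or empty: $f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_bare_outputs kube-controller-manager` in `/opt/k8s/work`. Where the `openssl` CLI is available, `openssl x509 -in kube-controller-manager.pem -noout -text` also shows the subject (CN and O) and the subject alternative names.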

1.3 Distribute the certificate and private key

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager*.pem root@${master_ip}:/etc/kubernetes/cert/
done
```

1.4 Create and distribute kubeconfig

kube-controller-manager accesses kube-apiserver through a kubeconfig file, which provides the apiserver address, the embedded CA certificate, and the kube-controller-manager client certificate:

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.kubeconfig root@${master_ip}:/etc/kubernetes/
done
```
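Before distributing the kubeconfig, it can help to confirm that it points at `${KUBE_APISERVER}`, that its current context is `system:kube-controller-manager`, and that the client certificate was embedded. A rough sketch, assuming the file was written by the `kubectl config` commands above and holds a single cluster entry; `check_kubeconfig` is hypothetical and reads the YAML line by line rather than parsing it:

```shell
#!/usr/bin/env bash
# Hypothetical helper: check server URL, current context and embedded client
# certificate in a kubeconfig written by `kubectl config set-*`.
# Line-based: assumes one "server:" entry and kubectl's default YAML layout.
check_kubeconfig() {
  local kubeconfig="$1" want_server="$2" want_context="$3"
  local server context
  # awk splits on whitespace, so indented "server: URL" still gives $1 == "server:".
  server=$(awk '$1 == "server:" {print $2; exit}' "$kubeconfig")
  context=$(awk '$1 == "current-context:" {print $2; exit}' "$kubeconfig")
  [[ "$server" == "$want_server" ]] || { echo "server is '$server', expected '$want_server'" >&2; return 1; }
  [[ "$context" == "$want_context" ]] || { echo "current-context is '$context', expected '$want_context'" >&2; return 1; }
  # --embed-certs=true stores the certificate inline as client-certificate-data.
  grep -q 'client-certificate-data:' "$kubeconfig" || { echo "client certificate not embedded" >&2; return 1; }
}
```

Usage: `check_kubeconfig kube-controller-manager.kubeconfig "${KUBE_APISERVER}" system:kube-controller-manager`. `kubectl config view --kubeconfig=kube-controller-manager.kubeconfig` shows the same settings with the embedded certificate data omitted.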

1.5 Create the systemd unit file for kube-controller-manager

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > kube-controller-manager.service.template <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \\
  --profiling \\
  --cluster-name=kubernetes \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --kube-api-qps=1000 \\
  --kube-api-burst=2000 \\
  --leader-elect \\
  --use-service-account-credentials \\
  --concurrent-service-syncs=2 \\
  --bind-address=##MASTER_IP## \\
  --secure-port=10252 \\
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\
  --port=0 \\
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --experimental-cluster-signing-duration=8760h \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --concurrent-deployment-syncs=10 \\
  --concurrent-gc-syncs=30 \\
  --node-cidr-mask-size=24 \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --pod-eviction-timeout=6m \\
  --terminated-pod-gc-threshold=10000 \\
  --root-ca-file=/etc/kubernetes/cert/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --logtostderr=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```
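Because the heredoc delimiter `EOF` is unquoted, `${K8S_DIR}` and `${SERVICE_CIDR}` are expanded when the template is written, while each `\\` becomes a single `\` line continuation in the unit file. If `environment.sh` was not sourced, those values silently end up empty. A sketch of a sanity check on the generated template; `check_unit_template` is a hypothetical name, and its patterns only cover the cases described in its comments:

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check kube-controller-manager.service.template.
check_unit_template() {
  local tmpl="$1" rc=0
  # A flag with nothing after "=" (optionally followed by the trailing "\")
  # usually means a variable such as SERVICE_CIDR was unset during expansion.
  if grep -nE -e '--[a-z0-9-]+=[[:space:]]*\\?$' "$tmpl" >&2; then
    echo "flags with empty values found (lines printed above)" >&2
    rc=1
  fi
  # An unset K8S_DIR leaves the working directory directly under /.
  if grep -qE '^[[:space:]]*WorkingDirectory=/kube-controller-manager$' "$tmpl"; then
    echo "WorkingDirectory looks wrong: was K8S_DIR set?" >&2
    rc=1
  fi
  # The per-node placeholder must survive until the sed step in 1.6.
  if ! grep -q '##MASTER_IP##' "$tmpl"; then
    echo "##MASTER_IP## placeholder not found" >&2
    rc=1
  fi
  return "$rc"
}
```

Usage: `check_unit_template kube-controller-manager.service.template` in `/opt/k8s/work`.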

1.6 Distribute the systemd unit files

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for (( i=0; i < 3; i++ ))
do
  sed -e "s/##MASTER_NAME##/${MASTER_NAMES[i]}/" -e "s/##MASTER_IP##/${MASTER_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${MASTER_IPS[i]}.service
done    # render one unit file per master, substituting that node's name and IP
[root@k8smaster01 work]# ls kube-controller-manager*.service
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager-${master_ip}.service root@${master_ip}:/etc/systemd/system/kube-controller-manager.service
done    # distribute each unit file to its master
```
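After the `sed` loop, each rendered file should bind to its own node's IP and contain no leftover `##...##` placeholders. A minimal sketch, run in `/opt/k8s/work` and assuming the `kube-controller-manager-<IP>.service` naming used above; `check_rendered_units` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the per-node unit files rendered from the template.
check_rendered_units() {
  local ip f rc=0
  for ip in "$@"; do
    f="kube-controller-manager-${ip}.service"
    if [[ ! -f "$f" ]]; then
      echo "$f: not found" >&2; rc=1; continue
    fi
    # Any remaining "##" means a placeholder was not substituted.
    if grep -q '##' "$f"; then
      echo "$f: unreplaced placeholder" >&2; rc=1
    fi
    # The trailing space keeps 172.24.8.7 from matching 172.24.8.71.
    if ! grep -qF -- "--bind-address=${ip} " "$f"; then
      echo "$f: bind address is not ${ip}" >&2; rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_rendered_units "${MASTER_IPS[@]}"`, ideally before running the `scp` loop.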

2. Start and Verify

2.1 Start the kube-controller-manager service

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"
  ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"
done
```

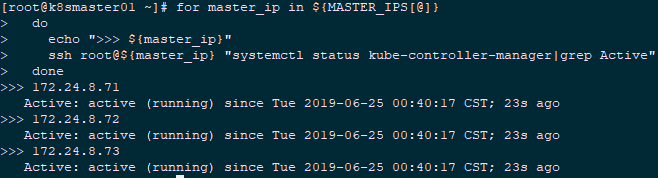

2.2 Check the kube-controller-manager service

```shell
[root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "systemctl status kube-controller-manager | grep Active"
done
```
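`systemctl is-active` prints a single state word, which is easier to check in a script than the `Active:` line of `systemctl status`. All three instances should report `active`: the standby instances are running and only waiting in the leader election. A sketch that reports every master and fails if any instance is not `active`; `check_active` and the `SSH_CMD` override (handy for testing with a stub) are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask each master whether kube-controller-manager is active.
# SSH_CMD may be overridden (for example with a stub function); defaults to ssh.
check_active() {
  local ip state rc=0
  for ip in "$@"; do
    # `systemctl is-active` prints one word such as "active" or "failed" and
    # exits non-zero when the unit is not active; "|| true" keeps the output.
    state=$("${SSH_CMD:-ssh}" "root@${ip}" systemctl is-active kube-controller-manager) || true
    echo ">>> ${ip}: ${state}"
    [[ "$state" == "active" ]] || rc=1
  done
  return "$rc"
}
```

Usage, after sourcing `environment.sh`: `check_active "${MASTER_IPS[@]}"`.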

2.3 View the exported metrics

```shell
[root@k8smaster01 ~]# curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://172.24.8.71:10252/metrics | head
```

Note: The above command is executed on the kube-controller-manager node.

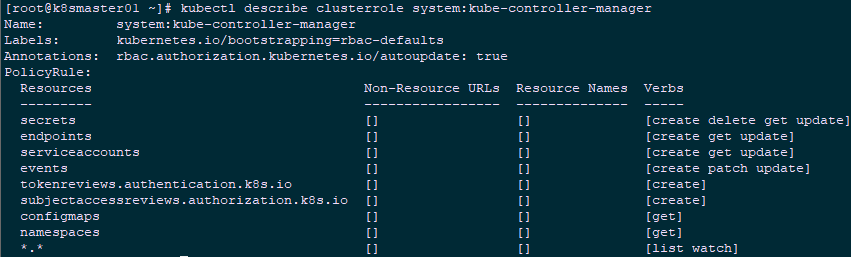
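The `/metrics` endpoint returns the Prometheus text exposition format: `# HELP` and `# TYPE` comment lines followed by `name{labels} value` samples. A small awk-based sketch for pulling the values of one metric out of that output; `metric_value` is hypothetical, it assumes samples carry no trailing timestamp, and which metric names exist depends on your kube-controller-manager version:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of every sample of metric $1 read from
# Prometheus text-format input on stdin (one value per line).
metric_value() {
  local name="$1"
  awk -v n="$name" '
    /^#/ { next }                  # skip HELP/TYPE comment lines
    {
      metric = $1
      sub(/\{.*/, "", metric)      # drop the label set, if any
      if (metric == n) print $NF   # last field is the value (no timestamp assumed)
    }'
}
```

Usage, for example with a Go runtime metric if your version exposes it: `curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://172.24.8.71:10252/metrics | metric_value go_goroutines`.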

2.4 View Permissions

```shell
[root@k8smaster01 ~]# kubectl describe clusterrole system:kube-controller-manager
```

ClusterRole system:kube-controller-manager has very limited permissions, such as creating Secrets and ServiceAccounts. The permissions needed by each individual controller are defined in the corresponding ClusterRole system:controller:XXX.

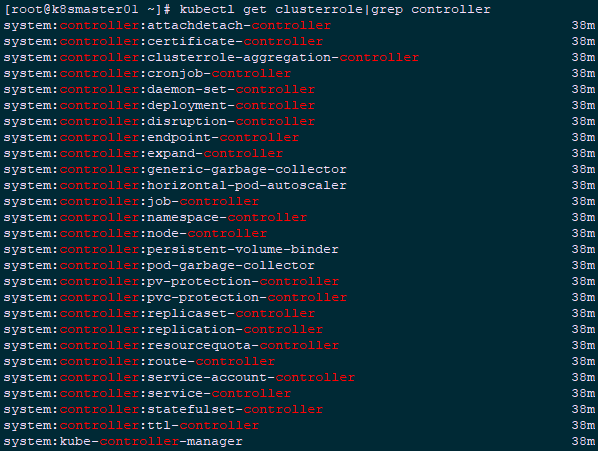

When `--use-service-account-credentials=true` is added to the kube-controller-manager startup parameters, the main controller creates a corresponding ServiceAccount XXX-controller for each controller. The built-in ClusterRoleBinding system:controller:XXX then grants the permissions of ClusterRole system:controller:XXX to each XXX-controller ServiceAccount.

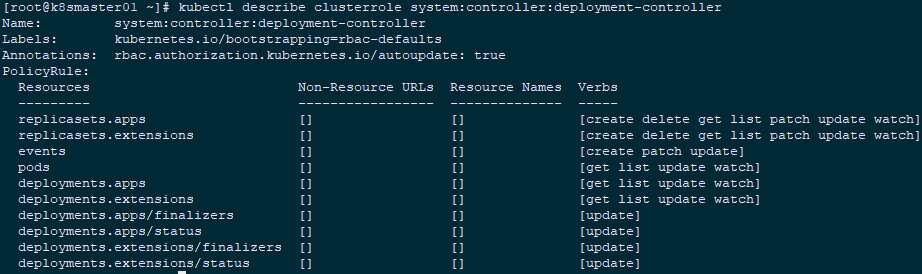
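The per-controller ServiceAccounts created this way live in the `kube-system` namespace. A sketch for listing them, assuming the `kubectl get serviceaccounts -n kube-system -o name` output format `serviceaccount/<name>`; `controller_service_accounts` is a hypothetical filter:

```shell
#!/usr/bin/env bash
# Hypothetical filter: from `kubectl get serviceaccounts -o name` output on
# stdin, print the names that end in "-controller" without the type prefix.
controller_service_accounts() {
  sed -n 's#^serviceaccount/\(.*-controller\)$#\1#p'
}
```

Usage: `kubectl get serviceaccounts -n kube-system -o name | controller_service_accounts`. Each name printed should have a matching ClusterRoleBinding system:controller:<name>.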

```shell
[root@k8smaster01 ~]# kubectl get clusterrole | grep controller
```

For example, the deployment controller:

```shell
[root@k8smaster01 ~]# kubectl describe clusterrole system:controller:deployment-controller
```

2.5 View the current leader

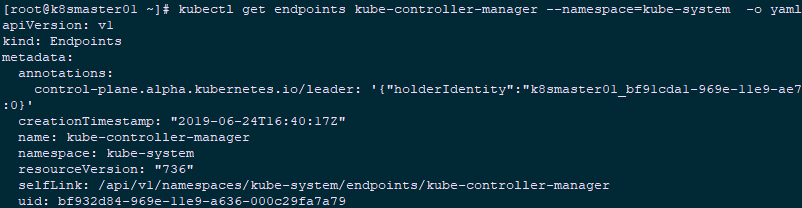

```shell
[root@k8smaster01 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
```
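In this setup the leader-election record is stored in the `control-plane.alpha.kubernetes.io/leader` annotation of that Endpoints object, as a JSON string whose `holderIdentity` field names the current leader (typically the leader's hostname plus a unique suffix). A sketch that extracts it with `sed`, assuming the record appears on a single line as in the `-o yaml` output; `leader_holder` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical filter: print the holderIdentity from a leader-election record
# found anywhere on a line of stdin (raw JSON or the YAML annotation line).
leader_holder() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}
```

Usage: `kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml | leader_holder`. Stopping kube-controller-manager on the node named there and re-running the command after the lease expires should show another master taking over. Newer Kubernetes releases keep this record in a Lease object instead, where `kubectl get lease kube-controller-manager -n kube-system -o jsonpath='{.spec.holderIdentity}'` returns the same information.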

Kubelet authentication and authorization: https://kubernetes.io/docs/admin/kubelet-authentication-authorization/#kubelet-authorization